To address the shortage of labeled data in radar-based human action recognition, a semisupervised learning method based on multidomain collaborative training is proposed herein. The method fuses action features from the slow time–range, slow time–Doppler frequency, and range–Doppler frequency domains within a decision-level ensemble framework. An interdomain consistency evaluation mechanism dynamically adjusts the contribution of each domain to the ensemble prediction. Furthermore, a stratified confidence dynamic pseudo-labeling strategy balances pseudo-label quality and utilization rate through multilevel quality assessment and dynamic threshold calibration. In addition, a feature alignment constraint mechanism uses fast principal component analysis to extract the principal components of the multidomain features; this guides the deep network toward compact feature representations and improves model discriminability. With 5% labeled data, the method achieves an average recognition accuracy of (93.6±1.6)% on a through-wall human action dataset collected with a random code radar and (91.3±1.9)% on an indoor human action dataset collected with a frequency modulated continuous-wave (FMCW) radar. It outperforms supervised learning methods such as Bi-LSTM, LH-ViT, and MFAFN, as well as semisupervised learning methods such as FixMatch, C-TGAN, MF-Match, and LW-HGR. Experimental results show stable performance across two radar systems (random code and FMCW radars) and two detection scenarios (through-wall and indoor), validating the method's cross-system and cross-scenario adaptability.
Finally, the resulting model has 1.30M parameters, requires 26.16M floating-point operations, and occupies 5.01 MB, demonstrating high computational efficiency.
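
The two mechanisms described above, domain weighting by interdomain consistency and tiered pseudo-labeling with a calibrated threshold, can be illustrated with a small NumPy sketch. It is not the authors' implementation. The agreement-based domain weights, the two thresholds `t_high` and `t_low`, the confidence-scaled loss weight for the middle tier, and the EMA rule in the hypothetical `calibrate_threshold` helper are all assumptions chosen for illustration, and the probability arrays are toy numbers rather than data from the paper.

```python
import numpy as np

def consistency_weights(probs):
    """probs: (n_domains, n_samples, n_classes) softmax outputs, one slice per domain.

    Assumed rule: a domain's weight is its mean argmax agreement with the other
    domains on the unlabeled batch, normalized so the weights sum to one.
    """
    preds = probs.argmax(axis=2)                       # (n_domains, n_samples)
    n_dom = preds.shape[0]
    agree = np.array([
        np.mean([np.mean(preds[d] == preds[e]) for e in range(n_dom) if e != d])
        for d in range(n_dom)
    ])
    w = agree + 1e-8                                   # avoid an all-zero weight vector
    return w / w.sum()

def stratified_pseudo_labels(probs, weights, t_high=0.95, t_low=0.70):
    """Weighted ensemble, then three tiers: 2 = high, 1 = medium, 0 = rejected."""
    ens = np.tensordot(weights, probs, axes=1)         # (n_samples, n_classes)
    conf, labels = ens.max(axis=1), ens.argmax(axis=1)
    tiers = np.where(conf >= t_high, 2, np.where(conf >= t_low, 1, 0))
    # High tier gets full loss weight, medium tier is down-weighted by its confidence.
    loss_w = np.select([tiers == 2, tiers == 1], [np.ones_like(conf), conf], default=0.0)
    return labels, tiers, loss_w, conf

def calibrate_threshold(ema_conf, conf, base_high=0.95, momentum=0.99):
    """Hypothetical dynamic calibration: an EMA of mean confidence scales t_high."""
    ema_conf = momentum * ema_conf + (1.0 - momentum) * float(conf.mean())
    return ema_conf, base_high * ema_conf

# Toy softmax outputs for the slow time-range, slow time-Doppler, and range-Doppler branches.
p_tr = np.array([[0.99, 0.01], [0.90, 0.10], [0.20, 0.80], [0.60, 0.40]])
p_td = p_tr.copy()
p_rd = np.array([[0.99, 0.01], [0.90, 0.10], [0.70, 0.30], [0.30, 0.70]])
probs = np.stack([p_tr, p_td, p_rd])
w = consistency_weights(probs)          # range-Doppler disagrees most, so it gets the smallest weight
labels, tiers, loss_w, conf = stratified_pseudo_labels(probs, w)
ema_conf, t_high_next = calibrate_threshold(1.0, conf)
```

Here the third domain disagrees with the other two on half the samples and receives a weight of 0.25 versus 0.375, and only the first sample clears the high tier.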

Formed by the cooling and solidification of flowing lava during volcanic activity, lunar lava tubes are considered promising candidates for future lunar bases because their roofs are stable and protective. However, these tubes are typically buried hundreds of meters to kilometers beneath the surface, which makes direct detection extremely difficult. Current detection methods rely mainly on radar and gravity anomaly analysis. The resolution of orbital radar is insufficient to distinguish similar subsurface structures, whereas in situ lunar penetrating radar has a small detection range and is vulnerable to near-field interference. Gravity anomaly detection also performs poorly for tubes oriented north–south or with roofs narrower than 1 km. Skylights are critical indicators for locating subsurface tubes and can be identified through optical imagery and infrared thermal anomalies. However, optical imaging is constrained by illumination conditions, which makes full three-dimensional reconstruction of skylights difficult. Infrared data are further limited by penetration depth and spatial resolution (320 m × 160 m), which hinders both the detection of subsurface thermal anomalies and the assessment of the thermophysical properties of pit-floor materials. To address these challenges, this paper explores the feasibility of detecting skylight thermal anomalies using microwave radiation. Owing to its penetration capability and sensitivity to dielectric properties, this approach can probe subsurface thermal features and effectively determine the material composition of the pit floor. However, there is a large scale disparity between the kilometer-scale resolution of current data and the comparatively small size of skylights. Enhancing the ability of passive microwave methods to detect 100-m-scale skylights therefore remains a critical open problem.
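
A back-of-the-envelope calculation shows why this scale disparity matters. The sketch assumes a simple linear mixing model with uniform weighting over a rectangular footprint (real radiometer beams have non-uniform antenna patterns), and the 100 m skylight diameter, 1 km × 1 km pixel, and 50 K local brightness-temperature contrast are hypothetical values rather than results from the paper.

```python
import math

def fill_fraction(skylight_diameter_m, pixel_x_m, pixel_y_m):
    """Fraction of a rectangular footprint covered by a circular skylight (uniform-beam assumption)."""
    return math.pi * (skylight_diameter_m / 2.0) ** 2 / (pixel_x_m * pixel_y_m)

def pixel_contrast_k(skylight_diameter_m, pixel_x_m, pixel_y_m, local_contrast_k):
    """Linear mixing: the pixel brightness-temperature shift is f * (T_skylight - T_background)."""
    return fill_fraction(skylight_diameter_m, pixel_x_m, pixel_y_m) * local_contrast_k

f = fill_fraction(100.0, 1000.0, 1000.0)              # hypothetical 1 km x 1 km pixel
dT = pixel_contrast_k(100.0, 1000.0, 1000.0, 50.0)    # hypothetical 50 K local contrast
```

Under these assumptions the skylight fills under 1% of the pixel, so a 50 K local anomaly is diluted to roughly 0.4 K at the pixel level, which illustrates why resolution enhancement is central to the problem.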

Synthetic Aperture Radar (SAR) is widely used in military and civilian applications, and intelligent target interpretation of SAR images is a crucial component of these applications. Vision-Language Models (VLMs) play an important role in SAR target interpretation. By incorporating natural language understanding, VLMs effectively address the large intraclass variability of target characteristics and the scarcity of high-quality labeled samples, advancing the field from purely visual interpretation toward semantic understanding of targets. Drawing on our team’s extensive research experience in SAR target interpretation theory, algorithms, and applications, this paper provides a comprehensive review of intelligent SAR target interpretation based on VLMs. We analyze existing challenges and tasks in depth, summarize the current state of research, and compile available open-source datasets. Furthermore, we systematically outline the evolution from task-specific VLMs to contrastive, conversational, and generative VLMs and foundation models. Finally, we discuss the latest challenges and future directions for SAR target interpretation with VLMs.

For locating moving ground radiating sources, traditional passive positioning methods such as Direction of Arrival (DOA) estimation often rely on long-term observation and filtering, which results in low positioning efficiency. Existing synthetic aperture-based positioning methods are designed primarily for stationary radiating sources, making high-precision positioning of moving sources difficult. To address this limitation, this paper proposes synthetic aperture-based fast positioning and velocity estimation methods for moving radiating sources in single- and dual-station positioning systems. The proposed methods establish an instantaneous slant range model of the radiating source and derive the mapping between the positioning parameters (position and velocity) and the imaging parameters. In the single-station scenario, the traditional second-order slant range model is extended to third order, and a third-order chirp rate is introduced to provide additional degrees of freedom, enabling simultaneous estimation of position and velocity. In the dual-station scenario, an additional observation station introduces two new imaging parameters, further improving positioning speed and accuracy. To resolve the multiple solutions inherent in the positioning equations, this paper proposes true-solution determination criteria for the single- and dual-station systems and an initialization strategy that ensures a unique solution for dual-station positioning. Furthermore, the paper analyzes how various factors affect the positioning accuracy of the single- and dual-station models, compares the performance of the two proposed passive positioning models, and verifies the effectiveness of the proposed algorithms through simulations.
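
The mapping from kinematic parameters to imaging parameters can be sketched for the constant-velocity case. The code assumes straight-line, constant-velocity motion for both receiver and source, one-way propagation (received phase taken as -2πR(t)/λ), and a hypothetical geometry. It computes the analytic Taylor coefficients of R(t) = |d + u t| up to third order, checks them against a cubic fit of the simulated range history, and maps them to a Doppler centroid, a chirp rate, and a third-order rate (defined here as the second time derivative of the instantaneous frequency). The paper's inversion and true-solution criteria are not reproduced.

```python
import numpy as np

def slant_range_coeffs(d, u):
    """Analytic Taylor coefficients of R(t) = |d + u t| about t = 0.

    d: source-minus-receiver position at t = 0 (m); u: relative velocity (m/s).
    Returns (R0, R1, R2, R3) with R(t) ~ R0 + R1 t + R2 t^2 + R3 t^3.
    """
    R0 = np.linalg.norm(d)
    r1 = d @ u / R0                      # dR/dt, from R R' = d.u
    r2 = (u @ u - r1 ** 2) / R0          # d2R/dt2, from R R'' + R'^2 = |u|^2
    r3 = -3.0 * r1 * r2 / R0             # d3R/dt3, from differentiating the line above
    return R0, r1, r2 / 2.0, r3 / 6.0

def imaging_params(R1, R2, R3, wavelength):
    """Instantaneous frequency f(t) = -(R1 + 2 R2 t + 3 R3 t^2) / lambda for one-way phase."""
    f_dc = -R1 / wavelength              # f(0): Doppler centroid
    k_a = -2.0 * R2 / wavelength         # f'(0): chirp rate
    k_3 = -6.0 * R3 / wavelength         # f''(0): third-order term
    return f_dc, k_a, k_3

# Hypothetical geometry: receiver at 5 km altitude flying along x at 150 m/s,
# ground source moving at (10, 5, 0) m/s.
p_rx, v_rx = np.array([0.0, 0.0, 5000.0]), np.array([150.0, 0.0, 0.0])
p_src, v_src = np.array([200.0, 8000.0, 0.0]), np.array([10.0, 5.0, 0.0])
d, u = p_src - p_rx, v_src - v_rx
t = np.linspace(-1.0, 1.0, 2001)
R = np.linalg.norm(d[None, :] + t[:, None] * u[None, :], axis=1)
fit = np.polyfit(t, R, 3)[::-1]          # [R0, R1, R2, R3] fitted to the simulated history
R0, R1, R2, R3 = slant_range_coeffs(d, u)
f_dc, k_a, k_3 = imaging_params(R1, R2, R3, wavelength=0.03)
```

The cubic coefficient is small but nonzero whenever the relative velocity has a component along the line of sight, which is what the third-order model exploits to separate position from velocity.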

To address the urgent need to identify birds and rotary-wing Unmanned Aerial Vehicles (UAVs), this paper proposes a vortex radar-based method for extracting target micromotion parameters. The study focused on target parameter acquisition and systematically extended target modeling and parameter extraction strategies. First, mathematical models were developed for the body motion and wing-flapping behavior of birds and for the rotor motion characteristics and body structure of rotary-wing UAVs. Next, analytical expressions for the radial and rotational Doppler frequency shifts at scattering points were derived, and micro-Doppler features were extracted from radar echo signals to enable target parameter inversion. For bird targets, the radial Doppler frequency was estimated from the spectral peak of the echo signal to obtain the flight velocity. The wing-flapping length was then estimated by combining the rotational Doppler frequency shifts of the scattering points and analyzing their variation with the Short-Time Fourier Transform (STFT). Even under low Signal-to-Noise Ratio (SNR) conditions, the estimation error of the wing-flapping length remained within 0.03 m. For rotary-wing UAV targets, an echo signal model was first constructed, and the analytical relationship between the radial and rotational components of the micro-Doppler frequency shift was derived. Using the reconstructed Doppler information and range–time domain analysis, six structural and motion parameters were retrieved: the three Euler angles, the rotor rotational speed, the rotor length, and the distance between the UAV body and the rotor. The estimation errors for all parameters were significantly lower than those of conventional approaches based on individual Doppler features, with all errors remaining within 2%.
Simulation results demonstrated that the proposed vortex radar-based parameter extraction method enables accurate multiparameter estimation for birds and rotary-wing UAVs and remains stable and reliable under low-SNR conditions, confirming its effectiveness and applicability in practical engineering scenarios.
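
The bird branch of this pipeline can be sketched in NumPy. The flight velocity comes from the global spectral peak, and the flapping amplitude comes from the maximum of an STFT micro-Doppler ridge. This is a conventional plane-wave stand-in, not the paper's vortex-radar model, and it makes several simplifying assumptions:

- The OAM rotational Doppler term is not modeled.
- The wingtip is treated as a single scatterer with radial displacement L·sin(2π f_flap t), and the flapping rate f_flap is assumed known.
- The echo is noise-free, and the helper names and all parameter values (4 kHz sampling, 3 cm wavelength, 10 m/s, 0.3 m, 2 Hz) are hypothetical.

```python
import numpy as np

def stft_mag(x, win_len, hop, nfft):
    """Magnitude STFT with a Hann window; rows are frames, columns are fftshift-ed bins."""
    win = np.hanning(win_len)
    frames = np.array([x[s:s + win_len] * win
                       for s in range(0, len(x) - win_len + 1, hop)])
    return np.abs(np.fft.fftshift(np.fft.fft(frames, n=nfft, axis=1), axes=1))

def simulate_bird(fs, lam, v, wing_len, f_flap, dur=1.0):
    """Toy echo: a body scatterer plus one wingtip scatterer (hypothetical model)."""
    t = np.arange(int(dur * fs)) / fs
    body = v * t                                          # radial displacement toward the radar (m)
    wingtip = body + wing_len * np.sin(2 * np.pi * f_flap * t)
    return (np.exp(1j * 4 * np.pi * body / lam)
            + 0.5 * np.exp(1j * 4 * np.pi * wingtip / lam))

def estimate_bird(echo, fs, lam, f_flap, win_len=128, hop=16, nfft=4096, guard_hz=100.0):
    n = len(echo)
    # Step 1: body radial velocity from the global spectral peak, v = f_d * lambda / 2.
    freqs = np.fft.fftfreq(n, 1.0 / fs)
    f_body = freqs[np.argmax(np.abs(np.fft.fft(echo)))]
    v_est = f_body * lam / 2.0
    # Step 2: remove the body Doppler, then track the micro-Doppler ridge with the STFT.
    t = np.arange(n) / fs
    base = echo * np.exp(-1j * 2 * np.pi * f_body * t)
    mag = stft_mag(base, win_len, hop, nfft)
    f_axis = np.fft.fftshift(np.fft.fftfreq(nfft, 1.0 / fs))
    keep = np.abs(f_axis) > guard_hz                      # mask the residual body line near 0 Hz
    ridge = f_axis[keep][np.argmax(mag[:, keep], axis=1)]
    # Peak micro-Doppler of a sinusoidal displacement: f_max = 2 * (2*pi*f_flap) * L / lambda.
    wing_est = lam * np.max(np.abs(ridge)) / (2.0 * 2.0 * np.pi * f_flap)
    return v_est, wing_est

fs, lam = 4000.0, 0.03
echo = simulate_bird(fs, lam, v=10.0, wing_len=0.3, f_flap=2.0)
v_est, wing_est = estimate_bird(echo, fs, lam, f_flap=2.0)
```

Because the peak micro-Doppler equals 2·(2π f_flap)·L/λ, the length estimate inherits any error in the assumed flapping rate. In practice that rate would itself be estimated, for example from the periodicity of the ridge.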

A large-scale Vision-Language Model (VLM) pre-trained on massive image-text datasets performs well on natural images. However, applying it to Synthetic Aperture Radar (SAR) images poses two major challenges: (1) the high cost of high-quality text annotation limits the construction of SAR image-text paired datasets, and (2) the considerable differences in image characteristics between SAR images and optical natural images make cross-domain knowledge transfer difficult. To address these problems, this study developed a method for transferring knowledge from a VLM to SAR images. First, using paired SAR and optical remote sensing images, a generative VLM was employed to automatically produce textual descriptions of the optical images, thereby indirectly constructing a low-cost SAR-text paired dataset. Second, a two-stage transfer strategy was designed to bridge the large domain gap between natural and SAR images, reducing the difficulty of each transfer stage. Finally, the method was validated on zero-shot scene classification, image retrieval, and object recognition of SAR images. The results demonstrated that the proposed method enables effective knowledge transfer from a large-scale VLM to the SAR image domain.
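
Zero-shot classification with a contrastive VLM typically scores one image embedding against one text-prompt embedding per class. The sketch assumes CLIP-style cosine-similarity scoring followed by a temperature-scaled softmax. The embeddings are hand-made placeholders standing in for image- and text-encoder outputs, and the class names and the prompt template in the comment are hypothetical, not taken from the paper.

```python
import numpy as np

def zero_shot_probs(image_emb, text_embs, temperature=0.01):
    """Cosine similarity between one image embedding and each class-prompt embedding,
    converted to class probabilities with a temperature-scaled softmax."""
    img = image_emb / np.linalg.norm(image_emb)
    txt = text_embs / np.linalg.norm(text_embs, axis=1, keepdims=True)
    logits = (txt @ img) / temperature
    logits = logits - logits.max()                     # numerical stability
    p = np.exp(logits)
    return p / p.sum()

# Placeholder embeddings standing in for encoder outputs of prompts such as
# "a SAR image of a {class}" (hypothetical template) and of one SAR image chip.
classes = ["ship", "airport", "farmland"]
text_embs = np.array([[1.0, 0.1, 0.0], [0.0, 1.0, 0.2], [0.1, 0.0, 1.0]])
image_emb = np.array([0.9, 0.2, 0.1])
probs = zero_shot_probs(image_emb, text_embs)
pred = classes[int(np.argmax(probs))]
```

No classifier head is trained here. New classes only require new prompts, which is what makes the approach attractive when labeled SAR samples are scarce.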

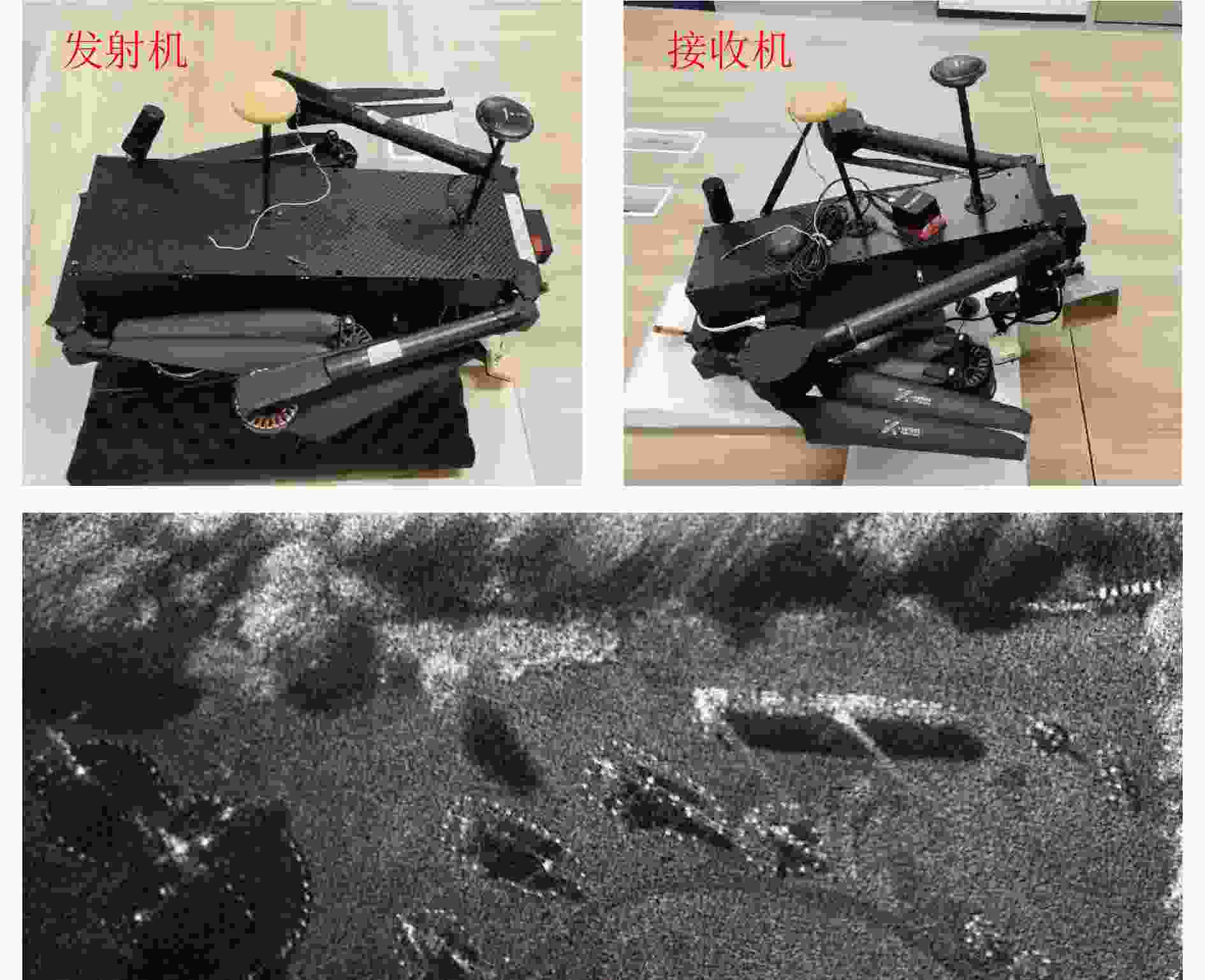

Millimeter-wave (mmWave) radar is widely used in security screening, nondestructive testing, and through-the-wall imaging because of its compact size, high resolution, and strong penetration capability. High-resolution mmWave radar imaging typically requires synthetic aperture emulation, that is, dense two-dimensional spatial sampling via structured scanning on a mechanical platform, which is time-consuming in practical applications. Many existing studies have therefore focused on reconstructing echo data from sparse samples for imaging. However, most existing sparse recovery methods assume uniformly random sparse sampling or involve high computational complexity, making them difficult to apply in practical Synthetic Aperture Radar (SAR) imaging systems. To address this problem, this paper proposes a fast mmWave three-Dimensional (3D) SAR imaging algorithm for structured sparse sampling based on low-rank and smooth Matrix Completion (MC). First, the global low-rank property and local smoothness prior of the echo data are analyzed within the framework of near-field mmWave SAR imaging theory. The analysis demonstrated that structured sparse SAR data, in which entire rows or columns are missing as occurs in practical scanning, can be recovered. On this basis, an MC model incorporating low-rank and smoothness constraints was constructed; it combines nuclear-norm and total-variation regularization and can be solved efficiently using the Alternating Direction Method of Multipliers (ADMM). Finally, the performance of the proposed algorithm was validated through multiple simulations and real-world experiments. Experimental results showed that, using only 20%–30% of randomly sampled rows or columns of the echo data, the proposed algorithm achieves fast data recovery and high-resolution 3D imaging within tens of seconds.
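
A simplified version of low-rank plus smooth completion for missing scan lines is sketched below. It makes two substitutions relative to the paper's solver:

- A quadratic first-difference smoothness penalty replaces the total-variation term.
- A plain iterate-then-project loop replaces ADMM. Each pass takes a gradient step on the smoothness term, applies singular value thresholding, and re-imposes the measured columns.

It is therefore a heuristic stand-in, not the paper's algorithm. The helper name `complete_missing_columns`, the rank-2 toy matrix, and the regular every-fourth-column sampling (about 27% of columns, used instead of random sampling for reproducibility) are hypothetical.

```python
import numpy as np

def svt(X, tau):
    """Singular value thresholding: proximal operator of tau * nuclear norm."""
    U, s, Vt = np.linalg.svd(X, full_matrices=False)
    return (U * np.maximum(s - tau, 0.0)) @ Vt

def complete_missing_columns(M_obs, col_mask, lam=0.05, mu=1.0, step=0.25, n_iter=300):
    """Fill whole missing columns using low-rank shrinkage plus quadratic smoothness.

    col_mask: boolean vector, True where the column (one scan line) was measured.
    """
    n = M_obs.shape[1]
    D = np.diff(np.eye(n), axis=0)               # (n-1, n) first-difference operator
    lap = D.T @ D                                # gradient of (1/2)||X D^T||_F^2 is X @ lap
    X = np.where(col_mask[None, :], M_obs, 0.0)
    for _ in range(n_iter):
        X = svt(X - step * mu * (X @ lap), step * lam)
        X[:, col_mask] = M_obs[:, col_mask]      # data consistency on sampled columns
    return X

rng = np.random.default_rng(0)
j = np.arange(64)
V = np.vstack([np.cos(2 * np.pi * j / 64), np.sin(2 * np.pi * j / 64)])  # smooth along columns
M = rng.standard_normal((32, 2)) @ V                                      # rank-2 toy echo matrix
col_mask = (j % 4 == 0) | (j == 63)                                       # 17 of 64 columns kept
M_obs = np.where(col_mask[None, :], M, 0.0)
X = complete_missing_columns(M_obs, col_mask)
rel_err = np.linalg.norm(X - M) / np.linalg.norm(M)
```

The nuclear norm alone cannot fill a column that was never observed, because nothing ties its entries to the measured ones. The smoothness term is what carries information across the missing columns, which is consistent with the paper's pairing of both priors.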

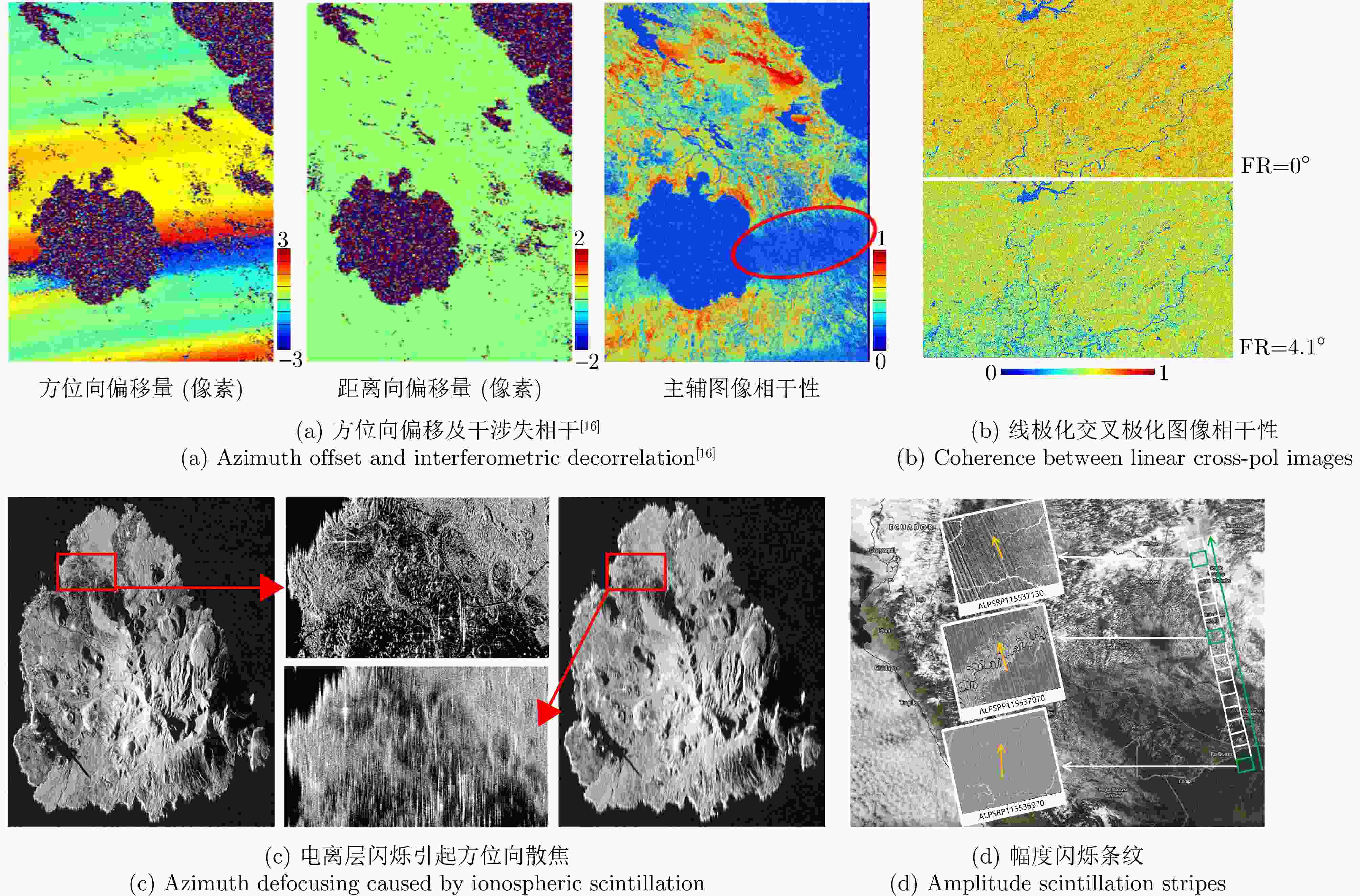

Small Unmanned Aerial Vehicle (UAV)-borne distributed tomographic synthetic aperture radar (TomoSAR) systems exhibit significant residual time-varying baseline errors because of the limited precision of the position and orientation systems on small UAV platforms. These errors severely degrade three-Dimensional (3D) target reconstruction. Compared with airborne repeat-pass 3D Synthetic Aperture Radar (SAR), distributed TomoSAR on small UAVs imposes stricter accuracy requirements on time-varying baseline error compensation because of the altitude constraints of the carrying platform. Under low signal-to-noise ratio and substantial time-varying baseline errors, existing estimation methods often fail to provide stable and reliable results. This paper proposes a two-step time-varying baseline error estimation method based on image azimuth displacement. The method first estimates the low-frequency component through co-registration of the master and slave images and then estimates the high-frequency component with a multisquint algorithm, applying iterative refinement to improve estimation accuracy. Experiments on real C-band small UAV-borne distributed TomoSAR data demonstrate that, compared with the enhanced multisquint processing method, the proposed method considerably reduces the root mean square of the differential interferometric phases in most channels, thereby improving interchannel coherence. In addition, the elevation-direction standard deviation of the reconstructed point cloud is reduced from 5.16 m to 1.33 m, and the height reconstruction error of building targets is less than 0.5 m, validating the effectiveness and superiority of the proposed method.
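
A quick sensitivity check shows why millimeter-level baseline errors matter at C-band. The sketch converts a line-of-sight path error (the projection of the baseline error onto the look direction) into interferometric phase. The 5.6 cm wavelength is an assumed representative C-band value. Whether the two-way (4π/λ) or one-way (2π/λ) factor applies depends on whether each channel transmits its own signal, which the abstract does not state.

```python
import math

def phase_error_rad(los_error_m, wavelength_m, two_way=True):
    """Interferometric phase error from an uncompensated line-of-sight path error.

    two_way=True assumes the error affects both transmit and receive paths (4*pi/lambda);
    two_way=False assumes only the receive path differs (2*pi/lambda).
    """
    factor = 4.0 if two_way else 2.0
    return factor * math.pi * los_error_m / wavelength_m

wavelength = 0.056                                   # assumed C-band wavelength (m)
for err_mm in (0.5, 1.0, 5.0):
    phi = phase_error_rad(err_mm * 1e-3, wavelength)
    print(f"{err_mm} mm -> {phi:.3f} rad ({math.degrees(phi):.1f} deg)")
```

With these assumptions, a 1 mm uncompensated error already corresponds to about 0.22 rad (roughly 13°) of phase.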

Vortex Electromagnetic (EM) wave radars use EM waves carrying orbital angular momentum to enrich target scattering information, thereby providing intrinsic in-beam azimuth resolution. This technology therefore holds significant potential for advanced target detection and imaging. However, as sensing scenarios become more complex, conventional electronic vortex EM wave radars are increasingly limited by device bandwidth. In particular, broadband signal generation and control are challenging, which makes it difficult to achieve high range and azimuth resolutions simultaneously. Microwave photonics, with its inherent advantages of wide bandwidth, low transmission loss, and robustness against electromagnetic interference, offers an effective solution to these limitations. This paper reviews recent progress in microwave photonic broadband vortex EM wave radars for forward-looking imaging. The fundamental system architectures and imaging mechanisms are explained, followed by a critical analysis of the frequency-dependent characteristics of broadband vortex waves and their implications for imaging performance. Key microwave photonic enabling technologies, including broadband phase shifting, optical beamforming, and broadband signal generation, are summarized, and their performance advantages over traditional electronic schemes are highlighted. Based on these insights, typical system implementation schemes are described, and their high-resolution forward-looking imaging capabilities are demonstrated through proof-of-concept experiments. Finally, future development trends and research directions are discussed.
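
The frequency dependence of a vortex beam can be seen from the far-field array factor of a uniform circular array (UCA) fed with the helical phase progression exp(j·l·φ_n). The sketch assumes isotropic elements, an ideal frequency-independent phase feed, and hypothetical parameters (24 elements, 5 cm radius, mode l = 2). It checks the helical phase of the beam and shows that the angle of maximum intensity shrinks as frequency increases, one of the frequency-dependent effects a broadband vortex radar must handle.

```python
import numpy as np

def uca_array_factor(l_mode, n_elem, radius_m, freq_hz, theta, phi):
    """Far-field array factor of a UCA whose element n is fed with phase exp(j*l*phi_n).

    Assumes isotropic elements and a pure phase feed that does not vary with frequency.
    theta: scalar or 1D array of elevation angles (rad); phi: scalar azimuth (rad).
    """
    c = 299_792_458.0
    k = 2 * np.pi * freq_hz / c
    phi_n = 2 * np.pi * np.arange(n_elem) / n_elem            # element azimuth positions
    feed = np.exp(1j * l_mode * phi_n)                        # helical phase progression
    theta = np.atleast_1d(theta)[:, None]
    return np.sum(feed * np.exp(1j * k * radius_m * np.sin(theta) * np.cos(phi - phi_n)), axis=1)

# Helical phase: rotating the observation azimuth by 0.3 rad shifts the phase by about l * 0.3.
af_ref = uca_array_factor(2, 24, 0.05, 10e9, 0.2, 0.0)[0]
af_rot = uca_array_factor(2, 24, 0.05, 10e9, 0.2, 0.3)[0]
helical_step = np.angle(af_rot / af_ref)

# Angle of maximum intensity at two frequencies (same array, same mode).
theta = np.linspace(1e-3, 0.6, 2000)
peaks = {f: theta[np.argmax(np.abs(uca_array_factor(2, 24, 0.05, f, theta, 0.0)))]
         for f in (10e9, 20e9)}
```

For a UCA with enough elements, the array factor is approximately proportional to J_l(k·a·sin θ)·e^{j·l·φ}, so the peak angle follows from the first maximum of the Bessel function and, for small angles, scales roughly inversely with frequency.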

The spaceborne Hybrid-Polarimetric Synthetic Aperture Radar (HP-SAR) balances the acquisition of rich polarimetric information with high-performance imaging. It offers low system complexity and reduced data acquisition costs, and has emerged as a prominent direction in multidimensional microwave remote sensing. LT-1 is China’s first radar satellite with spaceborne HP imaging capability and the world’s first satellite to implement a multi-channel HP radar system. This study uses HP imagery from the LT-1 satellite to construct and systematically describe the HP-SAR Evaluation and Analytical Dataset (HEAD-1.0), addressing the lack of high-quality open-source HP datasets. HEAD-1.0 is intended to support the quantitative assessment of HP-SAR image quality, the development of HP-SAR technology, and the design of new satellite missions, with particular emphasis on novel observational technologies for terrestrial, oceanic, and deep-space applications. It comprises three components: (1) LT-1 SAR imagery, including 30 HP-SAR images and 16 Quad-Polarimetric SAR (QP-SAR) images covering approximately 64,000 km²; (2) auxiliary data, including six optical images and Digital Elevation Model (DEM) data covering the same areas as the SAR images; and (3) annotation data, including 28 active/passive calibrators, approximately 17 km² of land cover classification, and 23 planetary analog scenes with polygonal/linear annotations. Based on HEAD-1.0, preliminary qualitative and quantitative studies were conducted, covering HP-SAR calibration, a comparison of terrain classification between HP-SAR and QP-SAR, and an analysis of the HP characteristics of planetary analog scenes. In the future, an internationally advanced polarimetric SAR benchmark database will be constructed by integrating multi-platform, multi-band, multi-angle, and multi-temporal imaging data. 
In particular, future work will focus on supporting innovative research on key technologies, including planetary surface and subsurface exploration, intelligent fusion of multisource remote sensing data, and advanced interpretation algorithms for SAR imagery.
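Hybrid-polarimetric data are commonly characterized through the Stokes vector of the backscattered field. The sketch below assumes a circular-transmit, linear-receive (CTLR) configuration with coherent H and V receive channels and adopts one common sign convention for S3. The convention actually used for LT-1 products, and any calibration applied in HEAD-1.0, are not specified here. The function names are illustrative. The code computes a multilook Stokes vector and the degree of polarization m, a quantity often used in HP decompositions.

```python
import math


def stokes_ctlr(eh: list[complex], ev: list[complex]) -> tuple[float, float, float, float]:
    """Multilook Stokes vector from the two coherent receive channels of a
    circular-transmit, linear-receive hybrid-polarimetric SAR.
    Averages over all samples in `eh`/`ev` (e.g. one boxcar window)."""
    n = len(eh)
    if n == 0 or n != len(ev):
        raise ValueError("channels must be non-empty and equally long")
    s0 = sum(abs(h) ** 2 + abs(v) ** 2 for h, v in zip(eh, ev)) / n
    s1 = sum(abs(h) ** 2 - abs(v) ** 2 for h, v in zip(eh, ev)) / n
    cross = sum(h * v.conjugate() for h, v in zip(eh, ev)) / n
    s2 = 2.0 * cross.real
    s3 = -2.0 * cross.imag  # assumed sign convention; it depends on transmit handedness
    return s0, s1, s2, s3


def degree_of_polarization(s: tuple[float, float, float, float]) -> float:
    """m = sqrt(S1^2 + S2^2 + S3^2) / S0 (1 = fully polarized, 0 = unpolarized)."""
    s0, s1, s2, s3 = s
    return math.sqrt(s1 ** 2 + s2 ** 2 + s3 ** 2) / s0 if s0 > 0 else 0.0


if __name__ == "__main__":
    # Hypothetical 3-look window of co-registered H and V samples.
    eh = [1.0 + 0.2j, 0.8 - 0.1j, 1.1 + 0.0j]
    ev = [0.1 + 0.9j, 0.0 + 1.0j, -0.1 + 0.8j]
    s = stokes_ctlr(eh, ev)
    print(s, degree_of_polarization(s))
```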

Reinforcement Learning (RL) is a critical approach for enabling cognitive radar target detection. Existing studies primarily focus on detection methods for centralized Multiple-Input Multiple-Output (MIMO) radar, which are limited to a single observation perspective. To address this issue, this paper proposes an RL-based multi-target detection method for a distributed MIMO radar system that possesses waveform and spatial diversity. The proposed method exploits spatial diversity to ensure robust target detection, while waveform diversity is used to construct a Markov decision process. Specifically, the radar first perceives target attributes through statistical signal detection techniques, then optimizes the transmit waveform accordingly, and iteratively updates its understanding of the environmental context using accumulated experience. This cyclic process gradually converges, yielding radar waveforms focused on target directions and improved detection performance. To facilitate target localization, a grid-based generalized likelihood ratio test (GLRT) detector with maximization over grid cells is derived for multi-antenna configurations, using regularly shaped grid cells as the cells under test. For waveform optimization, two types of optimization problems, namely conventional and strong-target-limited formulations, are developed and solved using successive convex approximation. Simulation results across static and dynamic scenarios demonstrate that the proposed method can autonomously perceive environmental context and achieves superior detection performance compared with benchmark methods, particularly for weak targets.
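A grid-based detector of this kind evaluates a statistic in every cell under test and keeps the maximum. The sketch below is a generic non-coherent GLRT, not the detector derived in the paper. It assumes that each of M independent transmit–receive channels delivers one matched-filter output per cell, that each channel has an unknown complex amplitude, and that the noise is circular complex Gaussian with known variance. Under these assumptions the per-cell statistic is the sum of |z|²/σ² over channels, which is Gamma(M, 1) distributed under noise only, so a per-cell threshold follows in closed form. All names are hypothetical.

```python
import math


def gamma_tail(eta: float, m: int) -> float:
    """P(X > eta) for X ~ Gamma(m, 1), i.e. a sum of m unit-mean exponentials."""
    return math.exp(-eta) * sum(eta ** k / math.factorial(k) for k in range(m))


def threshold_for_pfa(pfa: float, m: int) -> float:
    """Per-cell threshold eta with gamma_tail(eta, m) == pfa, found by bisection."""
    lo, hi = 0.0, 1.0
    while gamma_tail(hi, m) > pfa:
        hi *= 2.0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if gamma_tail(mid, m) > pfa:
            lo = mid
        else:
            hi = mid
    return hi


def grid_glrt(z: list[list[complex]], noise_var: float, eta: float):
    """z[c][m]: matched-filter output of channel m for grid cell c.
    Each channel's unknown amplitude is replaced by its ML estimate, giving
    T_c = sum_m |z[c][m]|^2 / noise_var. Returns (best_cell, T_best, detected)."""
    stats = [sum(abs(x) ** 2 for x in cell) / noise_var for cell in z]
    best = max(range(len(stats)), key=stats.__getitem__)
    return best, stats[best], stats[best] > eta


if __name__ == "__main__":
    eta = threshold_for_pfa(1e-3, m=2)  # hypothetical: 2 channels, per-cell Pfa = 1e-3
    cells = [[0.1, 0.1], [3.0, 3j], [0.2, 0.0]]
    print(grid_glrt(cells, noise_var=1.0, eta=eta))
```

Because the maximum is taken over many cells, the false-alarm probability of the whole grid exceeds the per-cell value used to set the threshold.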

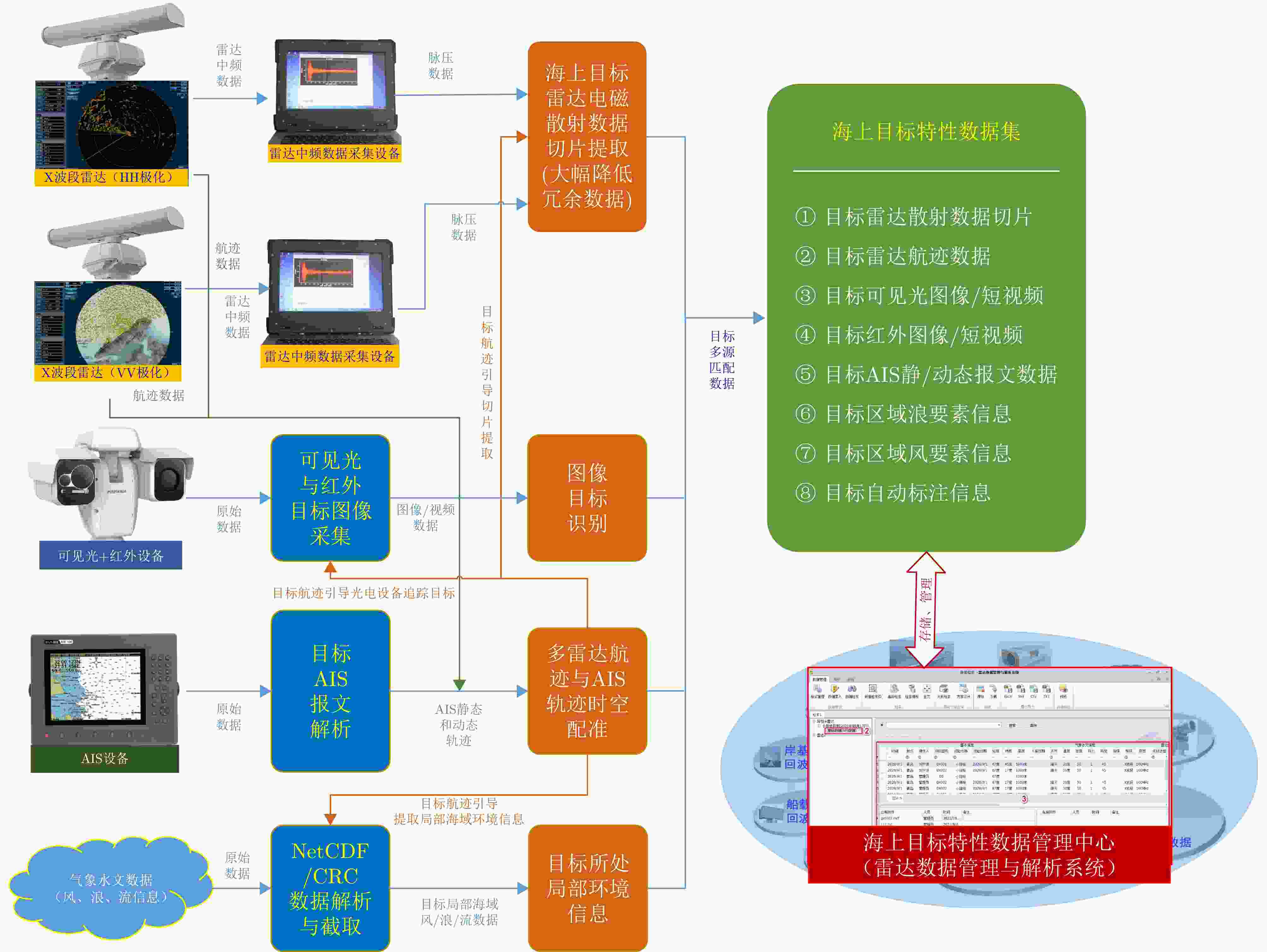

A maritime multimodal data resource system provides a foundation for multisensor collaborative detection using radar, Synthetic Aperture Radar (SAR), and electro-optical sensors, enabling fine-grained target perception. Such systems are essential for advancing the practical application of detection algorithms and improving maritime target surveillance capabilities. To this end, this study constructs a maritime multimodal data resource system using multisource data collected from the sea area near a port in the Bohai Sea. Data were acquired using SAR, radar, visible-light cameras, infrared cameras, and other sensors mounted on shore-based and airborne platforms. The data were labeled through automatic correlation-based registration followed by manual correction. Multiple task-oriented multimodal associated datasets were compiled according to the requirements of different tasks. This paper focuses on one subset of the overall resource system, namely the Dual-Modal Ship Detection (DMSD) dataset, which consists exclusively of visible-light and infrared image pairs. The dataset contains 2163 registered image pairs, with intermodal alignment achieved through an affine transformation. All images were collected in real maritime environments and cover diverse sea conditions and backgrounds, including cloud, rain, fog, and backlighting. The dataset was benchmarked with representative algorithms, including YOLO and CFT. Experimental results show that YOLOv8 and CFT achieve mAP@50 values of approximately 0.65 and 0.63, respectively, on the dataset, demonstrating its usefulness for research on optimizing bimodal fusion strategies and enhancing detection robustness in complex maritime scenarios.
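An affine transformation between the infrared and visible-light images has six parameters, so three non-collinear control-point correspondences determine it exactly. The sketch below assumes such correspondences are already available and solves the two 3×3 systems with Cramer's rule. The dataset's actual registration procedure (automatic correlation-based registration plus manual correction) is not reproduced, and in practice a least-squares fit over more control points would usually be preferred. The function names are hypothetical.

```python
def _det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def _solve3(m, rhs):
    """Solve a 3x3 linear system with Cramer's rule."""
    d = _det3(m)
    if abs(d) < 1e-12:
        raise ValueError("control points are collinear")
    sol = []
    for col in range(3):
        mc = [row[:] for row in m]
        for r in range(3):
            mc[r][col] = rhs[r]
        sol.append(_det3(mc) / d)
    return sol


def estimate_affine(src, dst):
    """2x3 affine matrix [[a, b, c], [d, e, f]] mapping three src points
    (e.g. infrared pixels) onto three dst points (e.g. visible-light pixels)."""
    m = [[float(x), float(y), 1.0] for x, y in src]
    row_x = _solve3(m, [float(p[0]) for p in dst])
    row_y = _solve3(m, [float(p[1]) for p in dst])
    return [row_x, row_y]


def apply_affine(a, pt):
    """Map one (x, y) point: x' = a x + b y + c, y' = d x + e y + f."""
    x, y = pt
    return (a[0][0] * x + a[0][1] * y + a[0][2], a[1][0] * x + a[1][1] * y + a[1][2])


if __name__ == "__main__":
    # Hypothetical control points: infrared -> visible-light pixel coordinates.
    A = estimate_affine([(0, 0), (1, 0), (0, 1)], [(10, 20), (12, 20), (10, 23)])
    print(A, apply_affine(A, (0.5, 0.5)))
```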

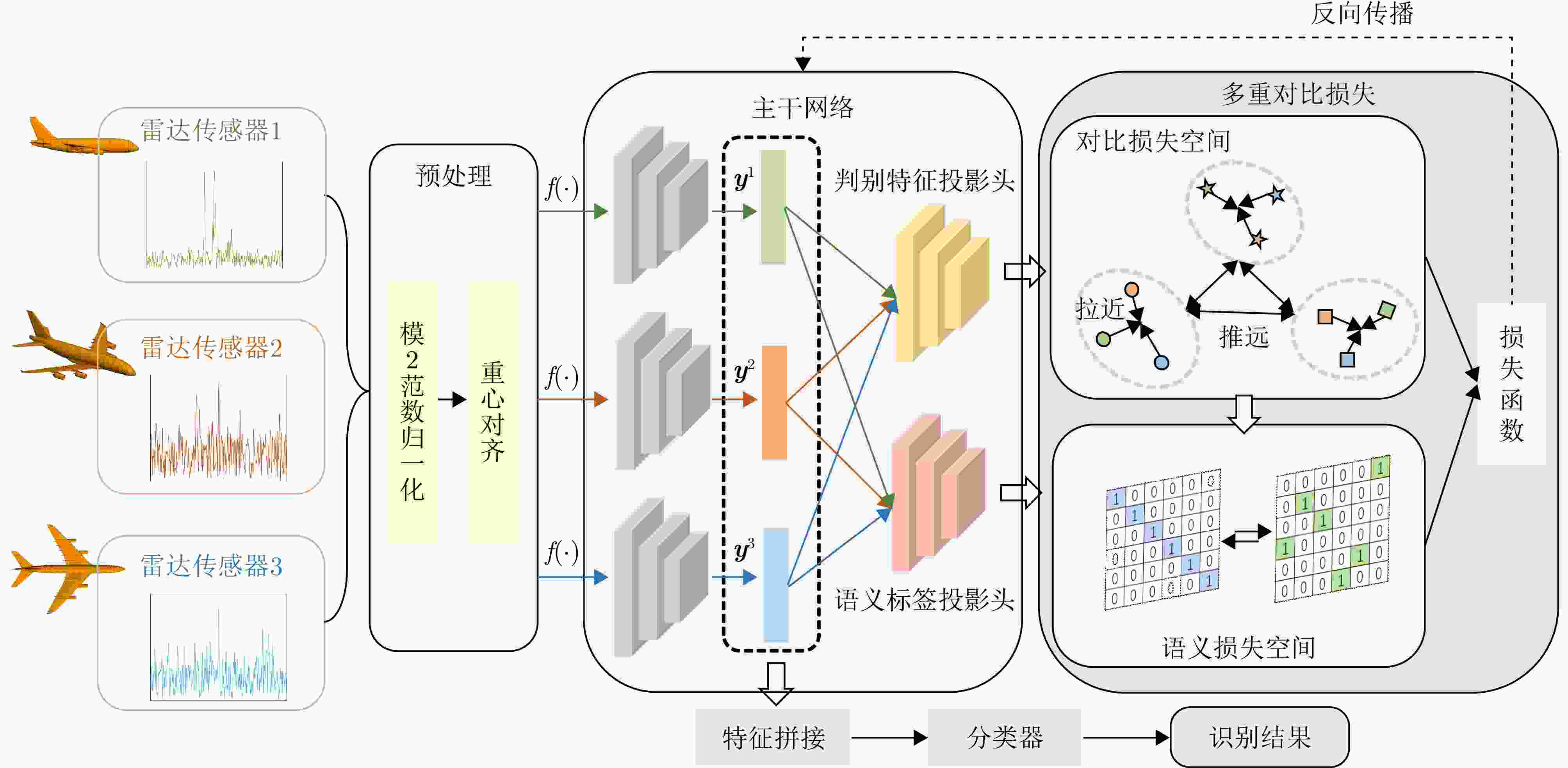

Research on target recognition using radar High-Resolution Range Profiles (HRRPs) is extensive and methodologically diverse. In particular, the application of deep learning to radar HRRP target recognition has enabled efficient, precise target perception directly from radar echoes. However, deep learning-based recognition networks rely on large amounts of training data. For non-cooperative targets, limited radar system parameters and rapid target attitude variations make it difficult in practice to acquire, in advance, enough HRRP training samples to cover all target attitudes. Consequently, deep recognition networks are prone to overfitting and exhibit considerably degraded generalization. To address these issues, and given that full-attitude electromagnetic simulation data of the target are easy to obtain, this paper uses simulated data as auxiliary information to mitigate the small-sample-size problem through data augmentation and cross-domain knowledge transfer learning. For data augmentation, the differences in mean and variance between simulated and measured HRRPs within a given attitude-angle range are analyzed, and a linear transformation is applied to a set of simulated HRRPs spanning the same angular range as a small set of measured HRRPs. This adjustment matches the mean and variance of the simulated data to those of the measured HRRPs, yielding augmented data that approximate the true distributional properties of HRRPs. For cross-domain knowledge transfer learning, the proposed method introduces a domain alignment strategy based on generative adversarial constraints and a class alignment strategy based on contrastive learning constraints. 
These strategies pull the full-attitude simulated-domain features, which are highly discriminative and generalizable, toward the measured-domain features class by class, further aiding learning from the measured data and substantially improving few-shot recognition performance. Experiments on electromagnetically simulated and measured HRRP data of three aircraft types and ten ground vehicle types demonstrate that the proposed method achieves more robust recognition than existing few-shot recognition methods.
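The mean-and-variance matching step amounts to standardizing the simulated HRRPs and rescaling them with the measured statistics, x' = (x − μ_sim)/σ_sim · σ_meas + μ_meas. The sketch below assumes the statistics are computed per range cell across all profiles in the attitude sector and that the simulated and measured profiles are already aligned and of equal length. The paper may compute its statistics differently, and `match_moments` is a hypothetical name.

```python
import math


def match_moments(simulated: list[list[float]], measured: list[list[float]]) -> list[list[float]]:
    """Linearly rescale each range cell of the simulated HRRPs so that its mean and
    standard deviation equal those of the measured HRRPs in the same cell:
        x' = (x - mu_sim) / sigma_sim * sigma_meas + mu_meas
    Assumption: both inputs hold equal-length, range-aligned profiles from the same
    attitude-angle sector; statistics are per range cell (population variance)."""
    n_cells = len(measured[0])

    def cell_stats(profiles, k):
        vals = [p[k] for p in profiles]
        mu = sum(vals) / len(vals)
        var = sum((v - mu) ** 2 for v in vals) / len(vals)
        return mu, math.sqrt(var)

    sim_stats = [cell_stats(simulated, k) for k in range(n_cells)]
    meas_stats = [cell_stats(measured, k) for k in range(n_cells)]
    out = []
    for p in simulated:
        row = []
        for k, x in enumerate(p):
            (mu_s, sd_s), (mu_m, sd_m) = sim_stats[k], meas_stats[k]
            scale = sd_m / sd_s if sd_s > 0 else 0.0  # constant simulated cell -> measured mean
            row.append((x - mu_s) * scale + mu_m)
        out.append(row)
    return out


if __name__ == "__main__":
    sim = [[1.0, 4.0, 2.0], [3.0, 0.0, 2.5], [2.0, 2.0, 7.0]]  # hypothetical simulated HRRPs
    meas = [[0.5, 1.0, 1.0], [1.5, 3.0, 2.0]]                   # hypothetical measured HRRPs
    for row in match_moments(sim, meas):
        print([round(v, 3) for v in row])
```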

The Moon’s shallow subsurface structure provides crucial insights into its geological evolution, material composition, and space weathering processes. With the acquisition of extensive radar datasets from recent lunar exploration programs such as the Chang’E missions, high-resolution characterization of the stratigraphic and physical properties of the lunar regolith has become both a focus and a challenge in lunar science. Conventional radar layer identification and tracking methods are often unstable in complex scattering environments because they are sensitive to noise and subsurface heterogeneity. To address these limitations, this study proposes an automatic layer-tracking algorithm based on a Dynamic Search Center (DSC) approach. The algorithm employs a Gaussian-weighted prediction mechanism to balance historical trajectory trends against local signal responses and uses a multi-feature fusion decision scheme to enhance tracking robustness under noisy conditions. Numerical simulations demonstrate that, with a search radius l = 20 and a historical window n = 20, the algorithm achieves a layer identification error of less than 2% for shallow strata (<140 ns). For deep layers (>170 ns), where signal attenuation is considerable, incorporating an edge-direction weighting term reduces the tracking error by over 30%. When applied to lunar penetrating radar data from the Chang’E-4 mission, the proposed method automatically traces strata in the lunar radar profiles, producing layer boundaries that are highly consistent with previous interpretations. Simulation and in-situ results confirm that the DSC-based algorithm accurately delineates real subsurface interfaces across different media and structural morphologies, effectively suppressing noise while maintaining smooth trajectories. 
Overall, the proposed method requires little manual intervention and achieves high robustness and precision in automatic radar layer tracking, providing a valuable reference for analyzing radar data from upcoming missions such as Chang’E-7 and from Martian shallow-subsurface exploration.
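The core idea of a dynamic search center can be sketched as a trend-following picker. In this hypothetical version, the next center is the last pick plus a Gaussian-weighted average of recent slopes, with newer slopes weighted more heavily. Candidates within ±l samples of the center are scored by their amplitude times a Gaussian penalty on their distance from the prediction. If a trace has no response, the tracker simply coasts along the trend. The weighting functions, `sigma`, and the coasting rule are assumptions for illustration, and the multi-feature fusion and edge-direction terms described above are omitted. The defaults l = 20 and n = 20 mirror the values quoted in the text, assumed here to be in samples and traces.

```python
import math


def track_layer(radargram, start_idx, search_radius=20, history=20, sigma=5.0):
    """Follow one reflector across traces (hypothetical DSC-style picker).
    radargram[t][k]: amplitude of trace t at time sample k.
    Returns the picked sample index for every trace."""
    picks = [start_idx]
    for trace in radargram[1:]:
        recent = picks[-(history + 1):]
        slopes = [b - a for a, b in zip(recent, recent[1:])]
        if slopes:
            # Gaussian weights over the slope history; the newest slope gets weight 1.
            spread = max(history / 2.0, 1.0)
            weights = [math.exp(-((len(slopes) - 1 - i) ** 2) / (2 * spread ** 2))
                       for i in range(len(slopes))]
            trend = sum(w * s for w, s in zip(weights, slopes)) / sum(weights)
        else:
            trend = 0.0
        # Dynamic search center, clamped to the trace length.
        center = min(max(picks[-1] + trend, 0.0), len(trace) - 1.0)
        lo = max(0, int(round(center)) - search_radius)
        hi = min(len(trace) - 1, int(round(center)) + search_radius)

        def score(k):
            # Local response weighted by a Gaussian centred on the predicted position.
            return abs(trace[k]) * math.exp(-((k - center) ** 2) / (2 * sigma ** 2))

        best = max(range(lo, hi + 1), key=score)
        if score(best) == 0.0:
            best = int(round(center))  # no response in the window: coast on the trend
        picks.append(best)
    return picks


if __name__ == "__main__":
    # Hypothetical dipping reflector plus a strong event outside the search window.
    rg = []
    for t in range(10):
        tr = [0.0] * 100
        tr[30 + t] = 1.0
        tr[80] = 5.0
        rg.append(tr)
    print(track_layer(rg, 30))
```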

Ultra-Wideband (UWB) Multiple-Input Multiple-Output (MIMO) radar has demonstrated enormous potential for intelligent human perception owing to its high range resolution, strong penetration capability, strong privacy protection, and insensitivity to illumination conditions. However, the low resolution of its images results in blurred human contours and actions that are difficult to distinguish. To address this issue, this study develops a joint framework, the Spatiotemporal Wavelet Transformer network (STWTnet), for human contour restoration and action recognition by integrating spatiotemporal features. Adopting a multi-task network architecture, the framework uses Res2Net and wavelet downsampling to extract spatial detail features from radar images and a Transformer to model spatiotemporal dependencies. Through multi-task learning, it shares the features common to human contour restoration and action recognition, allowing the two tasks to complement each other while avoiding feature conflicts. Experiments on a self-built dataset of synchronized UWB radar and optical data demonstrate that STWTnet achieves high action recognition accuracy and significantly outperforms existing techniques in contour restoration precision, providing a new approach for privacy-preserving, all-weather human behavior understanding.
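Wavelet downsampling halves the spatial resolution without discarding information. One 2-D Haar level turns each 2×2 block into four subband coefficients, and a network can then stack these subbands as channels. The sketch below shows an orthonormal Haar level and its exact inverse on plain nested lists. It is a generic illustration, not STWTnet's layer. The LH/HL naming convention varies between sources, and the function names are hypothetical.

```python
def haar_downsample(img):
    """One orthonormal 2-D Haar level. Each 2x2 block (a b / c d) yields
    LL=(a+b+c+d)/2, LH=(a+b-c-d)/2, HL=(a-b+c-d)/2, HH=(a-b-c+d)/2,
    i.e. four half-resolution subbands that together keep all information."""
    rows, cols = len(img), len(img[0])
    if rows % 2 or cols % 2:
        raise ValueError("image dimensions must be even")
    ll, lh, hl, hh = ([[0.0] * (cols // 2) for _ in range(rows // 2)] for _ in range(4))
    for i in range(0, rows, 2):
        for j in range(0, cols, 2):
            a, b = img[i][j], img[i][j + 1]
            c, d = img[i + 1][j], img[i + 1][j + 1]
            r, s = i // 2, j // 2
            ll[r][s] = (a + b + c + d) / 2
            lh[r][s] = (a + b - c - d) / 2
            hl[r][s] = (a - b + c - d) / 2
            hh[r][s] = (a - b - c + d) / 2
    return ll, lh, hl, hh


def haar_upsample(ll, lh, hl, hh):
    """Exact inverse of haar_downsample."""
    rows, cols = 2 * len(ll), 2 * len(ll[0])
    img = [[0.0] * cols for _ in range(rows)]
    for r in range(len(ll)):
        for s in range(len(ll[0])):
            w, x, y, z = ll[r][s], lh[r][s], hl[r][s], hh[r][s]
            img[2 * r][2 * s] = (w + x + y + z) / 2
            img[2 * r][2 * s + 1] = (w + x - y - z) / 2
            img[2 * r + 1][2 * s] = (w - x + y - z) / 2
            img[2 * r + 1][2 * s + 1] = (w - x - y + z) / 2
    return img


if __name__ == "__main__":
    frame = [[float(4 * r + c) for c in range(4)] for r in range(4)]  # hypothetical radar image
    bands = haar_downsample(frame)
    print(bands[0])                           # LL: coarse approximation
    print(haar_upsample(*bands) == frame)     # lossless round trip
```

Unlike strided convolution or max pooling, this downsampling is invertible, which is one reason wavelet downsampling is attractive when fine contour detail must survive.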

Synthetic Aperture Radar (SAR), as an active microwave remote sensing system, offers all-weather, all-day observation capabilities and has considerable application value in disaster monitoring, urban management, and military reconnaissance. Although deep learning techniques have achieved remarkable progress in interpreting SAR images, existing methods for target recognition and detection primarily focus on local feature extraction and single-target discrimination. They struggle to comprehensively characterize the global semantic structure and multitarget relationships in complex scenes, and the interpretation process remains highly dependent on human expertise with limited automation. SAR image captioning aims to translate visual information into natural language, serving as a key technology to bridge the gap between “perceiving targets” and “cognizing scenes,” which is of great importance for enhancing the automation and intelligence of SAR image interpretation. However, the inherent speckle noise, the scarcity of textural details, and the substantial semantic gap in SAR images further exacerbate the difficulty of cross-modal understanding. To address these challenges, this paper proposes a spatial-frequency aware model for SAR image captioning. First, a spatial-frequency aware module is constructed. It employs a Discrete Cosine Transform (DCT) mask attention mechanism to reweight spectral components for noise suppression and structure enhancement, combined with a Gabor multiscale texture enhancement submodule to improve sensitivity to directional and edge details. Second, a cross-modal semantic enhancement loss function is designed to bridge the semantic gap between visual features and natural language through bidirectional image-text alignment and mutual information maximization. Furthermore, a large-scale fine-grained SAR image captioning dataset, FSAR-Cap, containing 72400 high-quality image-text pairs, is constructed. 
The experimental results demonstrate that the proposed method achieves CIDEr scores of 151.00 and 95.14 on the SARLANG and FSAR-Cap datasets, respectively. Qualitatively, the model effectively suppresses hallucinations and accurately captures fine-grained spatial-textural details, considerably outperforming mainstream methods.
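A DCT mask reweights spectral components by transforming a patch to the DCT domain, scaling each coefficient, and transforming back. The sketch below uses a fixed, hand-set mask on a tiny patch, whereas the paper's module learns its weights through attention. The direct O(N⁴) transform, the function names, and the mask values are illustrative assumptions. The sketch shows that an all-ones mask reconstructs the patch exactly and that keeping only the DC term leaves the patch mean, the extreme case of suppressing high-frequency content such as speckle.

```python
import math


def _alpha(k, n):
    """Orthonormal DCT scale factor."""
    return math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)


def dct2(block):
    """Orthonormal 2-D DCT-II of an N x N block (direct sum; fine for small patches)."""
    n = len(block)
    return [[_alpha(u, n) * _alpha(v, n) * sum(
                block[x][y]
                * math.cos(math.pi * (2 * x + 1) * u / (2 * n))
                * math.cos(math.pi * (2 * y + 1) * v / (2 * n))
                for x in range(n) for y in range(n))
             for v in range(n)] for u in range(n)]


def idct2(coeffs):
    """Inverse of dct2 (orthonormal 2-D DCT-III)."""
    n = len(coeffs)
    return [[sum(_alpha(u, n) * _alpha(v, n) * coeffs[u][v]
                 * math.cos(math.pi * (2 * x + 1) * u / (2 * n))
                 * math.cos(math.pi * (2 * y + 1) * v / (2 * n))
                 for u in range(n) for v in range(n))
             for y in range(n)] for x in range(n)]


def dct_mask_filter(block, mask):
    """Scale each DCT coefficient by mask[u][v] (e.g. in [0, 1]) and return to the image domain.
    Hypothetical fixed mask; a learned attention map would replace it in a real model."""
    c = dct2(block)
    n = len(block)
    return idct2([[c[u][v] * mask[u][v] for v in range(n)] for u in range(n)])


if __name__ == "__main__":
    patch = [[1.0, 2.0], [3.0, 4.0]]                  # hypothetical SAR patch
    print(dct_mask_filter(patch, [[1, 1], [1, 1]]))   # identity mask
    print(dct_mask_filter(patch, [[1, 0], [0, 0]]))   # DC only -> patch mean everywhere
```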

The automatic target recognition performance of radar is critically dependent on the quality of features extracted from target echo signals. As the information carrier that actively shapes echo signals, the transmitted waveform substantially affects the target classification performance. However, conventional waveform design is often decoupled from classifier optimization, thereby ignoring the critical synergy between the two. This disconnect, combined with the lack of a direct link between waveform optimization criteria and task-specific classification metrics, limits the target classification performance. Most existing approaches are confined to monostatic radar models. Further, they fail to establish relationships between the target’s aspect angle, the transmitted waveform, and classification performance, and lack a cooperative waveform design mechanism among nodes. Hence, they are unable to achieve spatial and waveform diversity gains. To overcome these limitations, this paper proposes an end-to-end “waveform aspect matching” optimization framework for target classification in distributed radar systems. This framework parameterizes the waveform as a trainable waveform generation module, cascaded with a downstream classification network. This transforms the isolated waveform design problem into a joint optimization of the waveform and classifier, directly guided by the classification task. Leveraging prior target information, the model is trained to jointly optimize and produce aspect-matched waveforms along with the corresponding classification network. Furthermore, to enhance the classification performance in distributed radar systems, a dual-branch network based on noncausal state-space duality modules is proposed to extract and fuse multiview information. Experimental results demonstrate that the proposed method can synergistically utilize waveform and spatial diversity to improve the target classification performance. 
The method also remains robust to node failures, offering a novel solution for intelligent waveform design in distributed radar systems.
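Treating the waveform as a trainable module means writing the transmit signal as a differentiable function of free parameters, so that a classification loss on the echo features can update it. The sketch below shows only such a forward model, under stated assumptions. It uses a constant-modulus, unit-energy phase code whose phases are the trainable parameters, a noise-free echo formed by convolving the code with a hypothetical aspect-dependent complex range profile, and echo magnitudes as classifier input. The paper's waveform generation module, target model, and dual-branch classifier are not reproduced. End-to-end training would express the same steps in an automatic-differentiation framework.

```python
import cmath
import math


def waveform_from_phases(phases):
    """Constant-modulus, unit-energy phase-coded pulse s_n = exp(j*theta_n)/sqrt(N).
    The phases theta_n are the (hypothetical) trainable parameters; any values yield
    a waveform that satisfies the energy and constant-modulus constraints."""
    n = len(phases)
    return [cmath.exp(1j * th) / math.sqrt(n) for th in phases]


def echo(waveform, range_profile):
    """Noise-free echo: linear convolution of the pulse with a complex range profile
    (the target's scattering centres seen from one aspect angle)."""
    out = [0j] * (len(waveform) + len(range_profile) - 1)
    for i, s in enumerate(waveform):
        for k, h in enumerate(range_profile):
            out[i + k] += s * h
    return out


def features(waveform, range_profile):
    """Echo magnitudes, i.e. what a downstream classifier would consume."""
    return [abs(x) for x in echo(waveform, range_profile)]


if __name__ == "__main__":
    theta = [0.0, math.pi / 2, math.pi, 0.0]  # hypothetical initial phases for one node
    s = waveform_from_phases(theta)
    aspect_a = [1.0, 0.0, 0.5j]               # hypothetical range profiles at two aspects
    aspect_b = [0.2, 0.9, 0.0]
    print([round(v, 3) for v in features(s, aspect_a)])
    print([round(v, 3) for v in features(s, aspect_b)])
```

In a distributed system each node would hold its own phase vector, so that the waveforms of different nodes can be matched to the target aspects they observe.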

With the widespread use of millimeter-wave radar technology in indoor target detection and tracking, multipath effects have become a key factor affecting tracking accuracy. Indoor millimeter-wave radar target tracking is highly susceptible to multipath interference, and conventional point-target tracking methods, which ignore the extended characteristics of targets and the multipath propagation mechanism, struggle to effectively suppress ghost targets caused by multipath reflections. To address this issue, this paper proposes an extension mapping-based extended target tracking (EM-ETT) method for indoor target tracking using millimeter-wave radar. First, a random matrix model is used to characterize the target’s geometric shape, with the extension modeled as an inverse Wishart distribution. Next, an extended projection framework is constructed by integrating a Monte Carlo-based statistical propagation mechanism. Through nonlinear multipath mapping of scattering points from the true target, ghost point clouds are generated, and their extended state priors are estimated. Furthermore, a target–path association method is introduced to establish path associations in multipath propagation based on geometric consistency and likelihood evaluation, enhancing state discrimination capability. Experimental results demonstrate that in multitarget scenarios with multipath interference, the proposed method significantly improves state estimation accuracy and effectively prevents the generation of false trajectories. Compared with conventional point-target tracking algorithms, the proposed method exhibits significant advantages in both tracking accuracy and robustness.
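Monte Carlo propagation of an extended target through a multipath mapping can be sketched in a few lines. The example assumes that the random-matrix extent is a known 2×2 covariance, which could be, for instance, a point estimate derived from the inverse-Wishart posterior. Scattering points are sampled from a Gaussian with that covariance and mapped through the simplest multipath model: the mirror image across a flat wall y = y_w, which corresponds to a radar→wall→target→wall→radar path. The ghost's mean and covariance are then estimated from the mapped samples. This mirror map is affine, whereas the paper describes a nonlinear mapping. Sampling is used here because it carries over unchanged to nonlinear maps. All names and values are hypothetical.

```python
import math
import random


def sample_extended_target(center, extent, n, rng):
    """Draw n scattering points from N(center, extent), where `extent` is a 2x2 SPD matrix,
    using its Cholesky factor."""
    (a, b), (_, d) = extent
    l11 = math.sqrt(a)
    l21 = b / l11
    l22 = math.sqrt(d - l21 ** 2)
    pts = []
    for _ in range(n):
        z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        pts.append((center[0] + l11 * z1, center[1] + l21 * z1 + l22 * z2))
    return pts


def mirror_across_wall(pt, wall_y):
    """Ghost position for a radar->wall->target->wall->radar path and a flat wall y = wall_y."""
    return (pt[0], 2.0 * wall_y - pt[1])


def ghost_prior(center, extent, wall_y, n=20000, seed=0):
    """Monte Carlo estimate of the ghost's mean and covariance (its extended-state prior)."""
    rng = random.Random(seed)
    ghosts = [mirror_across_wall(p, wall_y) for p in sample_extended_target(center, extent, n, rng)]
    mx = sum(p[0] for p in ghosts) / n
    my = sum(p[1] for p in ghosts) / n
    cxx = sum((p[0] - mx) ** 2 for p in ghosts) / (n - 1)
    cyy = sum((p[1] - my) ** 2 for p in ghosts) / (n - 1)
    cxy = sum((p[0] - mx) * (p[1] - my) for p in ghosts) / (n - 1)
    return (mx, my), ((cxx, cxy), (cxy, cyy))


if __name__ == "__main__":
    # Hypothetical person-sized target at (1, 2) m and a wall at y = 5 m.
    print(ghost_prior((1.0, 2.0), ((0.25, 0.1), (0.1, 0.16)), wall_y=5.0))
```

For the mirror map, the estimate should approach a mean of (x, 2y_w − y) and the same extent with the off-diagonal sign flipped, which gives a quick sanity check before a nonlinear mapping is substituted.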

To address insufficient feature extraction, limited spatiotemporal correlation modeling, and poor classification performance in radar classification of low, slow, and small (LSS) targets, this paper investigates graph network-based feature extraction and classification methods. First, using a digital array ubiquitous radar, an LSS target detection dataset named LSS-DAUR-1.0 is constructed; it contains Doppler and track data for six target types: passenger ships, speedboats, helicopters, rotor drones, birds, and fixed-wing drones. Second, the multidomain and multidimensional characteristics of the targets are analyzed on this dataset, and the complementarity between Doppler and physical motion features is verified through correlation and cosine similarity analyses. On this basis, a dual-feature fusion classification method, the Graph Convolutional Network with Dynamic Graph Construction (DG-GCN), is proposed. An adaptive window adjustment, a hybrid attenuation function, and a dynamic threshold mechanism are designed to construct an adaptive dynamic graph from spatiotemporal correlations; combined with graph convolution–based feature learning and classification modules, this enables fine-grained classification of LSS targets. On LSS-DAUR-1.0, DG-GCN achieves 99.66% classification accuracy, which is 6.78% and 17.97% higher than that of ResNet and Transformer models, respectively, with a total processing time of only 4.98 ms, more than 80% lower than that of these comparison models. Hence, DG-GCN achieves both high accuracy and high efficiency. Tests under noisy conditions further show good robustness, and ablation experiments verify that the dynamic edge weight mechanism compensates for the lack of spatial feature correlation in purely temporal connections and improves the model’s generalizability.
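
A sketch of how an adaptive dynamic graph over time steps might be built and passed through one graph convolution layer is given below. The specific forms are assumptions, since the abstract does not define them: edge weights are the (non-negative) cosine similarity between per-step feature vectors multiplied by a hybrid attenuation that mixes exponential and power-law decay in the time lag; candidate edges within a window are kept if they exceed a data-dependent threshold (mean plus k standard deviations); adjacent time steps are always linked; and the layer uses the standard symmetric-normalized GCN propagation rule. The adaptive window adjustment is reduced to a fixed `window` parameter, and all names are hypothetical.

```python
import numpy as np


def cosine_similarity_matrix(H):
    """Pairwise cosine similarity between the rows (time steps) of H."""
    norms = np.linalg.norm(H, axis=1, keepdims=True)
    Hn = H / np.maximum(norms, 1e-12)
    return Hn @ Hn.T


def hybrid_decay(lag, tau=3.0, p=2.0, alpha=0.5):
    """Assumed hybrid attenuation: blend of exponential and power-law decay in |lag|."""
    lag = np.abs(lag)
    return alpha * np.exp(-lag / tau) + (1.0 - alpha) / (1.0 + lag) ** p


def dynamic_adjacency(H, window=5, k=0.0):
    """Symmetric adjacency over T time steps from similarity x temporal decay.

    Edges within `window` steps survive if their weight reaches the dynamic
    threshold mean + k*std of all candidate weights; neighbouring steps
    (|lag| == 1) are always kept so the temporal chain is preserved.
    """
    T = H.shape[0]
    idx = np.arange(T)
    lag = idx[:, None] - idx[None, :]
    W = np.clip(cosine_similarity_matrix(H), 0.0, None) * hybrid_decay(lag)
    in_window = (np.abs(lag) <= window) & (lag != 0)
    cand = W[in_window]
    thr = cand.mean() + k * cand.std() if cand.size else 0.0
    A = np.where(in_window & (W >= thr), W, 0.0)
    chain = np.abs(lag) == 1
    A[chain] = np.maximum(A[chain], W[chain])
    return A


def gcn_layer(A, H, Wt):
    """One graph convolution: ReLU(D^-1/2 (A + I) D^-1/2 H Wt)."""
    A_hat = A + np.eye(A.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(A_hat.sum(axis=1))
    A_norm = d_inv_sqrt[:, None] * A_hat * d_inv_sqrt[None, :]
    return np.maximum(A_norm @ H @ Wt, 0.0)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    H = rng.normal(size=(20, 6))  # 20 time steps x (Doppler + motion) features
    A = dynamic_adjacency(H, window=5, k=0.5)
    Z = gcn_layer(A, H, rng.normal(size=(6, 8)))
    print(A.shape, Z.shape)
```

Keeping the temporal chain while letting similarity-weighted edges appear or vanish per sample mirrors, in simplified form, the idea that dynamic edges add correlation that purely temporal links lack.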

Heart rate (HR) is a core physiological indicator of human health, and its accurate monitoring is of substantial clinical importance in applications such as arrhythmia screening, early warning of coronary heart disease, and chronic heart failure management. However, cardiac echo signals are susceptible to coupled disturbances, including respiratory motion artifacts and environmental electromagnetic interference, which degrade the signal-to-noise ratio and compromise HR estimation accuracy. To address these challenges, we propose a multi-channel joint HR estimation method based on multivariate variational mode decomposition (MVMD) that exploits the cardiac information shared across channels. Specifically, the method first constructs a multi-channel joint optimization model that minimizes the total modal bandwidth subject to reconstruction residual constraints. It then adaptively initializes the center frequencies by exploiting the cumulative effect of multi-channel spectral peaks, enabling robust separation of heartbeat modes whose frequencies are consistent across channels. Finally, the HR mode is selected from the decomposed modes using a maximum energy criterion to complete HR estimation. Validation on real-world data from six subjects shows that the proposed method achieves a median HR error of 1.53 bpm, outperforming conventional single-channel approaches and existing multi-channel fusion-based HR estimation methods.
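
The center-frequency initialization and mode-selection steps can be sketched as follows; the full MVMD optimization is omitted. Assumptions not stated in the abstract: per-channel magnitude spectra are normalized by their maxima before summation, the heartbeat band is 0.8–3.0 Hz (48–180 bpm), and the maximum-energy criterion is applied only to modes whose center frequencies fall in that band, since otherwise the respiration mode would usually dominate. Function names are hypothetical.

```python
import numpy as np


def init_hr_center_frequency(x, fs, band=(0.8, 3.0), nfft=None):
    """Initial heartbeat center frequency (Hz) from the accumulated spectrum.

    x: array of shape (channels, samples). Each channel's windowed magnitude
    spectrum is normalized to its own peak so no single channel dominates,
    the spectra are summed so that peaks shared across channels reinforce
    each other, and the largest accumulated peak inside `band` is returned.
    """
    x = np.asarray(x, dtype=float)
    x = x - x.mean(axis=1, keepdims=True)
    n = x.shape[1]
    nfft = nfft or 8 * n  # zero padding for a finer frequency grid
    spec = np.abs(np.fft.rfft(x * np.hanning(n), n=nfft, axis=1))
    spec /= spec.max(axis=1, keepdims=True)
    acc = spec.sum(axis=0)
    freqs = np.fft.rfftfreq(nfft, d=1.0 / fs)
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    return float(freqs[in_band][np.argmax(acc[in_band])])


def select_hr_mode(modes, center_freqs, band=(0.8, 3.0)):
    """Maximum-energy criterion restricted to modes centered in the HR band.

    modes: array of shape (K, channels, samples); returns the mode index.
    """
    energy = np.sum(np.asarray(modes, dtype=float) ** 2, axis=(1, 2))
    cf = np.asarray(center_freqs, dtype=float)
    energy = np.where((cf >= band[0]) & (cf <= band[1]), energy, -np.inf)
    return int(np.argmax(energy))


if __name__ == "__main__":
    fs = 20.0
    t = np.arange(0, 30, 1 / fs)
    rng = np.random.default_rng(1)
    # four channels: strong respiration (0.25 Hz) + weak heartbeat (1.2 Hz) + noise
    x = np.stack([np.sin(2 * np.pi * 0.25 * t + k)
                  + 0.1 * np.sin(2 * np.pi * 1.2 * t + 2 * k)
                  + 0.05 * rng.normal(size=t.size) for k in range(4)])
    print("initial HR estimate (bpm):", 60 * init_hr_center_frequency(x, fs))
```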

Sea surface elevation is crucial for characterizing individual waves, wave groups, and freak waves, offering an accurate representation of inhomogeneous sea states. This study presents a quasi-linear inversion strategy for retrieving sea surface elevation from GF-3 Synthetic Aperture Radar (SAR) images. The algorithm enables rapid inversion within 10 s per scene without the need for external data and effectively resolves range-traveling waves. Case studies conducted under three distinct sea states demonstrate its ability to extract maximum wave heights and identify wave groups. Additionally, inversion results from 2405 GF-3 wave mode SAR images following quality control are compared with ERA5 reanalysis spectra and altimeter data. The comparisons reveal that the retrieved Significant Wave Height (SWH) has a root mean square error of 0.48 m compared with ERA5 data. In low-to-moderate sea states, with significant wave heights below 3 m, the retrieved SWH shows strong consistency with ERA5 spectra and altimeter measurements. This algorithm serves as an effective tool for rapid monitoring and analysis of sea states using GF-3 SAR.
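
The quasi-linear inversion itself is not described in enough detail to reproduce, but the downstream quantities mentioned above are standard and can be sketched from a retrieved elevation transect: the significant wave height Hs = 4√m0 with m0 the elevation variance, individual crest-to-trough heights between zero up-crossings, a freak-wave flag using the common H > 2Hs criterion, and the RMSE used to compare retrievals with reference data. Function names are illustrative, and the freak-wave threshold is a conventional choice rather than one taken from the study.

```python
import numpy as np


def significant_wave_height(eta):
    """Hs = 4*sqrt(m0), where m0 is the variance of the elevation field or transect."""
    return 4.0 * float(np.sqrt(np.var(np.asarray(eta, dtype=float))))


def zero_upcrossing_heights(eta):
    """Crest-to-trough heights of individual waves between zero up-crossings (1-D)."""
    eta = np.asarray(eta, dtype=float)
    eta = eta - eta.mean()
    up = np.flatnonzero((eta[:-1] < 0) & (eta[1:] >= 0)) + 1
    return np.array([eta[a:b].max() - eta[a:b].min()
                     for a, b in zip(up[:-1], up[1:])])


def freak_wave_flags(heights, hs, ratio=2.0):
    """Conventional freak-wave criterion H > ratio * Hs (ratio = 2 by default)."""
    return np.asarray(heights) > ratio * hs


def rmse(estimate, reference):
    """Root mean square error between retrieved and reference values."""
    e = np.asarray(estimate, dtype=float) - np.asarray(reference, dtype=float)
    return float(np.sqrt(np.mean(e ** 2)))


if __name__ == "__main__":
    x = np.arange(2000)
    eta = 1.5 * np.sin(2 * np.pi * x / 100 + 0.3)  # synthetic regular-wave transect
    eta[500:600] *= 5.0                            # one abnormally high wave
    hs = significant_wave_height(eta)
    h = zero_upcrossing_heights(eta)
    print("Hs:", hs, "Hmax:", h.max(), "freak waves:", int(freak_wave_flags(h, hs).sum()))
```

For a 2-D elevation map, `significant_wave_height` can be applied to the whole field, while the zero-crossing analysis is applied along individual transects.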

Pulse Doppler radar provides all-weather operational capability and enables simultaneous acquisition of target range and velocity through Range-Doppler (RD) maps. In near-vertical flight scenarios, the geometric structure of RD maps implicitly encodes key platform motion parameters, including altitude, velocity, and pitch angle. However, these parameters are strongly coupled in the RD domain, making effective decoupling difficult for traditional signal-processing-based inversion methods, particularly under complex terrain and near-vertical incidence conditions. Although recent advances in deep learning have shown strong potential for motion information sensing, multitask learning in this context still faces challenges in achieving both real-time performance and high estimation accuracy. To address these issues, this study proposes a novel network architecture, termed Range-Doppler Map Fusion Network (RDMFNet), that performs multirepresentation information fusion via shared encoders and parallel decoders, along with a two-stage progressive training strategy to enhance parameter estimation accuracy. Experimental results show that RDMFNet achieves estimation errors of 14.447 m for altitude, 4.635 m/s for velocity, and 0.755° for pitch angle, demonstrating its effectiveness for high-precision, real-time perception.
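
The parameter coupling described above can be illustrated with a simple 2-D forward model of the ground return; the network itself is not sketched, since the abstract only outlines its architecture. Assuming flat ground, a platform at altitude h, and a velocity vector of magnitude v at angle γ relative to the horizontal (negative when descending, used here as a stand-in for pitch), a ground scatterer at horizontal offset x appears at range √(x² + h²) with Doppler 2v_r/λ, where v_r is the velocity component along the line of sight. The nadir return fixes the altitude through its minimum range, but its Doppler depends only on v·sin γ, so different (v, γ) pairs map to the same nadir cell and can only be separated using the shape of the whole curve. The function name and the 0.03 m wavelength are illustrative assumptions.

```python
import numpy as np


def ground_rd_curve(h, v, gamma_deg, wavelength, x):
    """Range and Doppler of flat-ground scatterers seen from altitude h (2-D model).

    gamma_deg: angle of the velocity vector relative to the horizontal
               (negative when descending; -90 is a vertical descent).
    x:         horizontal offsets of ground scatterers from the nadir point.
    Returns (range, doppler); Doppler is positive for closing scatterers.
    """
    x = np.asarray(x, dtype=float)
    g = np.deg2rad(gamma_deg)
    vel = v * np.array([np.cos(g), np.sin(g)])
    los = np.stack([x, np.full_like(x, -h)], axis=-1)  # platform -> scatterer
    rng = np.linalg.norm(los, axis=-1)
    v_radial = los @ vel / rng                         # closing speed along LOS
    return rng, 2.0 * v_radial / wavelength


if __name__ == "__main__":
    offsets = np.linspace(-1000.0, 1000.0, 5)
    # same v*sin(gamma): identical nadir Doppler, different off-nadir Doppler
    for v, gamma in [(100.0, -90.0), (200.0, -30.0)]:
        r, fd = ground_rd_curve(500.0, v, gamma, 0.03, offsets)
        print(v, gamma, np.round(r, 1), np.round(fd, 1))
```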

Radar systems acquire target information by transmitting waveforms, receiving echoes, and processing signals; waveform performance is therefore a critical determinant of radar system performance. Compared with other radar systems, Synthetic Aperture Radar (SAR) operates under unique conditions, including distributed target scenes, waveforms with large time-bandwidth products, wide-swath and long-range imaging, and range–azimuth coupling. These characteristics impose additional, more stringent requirements on SAR waveform design. Drawing on the authors’ research and experience in SAR waveform coding, this paper reviews recent domestic and international advances in SAR waveform design, discusses the key technical challenges, and highlights the role of waveform design in improving system imaging performance. Finally, it outlines future trends and potential directions for SAR waveform design methodologies.