| [1] |

保铮, 邢孟道, 王彤. 雷达成像技术[M]. 北京: 电子工业出版社, 2005.

BAO Zheng, XING Mengdao, and WANG Tong. Radar Imaging Technology[M]. Beijing: Publishing House of Electronics Industry, 2005.

|

| [2] |

MOREIRA A, PRATS-IRAOLA P, YOUNIS M, et al. A tutorial on synthetic aperture radar[J]. IEEE Geoscience and Remote Sensing Magazine, 2013, 1(1): 6–43. doi: 10.1109/MGRS.2013.2248301 |

| [3] |

黄培康, 殷红成, 许小剑. 雷达目标特性[M]. 北京: 电子工业出版社, 2005.

HUANG Peikang, YIN Hongcheng, and XU Xiaojian. Radar Target Characteristics[M]. Beijing: Publishing House of Electronics Industry, 2005.

|

| [4] |

孙真真. 基于光学区雷达目标二维像的目标散射特征提取的理论及方法研究[D]. [博士论文], 中国人民解放军国防科学技术大学, 2001.

SUN Zhenzhen. Target scattering characteristic extraction method for radar 2-D image in optical region[D]. [Ph. D. dissertation], National University of Defense Technology, 2001.

|

| [5] |

OLIVER C and QUEGAN S. Understanding Synthetic Aperture Radar Images[M]. Raleigh: SciTech Publishing, 2004.

|

| [6] |

EL-DARYMLI K, GILL E W, MCGUIRE P, et al. Automatic target recognition in synthetic aperture radar imagery: A state-of-the-art review[J]. IEEE Access, 2016, 4: 6014–6058. doi: 10.1109/ACCESS.2016.2611492 |

| [7] |

NOVAK L M, OWIRKA G J, BROWER W S, et al. The automatic target-recognition system in SAIP[J]. The Lincoln Laboratory Journal, 1997, 10(2): 187–202.

|

| [8] |

DIEMUNSCH J R and WISSINGER J. Moving and stationary target acquisition and recognition (MSTAR) model-based automatic target recognition: Search technology for a robust ATR[C]. Algorithms for Synthetic Aperture Radar Imagery V, Orlando, USA, 1998: 481–492.

|

| [9] |

文贡坚, 朱国强, 殷红成, 等. 基于三维电磁散射参数化模型的 SAR 目标识别方法[J]. 雷达学报, 2017, 6(2): 115–135. doi: 10.12000/JR17034

WEN Gongjian, ZHU Guoqiang, YIN Hongcheng, et al. SAR ATR based on 3D parametric electromagnetic scattering model[J]. Journal of Radars, 2017, 6(2): 115–135. doi: 10.12000/JR17034 |

| [10] |

HE Yang, HE Siyuan, ZHANG Yuehua, et al. A forward approach to establish parametric scattering center models for known complex radar targets applied to SAR ATR[J]. IEEE Transactions on Antennas and Propagation, 2014, 62(12): 6192–6205. doi: 10.1109/TAP.2014.2360700 |

| [11] |

代大海. 极化雷达成像及目标特征提取研究[D]. [博士论文], 国防科技大学, 2008.

DAI Dahai. Study on polarimetric radar imaging and target feature extraction[D]. [Ph. D. dissertation], National University of Defense Technology, 2008.

|

| [12] |

LING Hao, CHOU R C, and LEE S W. Shooting and bouncing rays: Calculating the RCS of an arbitrarily shaped cavity[J]. IEEE Transactions on Antennas and Propagation, 1989, 37(2): 194–205. doi: 10.1109/8.18706 |

| [13] |

BHALLA R, LING Hao, MOORE J, et al. 3D scattering center representation of complex targets using the shooting and bouncing ray technique: A review[J]. IEEE Antennas and Propagation Magazine, 1998, 40(5): 30–39. doi: 10.1109/74.735963 |

| [14] |

BHALLA R, MOORE J, and LING Hao. A global scattering center representation of complex targets using the shooting and bouncing ray technique[J]. IEEE Transactions on Antennas and Propagation, 1997, 45(12): 1850–1856. doi: 10.1109/8.650204 |

| [15] |

BHALLA R and LING Hao. Three-dimensional scattering center extraction using the shooting and bouncing ray technique[J]. IEEE Transactions on Antennas and Propagation, 1996, 44(11): 1445–1453. doi: 10.1109/8.542068 |

| [16] |

JACKSON J A. Three-dimensional feature models for synthetic aperture radar and experiments in feature extraction[D]. [Ph. D. dissertation], The Ohio State University, 2009.

|

| [17] |

JACKSON J A, RIGLING B D, and MOSES R L. Canonical scattering feature models for 3D and bistatic SAR[J]. IEEE Transactions on Aerospace and Electronic Systems, 2010, 46(2): 525–541. doi: 10.1109/TAES.2010.5461639 |

| [18] |

JACKSON J A, RIGLING B D, and MOSES R L. Parametric scattering models for bistatic synthetic aperture radar[C]. 2008 IEEE Radar Conference, Rome, Italy, 2008: 1–5.

|

| [19] |

HURST M and MITTRA R. Scattering center analysis via Prony's method[J]. IEEE Transactions on Antennas and Propagation, 1987, 35(8): 986–988. doi: 10.1109/TAP.1987.1144210 |

| [20] |

SACCHINI J J, STEEDLY W M, and MOSES R L. Two-dimensional Prony modeling and parameter estimation[J]. IEEE Transactions on Signal Processing, 1993, 41(11): 3127–3137. doi: 10.1109/78.257242 |

| [21] |

POTTER L C, CHIANG D M, CARRIERE R, et al. A GTD-based parametric model for radar scattering[J]. IEEE Transactions on Antennas and Propagation, 1995, 43(10): 1058–1067. doi: 10.1109/8.467641 |

| [22] |

闫华, 张磊, 陆金文, 等. 任意多次散射机理的GTD散射中心模型频率依赖因子表达[J]. 雷达学报, 2021, 10(3): 370–381. doi: 10.12000/JR21005

YAN Hua, ZHANG Lei, LU Jinwen, et al. Frequency-dependent factor expression of the GTD scattering center model for the arbitrary multiple scattering mechanism[J]. Journal of Radars, 2021, 10(3): 370–381. doi: 10.12000/JR21005 |

| [23] |

GERRY M J, POTTER L C, GUPTA I J, et al. A parametric model for synthetic aperture radar measurements[J]. IEEE Transactions on Antennas and Propagation, 1999, 47(7): 1179–1188. doi: 10.1109/8.785750 |

| [24] |

李增辉. 稀疏激励的极化逆散射理论研究[D]. [博士论文], 清华大学, 2015.

LI Zenghui. Research on polarimetric inverse scattering through enforcing sparsity[D]. [Ph. D. dissertation], Tsinghua University, 2015.

|

| [25] |

STEEDLY W M and MOSES R L. High resolution exponential modeling of fully polarized radar returns[J]. IEEE Transactions on Aerospace and Electronic Systems, 1991, 27(3): 459–469. doi: 10.1109/7.81427 |

| [26] |

DAI Dahai, WANG Xuesong, XIAO Shunping, et al. High-resolution coherent polarization GTD model and its application[J]. Chinese Journal of Radio Science, 2008, 23(1): 55–61. doi: 10.3969/j.issn.1005-0388.2008.01.009 |

| [27] |

段佳. SAR/ISAR目标电磁特征提取及应用研究[D]. [博士论文], 西安电子科技大学, 2015.

DUAN Jia. Study on electro-magnetic feature extraction of SAR/ISAR and its applications[D]. [Ph. D. dissertation], Xidian University, 2015.

|

| [28] |

STEER D G, DEWDNEY P E, and ITO M R. Enhancements to the deconvolution algorithm 'CLEAN'[J]. Astronomy and Astrophysics, 1984, 137(2): 159–165.

|

| [29] |

CLARK B G. An efficient implementation of the algorithm 'CLEAN'[J]. Astronomy and Astrophysics, 1980, 89(3): 377–378.

|

| [30] |

GERRY M J. Two-Dimensional Inverse Scattering Based on the GTD Model[M]. The Ohio State University, 1997.

|

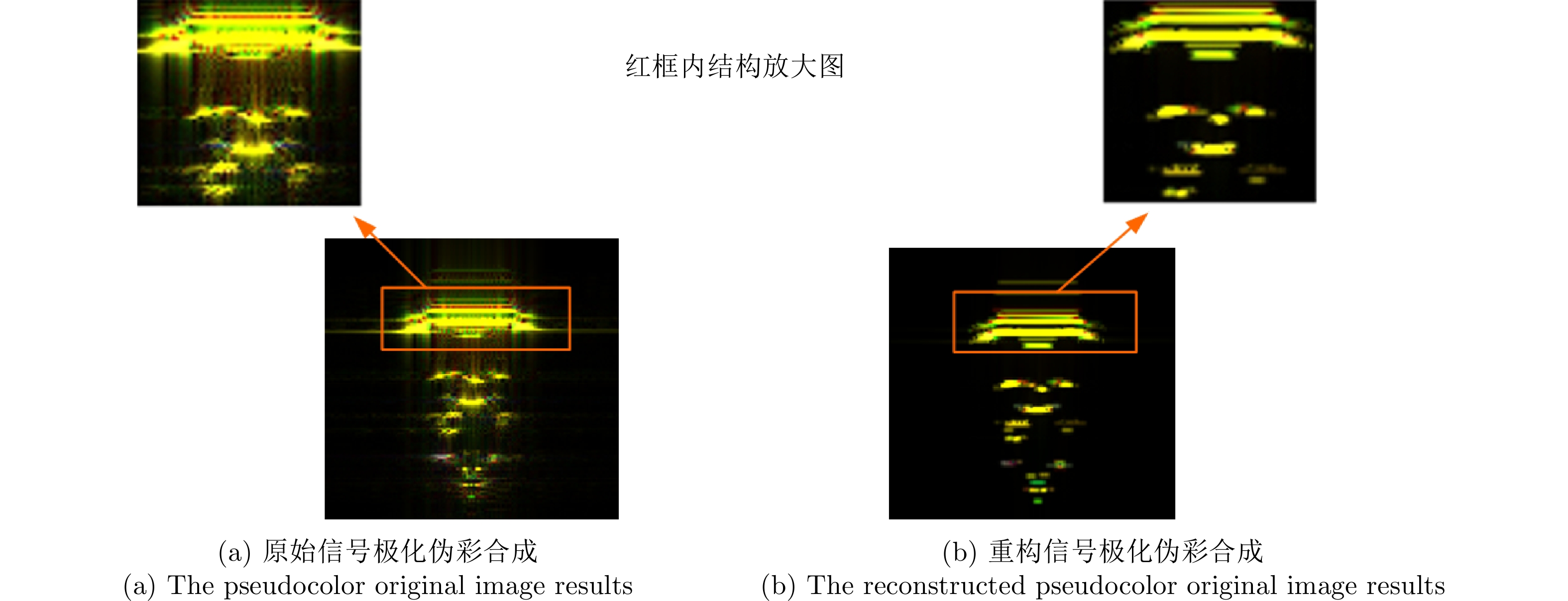

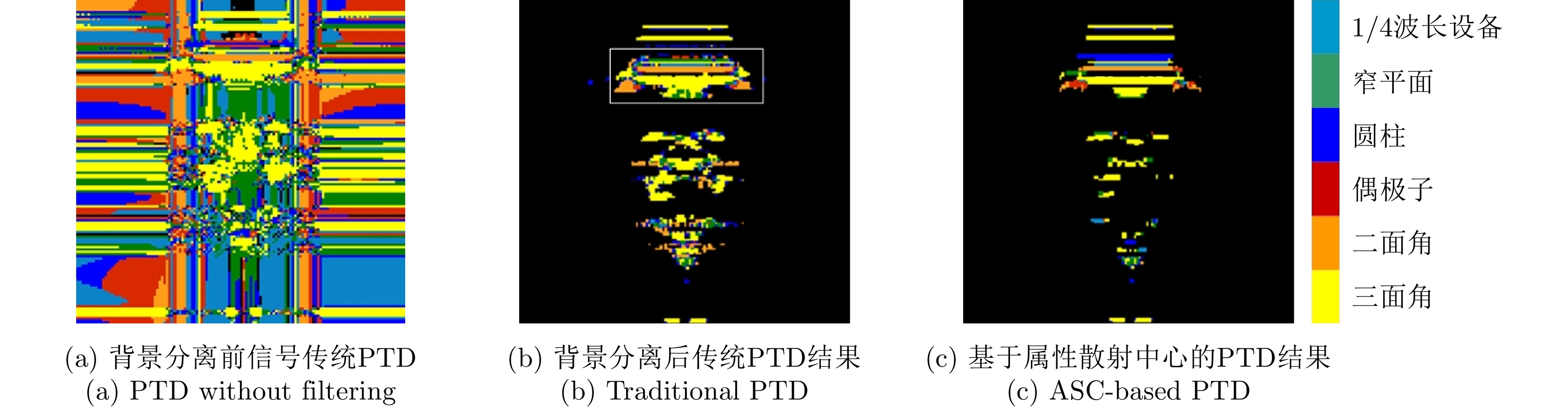

| [31] |

KOETS M A and MOSES R L. Image domain feature extraction from synthetic aperture imagery[C]. 1999 IEEE International Conference on Acoustics, Speech, and Signal Processing, Phoenix, USA, 1999: 2319–2322.

|

| [32] |

STACH J and LEBARON E. Enhanced image editing by peak region segmentation[C]. 18th Annual Meeting & Symposium of the Antenna Measurement Techniques Association, 1996: 303–307.

|

| [33] |

KOETS M A. Automated algorithms for extraction of physically relevant features from synthetic aperture radar imagery[D]. Ohio State University, 1998.

|

| [34] |

MOSES R L, POTTER L C, and GUPTA I J. Feature extraction using attributed scattering center models for model-based automatic target recognition (ATR)[R]. AFRL-SN-WP-TR-2006-1004, 2005.

|

| [35] |

AKYILDIZ Y and MOSES R L. Scattering center model for SAR imagery[C]. SAR Image Analysis, Modeling, and Techniques II, Florence, Italy, 1999: 76–85.

|

| [36] |

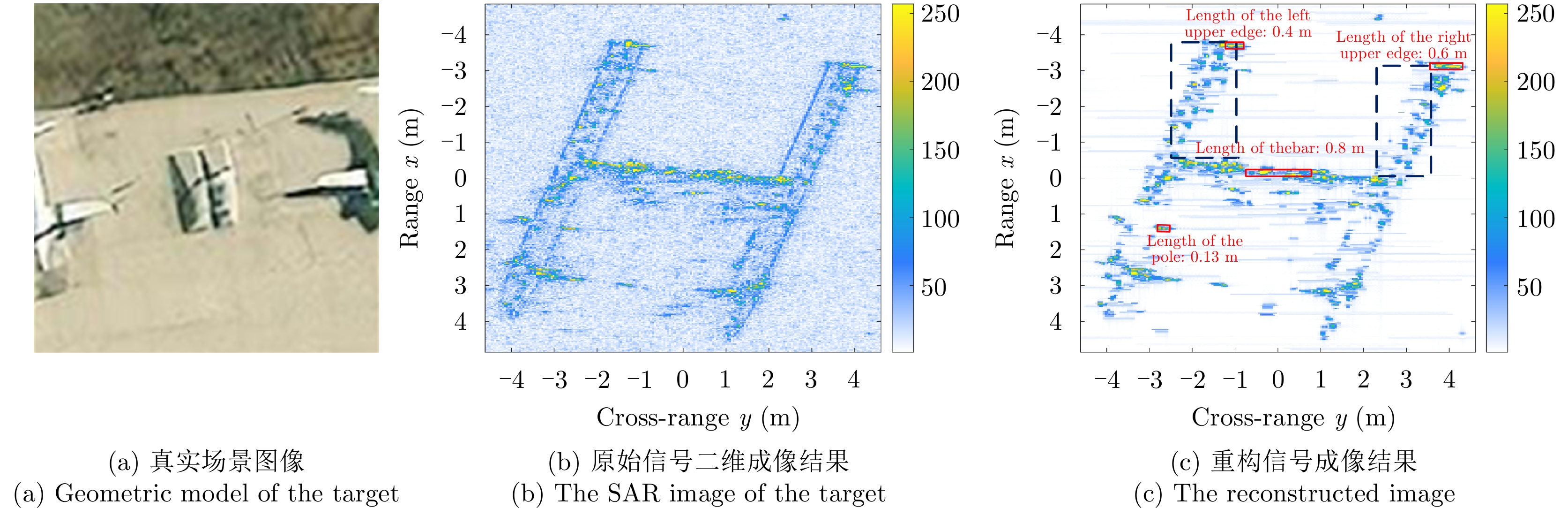

DING Baiyuan and WEN Gongjian. Target reconstruction based on 3-D scattering center model for robust SAR ATR[J]. IEEE Transactions on Geoscience and Remote Sensing, 2018, 56(7): 3772–3785. doi: 10.1109/TGRS.2018.2810181 |

| [37] |

DING Baiyuan, WEN Gongjian, HUANG Xiaohong, et al. Data augmentation by multilevel reconstruction using attributed scattering center for SAR target recognition[J]. IEEE Geoscience and Remote Sensing Letters, 2017, 14(6): 979–983. doi: 10.1109/LGRS.2017.2692386 |

| [38] |

丁柏圆. 针对扩展操作条件的合成孔径雷达图像目标识别方法研究[D]. [博士论文], 国防科技大学, 2018.

DING Baiyuan. Research on automatic target recognition of synthetic aperture radar images under extended operating conditions[D]. [Ph. D. dissertation], National University of Defense Technology, 2018.

|

| [39] |

JACKSON J A and MOSES R L. Synthetic aperture radar 3D feature extraction for arbitrary flight paths[J]. IEEE Transactions on Aerospace and Electronic Systems, 2012, 48(3): 2065–2084. doi: 10.1109/TAES.2012.6237579 |

| [40] |

AKYILDIZ Y. Feature extraction from synthetic aperture radar imagery[D]. [Ph. D. dissertation], The Ohio State University, 2000.

|

| [41] |

XU Feng, JIN Yaqiu, and MOREIRA A. A preliminary study on SAR advanced information retrieval and scene reconstruction[J]. IEEE Geoscience and Remote Sensing Letters, 2016, 13(10): 1443–1447. doi: 10.1109/LGRS.2016.2590878 |

| [42] |

DUAN Jia, ZHANG Lei, SHENG Jialian, et al. Parameters decouple and estimation of independent attributed scattering centers[J]. Journal of Electronics & Information Technology, 2012, 34(8): 1853–1859. doi: 10.3724/SP.J.1146.2011.01302 |

| [43] |

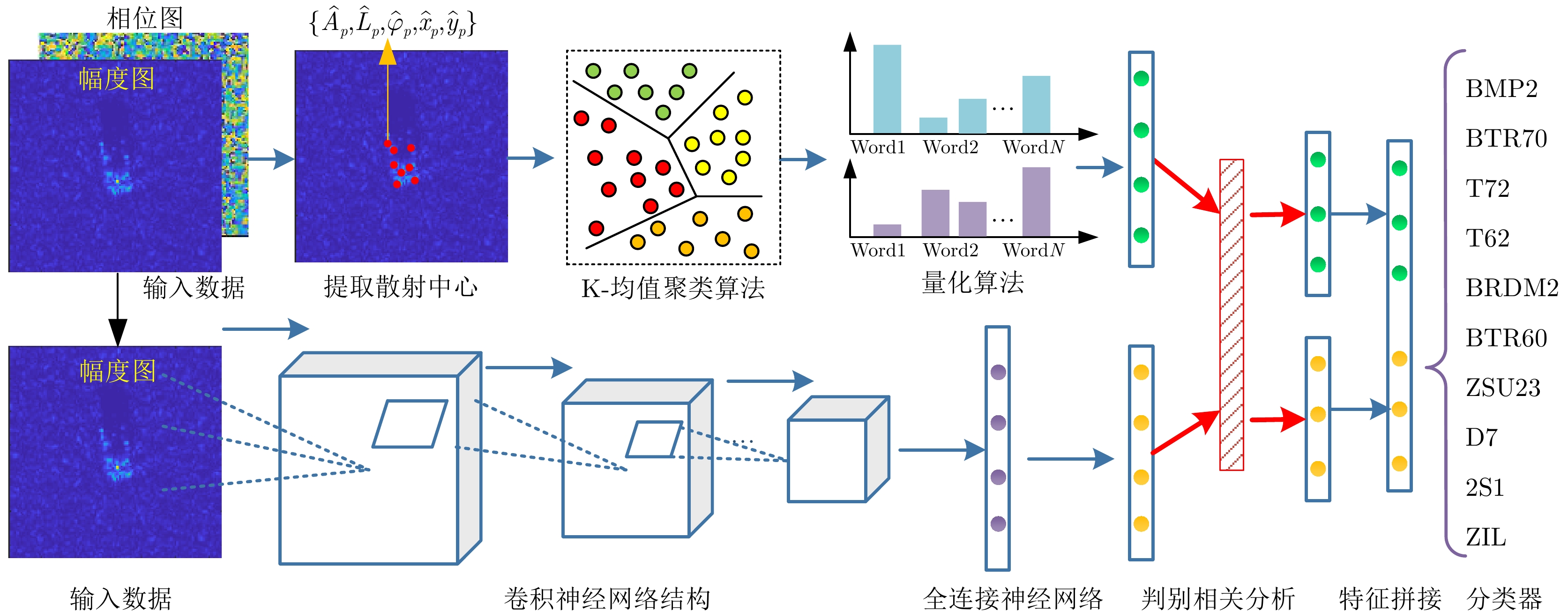

JI Kefeng, KUANG Gangyao, SU Yi, et al. Research on the extracting method of the scattering center feature from SAR imagery[J]. Journal of National University of Defense Technology, 2003, 25(1): 45–50. doi: 10.3969/j.issn.1001-2486.2003.01.010 |

| [44] |

蒋文, 李王哲. 基于幅相分离的属性散射中心参数估计新方法[J]. 雷达学报, 2019, 8(5): 606–615. doi: 10.12000/JR18097

JIANG Wen and LI Wangzhe. A new method for parameter estimation of attributed scattering centers based on amplitude-phase separation[J]. Journal of Radars, 2019, 8(5): 606–615. doi: 10.12000/JR18097 |

| [45] |

谢意远, 高悦欣, 邢孟道, 等. 跨谱段SAR散射中心多维参数解耦和估计方法[J]. 电子与信息学报, 2021, 43(3): 632–639. doi: 10.11999/JEIT200319

XIE Yiyuan, GAO Yuexin, XING Mengdao, et al. A decoupling and dimension dividing multi-parameter estimation method for cross-band SAR scattering centers[J]. Journal of Electronics & Information Technology, 2021, 43(3): 632–639. doi: 10.11999/JEIT200319 |

| [46] |

YANG Dongwen, NI Wei, DU Lan, et al. Efficient attributed scatter center extraction based on image-domain sparse representation[J]. IEEE Transactions on Signal Processing, 2020, 68: 4368–4381. doi: 10.1109/TSP.2020.3011332 |

| [47] |

XIE Yiyuan, XING Mengdao, GAO Yuexin, et al. Attributed scattering center extraction method for microwave photonic signals using DSM-PMM-regularized optimization[J]. IEEE Transactions on Geoscience and Remote Sensing, 2022, 60: 5230016. doi: 10.1109/TGRS.2022.3183855 |

| [48] |

周剑雄. 光学区雷达目标三维散射中心重构理论与技术[D]. [博士论文], 国防科学技术大学, 2006.

ZHOU Jianxiong. Theory and technology on reconstructing 3D scattering centers of radar targets in optical region[D]. [Ph. D. dissertation], National University of Defense Technology, 2006.

|

| [49] |

SHI Zhiguang, ZHOU Jianxiong, ZHAO Hongzhong, et al. A GTD scattering center model parameter estimation method based on CPSO[J]. Acta Electronica Sinica, 2007, 35(6): 1102–1107. doi: 10.3321/j.issn:0372-2112.2007.06.020 |

| [50] |

SUN Zhenzhen, CHEN Zengping, ZHUANG Zhaowen, et al. A parametric model for high frequency complex 2-D radar scattering[J]. Journal of National University of Defense Technology, 2001, 23(4): 113–119. doi: 10.3969/j.issn.1001-2486.2001.04.025 |

| [51] |

DUAN Jia, ZHANG Lei, XING Mengdao, et al. Novel feature extraction method for synthetic aperture radar targets[J]. Journal of Xidian University, 2014, 41(4): 13–19. doi: 10.3969/j.issn.1001-2400.2014.04.003 |

| [52] |

ZHAN Ronghui, HU Jiemin, and ZHANG Jun. A novel method for parametric estimation of 2D geometrical theory of diffraction model based on compressed sensing[J]. Journal of Electronics & Information Technology, 2013, 35(2): 419–425. doi: 10.3724/SP.J.1146.2012.00780 |

| [53] |

HAMMOND G B and JACKSON J A. SAR canonical feature extraction using molecule dictionaries[C]. 2013 IEEE Radar Conference (RadarCon13), Ottawa, Canada, 2013: 1–6.

|

| [54] |

WU Min, XING Mengdao, ZHANG Lei, et al. Super-resolution imaging algorithm based on attributed scattering center model[C]. 2014 IEEE China Summit & International Conference on Signal and Information Processing (ChinaSIP), Xi'an, China, 2014: 271–275.

|

| [55] |

LIU Hongwei, JIU Bo, LI Fei, et al. Attributed scattering center extraction algorithm based on sparse representation with dictionary refinement[J]. IEEE Transactions on Antennas and Propagation, 2017, 65(5): 2604–2614. doi: 10.1109/TAP.2017.2673764 |

| [56] |

李飞. 雷达图像目标特征提取方法研究[D]. [博士论文], 西安电子科技大学, 2014.

LI Fei. Study on target feature extraction based on radar image[D]. [Ph. D. dissertation], Xidian University, 2014.

|

| [57] |

LI Fei, JIU Bo, LIU Hongwei, et al. Sparse representation based algorithm for estimation of attributed scattering center parameter on SAR imagery[J]. Journal of Electronics & Information Technology, 2014, 36(4): 931–937. doi: 10.3724/SP.J.1146.2013.00576 |

| [58] |

CONG Yulai, CHEN Bo, LIU Hongwei, et al. Nonparametric Bayesian attributed scattering center extraction for synthetic aperture radar targets[J]. IEEE Transactions on Signal Processing, 2016, 64(18): 4723–4736. doi: 10.1109/TSP.2016.2569463 |

| [59] |

丛玉来. 基于深层贝叶斯生成网络的层次特征学习[D]. [博士论文], 西安电子科技大学, 2017.

CONG Yulai. Hierarchical feature learning based on deep Bayesian generative networks[D]. [Ph. D. dissertation], Xidian University, 2017.

|

| [60] |

LI Zenghui, JIN Kan, XU Bin, et al. An improved attributed scattering model optimized by incremental sparse Bayesian learning[J]. IEEE Transactions on Geoscience and Remote Sensing, 2016, 54(5): 2973–2987. doi: 10.1109/TGRS.2015.2509539 |

| [61] |

KIM K T and KIM H T. Two-dimensional scattering center extraction based on multiple elastic modules network[J]. IEEE Transactions on Antennas and Propagation, 2003, 51(4): 848–861. doi: 10.1109/TAP.2003.811107 |

| [62] |

LV Yuzeng, CAO Min, JIA Yuping, et al. 2-D scattering center extraction technique based on genetic algorithm[J]. Modern Radar, 2006, 28(11): 64–68. doi: 10.3969/j.issn.1004-7859.2006.11.019 |

| [63] |

JING Maoqiang and ZHANG Guo. Attributed scattering center extraction with genetic algorithm[J]. IEEE Transactions on Antennas and Propagation, 2021, 69(5): 2810–2819. doi: 10.1109/TAP.2020.3027630 |

| [64] |

FENG Sijia, JI Kefeng, WANG Fulai, et al. Electromagnetic scattering feature (ESF) module embedded network based on ASC model for robust and interpretable SAR ATR[J]. IEEE Transactions on Geoscience and Remote Sensing, 2022, 60: 5235415. doi: 10.1109/TGRS.2022.3208333 |

| [65] |

张磊, 何思远, 朱国强, 等. 雷达目标三维散射中心位置正向推导和分析[J]. 电子与信息学报, 2018, 40(12): 2854–2860. doi: 10.11999/JEIT180115

ZHANG Lei, HE Siyuan, ZHU Guoqiang, et al. Forward derivation and analysis for 3-D scattering center position of radar target[J]. Journal of Electronics & Information Technology, 2018, 40(12): 2854–2860. doi: 10.11999/JEIT180115 |

| [66] |

HU Jiemin, WANG Wei, ZHAI Qinglin, et al. Global scattering center extraction for radar targets using a modified RANSAC method[J]. IEEE Transactions on Antennas and Propagation, 2016, 64(8): 3573–3586. doi: 10.1109/TAP.2016.2574880 |

| [67] |

LIU Xiaoming, WEN Gongjian, and ZHONG Jinrong. Methods for parametrically reconstructing position of 3D scattering center model of targets from SAR images[J]. Journal of Radars, 2013, 2(2): 187–194. doi: 10.3724/SP.J.1300.2013.20080 |

| [68] |

MA Conghui, WEN Gongjian, DING Boyuan, et al. Three-dimensional electromagnetic model–based scattering center matching method for synthetic aperture radar automatic target recognition by combining spatial and attributed information[J]. Journal of Applied Remote Sensing, 2016, 10(1): 016025. doi: 10.1117/1.JRS.10.016025 |

| [69] |

马聪慧. 基于三维电磁散射部件模型的SAR目标识别方法研究[D]. [博士论文], 国防科技大学, 2017.

MA Conghui. Research on SAR target recognition with three dimensional electromagnetic part model[D]. [Ph. D. dissertation], National University of Defense Technology, 2017.

|

| [70] |

DAI Dahai, WANG Xuesong, XIAO Shunping, et al. Fully polarized scattering center extraction and parameter estimation: P-MUSIC algorithm[J]. Signal Processing, 2007, 23(6): 818–822. doi: 10.3969/j.issn.1003-0530.2007.06.005 |

| [71] |

DAI Dahai, WANG Xuesong, CHANG Yuliang, et al. Fully-polarized scattering center extraction and parameter estimation: P-SPRIT algorithm[C]. 2006 CIE International Conference on Radar, Shanghai, China, 2006: 1–4.

|

| [72] |

DAI Dahai, WANG Xuesong, and XIAO Shunping. Novel method for scattering center extraction based on coherent polarization GTD model[J]. Systems Engineering and Electronics, 2007, 29(7): 1057–1061. doi: 10.3321/j.issn:1001-506X.2007.07.010 |

| [73] |

DAI Dahai, ZHANG Jingke, WANG Xuesong, et al. Superresolution polarimetric ISAR imaging based on 2D CP-GTD model[J]. Journal of Sensors, 2015, 2015: 293141. doi: 10.1155/2015/293141 |

| [74] |

安文韬. 基于极化SAR的目标极化分解与散射特征提取研究[D]. [博士论文], 清华大学, 2010.

AN Wentao. The polarimetric decomposition and scattering characteristic extraction of polarimetric SAR[D]. [Ph. D. dissertation], Tsinghua University, 2010.

|

| [75] |

CLOUDE S R and POTTIER E. A review of target decomposition theorems in radar polarimetry[J]. IEEE Transactions on Geoscience and Remote Sensing, 1996, 34(2): 498–518. doi: 10.1109/36.485127 |

| [76] |

KROGAGER E. New decomposition of the radar target scattering matrix[J]. Electronics Letters, 1990, 26(18): 1525–1527. doi: 10.1049/el:19900979 |

| [77] |

CAMERON W L and LEUNG L K. Feature motivated polarization scattering matrix decomposition[C]. IEEE International Conference on Radar, Arlington, USA, 1990: 549–557.

|

| [78] |

徐丰. 全极化合成孔径雷达的正向与逆向遥感理论[D]. [博士论文], 复旦大学, 2007.

XU Feng. Direct and inverse remote sensing theories of polarimetric synthetic aperture radar[D]. [Ph. D. dissertation], Fudan University, 2007.

|

| [79] |

FULLER D F and SAVILLE M A. A high-frequency multipeak model for wide-angle SAR imagery[J]. IEEE Transactions on Geoscience and Remote Sensing, 2013, 51(7): 4279–4291. doi: 10.1109/TGRS.2012.2226732 |

| [80] |

DUAN Jia, ZHANG Lei, XING Mengdao, et al. Polarimetric target decomposition based on attributed scattering center model for synthetic aperture radar targets[J]. IEEE Geoscience and Remote Sensing Letters, 2014, 11(12): 2095–2099. doi: 10.1109/LGRS.2014.2320053 |

| [81] |

高悦欣. ISAR高分辨成像与目标参数估计算法研究[D]. [博士论文], 西安电子科技大学, 2018.

GAO Yuexin. Study of ISAR high resolution imaging and target parameter estimation algorithms[D]. [Ph. D. dissertation], Xidian University, 2018.

|

| [82] |

XU Feng, LI Yongchen, and JIN Yaqiu. Polarimetric–anisotropic decomposition and anisotropic entropies of high-resolution SAR images[J]. IEEE Transactions on Geoscience and Remote Sensing, 2016, 54(9): 5467–5482. doi: 10.1109/TGRS.2016.2565693 |

| [83] |

高悦欣, 李震宇, 盛佳恋, 等. 一种大转角SAR图像散射中心各向异性提取方法[J]. 电子与信息学报, 2016, 38(8): 1956–1961. doi: 10.11999/JEIT151261

GAO Yuexin, LI Zhenyu, SHENG Jialian, et al. Extraction method for anisotropy characteristic of scattering center in wide-angle SAR imagery[J]. Journal of Electronics & Information Technology, 2016, 38(8): 1956–1961. doi: 10.11999/JEIT151261 |

| [84] |

盛佳恋. ISAR高分辨成像和参数估计算法研究[D]. [博士论文], 西安电子科技大学, 2016.

SHENG Jialian. Study on ISAR high resolution imaging and parameter estimation techniques[D]. [Ph. D. dissertation], Xidian University, 2016.

|

| [85] |

CARRARA W G, GOODMAN R S, and MAJEWSKI R M. Spotlight Synthetic Aperture Radar: Signal Processing Algorithms[M]. Boston: Artech House, 1995.

|

| [86] |

ÇETIN M, STOJANOVIĆ I, ÖNHON N Ö, et al. Sparsity-driven synthetic aperture radar imaging: Reconstruction, autofocusing, moving targets, and compressed sensing[J]. IEEE Signal Processing Magazine, 2014, 31(4): 27–40. doi: 10.1109/MSP.2014.2312834 |

| [87] |

VARSHNEY K R, ÇETIN M, FISHER III J W, et al. Joint image formation and anisotropy characterization in wide-angle SAR[C]. Algorithms for Synthetic Aperture Radar Imagery XIII, Orlando, USA, 2006: 95–106.

|

| [88] |

VARSHNEY K R, ÇETIN M, FISHER J W, et al. Sparse representation in structured dictionaries with application to synthetic aperture radar[J]. IEEE Transactions on Signal Processing, 2008, 56(8): 3548–3561. doi: 10.1109/TSP.2008.919392 |

| [89] |

ASH J, ERTIN E, POTTER L C, et al. Wide-angle synthetic aperture radar imaging: Models and algorithms for anisotropic scattering[J]. IEEE Signal Processing Magazine, 2014, 31(4): 16–26. doi: 10.1109/MSP.2014.2311828 |

| [90] |

MOSES R L and ASH J N. An autoregressive formulation for SAR backprojection imaging[J]. IEEE Transactions on Aerospace and Electronic Systems, 2011, 47(4): 2860–2873. doi: 10.1109/TAES.2011.6034669 |

| [91] |

TRINTINALIA L C, BHALLA R, and LING Hao. Scattering center parameterization of wide-angle backscattered data using adaptive Gaussian representation[J]. IEEE Transactions on Antennas and Propagation, 1997, 45(11): 1664–1668. doi: 10.1109/8.650078 |

| [92] |

STOJANOVIĆ I, ÇETIN M, and KARL W C. Joint space aspect reconstruction of wide-angle SAR exploiting sparsity[C]. Algorithms for Synthetic Aperture Radar Imagery XV, Orlando, USA, 2008: 37–48.

|

| [93] |

ZINIEL J and SCHNITER P. Dynamic compressive sensing of time-varying signals via approximate message passing[J]. IEEE Transactions on Signal Processing, 2013, 61(21): 5270–5284. doi: 10.1109/TSP.2013.2273196 |

| [94] |

JIANG Wen, LIU Jianwei, YANG Jiyao, et al. A novel multiband fusion method based on a modified RELAX algorithm for high-resolution and anti-non-Gaussian colored clutter microwave imaging[J]. IEEE Transactions on Geoscience and Remote Sensing, 2021, 60: 5105312. doi: 10.1109/TGRS.2021.3109724 |

| [95] |

吴敏. 逆合成孔径雷达提高分辨率成像方法研究[D]. [博士论文], 西安电子科技大学, 2016.

WU Min. Study on high resolution ISAR imaging techniques[D]. [Ph. D. dissertation], Xidian University, 2016.

|

| [96] |

WU Min, ZHANG Lei, DUAN Jia, et al. Super-resolution SAR imaging algorithm based on attribute scattering center model[J]. Journal of Astronautics, 2014, 35(9): 1058–1064. doi: 10.3873/j.issn.1000-1328.2014.09.011 |

| [97] |

HUANG Lanqing, LIU Bin, LI Boying, et al. OpenSARShip: A dataset dedicated to Sentinel-1 ship interpretation[J]. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2018, 11(1): 195–208. doi: 10.1109/JSTARS.2017.2755672 |

| [98] |

JI Kefeng, KUANG Gangyao, SU Yi, et al. Methods of target’s peak extraction and azimuth estimation from SAR imagery[J]. Journal of Astronautics, 2004, 25(1): 102–108,113. doi: 10.3321/j.issn:1000-1328.2004.01.018 |

| [99] |

ZHANG Cui, LI Sudan, ZOU Tao, et al. An automatic target recognition method in SAR imagery using peak feature matching[J]. Journal of Image and Graphics, 2002, 7(7): 729–734. doi: 10.3969/j.issn.1006-8961.2002.07.020 |

| [100] |

CHIANG H C, MOSES R L, and POTTER L C. Model-based classification of radar images[J]. IEEE Transactions on Information Theory, 2000, 46(5): 1842–1854. doi: 10.1109/18.857795 |

| [101] |

DUNGAN K E and POTTER L C. Classifying transformation-variant attributed point patterns[J]. Pattern Recognition, 2010, 43(11): 3805–3816. doi: 10.1016/j.patcog.2010.05.033 |

| [102] |

LI Tingli and DU Lan. Target discrimination for SAR ATR based on scattering center feature and K-center one-class classification[J]. IEEE Sensors Journal, 2018, 18(6): 2453–2461. doi: 10.1109/JSEN.2018.2791947 |

| [103] |

LIN Yuesong, ZHANG Le, XUE Anke, et al. SAR imagery scattering center extraction and target recognition based on scattering center model[C]. 2006 6th World Congress on Intelligent Control and Automation, Dalian, China, 2006: 9631–9636.

|

| [104] |

文贡坚, 马聪慧, 丁柏圆, 等. 基于部件级三维参数化电磁模型的SAR目标物理可解释识别方法[J]. 雷达学报, 2020, 9(4): 608–621. doi: 10.12000/JR20099

WEN Gongjian, MA Conghui, DING Baiyuan, et al. SAR target physics interpretable recognition method based on three dimensional parametric electromagnetic part model[J]. Journal of Radars, 2020, 9(4): 608–621. doi: 10.12000/JR20099 |

| [105] |

丁柏圆, 文贡坚, 余连生, 等. 属性散射中心匹配及其在SAR目标识别中的应用[J]. 雷达学报, 2017, 6(2): 157–166. doi: 10.12000/JR16104

DING Baiyuan, WEN Gongjian, YU Liansheng, et al. Matching of attributed scattering center and its application to synthetic aperture radar automatic target recognition[J]. Journal of Radars, 2017, 6(2): 157–166. doi: 10.12000/JR16104 |

| [106] |

DING Baiyuan, WEN Gongjian, MA Conghui, et al. Decision fusion based on physically relevant features for SAR ATR[J]. IET Radar, Sonar & Navigation, 2017, 11(4): 682–690. doi: 10.1049/iet-rsn.2016.0357 |

| [107] |

DING Baiyuan, WEN Gongjian, HUANG Xiaohong, et al. Target recognition in synthetic aperture radar images via matching of attributed scattering centers[J]. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2017, 10(7): 3334–3347. doi: 10.1109/JSTARS.2017.2671919 |

| [108] |

CHIANG H C, MOSES R L, and POTTER L C. Model-based Bayesian feature matching with application to synthetic aperture radar target recognition[J]. Pattern Recognition, 2001, 34(8): 1539–1553. doi: 10.1016/S0031-3203(00)00089-3 |

| [109] |

ZHANG Lamei, SUN Liangjie, ZOU Bin, et al. Fully polarimetric SAR image classification via sparse representation and polarimetric features[J]. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2015, 8(8): 3923–3932. doi: 10.1109/JSTARS.2014.2359459 |

| [110] |

CHEN Sizhe, WANG Haipeng, XU Feng, et al. Target classification using the deep convolutional networks for SAR images[J]. IEEE Transactions on Geoscience and Remote Sensing, 2016, 54(8): 4806–4817. doi: 10.1109/TGRS.2016.2551720 |

| [111] |

DING Baiyuan, WEN Gongjian, MA Conghui, et al. An efficient and robust framework for SAR target recognition by hierarchically fusing global and local features[J]. IEEE Transactions on Image Processing, 2018, 27(12): 5983–5995. doi: 10.1109/TIP.2018.2863046 |

| [112] |

LI Tingli and DU Lan. SAR automatic target recognition based on attribute scattering center model and discriminative dictionary learning[J]. IEEE Sensors Journal, 2019, 19(12): 4598–4611. doi: 10.1109/JSEN.2019.2901050 |

| [113] |

LI Yi, DU Lan, and WEI Di. Multiscale CNN based on component analysis for SAR ATR[J]. IEEE Transactions on Geoscience and Remote Sensing, 2022, 60: 5211212. doi: 10.1109/TGRS.2021.3100137 |

| [114] |

ZHANG Jinsong, XING Mengdao, SUN Guangcai, et al. Integrating the reconstructed scattering center feature maps with deep CNN feature maps for automatic SAR target recognition[J]. IEEE Geoscience and Remote Sensing Letters, 2022, 19: 4009605. doi: 10.1109/LGRS.2021.3054747 |

| [115] |

ZHANG Jinsong, XING Mengdao, and XIE Yiyuan. FEC: A feature fusion framework for SAR target recognition based on electromagnetic scattering features and deep CNN features[J]. IEEE Transactions on Geoscience and Remote Sensing, 2021, 59(3): 2174–2187. doi: 10.1109/TGRS.2020.3003264 |

| [116] |

LIU Jiaming, XING Mengdao, YU Hanwen, et al. EFTL: Complex convolutional networks with electromagnetic feature transfer learning for SAR target recognition[J]. IEEE Transactions on Geoscience and Remote Sensing, 2022, 60: 5209811. doi: 10.1109/TGRS.2021.3083261 |

| [117] |

YANG Lichao, XING Mengdao, ZHANG Lei, et al. Integration of rotation estimation and high-order compensation for ultrahigh-resolution microwave photonic ISAR imagery[J]. IEEE Transactions on Geoscience and Remote Sensing, 2021, 59(3): 2095–2115. doi: 10.1109/TGRS.2020.2994337 |

| [118] |

DENG Yuhui, XING Mengdao, SUN Guangcai, et al. A processing framework for airborne microwave photonic SAR with resolution up to 0.03 m: motion estimation and compensation[J]. IEEE Transactions on Geoscience and Remote Sensing, 2022.

|
