Cooperative Detection of Ships in Optical and SAR Remote Sensing Images Based on Neighborhood Saliency


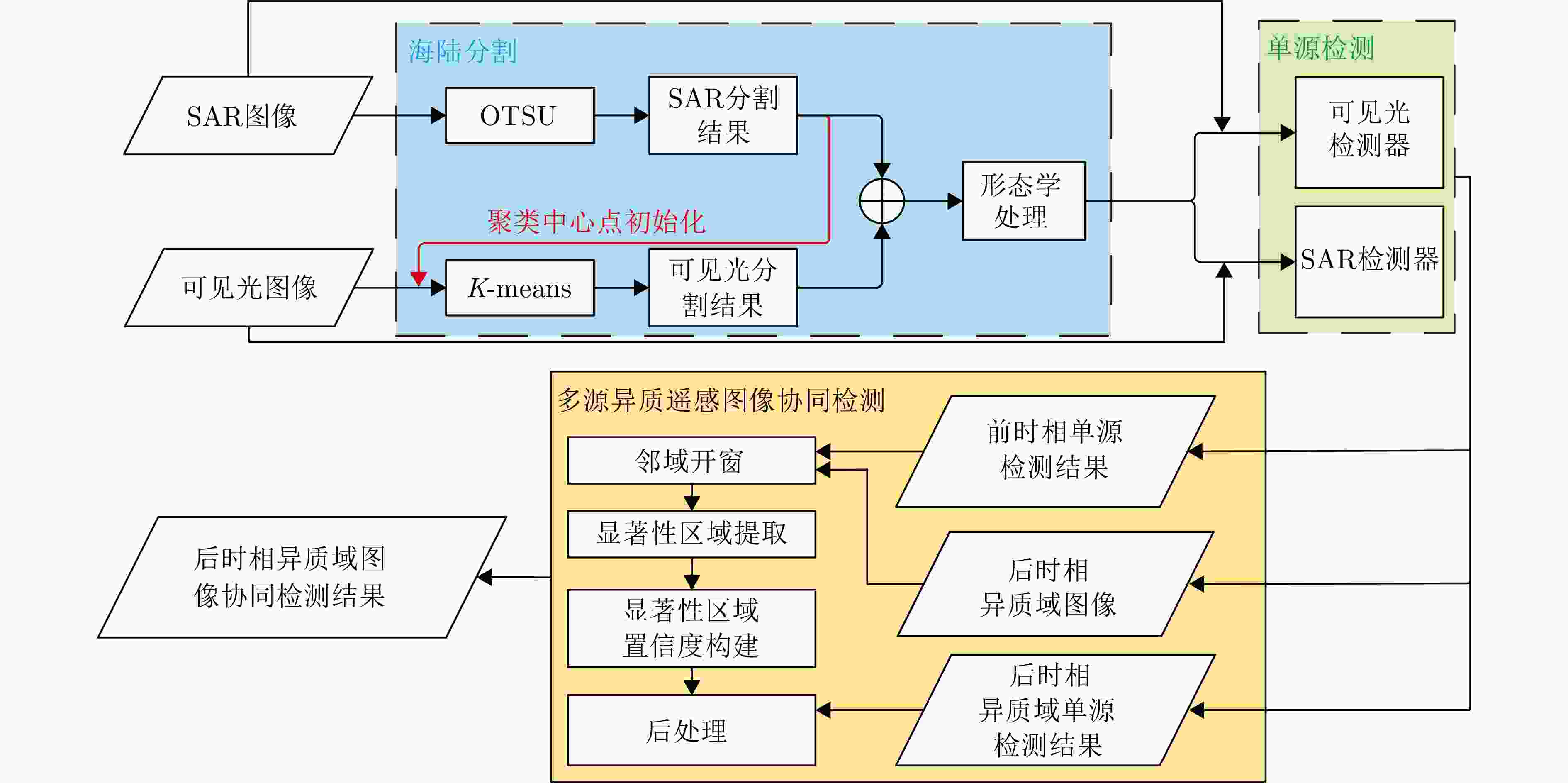

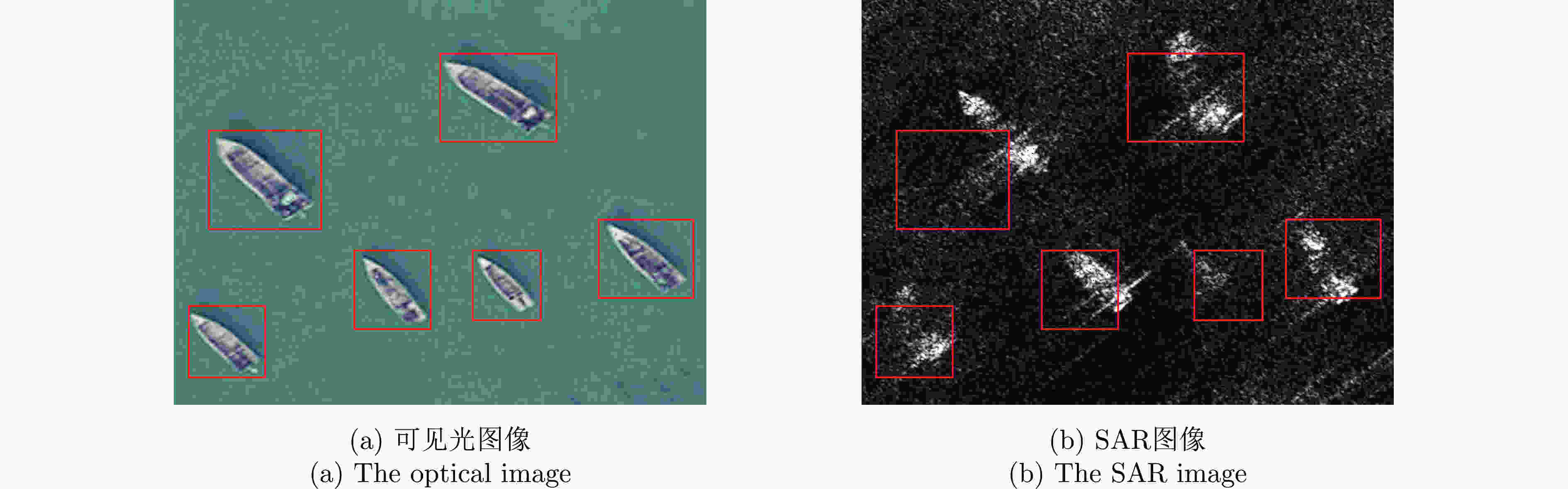

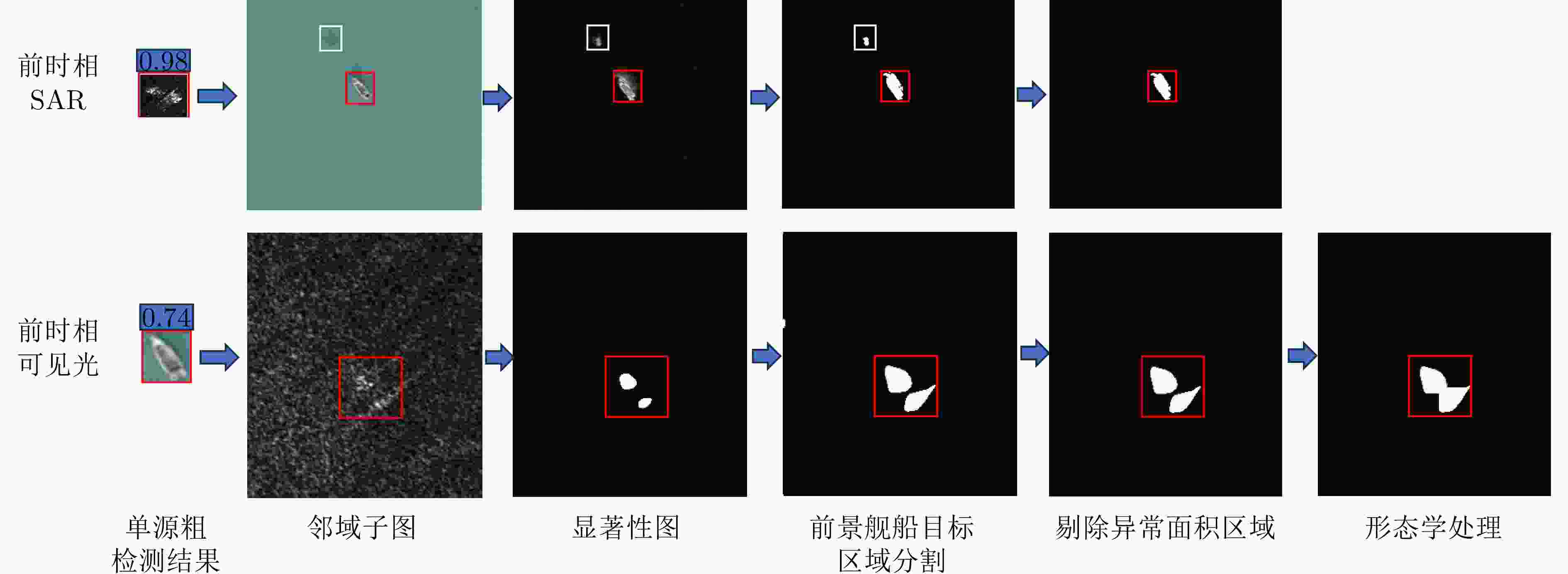

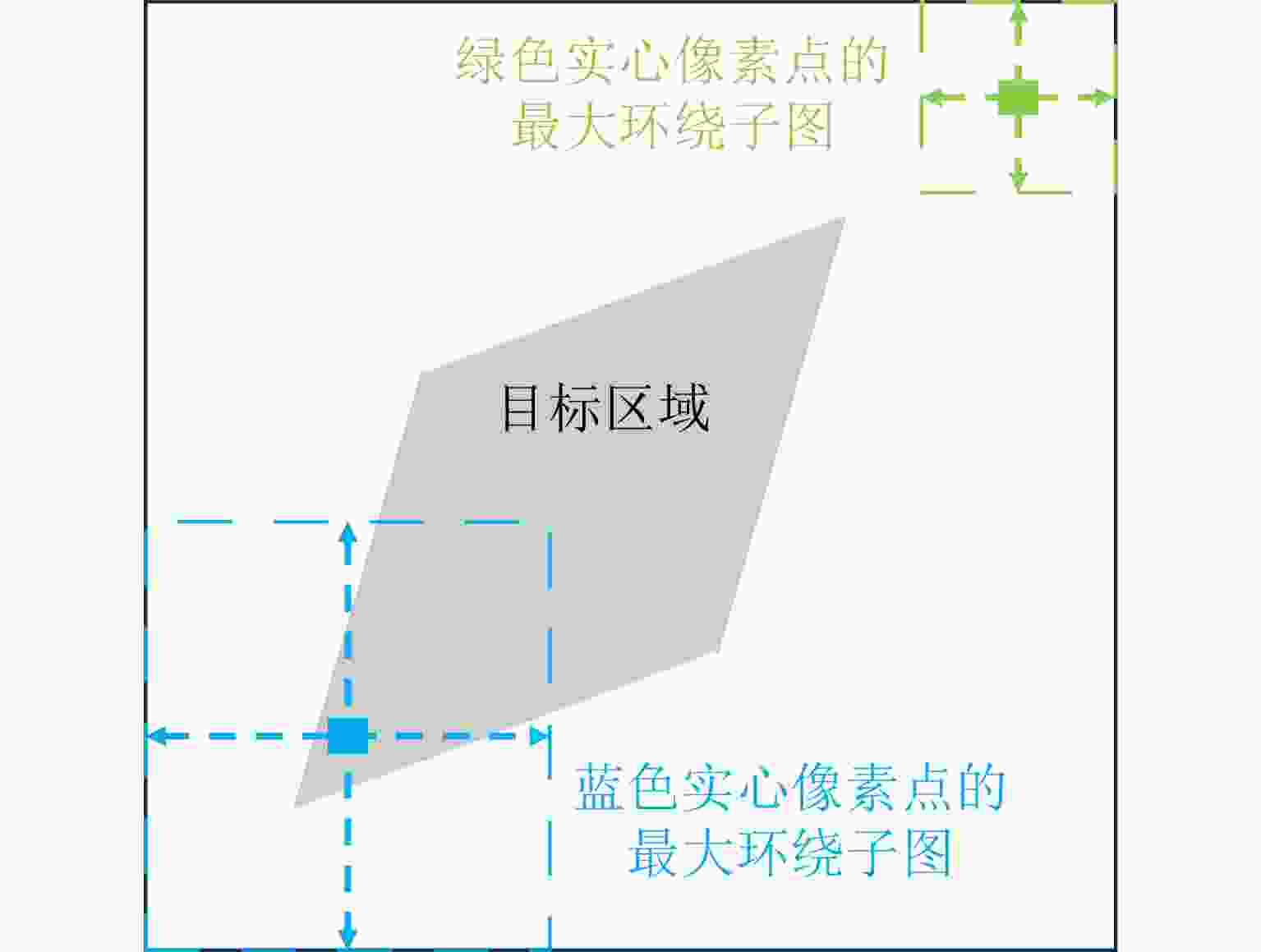

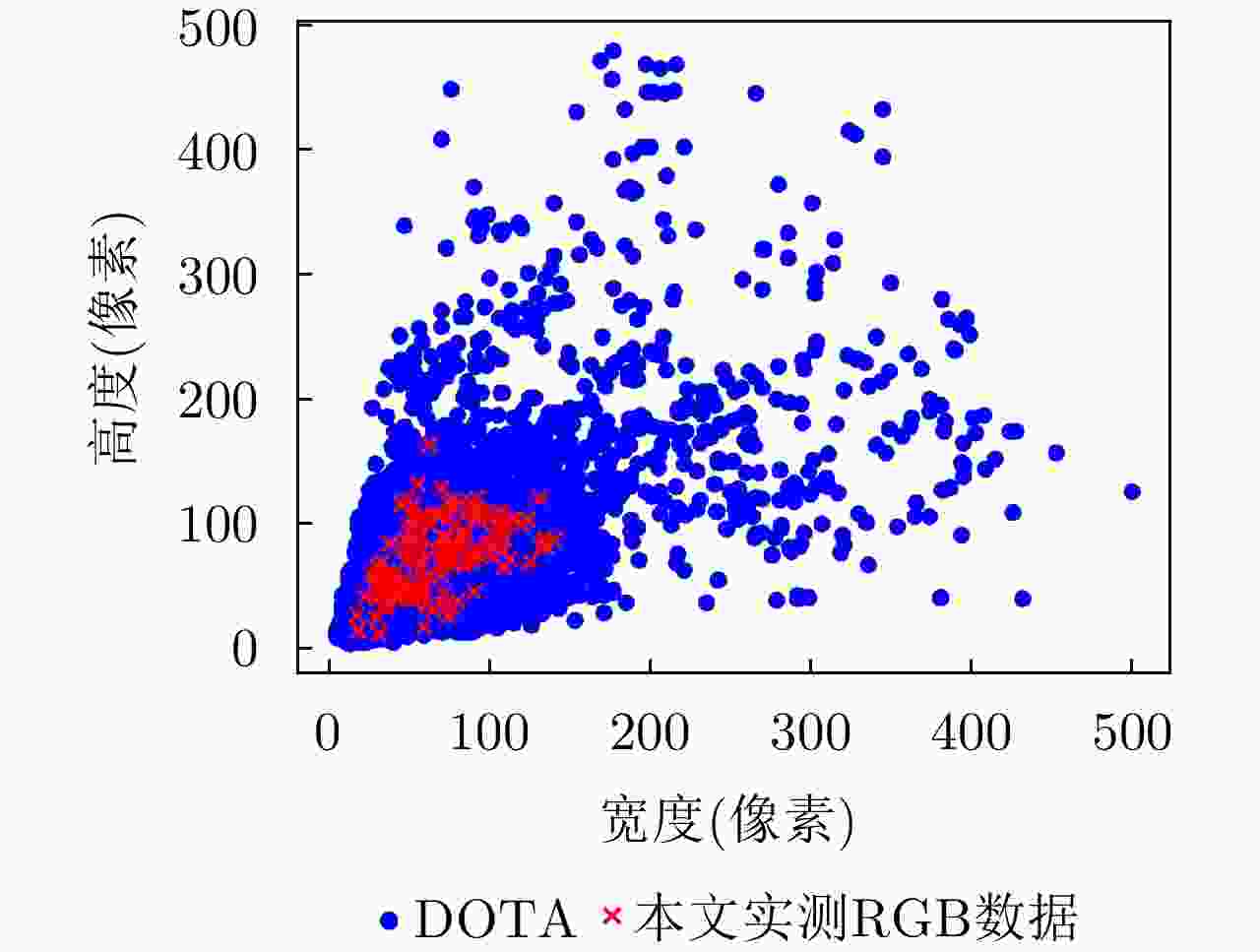

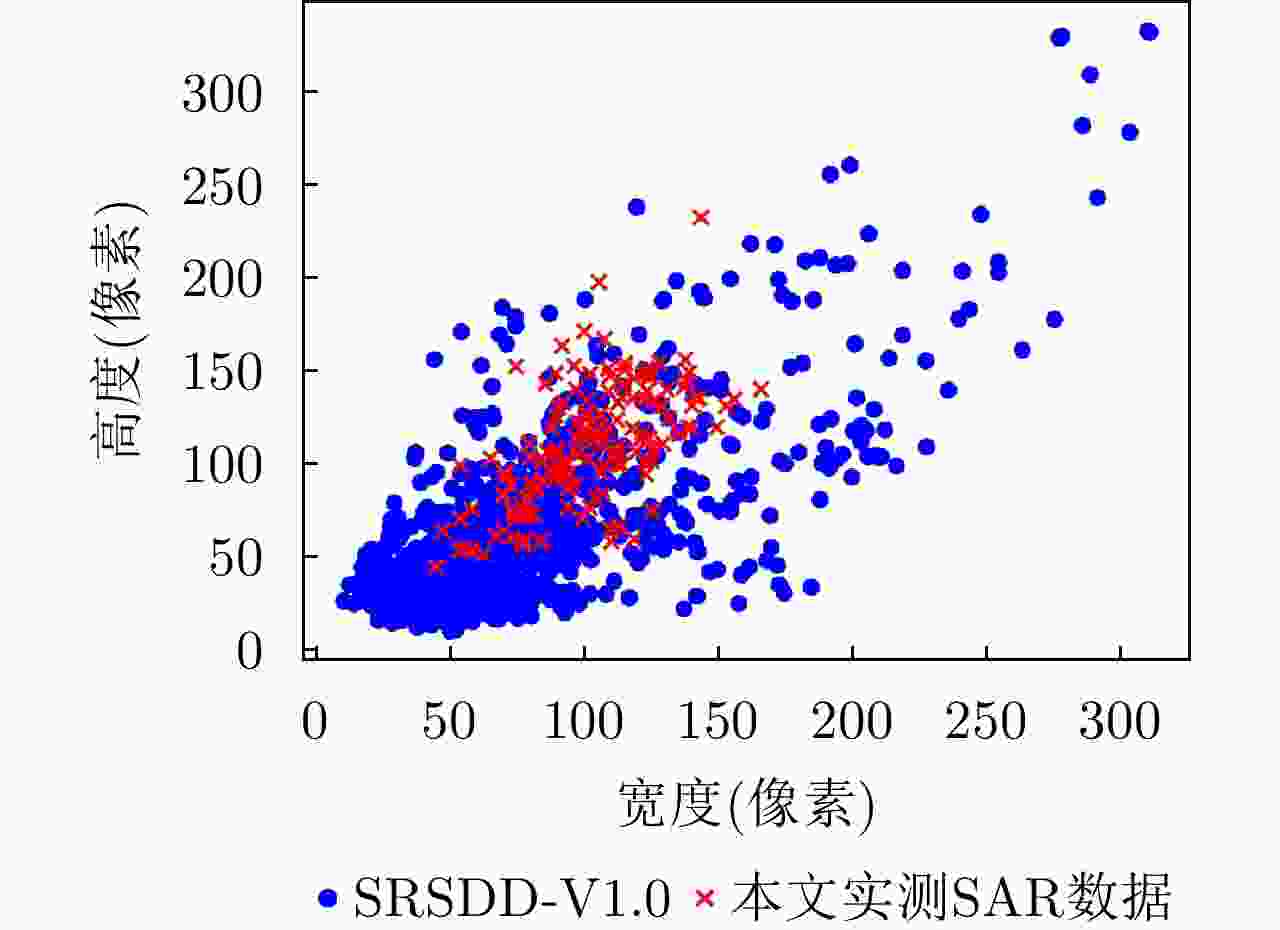

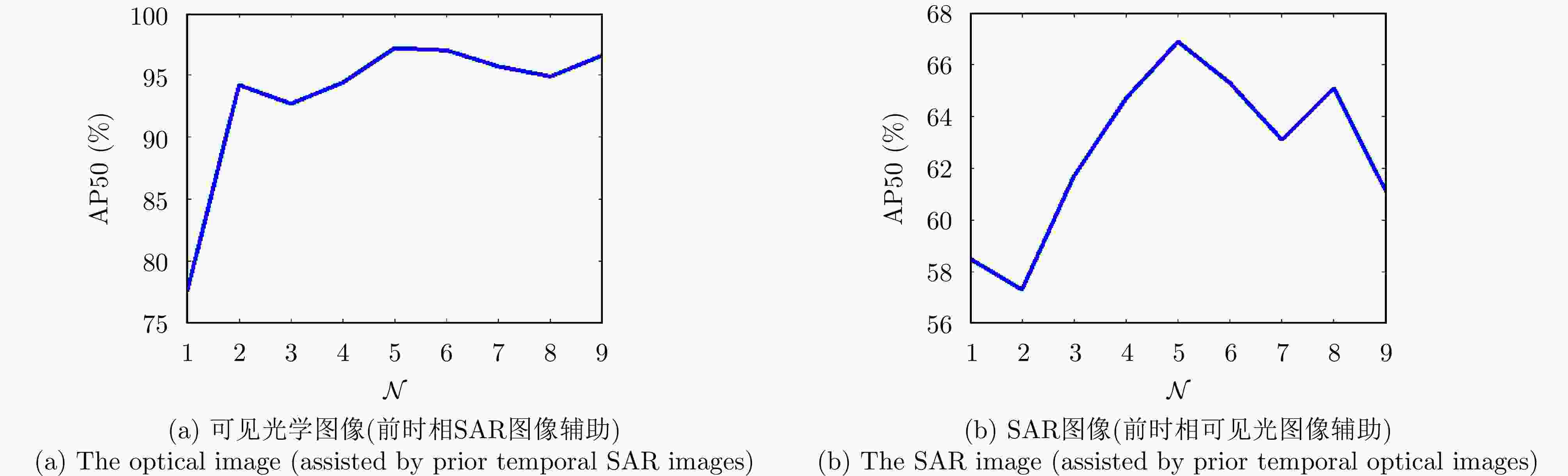

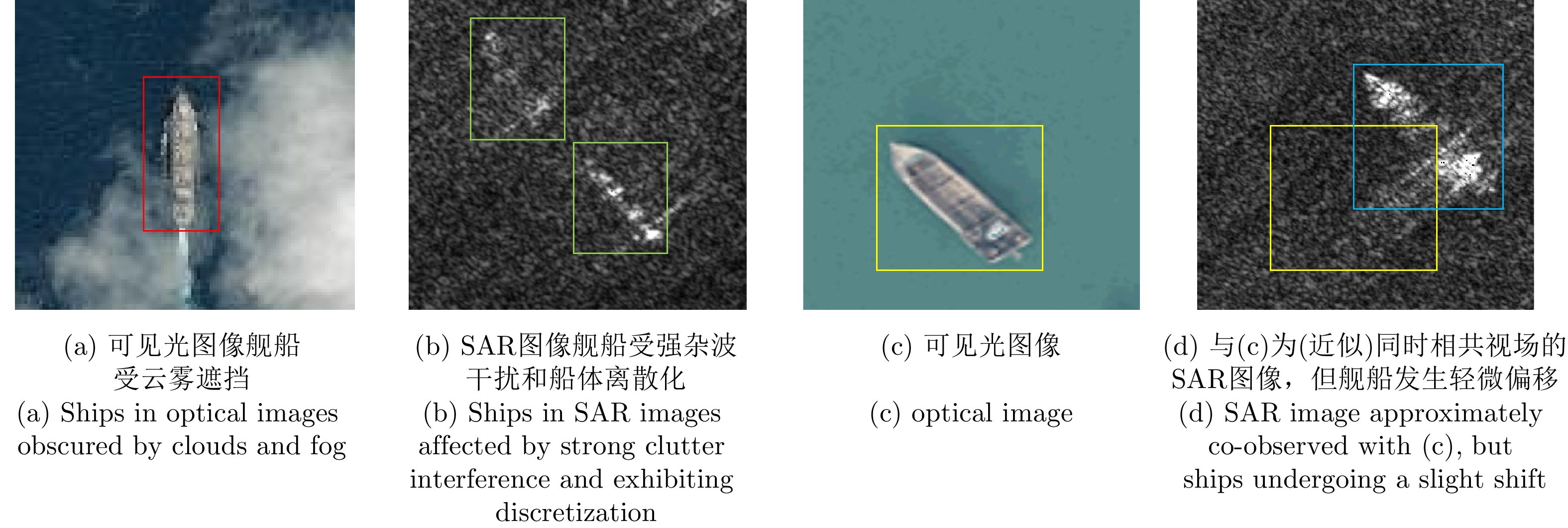

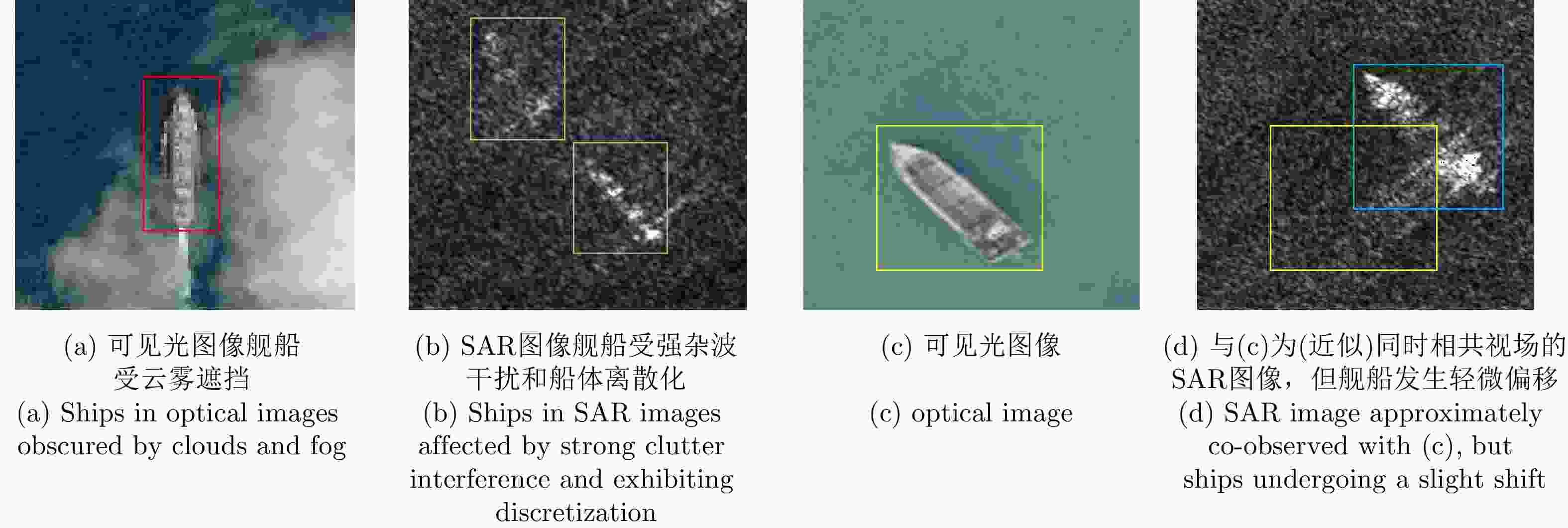

Abstract: In ship detection from remote sensing images, optical images offer rich detail and texture, but their quality is easily degraded by cloud and fog. Synthetic Aperture Radar (SAR) images can be acquired day and night in all weather, but their quality is susceptible to complex sea clutter. Cooperative detection that combines the strengths of optical and SAR images can therefore improve ship detection performance. Focusing on scenes in which a ship shifts slightly within a very small neighborhood between the prior and later temporal images, this paper proposes a neighborhood-saliency-based method for cooperative ship detection in multisource heterogeneous remote sensing images, namely optical and SAR data. First, cooperative sea-land segmentation of the optical and SAR images suppresses interference from land regions, and RetinaNet and YOLOv5s perform preliminary single-source detection on the optical and SAR images, respectively. Second, a multisource cooperative detection strategy is introduced: neighborhood windows are opened in the remote sensing images around the single-source detection results, and salient ships inside these windows are detected a second time. This strategy exploits the complementary advantages of heterogeneous optical and SAR images and reduces both missed detections and false alarms, thereby improving overall detection performance. The method was validated on optical and SAR remote sensing images acquired over Yantai, China, in 2022. Compared with existing ship detection methods, it improves detection accuracy (AP50) by at least 1.9 percentage points, demonstrating its effectiveness and superiority.


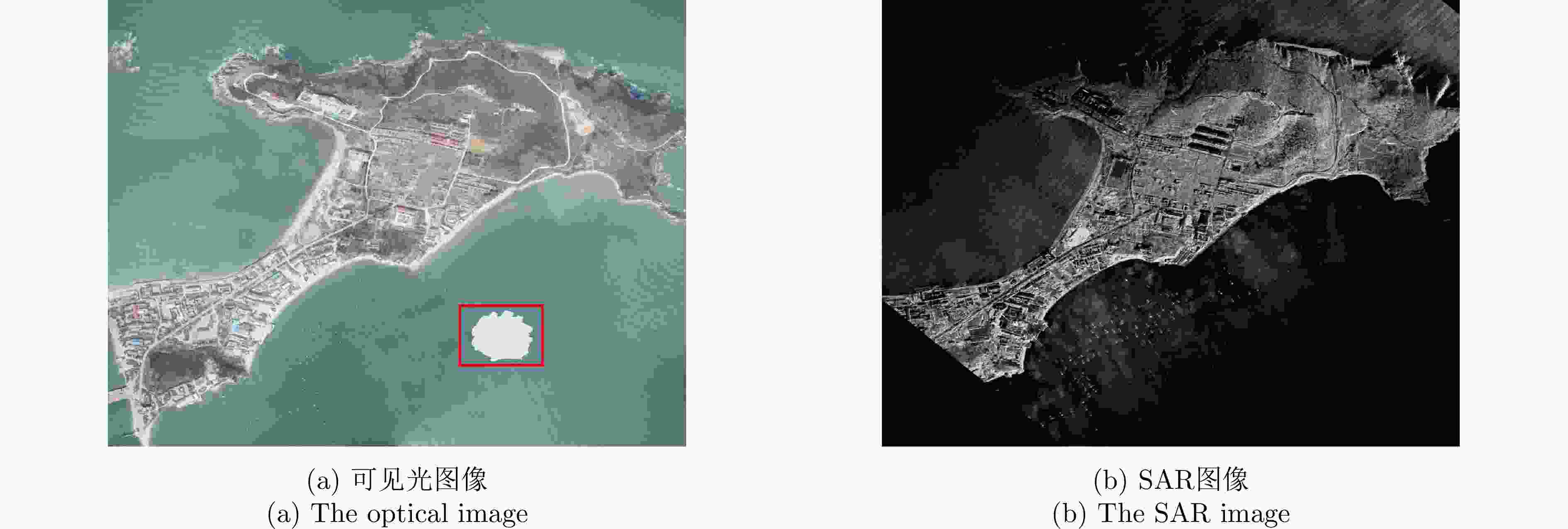

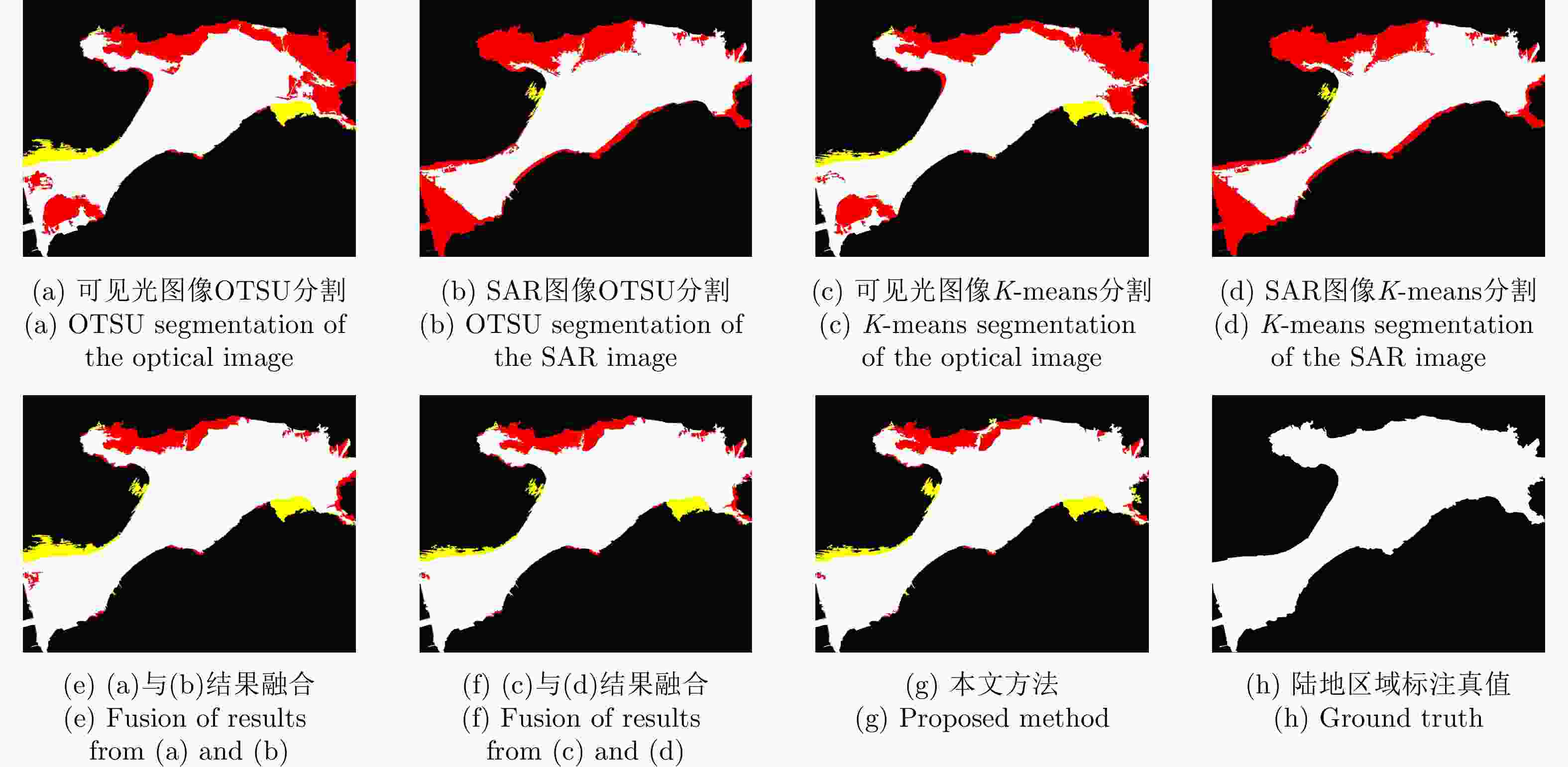

Figure 12. Sea-land segmentation results (white: predicted land that is truly land; yellow: predicted land that is actually sea; black: predicted sea that is truly sea; red: predicted sea that is actually land)
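The four color classes in Figure 12 are the cells of a land/sea confusion matrix with land as the positive class. The sketch below renders such a map from a predicted and a ground-truth land mask; it assumes both masks are co-registered 2-D arrays in which nonzero means land, and the function name `confusion_map` and the exact RGB values are illustrative choices, not taken from the paper.

```python
import numpy as np

# RGB colors of the classes in Figure 12 (land is the positive class);
# the exact RGB values are illustrative assumptions
COLORS = {
    "tp": (255, 255, 255),  # white: predicted land, truly land
    "fp": (255, 255, 0),    # yellow: predicted land, actually sea
    "fn": (255, 0, 0),      # red: predicted sea, actually land
}


def confusion_map(pred_land, true_land):
    """Color-code co-registered land masks as in Figure 12 (returns H x W x 3 uint8)."""
    pred = np.asarray(pred_land, dtype=bool)
    true = np.asarray(true_land, dtype=bool)
    # black by default, i.e. predicted sea that is truly sea
    out = np.zeros(pred.shape + (3,), dtype=np.uint8)
    out[pred & true] = COLORS["tp"]
    out[pred & ~true] = COLORS["fp"]
    out[~pred & true] = COLORS["fn"]
    return out
```

For example, `confusion_map([[1, 1], [0, 0]], [[1, 0], [1, 0]])` yields white, yellow, red and black pixels in reading order.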

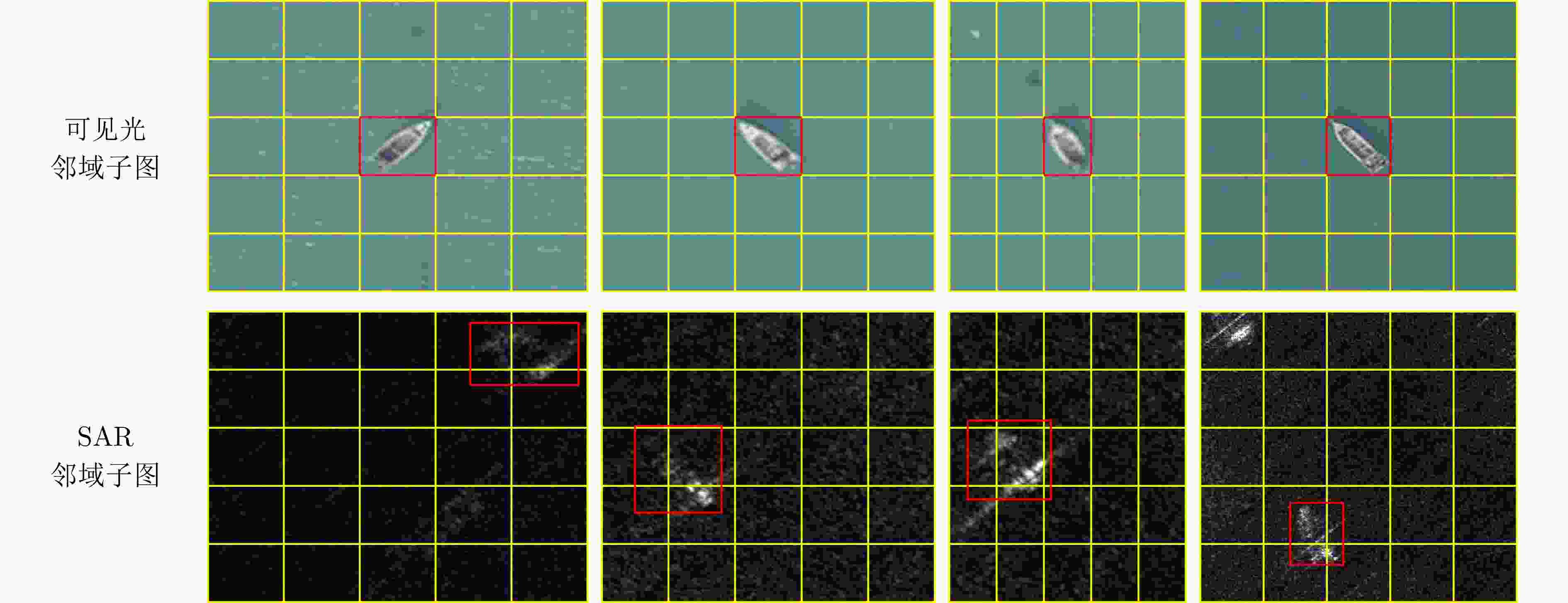

Figure 14. 5× neighborhood windowing of approximately co-temporal multisource heterogeneous remote sensing images (red boxes mark the true ship positions; yellow boxes show the 5× neighborhood windows centered on the ships detected in the optical image)
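The 5× neighborhood window of Figure 14 can be sketched as below. This is a minimal illustration under three assumptions not stated in this section: boxes are pixel coordinates `(x1, y1, x2, y2)`, "5×" scales the box width and height each by 5 about the box center, and the optical and SAR images are co-registered on one pixel grid, so a window computed from a box in one image can be cut from the other. The function `neighborhood_window` is hypothetical.

```python
def neighborhood_window(box, scale, img_w, img_h):
    """Enlarge box (x1, y1, x2, y2) by `scale` about its center, clipped to the image.

    Assumes `scale` multiplies width and height separately (scale=5 for Figure 14).
    Returns integer (x1, y1, x2, y2) with x2/y2 exclusive, ready for array slicing.
    """
    x1, y1, x2, y2 = box
    cx, cy = (x1 + x2) / 2.0, (y1 + y2) / 2.0
    half_w = (x2 - x1) * scale / 2.0
    half_h = (y2 - y1) * scale / 2.0
    # clip so windows near the image border stay inside the image
    wx1 = max(0, int(round(cx - half_w)))
    wy1 = max(0, int(round(cy - half_h)))
    wx2 = min(img_w, int(round(cx + half_w)))
    wy2 = min(img_h, int(round(cy + half_h)))
    return wx1, wy1, wx2, wy2
```

With the returned coordinates, `image[wy1:wy2, wx1:wx2]` on a NumPy array gives the neighborhood sub-image; a 20 × 10 pixel box at `(100, 200, 120, 210)` becomes the 100 × 50 window `(60, 180, 160, 230)`.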

Table 1. Performance comparison of single-source detectors (%)

| Image type | Method | P | R | F1 | AP50 |
|---|---|---|---|---|---|
| Optical | RetinaNet | 95.7 | 91.5 | 93.6 | 95.3 |
| Optical | YOLOv5s | 81.5 | 75.8 | 78.6 | 77.1 |
| Optical | YOLOv5m | 81.6 | 77.1 | 79.3 | 79.7 |
| Optical | YOLOv5l | 70.6 | 65.9 | 68.2 | 58.6 |
| Optical | YOLOv5x | 67.7 | 65.4 | 66.5 | 58.6 |
| SAR | RetinaNet | 50.2 | 39.6 | 44.3 | 38.5 |
| SAR | YOLOv5s | 62.6 | 50.0 | 55.6 | 50.7 |
| SAR | YOLOv5m | 60.0 | 38.9 | 47.2 | 48.6 |
| SAR | YOLOv5l | 54.6 | 37.5 | 44.5 | 42.5 |
| SAR | YOLOv5x | 40.1 | 33.3 | 36.4 | 31.4 |
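The P, R, F1 and AP50 columns follow the usual object-detection definitions. As a hedged sketch (the paper's exact matching rule and score threshold are not given in this section), detections can be matched greedily, in descending score order, to the best unused ground-truth box at IoU ≥ 0.5; P, R and F1 are then read off after all detections are counted, and AP50 is the area under the all-point interpolated precision-recall curve. All function names are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_detections(dets, gts, thr=0.5):
    """dets: list of (box, score); gts: list of boxes. Returns [(score, is_tp)]."""
    used = [False] * len(gts)
    results = []
    for box, score in sorted(dets, key=lambda d: d[1], reverse=True):
        best, best_j = 0.0, -1
        for j, g in enumerate(gts):
            o = iou(box, g)
            if not used[j] and o > best:
                best, best_j = o, j
        if best_j >= 0 and best >= thr:
            used[best_j] = True          # each ground truth is matched at most once
            results.append((score, True))
        else:
            results.append((score, False))
    return results


def prf1_ap50(dets, gts):
    """Precision, recall and F1 over all detections, plus AP at IoU 0.5."""
    tp = fp = 0
    precisions, recalls = [], []
    for _, is_tp in match_detections(dets, gts, 0.5):
        tp += is_tp
        fp += not is_tp
        precisions.append(tp / (tp + fp))
        recalls.append(tp / len(gts) if gts else 0.0)
    p = precisions[-1] if precisions else 0.0
    r = recalls[-1] if recalls else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    # all-point interpolation: sum recall steps times the best precision to their right
    ap, prev_r = 0.0, 0.0
    for i, rec in enumerate(recalls):
        ap += (rec - prev_r) * max(precisions[i:])
        prev_r = rec
    return p, r, f1, ap
```

With two ground-truth ships and three detections, one of which is a false alarm scored between the two hits, this gives P = 2/3, R = 1, F1 = 0.8 and AP50 = 5/6.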

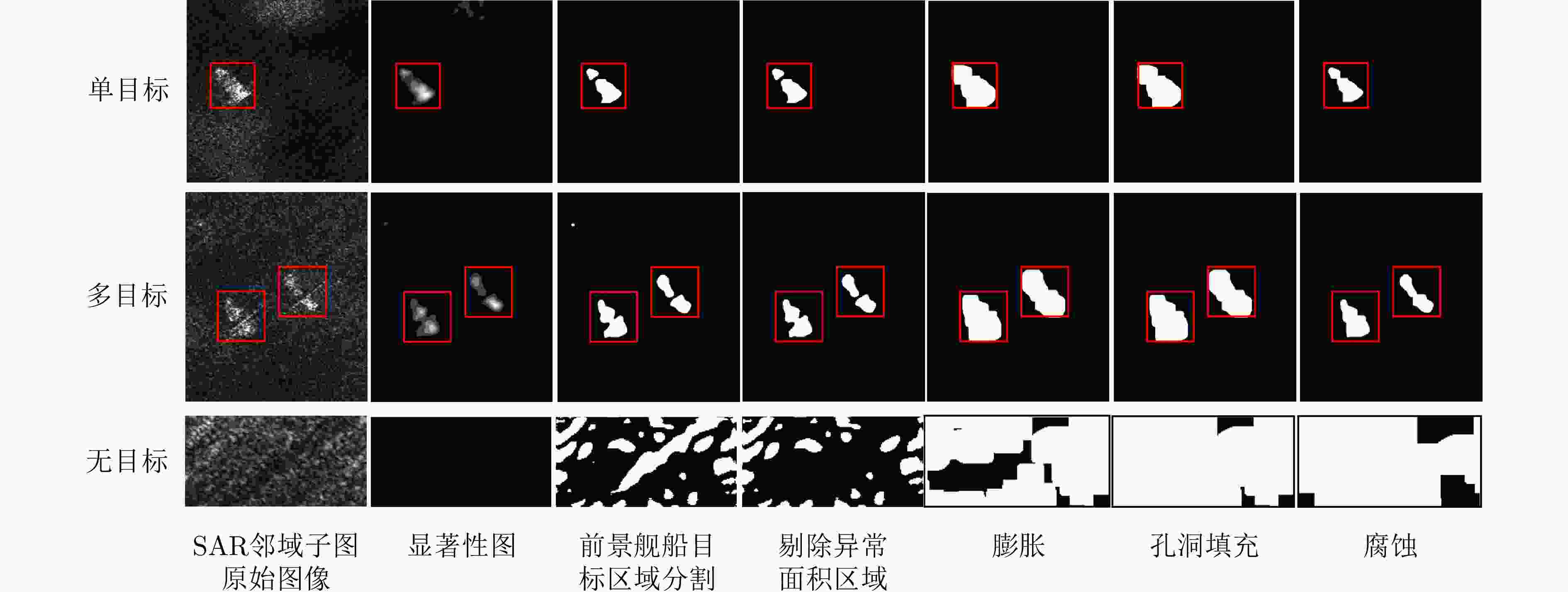

Algorithm 1. Detection of salient ships in later temporal optical images assisted by prior temporal SAR single-source detection results

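Input: SAR single-source detection boxes $B^{\rm S} = \{[b_1^{\rm S}, c_1^{\rm S}], [b_2^{\rm S}, c_2^{\rm S}], \cdots, [b_m^{\rm S}, c_m^{\rm S}], \cdots, [b_M^{\rm S}, c_M^{\rm S}]\}$, where $b_m^{\rm S}$ is the $m$-th box in the SAR single-source detection results and $c_m^{\rm S}$ is its confidence.

Output: detection results for the later temporal optical image, $B^{\rm OC} = \{B^{\rm O}, B_1^{\rm OC}, B_2^{\rm OC}, \cdots, B_M^{\rm OC}\}$.

Steps:

1. From $b_m^{\rm S}$, generate the neighborhood sub-image ${\boldsymbol I}_m^{\rm O}$ of the corresponding region in the optical image, and convert it to the CIELAB color space to obtain ${\boldsymbol I}_m^{\rm Lab}$.
2. Apply Gaussian filtering to ${\boldsymbol I}_m^{\rm Lab}$ to obtain the smoothed image ${\boldsymbol I}_m^{{\rm Lab},s}$.
3. Compute the mean image ${\boldsymbol I}_m^{{\rm Lab},\mu}$ of ${\boldsymbol I}_m^{\rm Lab}$ according to Eq. (5).
4. Substitute the results of Steps 2 and 3 into Eq. (4) to compute the saliency map ${\boldsymbol S}_m^{\rm Lab}$ of ${\boldsymbol I}_m^{\rm Lab}$.
5. Segment ${\boldsymbol S}_m^{\rm Lab}$ with Otsu's method to obtain all candidate foreground target regions $T_m$.
6. Compute the area of each foreground region and remove small blob noise and abnormally large connected clutter regions from $T_m$.
7. According to Eq. (8), use $c_m^{\rm S}$ to compute the confidence score and the bounding rectangle of each foreground region remaining in $T_m$ after Step 6, giving the detection results $B_m^{\rm OC}$ of the current neighborhood sub-image.
8. Repeat Steps 1 to 7 until all $M$ boxes in $B^{\rm S}$ have been processed, yielding $M$ groups of neighborhood sub-image detection results; merge them with the optical single-source detection results $B^{\rm O}$ to produce the detection results of the later temporal optical image assisted by the prior temporal SAR single-source results, $B^{\rm OC} = \{B^{\rm O}, B_1^{\rm OC}, B_2^{\rm OC}, \cdots, B_M^{\rm OC}\}$.
9. Perform internal box fusion and non-maximum suppression (NMS) on $B^{\rm OC}$.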
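Steps 2 to 6 of Algorithm 1 (and the matching steps of Algorithm 2) can be prototyped with NumPy alone. Equations (4) and (5) are not reproduced in this section, so the sketch assumes the form of the maximum symmetric surround saliency of Achanta and Süsstrunk: the mean image at each pixel averages the largest window symmetric about that pixel, and the saliency is the per-pixel Euclidean distance, across channels, between the Gaussian-smoothed image and that mean image. The CIELAB conversion of Step 1 is assumed to be done beforehand (for example with `skimage.color.rgb2lab`); a single-channel SAR sub-image can be passed directly. `sigma`, `min_area`, `max_area` and all function names are illustrative, and the Eq. (8) confidence assignment is omitted.

```python
import numpy as np
from collections import deque


def gaussian_blur(img, sigma=1.0):
    """Separable Gaussian smoothing of an H x W x C float image (Step 2)."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-(x ** 2) / (2 * sigma ** 2))
    kernel /= kernel.sum()

    def smooth_1d(v):
        # replicate edge values so the output keeps the input length
        return np.convolve(np.pad(v, radius, mode="edge"), kernel, mode="valid")

    out = img.astype(float)
    for axis in (0, 1):
        out = np.apply_along_axis(smooth_1d, axis, out)
    return out


def symmetric_surround_mean(img):
    """Mean over the largest window centered on, and symmetric about, each pixel (Step 3)."""
    h, w = img.shape[:2]
    ii = np.zeros((h + 1, w + 1) + img.shape[2:])  # integral image with a zero border
    ii[1:, 1:] = img.cumsum(axis=0).cumsum(axis=1)
    out = np.empty(img.shape, dtype=float)
    for y in range(h):
        dy = min(y, h - 1 - y)
        y1, y2 = y - dy, y + dy + 1
        for x in range(w):
            dx = min(x, w - 1 - x)
            x1, x2 = x - dx, x + dx + 1
            total = ii[y2, x2] - ii[y1, x2] - ii[y2, x1] + ii[y1, x1]
            out[y, x] = total / ((y2 - y1) * (x2 - x1))
    return out


def saliency_map(img, sigma=1.0):
    """Euclidean distance between smoothed and surround-mean images (Step 4)."""
    img = np.asarray(img, dtype=float)
    if img.ndim == 2:  # single-channel SAR sub-image
        img = img[..., None]
    diff = gaussian_blur(img, sigma) - symmetric_surround_mean(img)
    return np.sqrt((diff ** 2).sum(axis=-1))


def otsu_threshold(values, bins=256):
    """Threshold maximizing Otsu's between-class variance (Step 5)."""
    hist, edges = np.histogram(np.ravel(values), bins=bins)
    p = hist / hist.sum()
    centers = (edges[:-1] + edges[1:]) / 2
    w0 = np.cumsum(p)             # class-0 probability up to each bin
    mu = np.cumsum(p * centers)   # cumulative first moment
    w1 = np.clip(1.0 - w0, 0.0, None)
    with np.errstate(divide="ignore", invalid="ignore"):
        between = (mu[-1] * w0 - mu) ** 2 / (w0 * w1)
    k = int(np.argmax(np.nan_to_num(between, nan=0.0, posinf=0.0)))
    return edges[k + 1]  # values >= this are foreground


def region_boxes(mask, min_area=1, max_area=None):
    """Boxes (x1, y1, x2, y2), x2/y2 exclusive, of 8-connected regions with
    pixel count in [min_area, max_area] (Step 6)."""
    h, w = mask.shape
    max_area = h * w if max_area is None else max_area
    seen = np.zeros((h, w), dtype=bool)
    boxes = []
    for sy, sx in zip(*np.nonzero(mask)):
        if seen[sy, sx]:
            continue
        seen[sy, sx] = True
        queue, ys, xs = deque([(sy, sx)]), [], []
        while queue:  # breadth-first flood fill of one region
            y, x = queue.popleft()
            ys.append(y)
            xs.append(x)
            for ny in range(max(0, y - 1), min(h, y + 2)):
                for nx in range(max(0, x - 1), min(w, x + 2)):
                    if mask[ny, nx] and not seen[ny, nx]:
                        seen[ny, nx] = True
                        queue.append((ny, nx))
        if min_area <= len(ys) <= max_area:
            boxes.append((int(min(xs)), int(min(ys)), int(max(xs)) + 1, int(max(ys)) + 1))
    return boxes


def salient_regions(sub_image, sigma=1.0, min_area=4, max_area=None):
    """Steps 2-6 for one neighborhood sub-image."""
    sal = saliency_map(sub_image, sigma)
    return region_boxes(sal >= otsu_threshold(sal), min_area, max_area)
```

The per-pixel loop in `symmetric_surround_mean` is adequate for small neighborhood sub-images such as the 5× windows; a full scene would call for a vectorized version.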

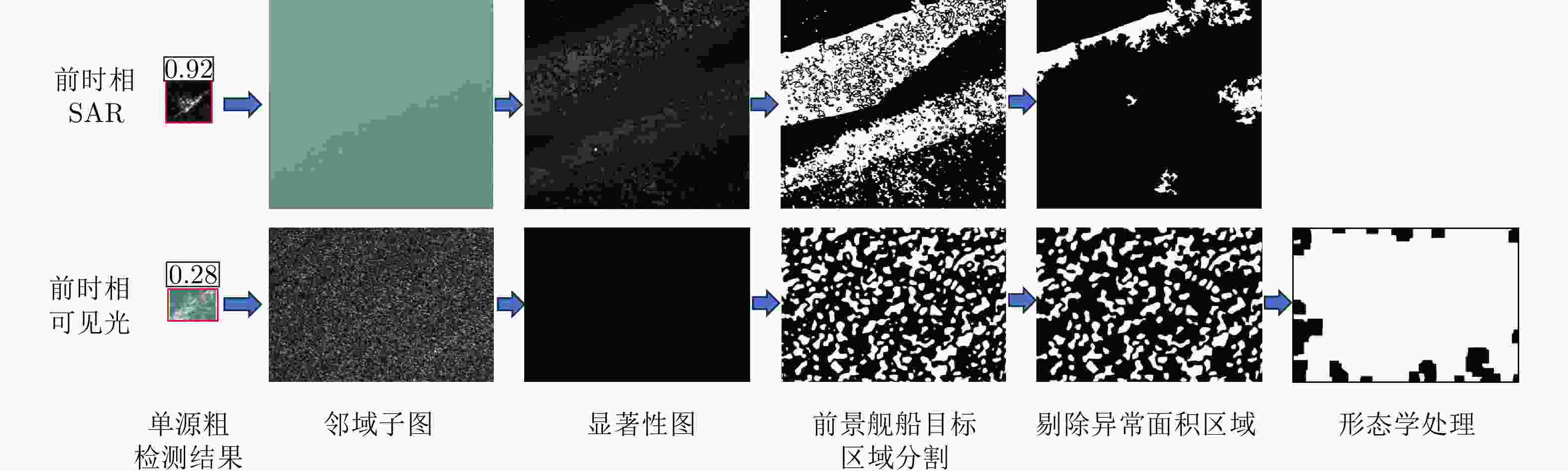

Algorithm 2. Detection of salient ships in later temporal SAR images assisted by prior temporal optical single-source detection results

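Input: optical single-source detection boxes $B^{\rm O} = \{[b_1^{\rm O}, c_1^{\rm O}], [b_2^{\rm O}, c_2^{\rm O}], \cdots, [b_n^{\rm O}, c_n^{\rm O}], \cdots, [b_N^{\rm O}, c_N^{\rm O}]\}$, where $b_n^{\rm O}$ is the $n$-th box in the optical single-source detection results and $c_n^{\rm O}$ is its confidence.

Output: detection results for the later temporal SAR image, $B^{\rm SC} = \{B^{\rm S}, B_1^{\rm SC}, B_2^{\rm SC}, \cdots, B_N^{\rm SC}\}$.

Steps:

1. From $b_n^{\rm O}$, generate the neighborhood sub-image ${\boldsymbol I}_n^{\rm S}$ of the corresponding region in the SAR image.
2. Apply Gaussian filtering to ${\boldsymbol I}_n^{\rm S}$ to obtain the smoothed image ${\boldsymbol I}_n^{{\rm S},s}$.
3. Compute the mean image ${\boldsymbol I}_n^{{\rm S},\mu}$ of ${\boldsymbol I}_n^{\rm S}$ according to Eq. (5).
4. Substitute the results of Steps 2 and 3 into Eq. (4) to compute the saliency map ${\boldsymbol S}_n^{\rm S}$ of ${\boldsymbol I}_n^{\rm S}$.
5. Segment ${\boldsymbol S}_n^{\rm S}$ with Otsu's method to obtain all candidate foreground target regions $T_n^{\rm S}$.
6. Compute the area of each foreground region and remove small blob noise and abnormally large connected clutter regions from $T_n^{\rm S}$.
7. Apply morphological processing to $T_n^{\rm S}$ from Step 6 so that the multiple, weakly associated scattering regions of a single target become one complete connected region.
8. According to Eq. (8), use $c_n^{\rm O}$ to compute the confidence score and the bounding rectangle of each foreground region in $T_n^{\rm S}$ after Step 7, giving the detection boxes $B_n^{\rm SC}$ of the current neighborhood sub-image.
9. Repeat Steps 1 to 8 until all $N$ boxes in $B^{\rm O}$ have been processed, yielding $N$ groups of neighborhood sub-image detection boxes; merge them with the SAR single-source detection boxes $B^{\rm S}$ to produce the detection results of the later temporal SAR image assisted by the prior temporal optical single-source results, $B^{\rm SC} = \{B^{\rm S}, B_1^{\rm SC}, B_2^{\rm SC}, \cdots, B_N^{\rm SC}\}$.
10. Perform internal box fusion and NMS on $B^{\rm SC}$.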
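Two steps are specific to Algorithm 2 or shared only at its end: the morphological processing of Step 7 and the merge, internal box fusion and NMS of Steps 9 and 10. The sketch below assumes the morphological processing is a binary closing with a square structuring element, and that internal box fusion can be approximated by discarding a box when the overlap covers most of the smaller of the two boxes. Both are stand-ins, since this section does not define those operations; `binary_closing`, `fuse_and_nms`, `iou_thr` and `contain_thr` are hypothetical names and values.

```python
import numpy as np


def _neighborhood_stack(mask, r):
    """All (2r+1)^2 shifted copies of a boolean mask, zero-padded at the border."""
    h, w = mask.shape
    padded = np.pad(mask, r, mode="constant", constant_values=False)
    return [padded[r + dy:r + dy + h, r + dx:r + dx + w]
            for dy in range(-r, r + 1) for dx in range(-r, r + 1)]


def binary_closing(mask, r=1):
    """Dilation then erosion with a (2r+1) x (2r+1) square, r >= 1 (Step 7).

    Bridges gaps of up to 2r pixels between scattering blobs of one ship."""
    m = np.pad(np.asarray(mask, dtype=bool), r)  # margin so closing never erodes the border
    dilated = np.logical_or.reduce(_neighborhood_stack(m, r))
    closed = np.logical_and.reduce(_neighborhood_stack(dilated, r))
    return closed[r:-r, r:-r]


def _area(b):
    return max(0, b[2] - b[0]) * max(0, b[3] - b[1])


def _intersection(a, b):
    iw = min(a[2], b[2]) - max(a[0], b[0])
    ih = min(a[3], b[3]) - max(a[1], b[1])
    return max(0, iw) * max(0, ih)


def fuse_and_nms(boxes, iou_thr=0.5, contain_thr=0.8):
    """Greedy NMS over merged ((x1, y1, x2, y2), score) pairs (Steps 9 and 10).

    A box is dropped if its IoU with a kept, higher-scoring box exceeds iou_thr, or if
    the overlap covers at least contain_thr of the smaller box (hypothetical stand-in
    for internal box fusion)."""
    kept = []
    for box, score in sorted(boxes, key=lambda b: b[1], reverse=True):
        redundant = False
        for kbox, _ in kept:
            inter = _intersection(box, kbox)
            union = _area(box) + _area(kbox) - inter
            smaller = min(_area(box), _area(kbox))
            if (union and inter / union > iou_thr) or (smaller and inter / smaller >= contain_thr):
                redundant = True
                break
        if not redundant:
            kept.append((box, score))
    return kept
```

In the setting of Algorithm 2, the input to `fuse_and_nms` would be the SAR single-source boxes concatenated with the $N$ groups of neighborhood boxes, e.g. `fuse_and_nms(sar_boxes + neighborhood_boxes)` with hypothetical list names; the highest-scoring box survives whenever boxes overlap heavily or one lies inside another.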

Table 2. Performance comparison of sea-land segmentation methods (%)

图像类型 方法 PL RL LF1 AccL 可见光 OTSU 92.32 71.10 80.33 87.96 K-means 98.98 70.13 82.09 89.42 SAR OTSU 94.44 74.57 83.33 89.69 K-means 99.04 70.06 82.07 89.42 可见光+SAR

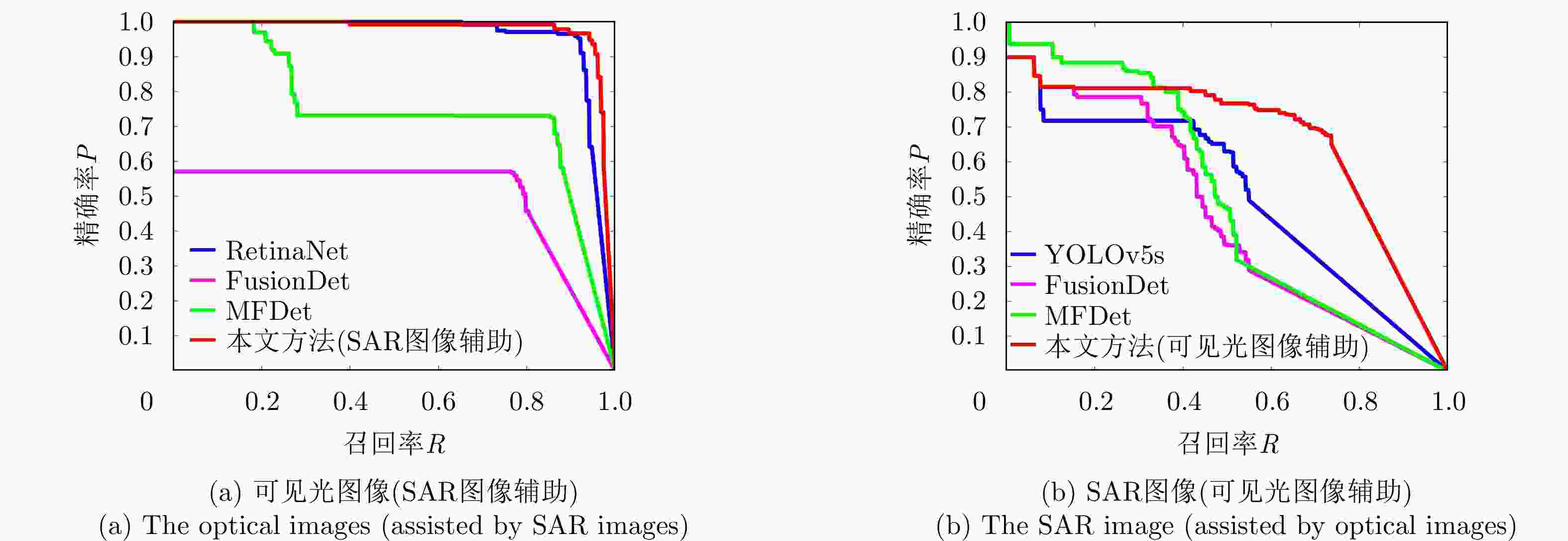

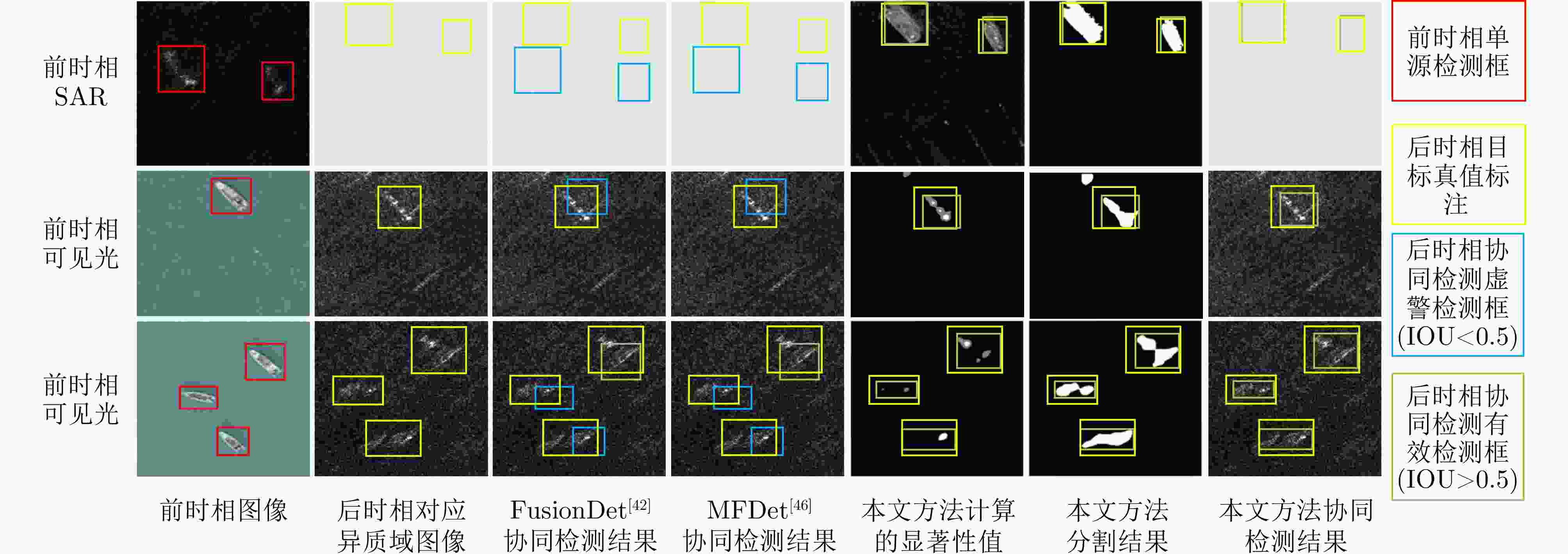

协同OTSU+OTSU 93.07 89.12 91.05 93.94 K-means+K-means 94.66 89.82 92.18 94.73 本文方法 94.20 90.93 92.54 94.93 表 3 不同检测方法基于可见光图像(SAR图像辅助)和SAR图像(可见光图像辅助)的检测性能比较(%)
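The columns of Table 2 can be computed from a predicted and a ground-truth land mask. The sketch assumes, consistent with the color coding of Figure 12, that the subscript L marks land as the positive class, so that $P_{\rm L}$, $R_{\rm L}$ and $F1_{\rm L}$ are land precision, recall and F1, and $Acc_{\rm L}$ is overall pixel accuracy; `land_metrics` is a hypothetical name.

```python
import numpy as np


def land_metrics(pred_land, true_land):
    """Return (P_L, R_L, F1_L, Acc_L) in percent, with land as the positive class."""
    pred = np.asarray(pred_land, dtype=bool)
    true = np.asarray(true_land, dtype=bool)
    tp = int(np.sum(pred & true))    # predicted land, truly land (white in Figure 12)
    fp = int(np.sum(pred & ~true))   # predicted land, actually sea (yellow)
    fn = int(np.sum(~pred & true))   # predicted sea, actually land (red)
    tn = int(np.sum(~pred & ~true))  # predicted sea, truly sea (black)
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    acc = (tp + tn) / pred.size
    return tuple(100.0 * v for v in (p, r, f1, acc))
```

As a consistency check on the table, the optical OTSU row satisfies $F1_{\rm L} = 2P_{\rm L}R_{\rm L}/(P_{\rm L}+R_{\rm L})$: 2 × 92.32 × 71.10 / (92.32 + 71.10) ≈ 80.33.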

Table 3. Performance comparison of detection methods based on optical images (assisted by SAR images) and SAR images (assisted by optical images) (%)

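| Image type | Method | P | R | F1 | AP50 |
|---|---|---|---|---|---|
| Optical (later temporal) | RetinaNet | 95.7 | 91.5 | 93.6 | 95.3 |
| Optical (later temporal) | FusionDet | 56.5 | 77.1 | 65.2 | 50.1 |
| Optical (later temporal) | MFDet | 72.5 | 85.6 | 78.5 | 74.4 |
| Optical (later temporal) | Proposed method (assisted by prior temporal SAR image) | 94.7 | 94.1 | 94.4 | 97.2 |
| SAR (later temporal) | YOLOv5s | 62.6 | 50.0 | 55.6 | 50.7 |
| SAR (later temporal) | FusionDet | 51.6 | 43.1 | 46.9 | 44.7 |
| SAR (later temporal) | MFDet | 68.6 | 41.7 | 51.8 | 49.7 |
| SAR (later temporal) | Proposed method (assisted by prior temporal optical image) | 67.5 | 72.3 | 69.8 | 66.9 |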

Table 4. Ablation study on sea-land segmentation and cooperative detection of multisource heterogeneous remote sensing images (%)

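| Image type | Sea-land segmentation | Cooperative detection of multisource heterogeneous images | P | R | F1 | AP50 |
|---|---|---|---|---|---|---|
| Optical (later temporal) | – | – | 95.7 | 91.5 | 93.6 | 95.3 |
| Optical (later temporal) | √ | – | 99.0 | 92.2 | 95.5 | 96.5 (+1.2) |
| Optical (later temporal) | – | √ | 93.4 | 94.1 | 93.8 | 96.6 (+1.3) |
| Optical (later temporal) | √ | √ | 94.7 | 94.1 | 94.4 | 97.2 (+1.9) |
| SAR (later temporal) | – | – | 62.6 | 50.0 | 55.6 | 50.7 |
| SAR (later temporal) | √ | – | 73.6 | 51.4 | 60.5 | 58.5 (+7.8) |
| SAR (later temporal) | – | √ | 58.3 | 68.1 | 62.8 | 59.1 (+8.4) |
| SAR (later temporal) | √ | √ | 67.5 | 72.3 | 69.8 | 66.9 (+16.2) |

Note: values in parentheses are the AP50 improvements over the baseline without either step.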

Table 5. Ablation study on single-source detectors (%)

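| Image type | Optical single-source detector | SAR single-source detector | P | R | F1 | AP50 |
|---|---|---|---|---|---|---|
| Optical (later temporal) | RetinaNet | RetinaNet | 84.9 | 93.5 | 90.0 | 92.6 |
| Optical (later temporal) | YOLOv5s | YOLOv5s | 84.2 | 80.1 | 82.1 | 78.9 |
| Optical (later temporal) | YOLOv5s | RetinaNet | 84.3 | 77.1 | 80.5 | 79.5 |
| Optical (later temporal) | RetinaNet | YOLOv5s | 94.7 | 94.1 | 94.4 | 97.2 |
| SAR (later temporal) | RetinaNet | RetinaNet | 61.6 | 74.3 | 67.4 | 61.6 |
| SAR (later temporal) | YOLOv5s | YOLOv5s | 70.3 | 69.2 | 69.8 | 65.9 |
| SAR (later temporal) | YOLOv5s | RetinaNet | 67.4 | 70.4 | 68.9 | 62.6 |
| SAR (later temporal) | RetinaNet | YOLOv5s | 67.5 | 72.3 | 69.8 | 66.9 |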

