Fall Detection Based on Deep Learning Fusing Ultrawideband Radar Spectrograms (in English)

-

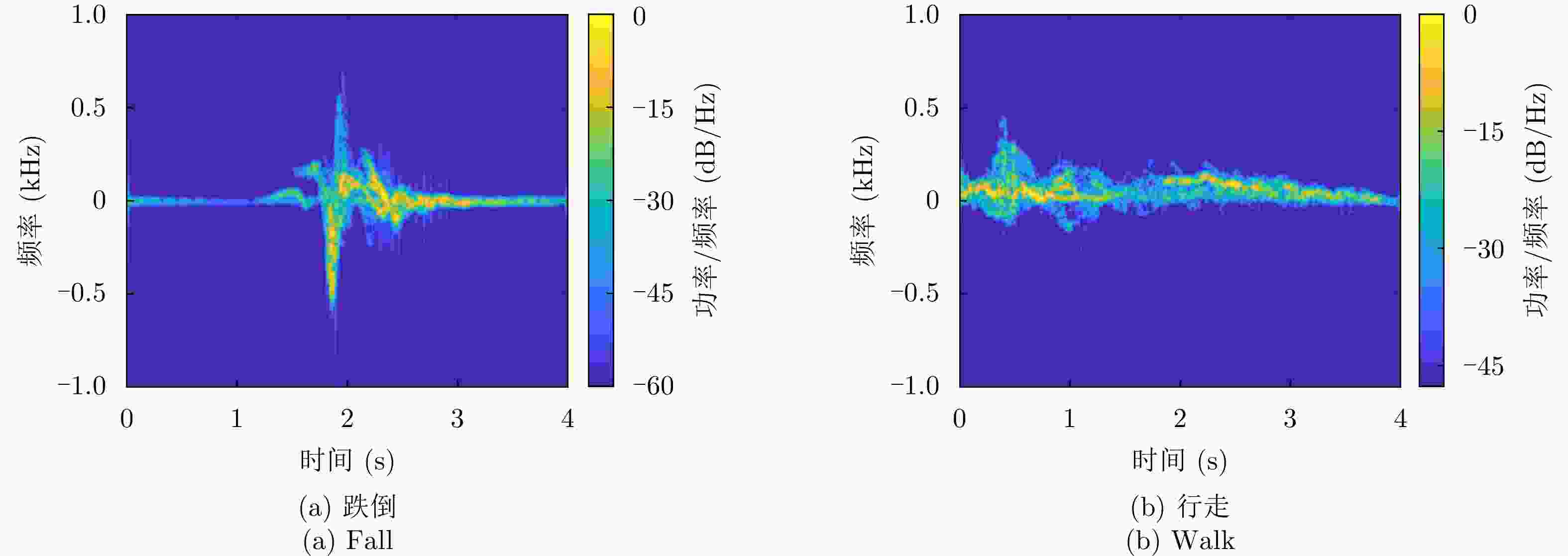

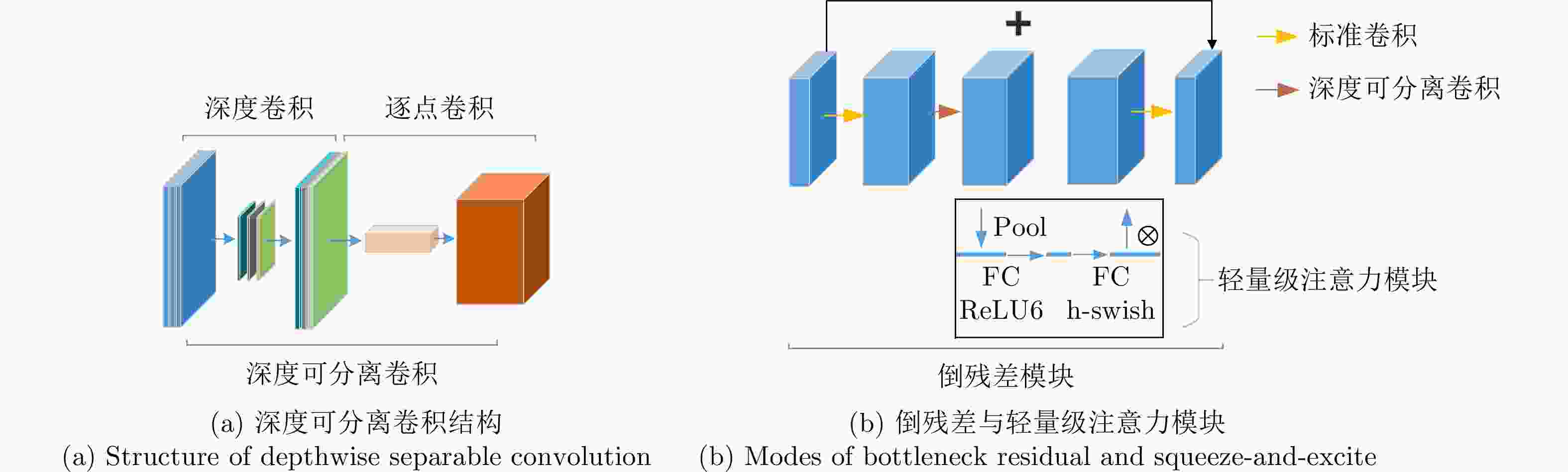

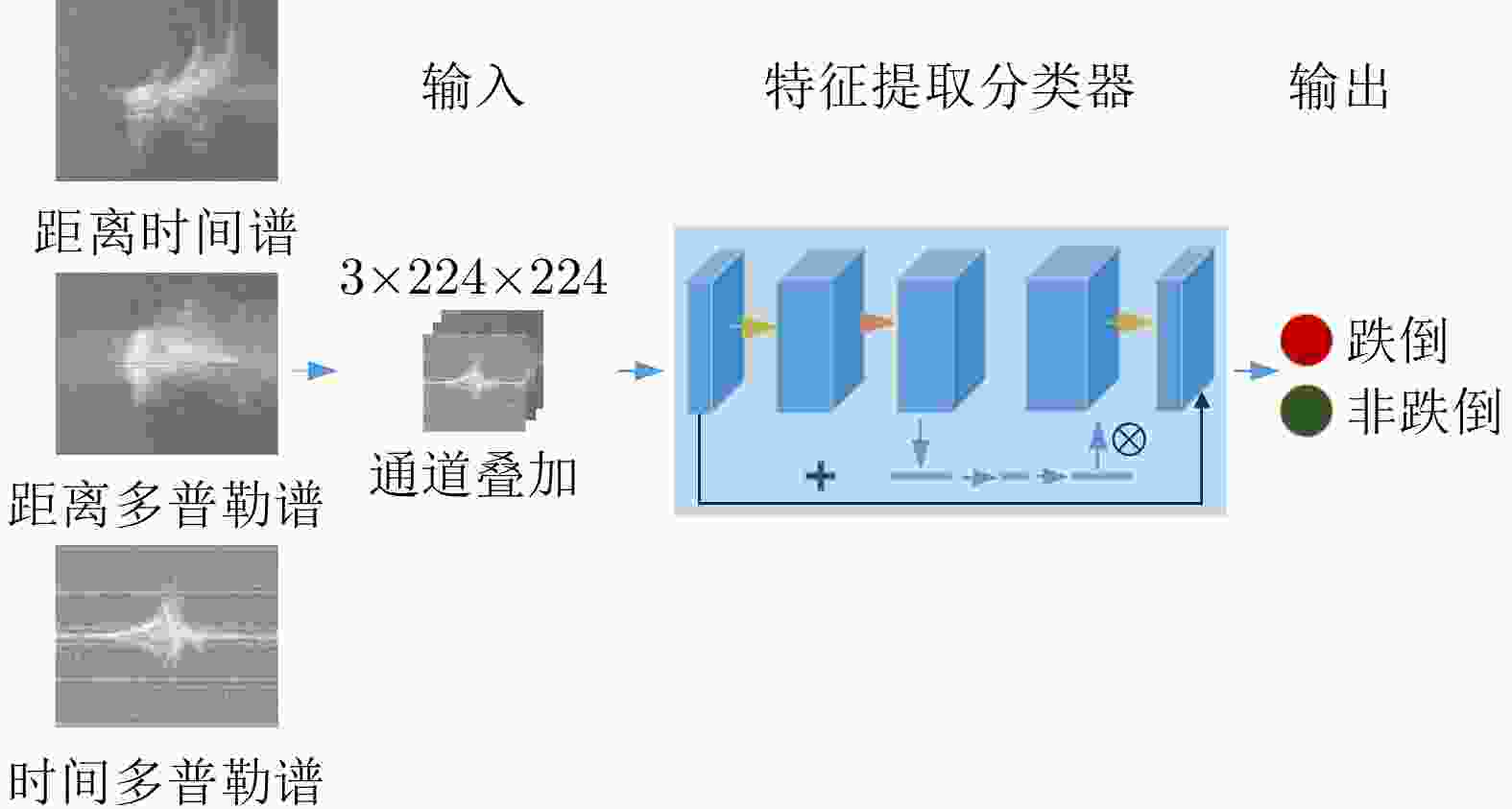

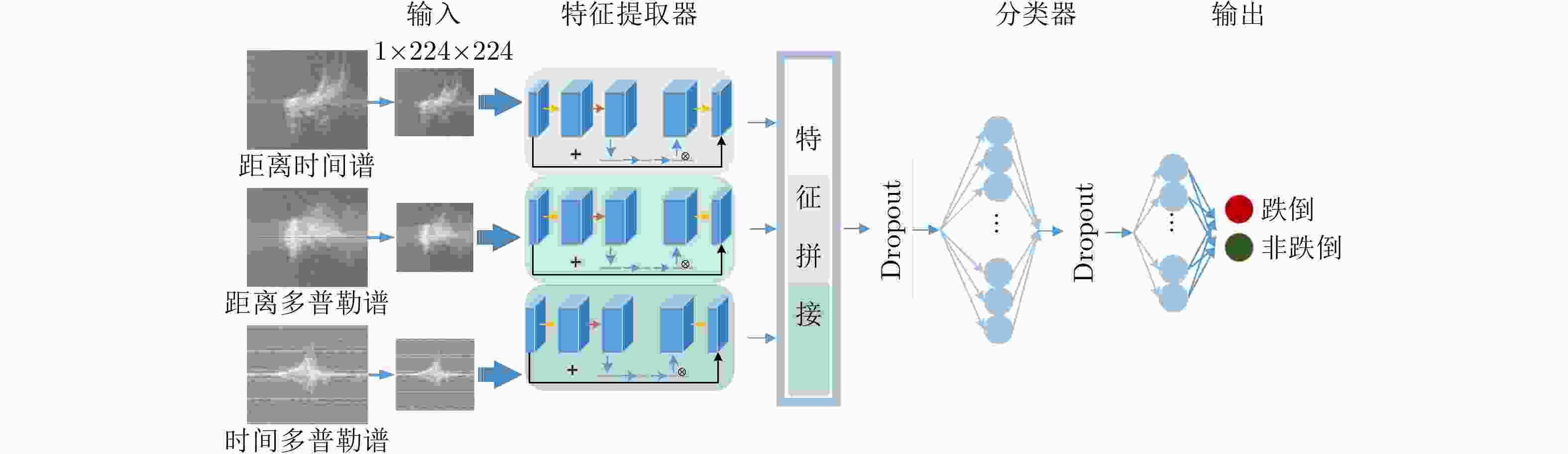

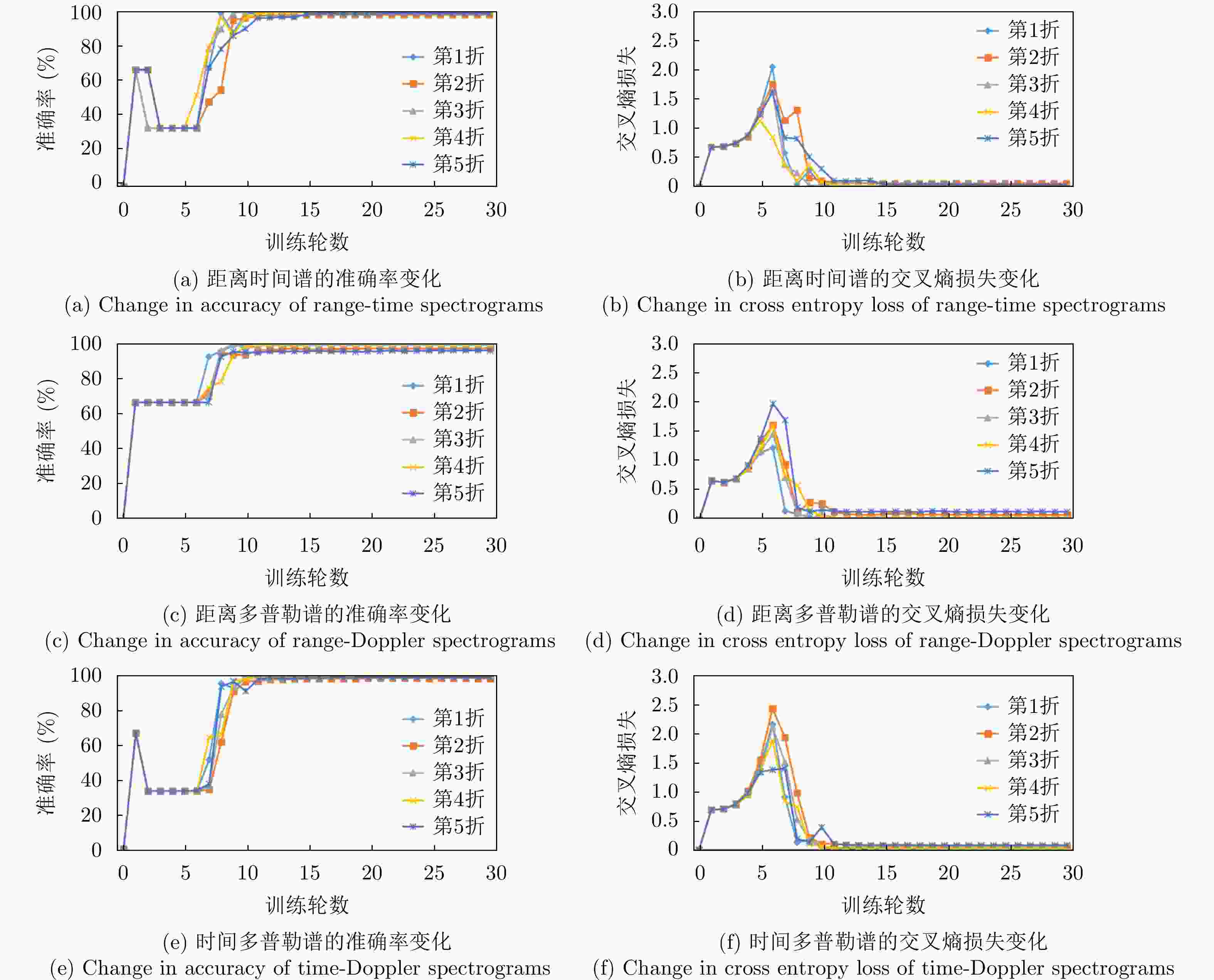

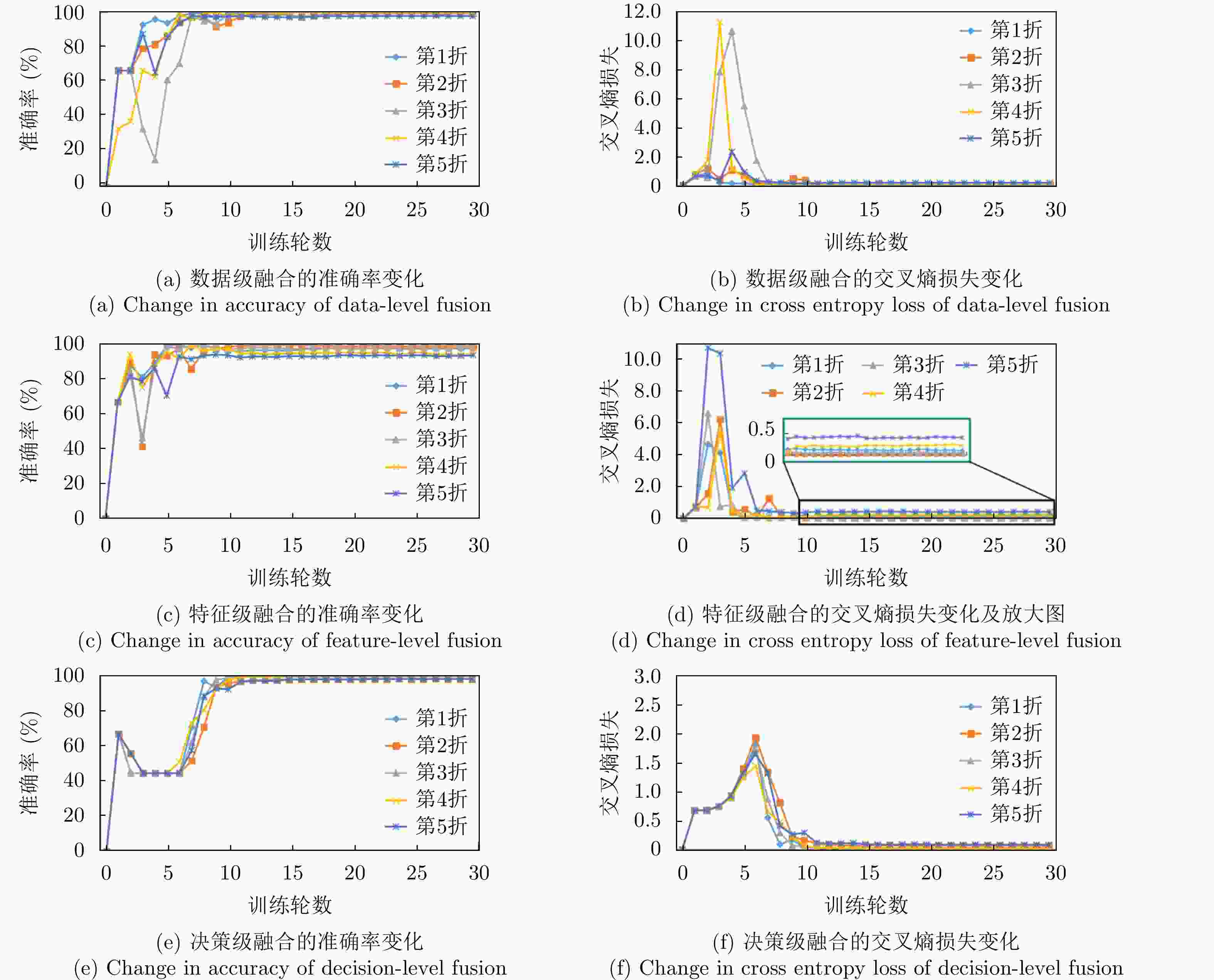

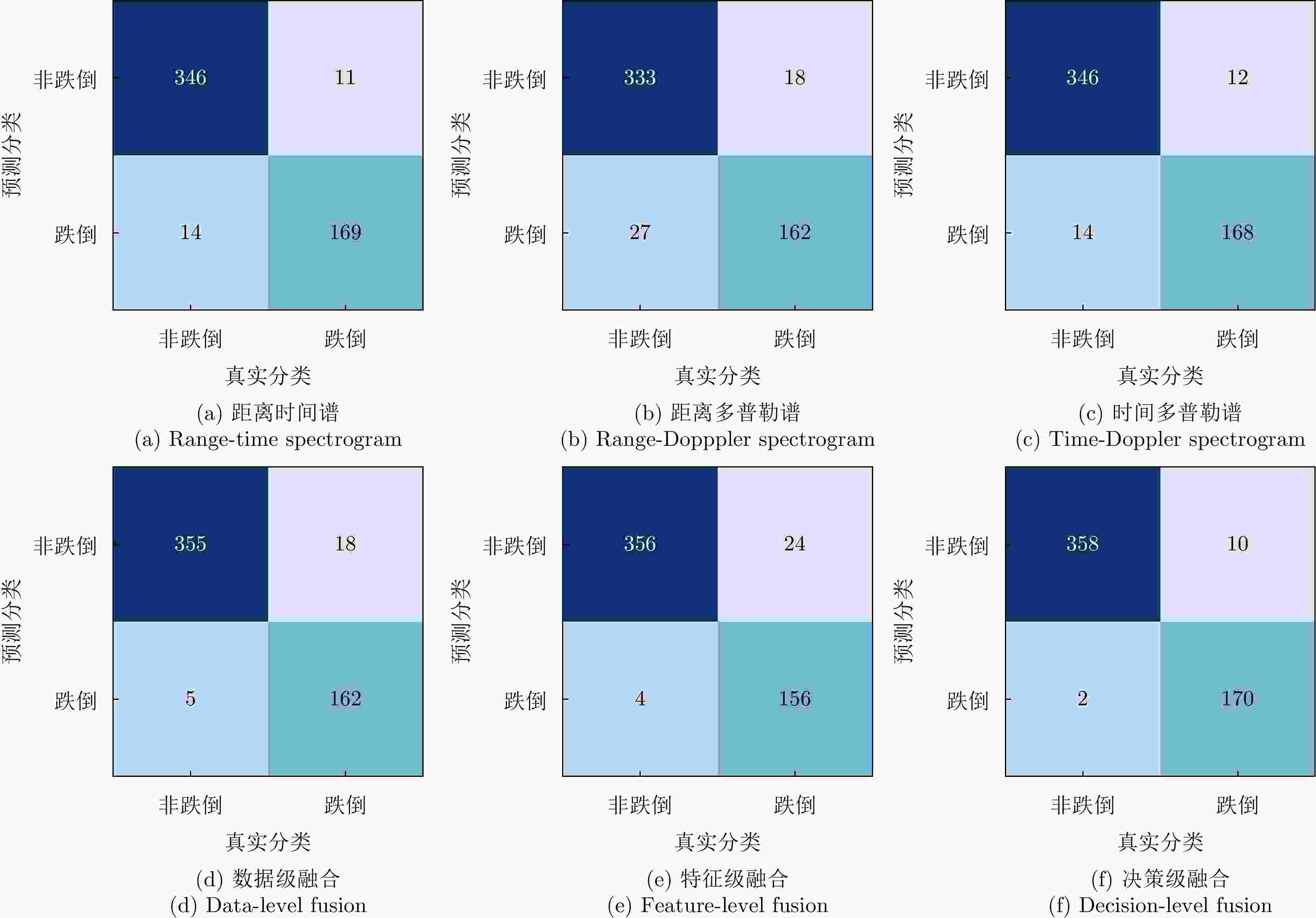

摘要: 相对于窄带多普勒雷达,超宽带雷达能够同时获取目标的距离和多普勒信息,更利于行为识别。为了提高跌倒行为的识别性能,该文采用调频连续波超宽带雷达在两个真实的室内复杂场景下采集36名受试者的日常行为和跌倒的回波数据,建立了动作种类丰富的多场景跌倒检测数据集;通过预处理,获取受试者的距离时间谱、距离多普勒谱和时间多普勒谱;基于MobileNet-V3轻量级网络,设计了数据级、特征级和决策级3种雷达图谱深度学习融合网络。统计分析结果表明,该文提出的决策级融合方法相对于仅用单种图谱、数据级和特征级融合的方法,能够提高跌倒检测的性能(显著性检验方法得到的所有P值<0.003)。决策级融合方法的5折交叉验证的准确率为

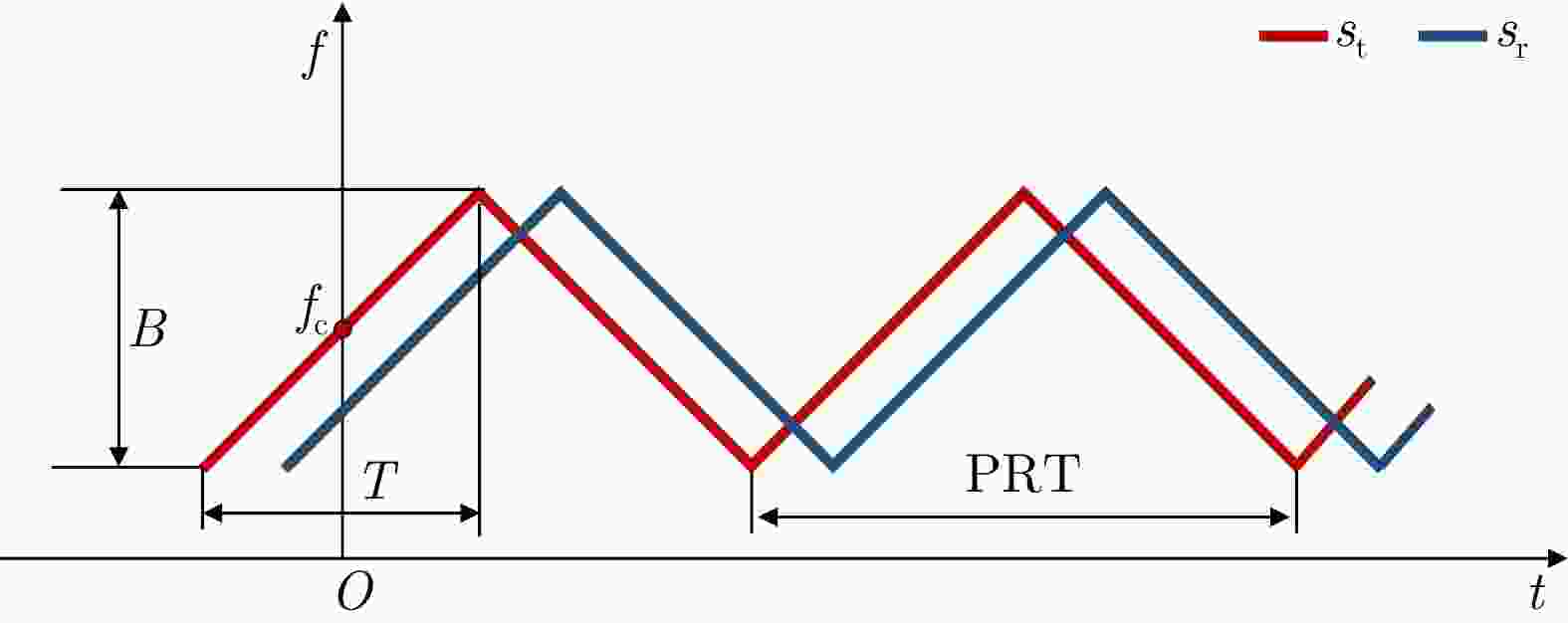

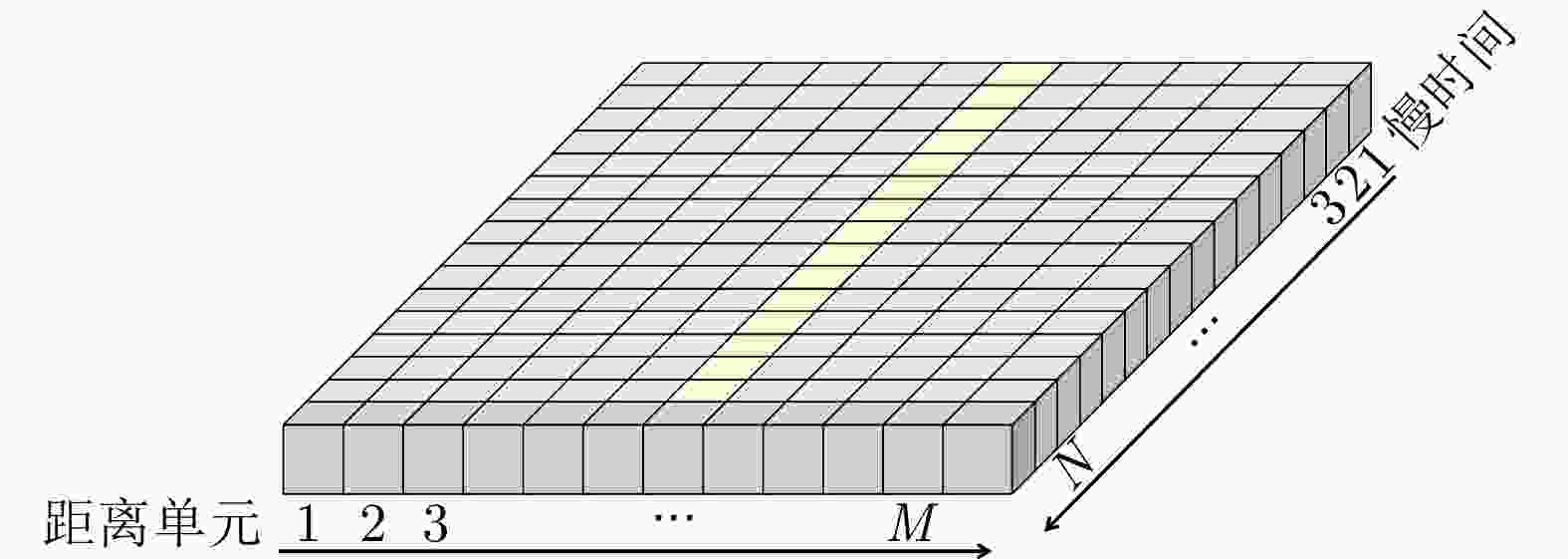

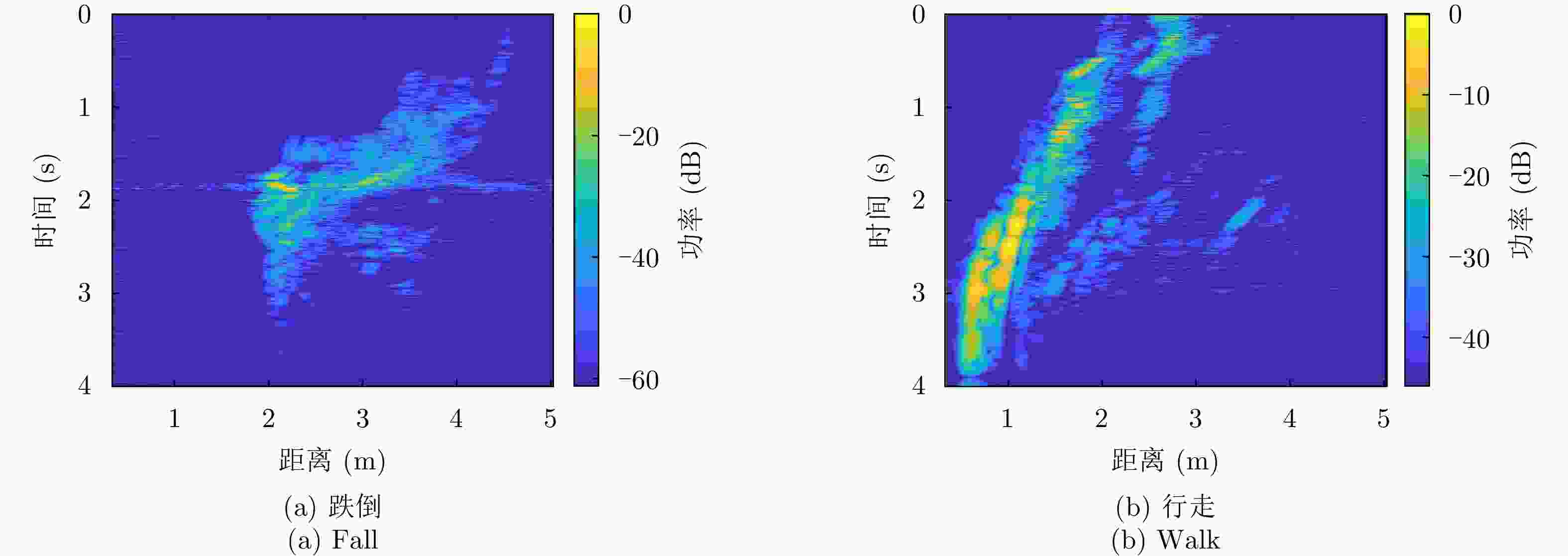

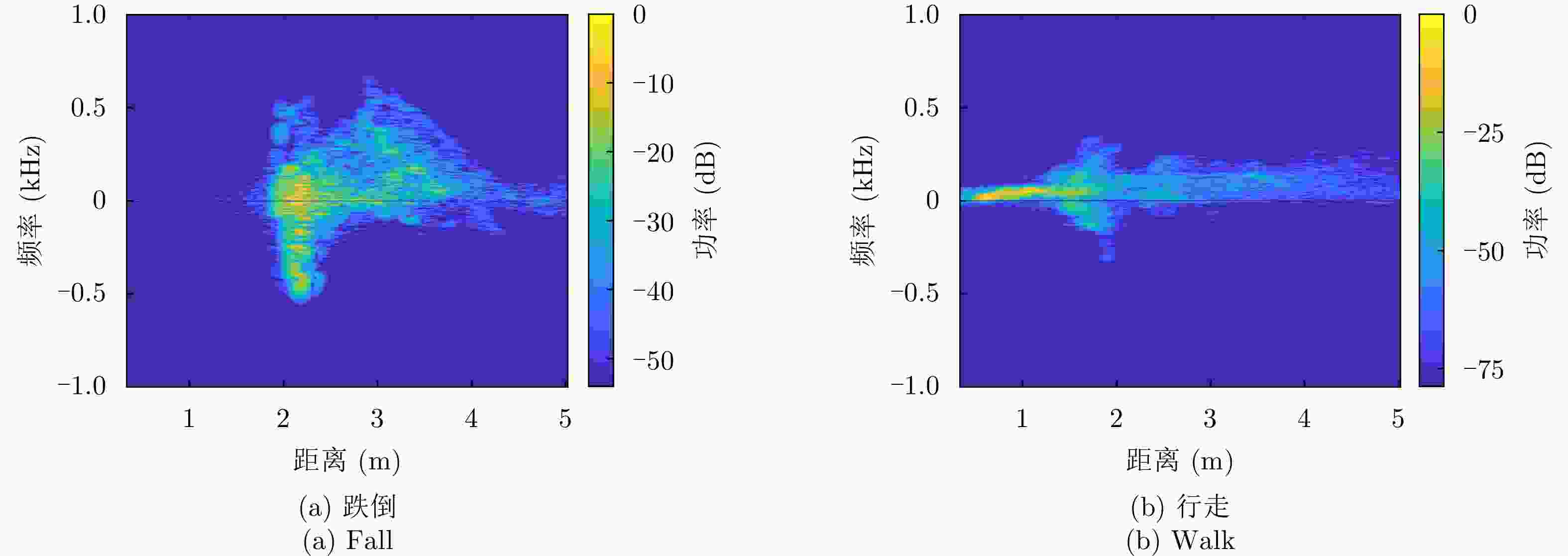

0.9956 ,在新场景下测试的准确率为0.9778 ,具有良好的泛化能力。Abstract: Compared with narrowband Doppler radar, ultrawideband radar can simultaneously acquire the range and Doppler information of targets, which is more beneficial for behavior recognition. To improve the recognition performance of fall behavior, frequency-modulated continuous-wave ultrawideband (UWB) radar was applied to collect daily behavior and fall data of 36 subjects in two real indoor complex scenes, and a multi-scene fall detection dataset was established with various action types; the range-time, time-Doppler, and range-Doppler spectrograms of the subjects were obtained after preprocessing radar data; based on the MobileNet-V3 lightweight network, three types of deep learning fusion networks at the data level, feature level, and decision level were designed for the radar spectrograms, respectively. A statistical analysis shows that the decision level fusion method proposed in this paper can improve fall detection performance compared with those using one type of spectrogram, the data level and the feature level fusion methods (all P values by significance test method are less than 0.003). The accuracies of 5-fold cross-validation and testing in the new scene of the decision level fusion method are0.9956 and0.9778 , respectively, which indicates the good generalization ability of the proposed method.-
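As an illustration of the decision-level idea described in the abstract, the following minimal Python sketch combines the outputs of three single-spectrogram classifiers. The abstract does not state the combination rule, so averaging the softmax probabilities is an assumption made here, and the logit values and the names `softmax` and `fuse_decisions` are hypothetical.

```python
import math
from typing import Sequence


def softmax(logits: Sequence[float]) -> list[float]:
    """Convert raw class scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_decisions(per_branch_logits: Sequence[Sequence[float]]) -> list[float]:
    """Average the class probabilities of all branches (assumed fusion rule)."""
    probs = [softmax(logits) for logits in per_branch_logits]
    n_classes = len(probs[0])
    return [sum(p[c] for p in probs) / len(probs) for c in range(n_classes)]


# Hypothetical logits in the order [non-fall, fall], one pair per spectrogram branch.
branch_logits = {
    "range_time": [0.2, 1.5],
    "range_doppler": [0.9, 0.4],
    "time_doppler": [-0.3, 1.1],
}

fused = fuse_decisions(list(branch_logits.values()))
predicted = "fall" if fused[1] > fused[0] else "non-fall"
print(fused, predicted)
```

In this toy input the range-Doppler branch alone would vote for "non-fall", but the averaged probabilities still favor "fall", which is the kind of error correction a decision-level scheme is meant to provide.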

Keywords:

- Ultrawideband radar
- Deep learning
- Fall detection
- Data fusion
- Lightweight network

Table 1. Comparison of size and time consumption of MobileNet-V3 network and fusion networks

| Network type | Training time (h) | Network size (MB) | Average testing time (s) |
| --- | --- | --- | --- |
| MobileNet-V3 (range-time spectrograms) | 0.2833 | 5.9082 | 0.0035 |
| Data-level | 2.3500 | 5.9092 | 0.0030 |
| Feature-level | 1.5447 | 11.9072 | 0.0046 |
| Decision-level | 0.8500 | 17.7246 | 0.1030 |

Table 2. Comparison of evaluation indicators for 5-fold cross-validation of fall detection (Scene 1)

| Category | Model | Accuracy | Precision | Sensitivity | Specificity | F1-score |
| --- | --- | --- | --- | --- | --- | --- |
| Single spectrogram | Range-time spectrogram | 0.9923 | 0.9899 | 0.9889 | 0.9950 | 0.9894 |
| Single spectrogram | Range-Doppler spectrogram | 0.9822 | 0.9712 | 0.9756 | 0.9856 | 0.9734 |
| Single spectrogram | Time-Doppler spectrogram | 0.9893 | 0.9834 | 0.9844 | 0.9917 | 0.9839 |
| Fusion method | Data-level fusion | 0.9933 | 0.9911 | 0.9889 | 0.9956 | 0.9900 |
| Fusion method | Feature-level fusion | 0.9866 | 0.9757 | 0.9844 | 0.9878 | 0.9801 |
| Fusion method | Decision-level fusion | 0.9956 | 0.9933 | 0.9933 | 0.9967 | 0.9933 |

Table 3. Comparison of fall detection performance between different models (Scene 2)

| Category | Model | Accuracy | Precision | Sensitivity | Specificity | F1-score |
| --- | --- | --- | --- | --- | --- | --- |
| Single spectrogram | Range-time spectrogram | 0.9537 | 0.9235 | 0.9389 | 0.9611 | 0.9313 |
| Single spectrogram | Range-Doppler spectrogram | 0.9167 | 0.8571 | 0.9000 | 0.9250 | 0.8781 |
| Single spectrogram | Time-Doppler spectrogram | 0.9519 | 0.9231 | 0.9333 | 0.9611 | 0.9282 |
| Fusion method | Data-level fusion | 0.9574 | 0.9701 | 0.9000 | 0.9861 | 0.9337 |
| Fusion method | Feature-level fusion | 0.9482 | 0.9750 | 0.8667 | 0.9889 | 0.9177 |
| Fusion method | Decision-level fusion | 0.9778 | 0.9883 | 0.9444 | 0.9944 | 0.9659 |
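The indicators in Tables 2 and 3 (accuracy, precision, sensitivity, specificity, and F1-score) can be computed from a binary confusion matrix using the standard definitions, assuming a fall is treated as the positive class. The Python sketch below shows the arithmetic; the counts are illustrative values chosen only to land near the decision-level row of Table 3, since the confusion matrices themselves are not reported here, and the function name `fall_metrics` is hypothetical.

```python
def fall_metrics(tp: int, fn: int, fp: int, tn: int) -> dict[str, float]:
    """Standard binary-classification indicators with 'fall' as the positive class."""
    accuracy = (tp + tn) / (tp + fn + fp + tn)
    precision = tp / (tp + fp)        # fraction of predicted falls that are real falls
    sensitivity = tp / (tp + fn)      # fraction of real falls that are detected
    specificity = tn / (tn + fp)      # fraction of non-falls correctly rejected
    f1_score = 2 * precision * sensitivity / (precision + sensitivity)
    return {
        "accuracy": accuracy,
        "precision": precision,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "f1_score": f1_score,
    }


# Illustrative counts (an assumption, not data from the paper):
# 180 fall samples and 360 non-fall samples.
metrics = fall_metrics(tp=170, fn=10, fp=2, tn=358)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

Sensitivity and specificity are worth reading together in a fall detector: a missed fall lowers sensitivity, whereas a false alarm lowers specificity and precision.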

