Target Recognition Method for Multi-aspect Synthetic Aperture Radar Images Based on EfficientNet and BiGRU


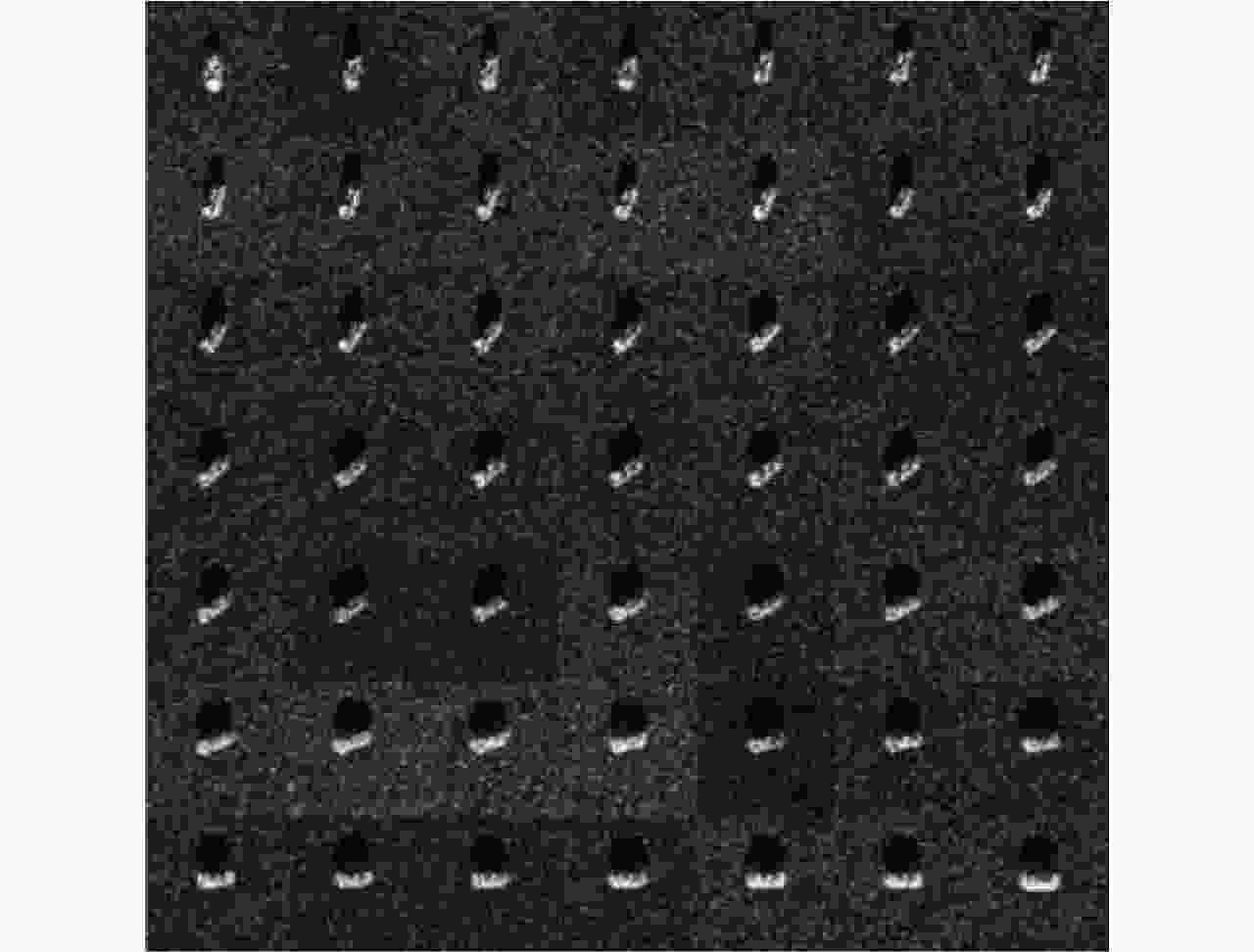

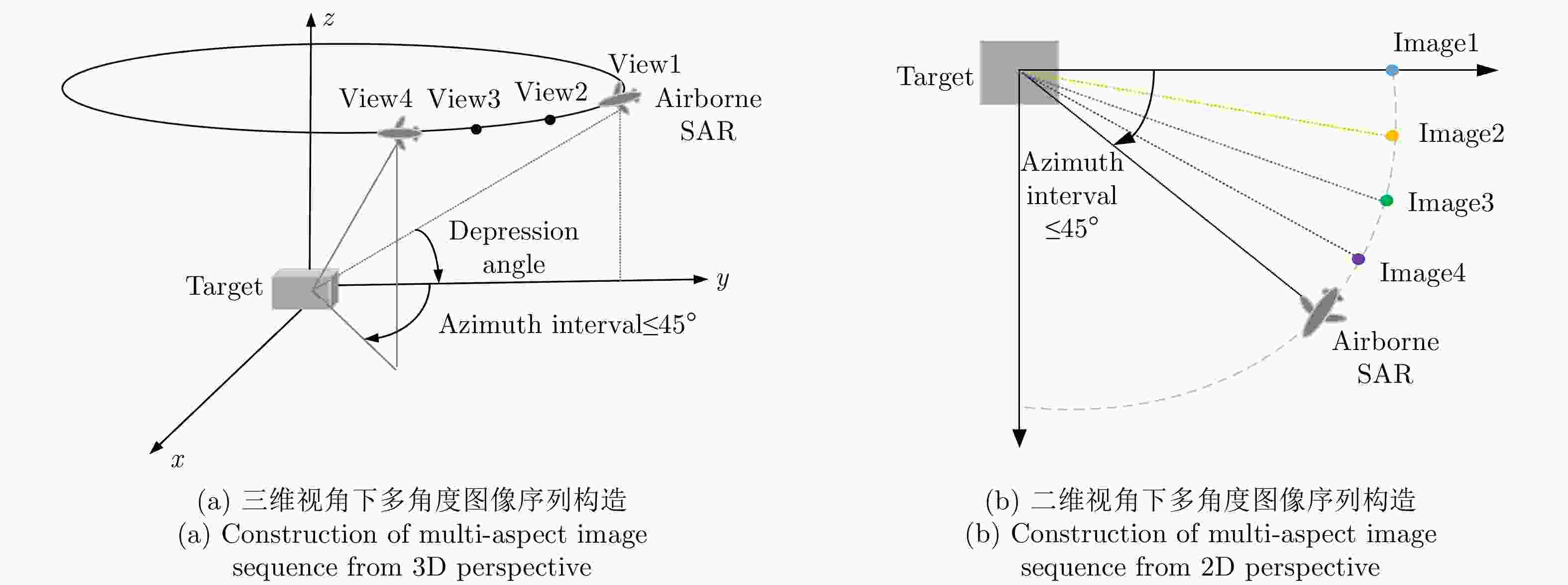

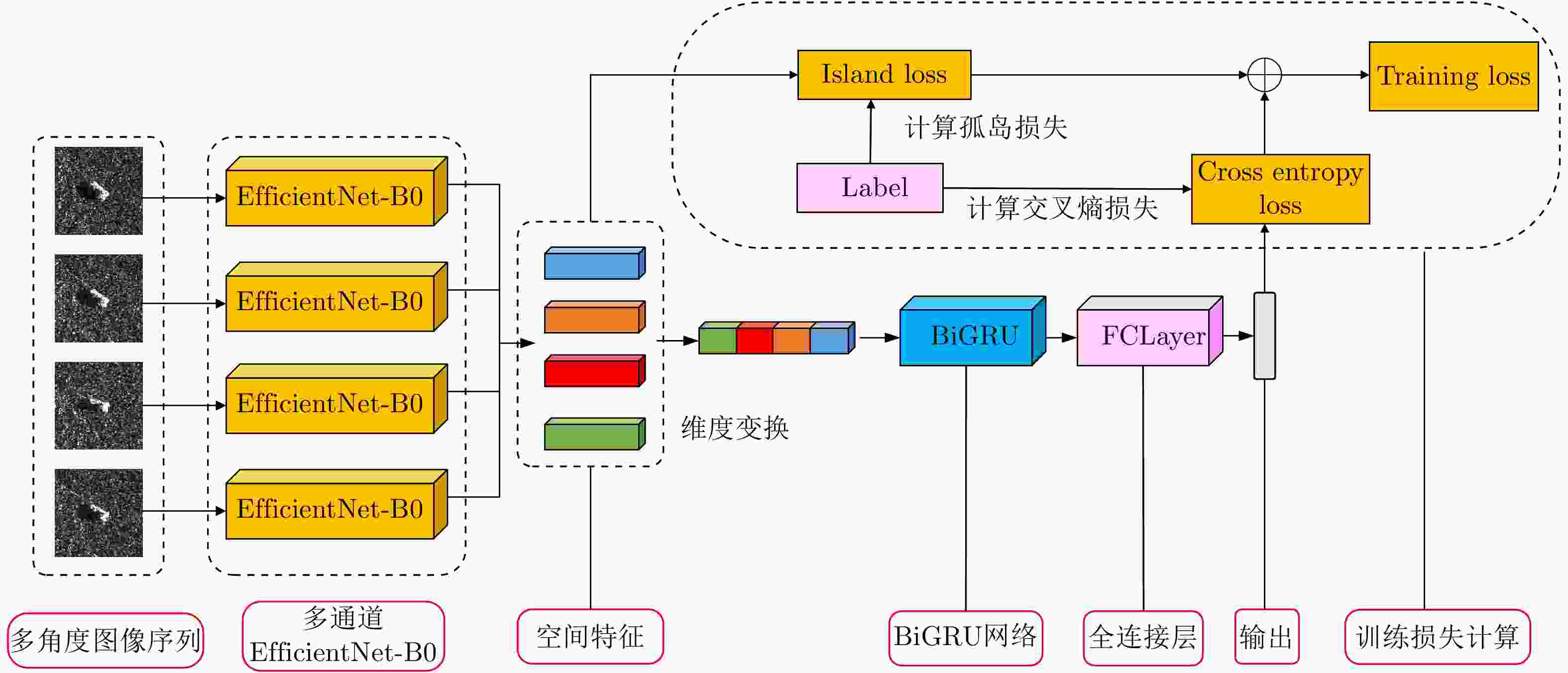

摘要: 合成孔径雷达(SAR)的自动目标识别(ATR)技术目前已广泛应用于军事和民用领域。SAR图像对成像的方位角极其敏感,同一目标在不同方位角下的SAR图像存在一定差异,而多方位角的SAR图像序列蕴含着更加丰富的分类识别信息。因此,该文提出一种基于EfficientNet和BiGRU的多角度SAR目标识别模型,并使用孤岛损失来训练模型。该方法在MSTAR数据集10类目标识别任务中可以达到100%的识别准确率,对大俯仰角(擦地角)下成像、存在版本变体、存在配置变体的3种特殊情况下的SAR目标分别达到了99.68%, 99.95%, 99.91%的识别准确率。此外,该方法在小规模的数据集上也能达到令人满意的识别准确率。实验结果表明,该方法在MSTAR的大部分数据集上识别准确率均优于其他多角度SAR目标识别方法,且具有一定的鲁棒性。

-

关键词:

- 合成孔径雷达 /

- 自动目标识别 /

- 多角度识别 /

- EfficientNet

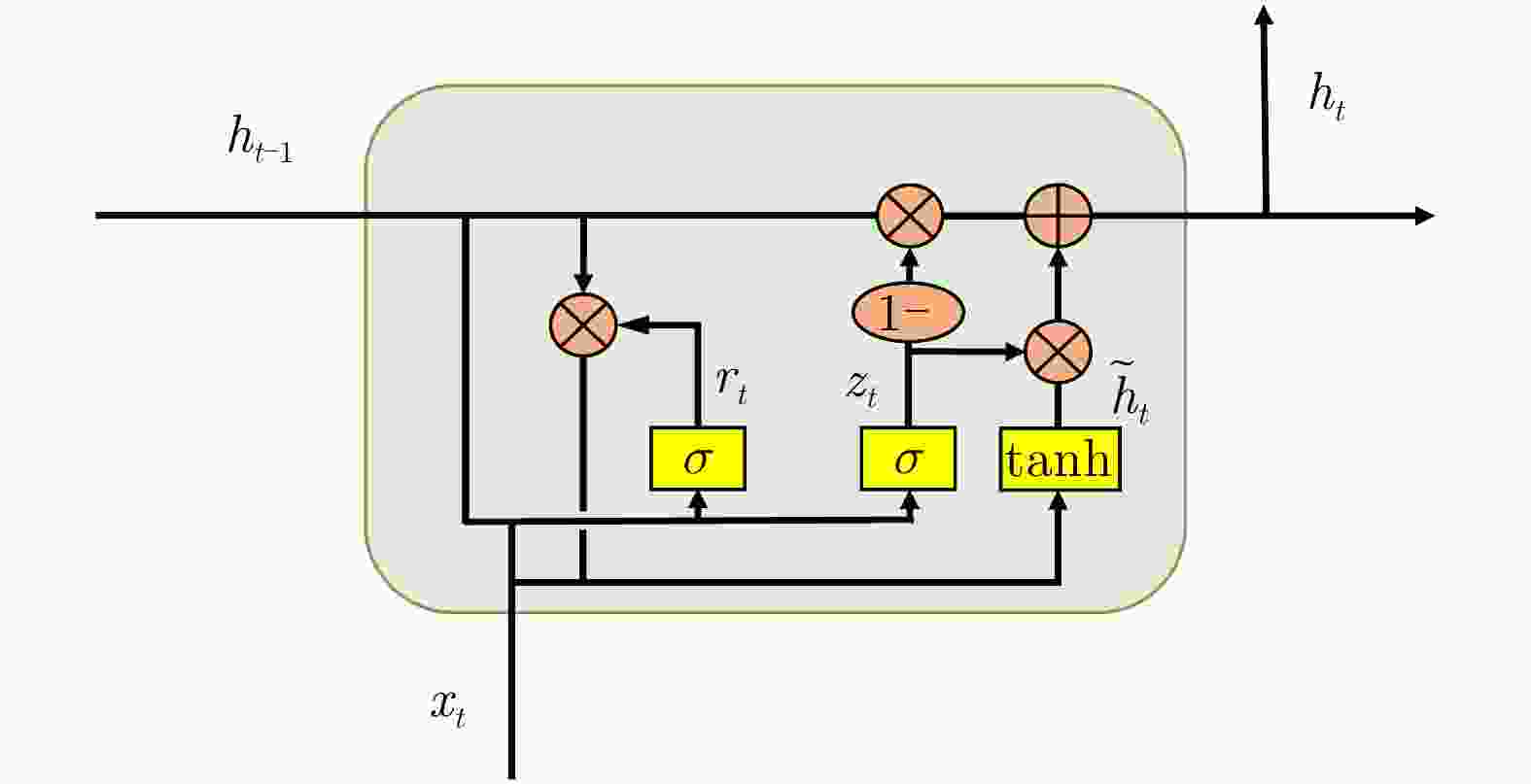

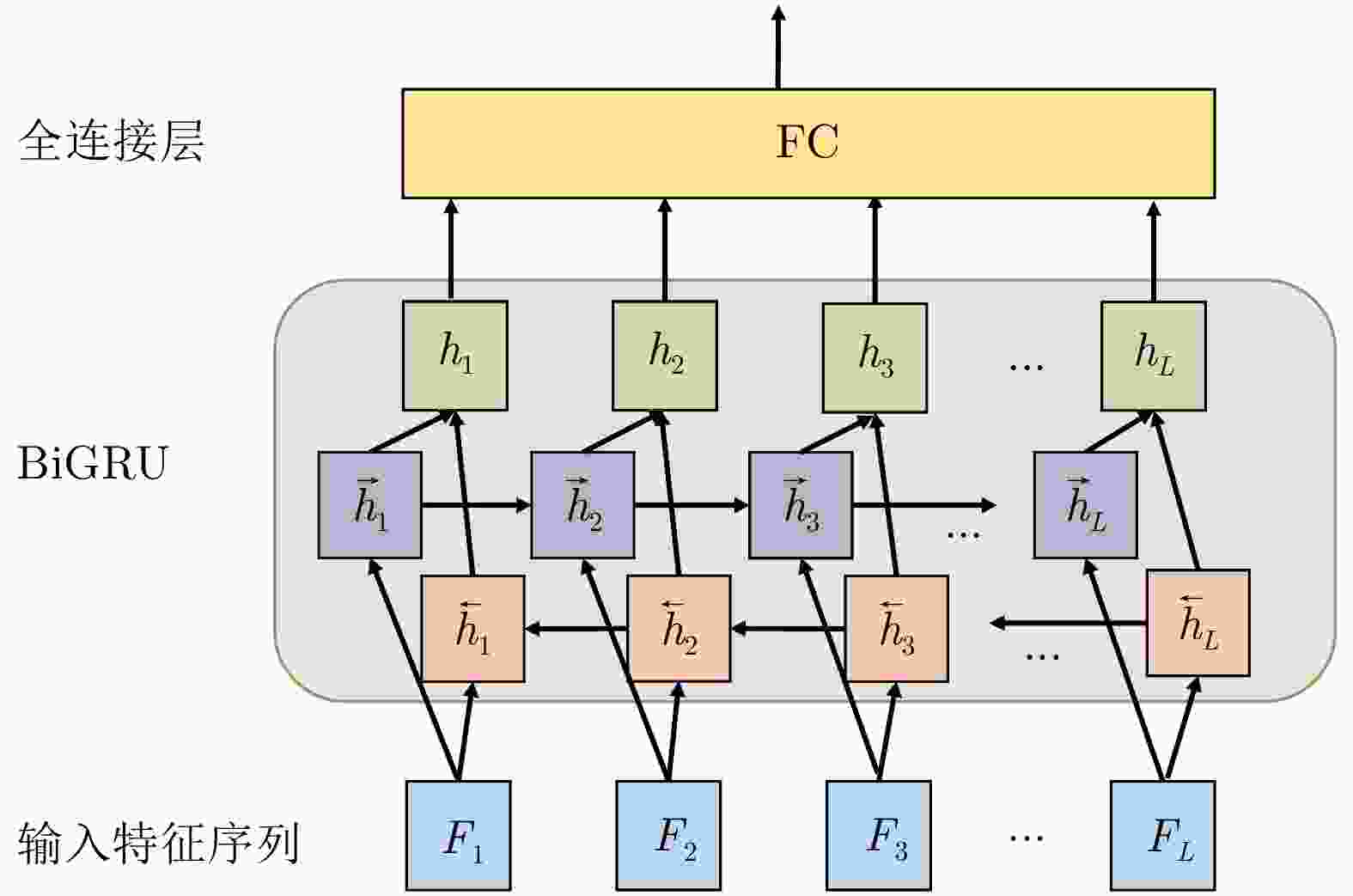

Abstract: Automatic Target Recognition (ATR) in Synthetic Aperture Radar (SAR) has been extensively applied in military and civilian fields. However, SAR images are very sensitive to the azimuth of the images, as the same target can differ greatly from different aspects. This means that more reliable and robust multiaspect ATR recognition is required. In this paper, we propose a multiaspect ATR model based on EfficientNet and BiGRU. To train this model, we use island loss, which is more suitable for SAR ATR. Experimental results have revealed that our proposed method can achieve 100% accuracy for 10-class recognition on the Moving and Stationary Target Acquisition and Recognition (MSTAR) database. The SAR targets in three special imaging cases with large depression angles, version variants, and configuration variants reached recognition accuracies of 99.68%, 99.95%, and 99.91%, respectively. In addition, the proposed method achieves satisfactory accuracy even with smaller datasets. Our experimental results show that our proposed method outperforms other state-of-the-art ATR methods on most MSTAR datasets and exhibits a certain degree of robustness. -
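To make the role of the BiGRU concrete, the following is a minimal pure-Python sketch of a bidirectional GRU applied to a sequence of L per-aspect feature vectors. It follows the GRU update of Cho et al. [18] with biases omitted for brevity. The function names, the parameter dictionary layout, and the toy weights are illustrative assumptions, not the authors' implementation; in the proposed model the input vectors would be features extracted by EfficientNet.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def gru_step(x, h, p):
    """One GRU update; p is a dict with the six weight matrices (biases omitted)."""
    z = [sigmoid(a + b) for a, b in zip(matvec(p["Wz"], x), matvec(p["Uz"], h))]
    r = [sigmoid(a + b) for a, b in zip(matvec(p["Wr"], x), matvec(p["Ur"], h))]
    rh = [ri * hi for ri, hi in zip(r, h)]
    cand = [math.tanh(a + b)
            for a, b in zip(matvec(p["Wh"], x), matvec(p["Uh"], rh))]
    # Update gate z mixes the previous state with the candidate state.
    return [zi * hi + (1.0 - zi) * ci for zi, hi, ci in zip(z, h, cand)]


def run_gru(seq, p, hidden):
    """Run a GRU over seq from a zero initial state; return every hidden state."""
    h = [0.0] * hidden
    states = []
    for x in seq:
        h = gru_step(x, h, p)
        states.append(h)
    return states


def bigru(seq, p_fwd, p_bwd, hidden):
    """Concatenate forward and backward hidden states for each sequence position."""
    fwd = run_gru(seq, p_fwd, hidden)
    bwd = run_gru(seq[::-1], p_bwd, hidden)[::-1]  # re-align to input order
    return [f + b for f, b in zip(fwd, bwd)]


# Toy example: scalar features, a two-image sequence, hypothetical weights.
toy = {"Wz": [[0.0]], "Uz": [[0.0]], "Wr": [[0.0]],
       "Ur": [[0.0]], "Wh": [[1.0]], "Uh": [[0.0]]}
outputs = bigru([[1.0], [1.0]], toy, toy, hidden=1)
```

Each output vector has twice the hidden size, because every position sees context from both earlier and later aspects in the sequence.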

Table 1. EfficientNet-B0 network structure

| Stage | Operator | Output size | Layers |
|---|---|---|---|
| 1 | Conv3×3 | 16×32×32 | 1 |
| 2 | MBConv1, k3×3 | 24×32×32 | 1 |
| 3 | MBConv6, k3×3 | 40×16×16 | 2 |
| 4 | MBConv6, k5×5 | 80×8×8 | 2 |
| 5 | MBConv6, k3×3 | 112×8×8 | 3 |
| 6 | MBConv6, k5×5 | 192×4×4 | 3 |
| 7 | MBConv6, k5×5 | 320×2×2 | 4 |
| 8 | MBConv6, k3×3 | 1280×2×2 | 1 |
| 9 | Conv1×1 & Pooling & FC | k | 1 |

Table 2. Comparison of EfficientNet-B0 and ResNet50 networks

| Model | Parameters (M) | FLOPS (B) | Top-1/top-5 accuracy (%) |
|---|---|---|---|
| EfficientNet-B0 | 5.3 | 0.39 | 77.3/93.5 |
| ResNet50 | 26.0 | 4.10 | 76.0/93.0 |

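Table 3. SOC dataset size when the image sequence length L=4

| Target | Training samples | Test samples |
|---|---|---|
| 2S1 | 1162 | 1034 |
| BMP2 | 883 | 634 |
| BRDM_2 | 1158 | 1040 |
| BTR70 | 889 | 649 |
| BTR60 | 978 | 667 |
| D7 | 1162 | 1037 |
| T62 | 1162 | 1032 |
| T72 | 874 | 642 |
| ZIL131 | 1162 | 1034 |
| ZSU_234 | 1162 | 1040 |
| Total | 10592 | 8809 |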

Table 4. EOC-1 dataset size when the image sequence length L=4

| Target | Training samples | Test samples |
|---|---|---|
| 2S1 | 1166 | 1088 |
| BRDM_2 | 1162 | 1084 |
| T72 | 913 | 1088 |
| ZSU_234 | 1166 | 1088 |
| Total | 4407 | 4348 |

Table 5. EOC-1, EOC-2, and EOC-3 dataset sizes

| L | Dataset | Training total | Test total |
|---|---|---|---|
| 4 | EOC-1 | 4407 | 4384 |
| 4 | EOC-2 | 4473 | 9996 |
| 4 | EOC-3 | 4473 | 12969 |
| 3 | EOC-1 | 3307 | 3310 |
| 3 | EOC-2 | 2889 | 7773 |
| 3 | EOC-3 | 2889 | 10199 |
| 2 | EOC-1 | 2202 | 2312 |
| 2 | EOC-2 | 1934 | 5258 |
| 2 | EOC-3 | 1934 | 6911 |

Table 6. Sizes of the augmented datasets after data augmentation

| L | Dataset | Training total |
|---|---|---|
| 4 | EOC-1 | 17392 |
| 3 | SOC | 16032 |
| 3 | EOC-1 | 13228 |
| 3 | EOC-2 & EOC-3 | 11544 |
| 2 | SOC | 16041 |
| 2 | EOC-1 | 8808 |
| 2 | EOC-2 & EOC-3 | 7736 |

Table 7. Parameter settings in the SOC experiment

| Name | Setting |
|---|---|
| Batch size | 32 |
| Optimizer | Adam |
| Adam learning rate | 0.001 |
| Island loss optimizer | SGD |
| SGD learning rate | 0.5 |
| Island loss parameter $\lambda$ | 0.001 |
| Island loss parameter $\lambda_{1}$ | 10 |
| Epochs | 260 |
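To show where the two island loss parameters $\lambda$ and $\lambda_{1}$ enter, here is a minimal pure-Python sketch of island loss as commonly formulated following reference [19]: the center loss plus $\lambda_{1}$ times the sum, over all ordered pairs of distinct class centers, of their cosine similarity plus one; the total training loss then adds $\lambda$ times the island loss to the softmax loss. The function names and the toy inputs are illustrative assumptions, and this is not the authors' training code.

```python
import math


def center_loss(features, labels, centers):
    """Half the summed squared distance between each feature and its class center."""
    total = 0.0
    for x, y in zip(features, labels):
        total += sum((xi - ci) ** 2 for xi, ci in zip(x, centers[y]))
    return 0.5 * total


def cosine(u, v):
    """Cosine similarity of two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def island_loss(features, labels, centers, lambda_1=10.0):
    """Center loss plus a penalty that pushes different class centers apart."""
    pairwise = 0.0
    for j in range(len(centers)):
        for k in range(len(centers)):
            if j != k:
                # The term is zero only when the two centers point in opposite directions.
                pairwise += cosine(centers[k], centers[j]) + 1.0
    return center_loss(features, labels, centers) + lambda_1 * pairwise


def total_loss(softmax_loss, island, lam=0.001):
    """Joint objective: softmax loss plus the weighted island loss."""
    return softmax_loss + lam * island


# Toy example with two orthogonal class centers and features lying on them.
toy_centers = [[1.0, 0.0], [0.0, 1.0]]
toy_features = [[1.0, 0.0], [0.0, 1.0]]
toy_island = island_loss(toy_features, [0, 1], toy_centers)
```

The defaults `lambda_1=10.0` and `lam=0.001` mirror the values listed in Table 7. In the toy example the center loss is zero, so the whole island loss comes from the pairwise term between the two centers.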

Table 8. EOC-1 confusion matrix when the image sequence length L=4

| Class | 2S1 | BRDM_2 | T72 | ZSU_234 | Acc (%) |
|---|---|---|---|---|---|
| 2S1 | 1076 | 2 | 10 | 0 | 98.90 |
| BRDM_2 | 0 | 1084 | 0 | 0 | 100.00 |
| T72 | 0 | 0 | 1088 | 0 | 100.00 |
| ZSU_234 | 2 | 0 | 0 | 1086 | 99.82 |
| Average | | | | | 99.68 |
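The accuracy column of Table 8 can be reproduced from the counts. The short sketch below assumes that rows are true classes and columns are predicted classes, which is consistent with the row sums matching the EOC-1 test counts in Table 4; the function names are illustrative.

```python
classes = ["2S1", "BRDM_2", "T72", "ZSU_234"]

# Rows: true class; columns: predicted class (assumed orientation).
confusion = [
    [1076, 2, 10, 0],
    [0, 1084, 0, 0],
    [0, 0, 1088, 0],
    [2, 0, 0, 1086],
]


def per_class_accuracy(matrix):
    """Percentage of each true class that was predicted correctly."""
    return [100.0 * row[i] / sum(row) for i, row in enumerate(matrix)]


def mean_accuracy(matrix):
    """Unweighted mean of the per-class accuracies."""
    acc = per_class_accuracy(matrix)
    return sum(acc) / len(acc)


def overall_accuracy(matrix):
    """Correct predictions divided by all test samples, as a percentage."""
    correct = sum(row[i] for i, row in enumerate(matrix))
    total = sum(sum(row) for row in matrix)
    return 100.0 * correct / total


for name, acc in zip(classes, per_class_accuracy(confusion)):
    print(f"{name}: {acc:.2f}%")
print(f"mean: {mean_accuracy(confusion):.2f}%")
```

Because the four classes have nearly equal test counts, the unweighted mean and the overall accuracy both round to 99.68%.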

Table 9. Comparison of recognition accuracy on the SOC and EOC-1 datasets when L=4

Table 10. Comparison of test accuracy when L=3 (%)

Table 11. Comparison of test accuracy when L=2 (%)

Table 12. Comparison of accuracy on EOC-2 (%)

Table 13. Comparison of accuracy on EOC-3 (%)

Table 14. Recognition accuracy on the reduced dataset (%)

| Dataset scale | 5% | 15% | 50% |
|---|---|---|---|
| Proposed method | 95.98 | 99.72 | 99.93 |
| ResNet-LSTM[16] | 93.97 | 99.37 | 99.58 |

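Table 15. Results of ablation experiments

| No. | Components marked √ (among Center Loss, Island Loss, EfficientNet, BiGRU) | Accuracy (%) | Improvement (%) |
|---|---|---|---|
| 1 | | 94.08 | – |
| 2 | √ | 95.81 | 1.73 |
| 3 | √ | 97.03 | 1.22 |
| 4 | √ √ | 98.46 | 1.43 |
| 5 | √ √ √ | 99.08 | 0.62 |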
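The improvement column of Table 15 appears to be the gain of each configuration over the one in the previous row. The following small check, with illustrative names, recomputes those increments from the reported accuracies.

```python
# Accuracies (%) of ablation configurations 1 to 5, as reported in Table 15.
accuracy = [94.08, 95.81, 97.03, 98.46, 99.08]


def incremental_gains(values):
    """Gain of each entry over the previous one, rounded to two decimals."""
    return [round(b - a, 2) for a, b in zip(values, values[1:])]


gains = incremental_gains(accuracy)
total_gain = round(accuracy[-1] - accuracy[0], 2)
print(gains, total_gain)
```

Under this reading, the increments sum to the overall gain of the full model over the baseline configuration.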

[1] GAI Xugang, CHEN Jinwen, HAN Jun, et al. Development status and trend of synthetic aperture radar[J]. Aerodynamic Missile Journal, 2011(3): 82–86, 95.

[2] ZHANG Hong, WANG Chao, ZHANG Bo, et al. Target Recognition in High Resolution SAR Images[M]. Beijing: Science Press, 2009.

[3] MOREIRA A, PRATS-IRAOLA P, YOUNIS M, et al. A tutorial on synthetic aperture radar[J]. IEEE Geoscience and Remote Sensing Magazine, 2013, 1(1): 6–43. doi: 10.1109/MGRS.2013.2248301

[4] WANG Ruixia, LIN Wei, and MAO Jun. Speckle suppression for SAR image based on wavelet transform and PCA[J]. Computer Engineering, 2008, 34(20): 235–237. doi: 10.3969/j.issn.1000-3428.2008.20.086

[5] CHEN Sizhe and WANG Haipeng. SAR target recognition based on deep learning[C]. 2014 International Conference on Data Science and Advanced Analytics, Shanghai, China, 2015.

[6] TIAN Zhuangzhuang, ZHAN Ronghui, HU Jiemin, et al. SAR ATR based on convolutional neural network[J]. Journal of Radars, 2016, 5(3): 320–325. doi: 10.12000/JR16037

[7] CHEN Sizhe, WANG Haipeng, XU Feng, et al. Target classification using the deep convolutional networks for SAR images[J]. IEEE Transactions on Geoscience and Remote Sensing, 2016, 54(8): 4806–4817. doi: 10.1109/TGRS.2016.2551720

[8] FURUKAWA H. Deep learning for target classification from SAR imagery: Data augmentation and translation invariance[R]. SANE2017-30, 2017.

[9] YUAN Yuan, YUAN Hao, LEI Ling, et al. An imaging method of GEO Spaceborne-Airborne Bistatic SAR[J]. Radar Science and Technology, 2007, 5(2): 128–132. doi: 10.3969/j.issn.1672-2337.2007.02.011

[10] SHI Hongyin, ZHOU Yinqing, and CHEN Jie. An algorithm of GEO spaceborne-airborne bistatic three-channel SAR ground moving target indication[J]. Journal of Electronics & Information Technology, 2009, 31(8): 1881–1885.

[11] LI Zhuo, LI Chunsheng, YU Ze, et al. Back projection algorithm for high resolution GEO-SAR image formation[C]. 2011 IEEE International Geoscience and Remote Sensing Symposium, Vancouver, Canada, 2011: 336–339.

[12] ZHANG Fan, HU Chen, YIN Qiang, et al. Multi-aspect-aware bidirectional LSTM networks for synthetic aperture radar target recognition[J]. IEEE Access, 2017, 5: 26880–26891. doi: 10.1109/ACCESS.2017.2773363

[13] PEI Jifang, HUANG Yulin, HUO Weibo, et al. SAR automatic target recognition based on Multiview deep learning framework[J]. IEEE Transactions on Geoscience and Remote Sensing, 2018, 56(4): 2196–2210. doi: 10.1109/TGRS.2017.2776357

[14] ZOU Hao, LIN Yun, and HONG Wen. Research on multi-aspect SAR images target recognition using deep learning[J]. Journal of Signal Processing, 2018, 34(5): 513–522. doi: 10.16798/j.issn.1003-0530.2018.05.002

[15] ZHAO Pengfei, LIU Kai, ZOU Hao, et al. Multi-stream convolutional neural network for SAR automatic target recognition[J]. Remote Sensing, 2018, 10(9): 1473. doi: 10.3390/rs10091473

[16] ZHANG Fan, FU Zhenzhen, ZHOU Yongsheng, et al. Multi-aspect SAR target recognition based on space-fixed and space-varying scattering feature joint learning[J]. Remote Sensing Letters, 2019, 10(10): 998–1007. doi: 10.1080/2150704X.2019.1635287

[17] TAN Mingxing and LE Q V. EfficientNet: Rethinking model scaling for convolutional neural networks[J]. arXiv: 1905.11946, 2019.

[18] CHO K, VAN MERRIENBOER B, GULCEHRE C, et al. Learning phrase representations using RNN encoder-decoder for statistical machine translation[J]. arXiv: 1406.1078, 2014.

[19] CAI Jie, MENG Zibo, KHAN A S, et al. Island loss for learning discriminative features in facial expression recognition[C]. The 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018), Xi’an, China, 2018: 302–309.

[20] HE Kaiming, ZHANG Xiangyu, REN Shaoqing, et al. Deep residual learning for image recognition[C]. 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, USA, 2016.

[21] HOCHREITER S and SCHMIDHUBER J. Long short-term memory[J]. Neural Computation, 1997, 9(8): 1735–1780. doi: 10.1162/neco.1997.9.8.1735

[22] WEN Yandong, ZHANG Kaipeng, LI Zhifeng, et al. A discriminative feature learning approach for deep face recognition[C]. The 14th European Conference on Computer Vision – ECCV 2016, Amsterdam, The Netherlands, 2016.
