SAR Ship Detection in Complex Scenes Based on Adaptive Anchor Assignment and IOU Supervise


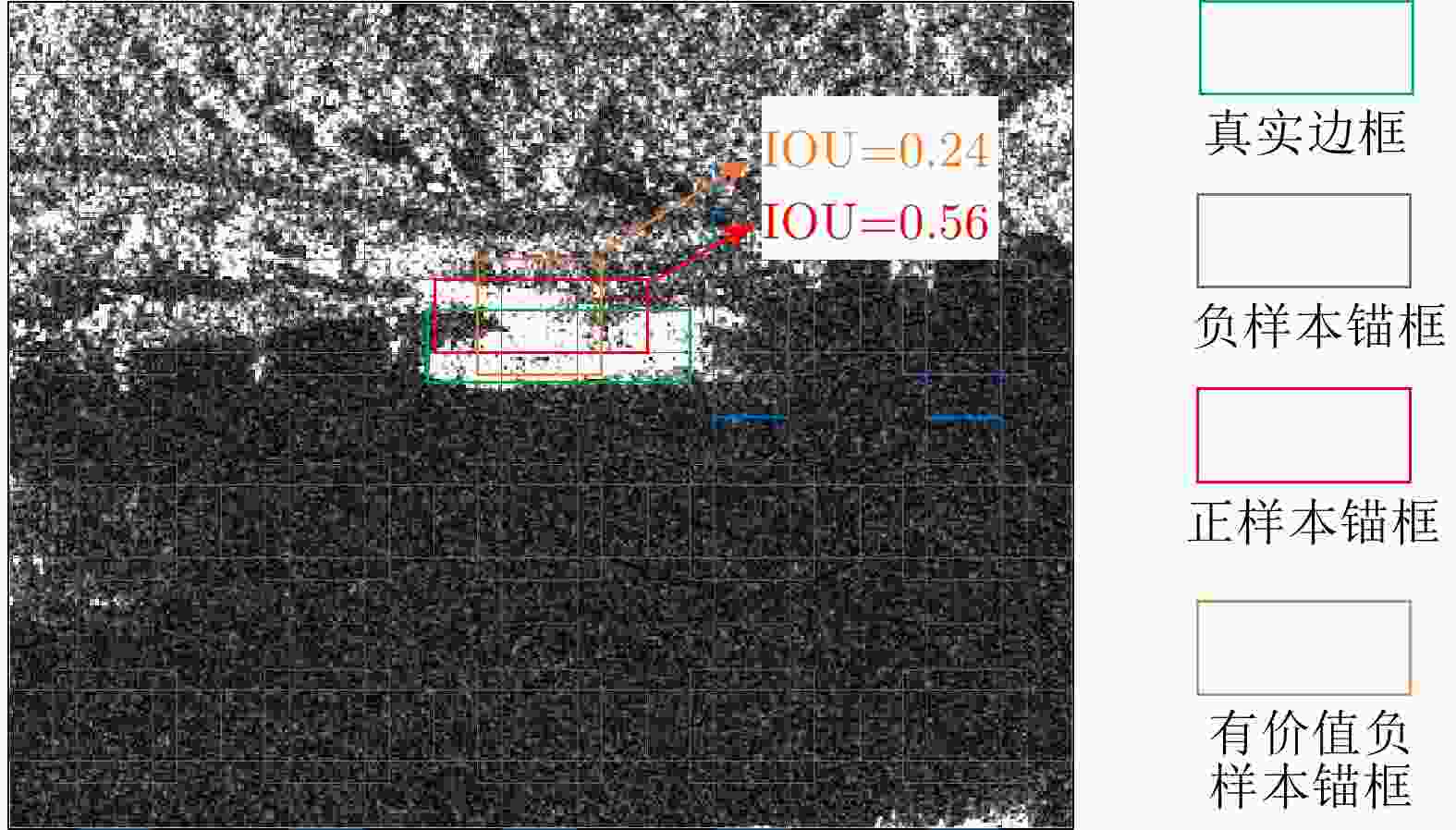

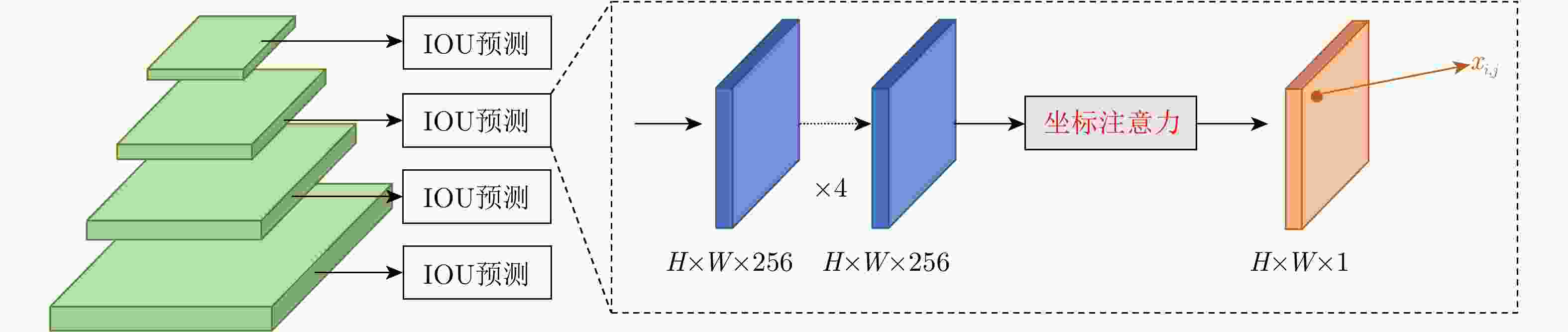

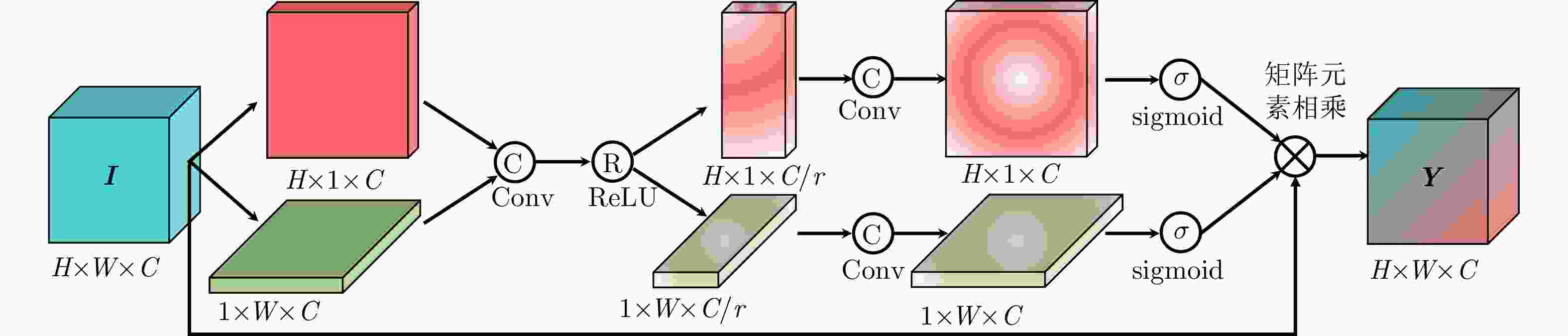

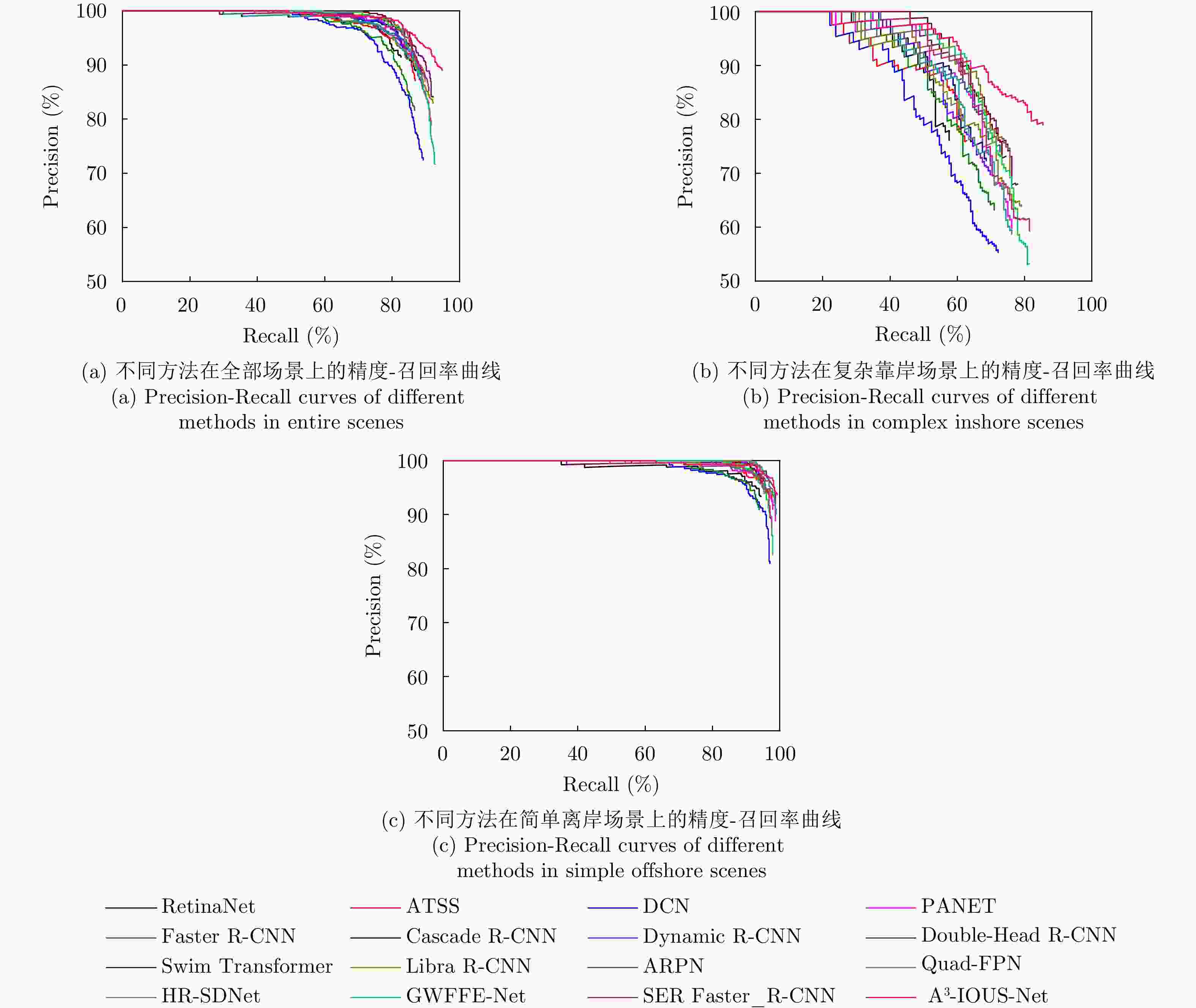

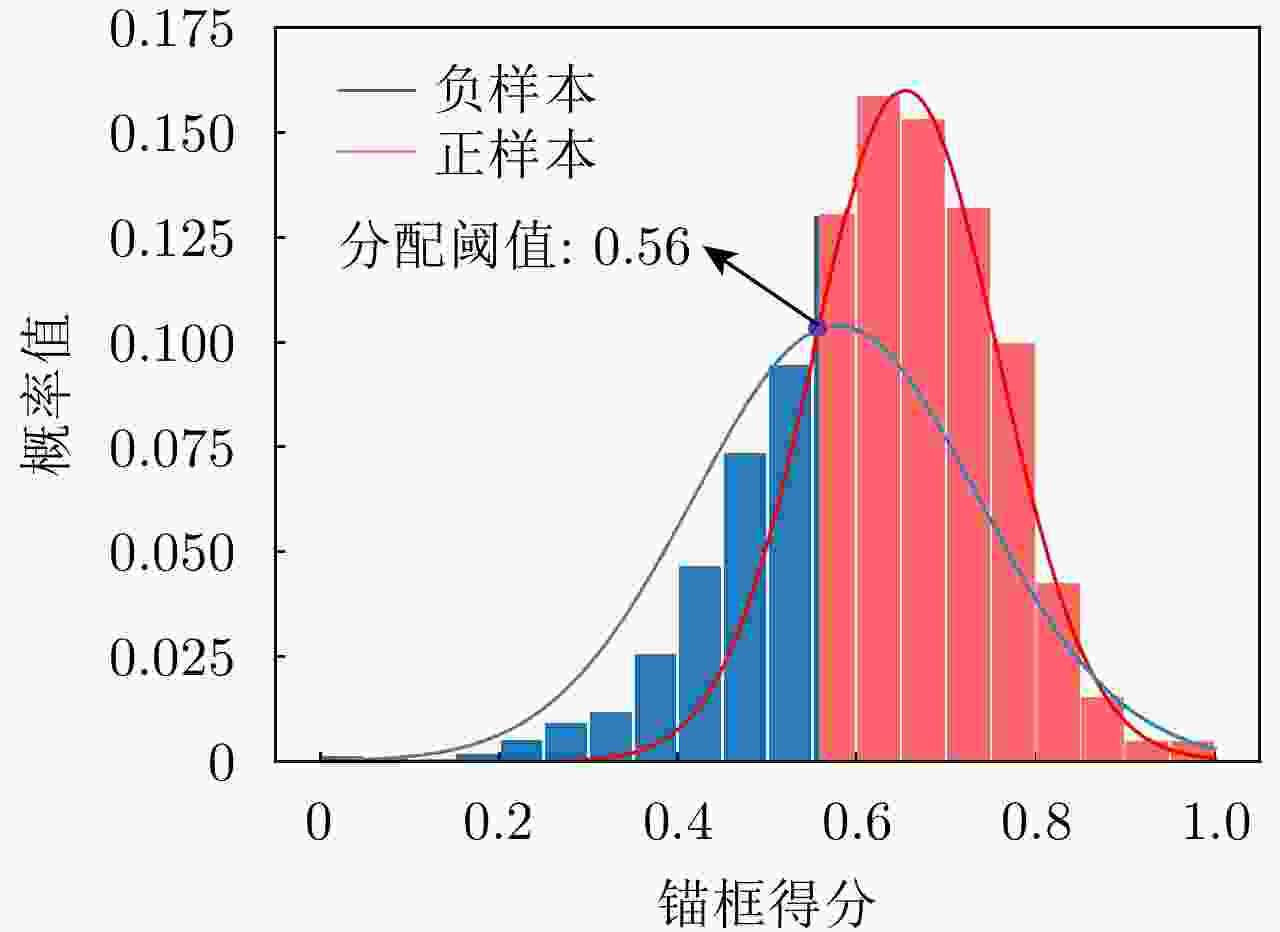

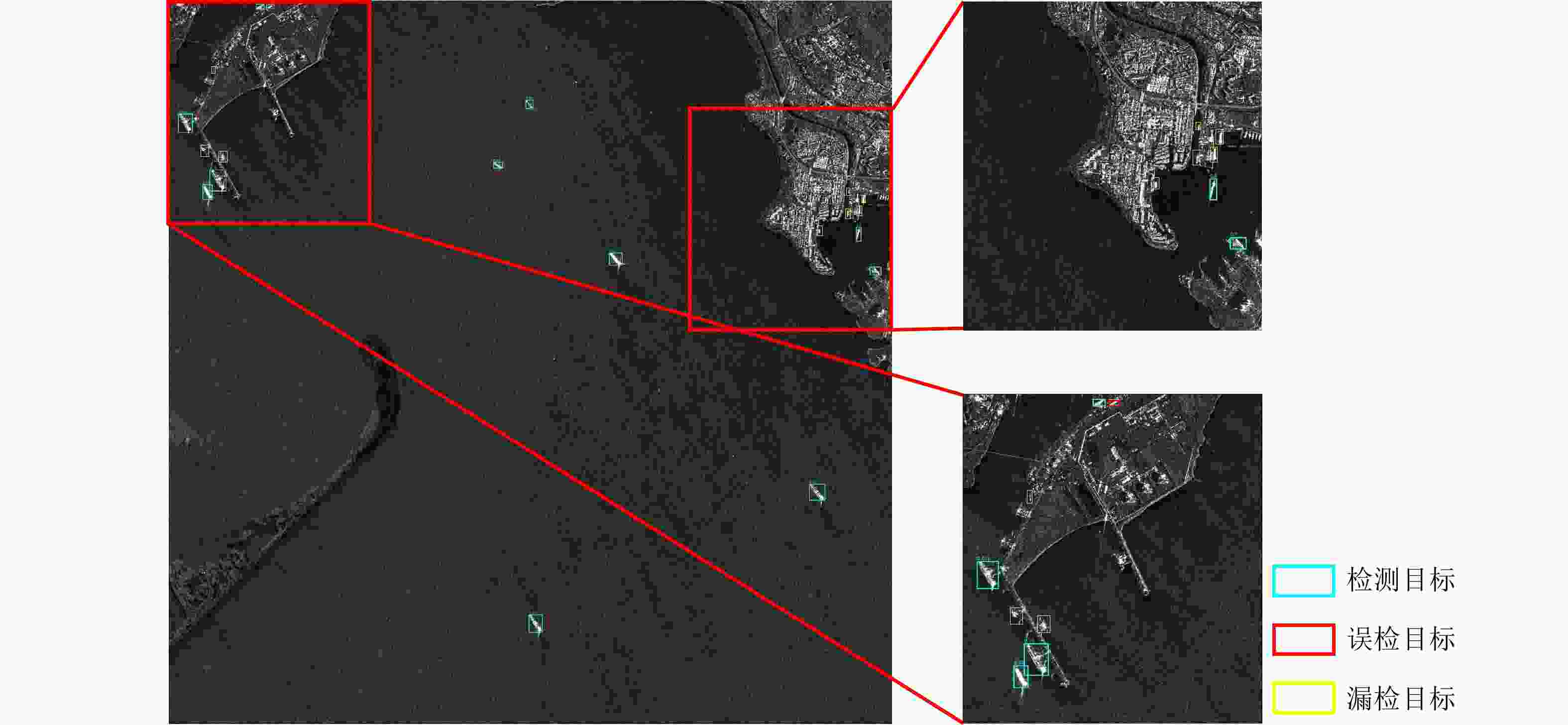

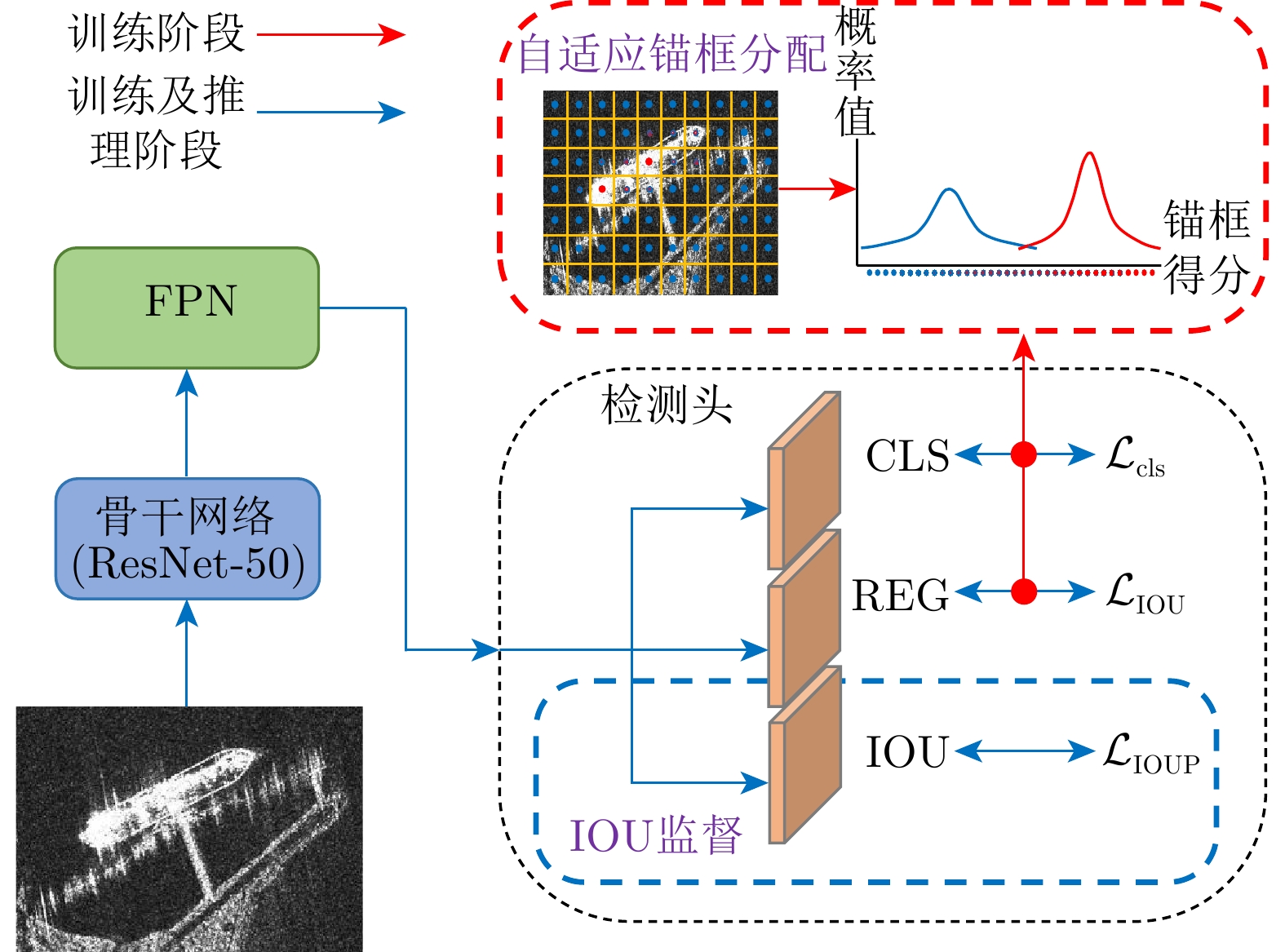

Abstract: This study addresses two problems in ship detection in complex scenes: the unreasonable assignment of positive and negative samples, and poor localization quality. To solve them, a Synthetic Aperture Radar (SAR) ship detection network for complex scenes based on adaptive anchor assignment and Intersection over Union (IOU) supervision (A3-IOUS-Net) is proposed. First, an adaptive anchor assignment mechanism builds a probability distribution model that adaptively assigns anchors as positive or negative samples. This strengthens the network's ability to learn ship samples in complex scenes. Second, an IOU supervision mechanism adds an IOU prediction branch to the prediction head to supervise the localization quality of detection boxes, so the network can accurately locate SAR ship targets in complex scenes. Third, a coordinate attention module is introduced into the IOU prediction branch to suppress background clutter interference and further improve detection accuracy. Experiments on the public SAR Ship Detection Dataset (SSDD) show that A3-IOUS-Net reaches an Average Precision (AP) of 82.04% in complex scenes, which is higher than all 15 comparison models.



Algorithm 1. Basic process of adaptive anchor assignment

Input: a set of ground-truth boxes $\mathcal{G}$, a set of anchors $\mathcal{A}$, the set of anchors $\mathcal{A}_{i}$ from the $i$-th pyramid level, the number of pyramid levels $\mathcal{L}$, and the number of candidate anchors per pyramid level $\mathcal{K}$.

Process:

1. do adaptive anchor assignment
2. $\mathcal{P} \leftarrow \varnothing$, $\mathcal{N} \leftarrow \varnothing$, $\mathcal{I} \leftarrow \varnothing$
3. for $g \in \mathcal{G}$ do
4. $\mathcal{A}_{g} \leftarrow$ AnchorAcquisition$(\mathcal{A}, g, \mathcal{G})$
5. $\mathcal{C}_{g} \leftarrow \varnothing$
6. for $i = 1$ to $\mathcal{L}$ do
7. $\mathcal{A}_{i}^{g} \leftarrow \mathcal{A}_{i} \cap \mathcal{A}_{g}$
8. $\mathcal{S}_{i} \leftarrow$ AnchorScoreCalculation$(\mathcal{A}_{i}^{g}, g)$
9. $t_{i} \leftarrow$ CollectTopScoreAnchors$(\mathcal{S}_{i}, \mathcal{K})$, the score threshold of the top-$\mathcal{K}$ anchors on level $i$
10. $\mathcal{C}_{g}^{i} \leftarrow \left\{a_{j} \in \mathcal{A}_{i}^{g} \mid s_{j} \ge t_{i},\ s_{j} \in \mathcal{S}_{i}\right\}$
11. $\mathcal{C}_{g} \leftarrow \mathcal{C}_{g} \cup \mathcal{C}_{g}^{i}$
12. end
13. $\mathcal{B}, \mathcal{F} \leftarrow$ GaussianMixtureModeling$(\mathcal{C}_{g}, 2)$
14. $\mathcal{N}_{g}, \mathcal{P}_{g} \leftarrow$ AnchorAssignment$(\mathcal{C}_{g}, \mathcal{B}, \mathcal{F})$
15. $\mathcal{P} \leftarrow \mathcal{P} \cup \mathcal{P}_{g}$, $\mathcal{N} \leftarrow \mathcal{N} \cup \mathcal{N}_{g}$, $\mathcal{I} \leftarrow \mathcal{I} \cup \left(\mathcal{C}_{g} - \mathcal{P}_{g} - \mathcal{N}_{g}\right)$
16. end
17. $\mathcal{N} \leftarrow \mathcal{N} \cup \left(\mathcal{A} - \mathcal{P} - \mathcal{N} - \mathcal{I}\right)$
18. end

Output: a set of positive samples $\mathcal{P}$, a set of negative samples $\mathcal{N}$, and a set of ignored samples $\mathcal{I}$.
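The Python sketch below mirrors steps 6 to 14 of Algorithm 1 for a single ground-truth box. It covers per-level top-$\mathcal{K}$ candidate collection, a two-component Gaussian mixture fitted by EM [21], and the split into positives and negatives. Several details are assumptions that the algorithm does not state:

- anchor scores are higher-is-better values, for example IOU with the ground truth;
- EM starts with its means at the minimum and maximum scores;
- the higher-mean component plays the role of $\mathcal{F}$;
- each candidate goes to whichever component has the larger weighted density, so the ignored set $\mathcal{I}$ stays empty here.

All function names are hypothetical.

```python
import math


def collect_candidates(level_scores, k):
    """Steps 6-12: on each pyramid level keep anchors scoring >= the k-th best score t_i."""
    candidates = []  # tuples (level i, anchor index j, score s_j)
    for i, scores in enumerate(level_scores):
        if not scores:
            continue
        t_i = sorted(scores, reverse=True)[min(k, len(scores)) - 1]
        candidates += [(i, j, s) for j, s in enumerate(scores) if s >= t_i]
    return candidates


def normal_pdf(x, mu, var):
    return math.exp(-((x - mu) ** 2) / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def fit_two_gaussians(x, iters=100, eps=1e-6):
    """Step 13 (assumed form): EM for a two-component 1-D Gaussian mixture."""
    n = len(x)
    mean = sum(x) / n
    var0 = sum((xi - mean) ** 2 for xi in x) / n + eps
    w, mu, var = [0.5, 0.5], [min(x), max(x)], [var0, var0]
    for _ in range(iters):
        # E-step: responsibility of each component for each score
        resp = []
        for xi in x:
            p = [w[c] * normal_pdf(xi, mu[c], var[c]) for c in (0, 1)]
            total = sum(p) or 1e-300  # guard against underflow to zero
            resp.append([pc / total for pc in p])
        # M-step: re-estimate mixture weights, means and variances
        for c in (0, 1):
            n_c = sum(r[c] for r in resp) + eps
            w[c] = n_c / n
            mu[c] = sum(r[c] * xi for r, xi in zip(resp, x)) / n_c
            var[c] = sum(r[c] * (xi - mu[c]) ** 2 for r, xi in zip(resp, x)) / n_c + eps
    return w, mu, var


def assign_anchors(candidates, iters=100):
    """Step 14 (assumed rule): split candidates into positives P_g and negatives N_g."""
    scores = [s for _, _, s in candidates]
    w, mu, var = fit_two_gaussians(scores, iters)
    fg = 0 if mu[0] > mu[1] else 1  # higher-mean component treated as foreground F
    positives, negatives = [], []
    for cand in candidates:
        dens = [w[c] * normal_pdf(cand[2], mu[c], var[c]) for c in (0, 1)]
        (positives if dens[fg] >= dens[1 - fg] else negatives).append(cand)
    return positives, negatives
```

For example, `collect_candidates([[0.9, 0.85, 0.1, 0.05, 0.88], [0.12, 0.8, 0.07, 0.91]], k=4)` keeps eight candidates. The 0.05 anchor is dropped because it falls below level 0's threshold $t_0 = 0.1$. `assign_anchors` then labels the five high-scoring candidates positive and the three low-scoring ones negative. The point of the mixture model is that the positive/negative boundary follows the score distribution of each ground truth rather than a fixed IOU threshold.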

Algorithm 2. Basic process of NMS combined with IOU supervision

Input: the set of initial detection boxes $\mathcal{B} = \left\{b_{1}, b_{2}, \cdots, b_{N}\right\}$, the set of their classification scores $\mathcal{S}_{\mathrm{cls}} = \left\{\mathrm{cls}_{1}, \mathrm{cls}_{2}, \cdots, \mathrm{cls}_{N}\right\}$, the set of their IOU scores $\mathcal{S}_{\mathrm{IOU}} = \left\{\mathrm{IOU}_{1}, \mathrm{IOU}_{2}, \cdots, \mathrm{IOU}_{N}\right\}$, and the IOU threshold $N_{\mathrm{t}}$.

Process:

1. do combine classification scores and IOU scores
2. $\mathcal{S} \leftarrow \{\}$
3. $\mathcal{S} \leftarrow \mathcal{S}_{\mathrm{cls}}^{1/2} \cdot \mathcal{S}_{\mathrm{IOU}}^{1/2}$ (IOU supervision, applied box by box)
4. end
5. do NMS post-processing
6. $\mathcal{D} \leftarrow \{\}$
7. while $\mathcal{B} \neq \varnothing$ do
8. $m \leftarrow \arg\max \mathcal{S}$
9. $\mathcal{M} \leftarrow b_{m}$
10. $\mathcal{D} \leftarrow \mathcal{D} \cup \mathcal{M}$; $\mathcal{B} \leftarrow \mathcal{B} - \mathcal{M}$
11. for $b_{i}$ in $\mathcal{B}$ do
12. if $\mathrm{IOU}\left(\mathcal{M}, b_{i}\right) \ge N_{\mathrm{t}}$ then
13. $\mathcal{B} \leftarrow \mathcal{B} - b_{i}$; $\mathcal{S} \leftarrow \mathcal{S} - s_{i}$
14. end
15. end
16. end
17. end

Output: the set $\mathcal{D}$ of detection boxes after NMS and the set $\mathcal{S}$ of detection scores after NMS.
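The Python sketch below follows Algorithm 2 with plain lists. It fuses each box's scores as $\sqrt{\mathrm{cls}} \cdot \sqrt{\mathrm{IOU}}$ and then runs greedy NMS on the fused score. Three details are assumptions for illustration: the $(x_1, y_1, x_2, y_2)$ box format, the default threshold `n_t=0.5`, and the function names.

```python
import math


def box_iou(a, b):
    """IOU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def iou_supervised_nms(boxes, cls_scores, iou_scores, n_t=0.5):
    """Return (kept indices D, their fused scores) following Algorithm 2."""
    # Steps 1-4: fuse each box's scores as sqrt(cls) * sqrt(IOU)
    fused = [math.sqrt(c) * math.sqrt(u) for c, u in zip(cls_scores, iou_scores)]
    remaining = list(range(len(boxes)))  # indices still in B
    kept, kept_scores = [], []
    while remaining:  # step 7
        m = max(remaining, key=lambda i: fused[i])  # step 8: highest fused score
        kept.append(m)
        kept_scores.append(fused[m])
        remaining.remove(m)  # step 10
        # Steps 11-15: discard boxes whose IOU with M reaches the threshold N_t
        remaining = [i for i in remaining if box_iou(boxes[m], boxes[i]) < n_t]
    return kept, kept_scores
```

Consider two overlapping boxes `(0, 0, 10, 10)` and `(1, 1, 11, 11)` with classification scores 0.9 and 0.8. Plain score-based NMS would keep the first box. If their predicted IOU scores are 0.5 and 0.9, the fused scores become about 0.67 and 0.85. The better-localized second box survives instead, which is the effect the IOU supervision is meant to have.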

Table 1. Information on SSDD

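| Parameter | Value |
|---|---|
| SAR satellites | RadarSat-2, TerraSAR-X, Sentinel-1 |
| Number of SAR images | 1,160 |
| Average image size | 500 pixels × 500 pixels |
| Training/test ratio | 8:2 |
| Polarization modes | HH, VV, VH, HV |
| Resolution | 1–15 m |
| Locations | Yantai, China; Visakhapatnam, India |
| Sea conditions | Good, poor |
| Scenes | Complex inshore, simple offshore |
| Number of ships | 2,587 |
| Minimum ship size | 66 pixels |
| Maximum ship size | 78,597 pixels |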
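Applying the 8:2 ratio in Table 1 to the 1,160 images gives the split sizes below. This assumes the ratio is applied exactly at the image level. The average number of ships per image follows from the same table.

$$
N_{\text{train}} = 1160 \times \tfrac{8}{10} = 928, \qquad
N_{\text{test}} = 1160 \times \tfrac{2}{10} = 232, \qquad
\frac{2587}{1160} \approx 2.23 \ \text{ships per image}
$$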

Table 2. Comparison of performance of A3-IOUS-Net and other methods

| Method | All R (%) | All P (%) | All AP (%) | Inshore R (%) | Inshore P (%) | Inshore AP (%) | Offshore R (%) | Offshore P (%) | Offshore AP (%) | Params (M) |
|---|---|---|---|---|---|---|---|---|---|---|
| RetinaNet [17] | 83.15 | 89.37 | 82.23 | 58.14 | 76.92 | 56.15 | 94.65 | 93.65 | 94.01 | 30.94 |
| ATSS [28] | 86.81 | 87.13 | 85.78 | 62.79 | 76.06 | 59.07 | 97.86 | 91.04 | 97.50 | 30.86 |
| DCN [29] | 89.38 | 72.62 | 86.67 | 72.67 | 55.80 | 63.09 | 97.06 | 81.03 | 95.91 | 41.49 |
| PANET [30] | 92.49 | 79.65 | 91.06 | 77.91 | 61.19 | 71.95 | 99.20 | 89.40 | 98.76 | 44.16 |
| Faster R-CNN [31] | 86.81 | 81.87 | 85.15 | 71.51 | 63.73 | 65.29 | 93.85 | 90.93 | 93.16 | 40.61 |
| Cascade R-CNN [32] | 87.18 | 89.14 | 86.40 | 69.19 | 73.01 | 65.36 | 95.45 | 96.23 | 95.29 | 68.42 |
| Dynamic R-CNN [33] | 89.19 | 85.59 | 88.18 | 69.77 | 69.77 | 65.42 | 98.13 | 92.44 | 97.83 | 40.61 |
| Double-Head R-CNN [34] | 92.31 | 84.28 | 91.22 | 78.49 | 68.53 | 73.72 | 98.66 | 92.02 | 98.29 | 46.20 |
| Swin Transformer [35] | 89.01 | 89.17 | 88.42 | 75.00 | 73.71 | 71.95 | 95.45 | 96.49 | 95.32 | 72.55 |
| Libra R-CNN [36] | 93.22 | 83.99 | 91.59 | 80.81 | 65.26 | 73.66 | 98.93 | 94.15 | 98.43 | 40.88 |
| ARPN [37] | 89.01 | 88.04 | 88.06 | 72.67 | 73.96 | 68.49 | 96.52 | 94.26 | 96.28 | 41.17 |
| Quad-FPN [38] | 92.12 | 79.46 | 90.91 | 77.33 | 59.64 | 70.79 | 98.93 | 90.24 | 98.73 | 46.44 |
| HR-SDNet [39] | 91.76 | 87.89 | 90.88 | 76.16 | 70.43 | 71.83 | 98.93 | 96.35 | 98.79 | 90.92 |
| GWFFE-Net [15] | 92.86 | 71.81 | 91.34 | 81.98 | 53.61 | 75.71 | 97.86 | 82.62 | 97.45 | 61.48 |
| SER Faster R-CNN [40] | 93.04 | 77.79 | 91.52 | 81.40 | 59.32 | 74.88 | 98.40 | 88.25 | 97.96 | 41.74 |
| A3-IOUS-Net | 95.05 | 89.18 | 94.05 | 86.05 | 79.57 | 82.04 | 99.20 | 93.69 | 98.83 | 31.08 |
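The margins behind Table 2 can be made explicit with a short script. It finds the strongest comparison model in each AP column and the gap to A3-IOUS-Net. The helper name `margins` is hypothetical, and the values are copied by hand from Table 2.

```python
# AP (%) per method from Table 2: (all scenes, inshore scenes, offshore scenes)
AP = {
    "RetinaNet": (82.23, 56.15, 94.01),
    "ATSS": (85.78, 59.07, 97.50),
    "DCN": (86.67, 63.09, 95.91),
    "PANET": (91.06, 71.95, 98.76),
    "Faster R-CNN": (85.15, 65.29, 93.16),
    "Cascade R-CNN": (86.40, 65.36, 95.29),
    "Dynamic R-CNN": (88.18, 65.42, 97.83),
    "Double-Head R-CNN": (91.22, 73.72, 98.29),
    "Swin Transformer": (88.42, 71.95, 95.32),
    "Libra R-CNN": (91.59, 73.66, 98.43),
    "ARPN": (88.06, 68.49, 96.28),
    "Quad-FPN": (90.91, 70.79, 98.73),
    "HR-SDNet": (90.88, 71.83, 98.79),
    "GWFFE-Net": (91.34, 75.71, 97.45),
    "SER Faster R-CNN": (91.52, 74.88, 97.96),
}
OURS = (94.05, 82.04, 98.83)  # A3-IOUS-Net


def margins(ap, ours):
    """For each scene column, return (scene, best competitor, AP gap in points)."""
    result = []
    for k, scene in enumerate(("all", "inshore", "offshore")):
        best = max(ap, key=lambda m: ap[m][k])
        result.append((scene, best, round(ours[k] - ap[best][k], 2)))
    return result


for row in margins(AP, OURS):
    print(row)
```

The gap is largest in the complex inshore scenes: 6.33 points over GWFFE-Net. It is 2.46 points over Libra R-CNN across all scenes and only 0.04 points over HR-SDNet offshore.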

Table 3. Effect of the adaptive anchor assignment mechanism on the accuracy of A3-IOUS-Net (%)

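| Adaptive anchor assignment | All R | All P | All AP | Inshore R | Inshore P | Inshore AP | Offshore R | Offshore P | Offshore AP |
|---|---|---|---|---|---|---|---|---|---|
| × | 91.76 | 89.46 | 90.72 | 76.16 | 75.72 | 70.87 | 98.93 | 95.61 | 98.76 |
| √ | 95.05 | 89.18 | 94.05 | 86.05 | 79.57 | 82.04 | 99.20 | 93.69 | 98.83 |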

Table 4. Effect of the probability distribution model used in the adaptive anchor assignment mechanism on accuracy (%)

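| Probability distribution model | All R | All P | All AP | Inshore R | Inshore P | Inshore AP | Offshore R | Offshore P | Offshore AP |
|---|---|---|---|---|---|---|---|---|---|
| Dirichlet mixture | 87.36 | 88.17 | 86.50 | 64.53 | 77.62 | 62.39 | 97.86 | 91.96 | 97.28 |
| t mixture | 89.01 | 92.57 | 88.17 | 69.77 | 83.33 | 66.82 | 97.86 | 96.06 | 97.46 |
| β mixture | 91.76 | 85.35 | 91.01 | 76.74 | 70.21 | 73.29 | 98.66 | 92.48 | 98.40 |
| Gaussian mixture | 95.05 | 89.18 | 94.05 | 86.05 | 79.57 | 82.04 | 99.20 | 93.69 | 98.83 |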

Table 5. Effect of the IOU supervision mechanism on the accuracy of A3-IOUS-Net (%)

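| IOU supervision | All R | All P | All AP | Inshore R | Inshore P | Inshore AP | Offshore R | Offshore P | Offshore AP |
|---|---|---|---|---|---|---|---|---|---|
| × | 89.38 | 90.37 | 87.19 | 70.93 | 78.21 | 64.66 | 97.86 | 95.31 | 96.72 |
| √ | 95.05 | 89.18 | 94.05 | 86.05 | 79.57 | 82.04 | 99.20 | 93.69 | 98.83 |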

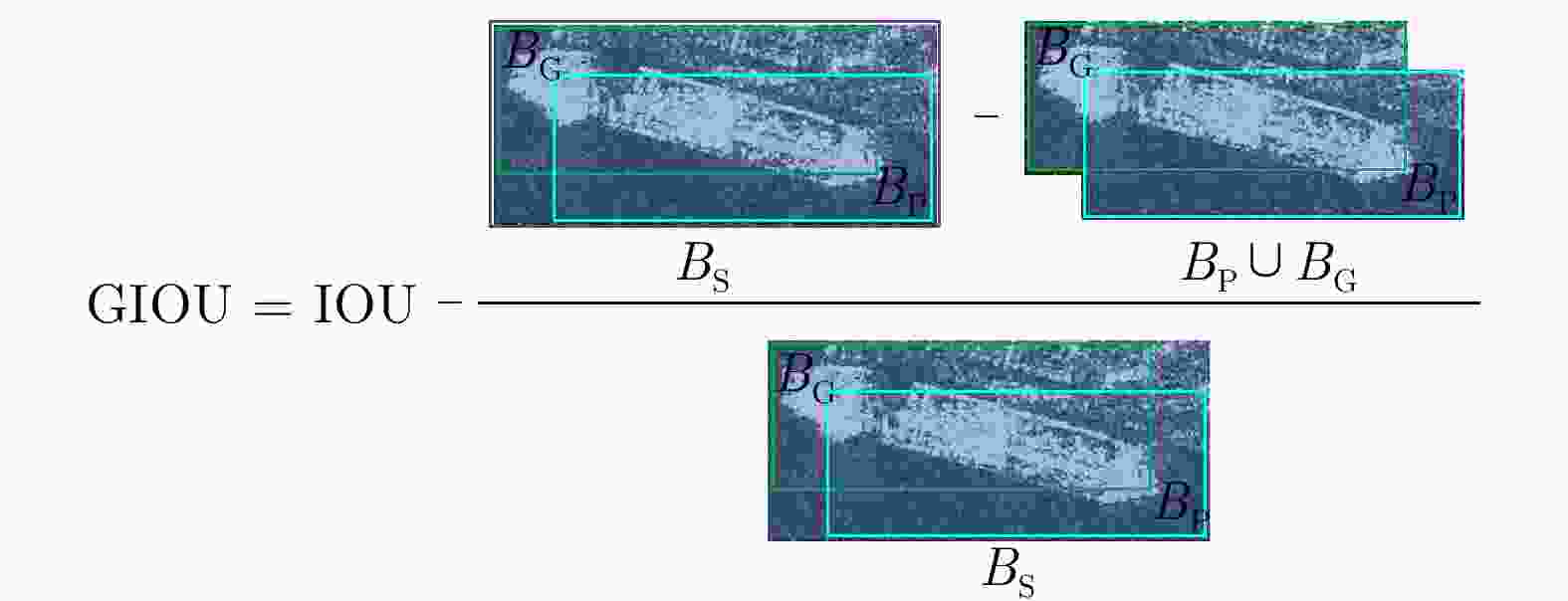

Table 6. Effect of the IOU prediction loss function used in the IOU supervision mechanism on accuracy (%)

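| Loss function | All R | All P | All AP | Inshore R | Inshore P | Inshore AP | Offshore R | Offshore P | Offshore AP |
|---|---|---|---|---|---|---|---|---|---|
| IOU Loss | 92.67 | 89.08 | 91.68 | 78.49 | 79.41 | 74.50 | 99.20 | 93.22 | 98.80 |
| GIOU Loss | 95.05 | 89.18 | 94.05 | 86.05 | 79.57 | 82.04 | 99.20 | 93.69 | 98.83 |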
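Table 6 names the two losses without defining them. The sketch below uses the common definitions $L_{\mathrm{IOU}} = 1 - \mathrm{IOU}$ and $L_{\mathrm{GIOU}} = 1 - \mathrm{GIOU}$. Here $\mathrm{GIOU} = \mathrm{IOU} - |C \setminus (A \cup B)| / |C|$, and $C$ is the smallest box enclosing both boxes. How A3-IOUS-Net applies these losses inside its IOU prediction branch is not described in this section. The function names and the $(x_1, y_1, x_2, y_2)$ box format are assumptions.

```python
def iou_and_giou(a, b):
    """Return (IOU, GIOU) for two boxes (x1, y1, x2, y2) with positive area."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    iou = inter / union
    # Smallest axis-aligned box C enclosing both a and b
    c_area = (max(a[2], b[2]) - min(a[0], b[0])) * (max(a[3], b[3]) - min(a[1], b[1]))
    giou = iou - (c_area - union) / c_area
    return iou, giou


def iou_loss(pred, gt):
    return 1.0 - iou_and_giou(pred, gt)[0]


def giou_loss(pred, gt):
    return 1.0 - iou_and_giou(pred, gt)[1]


pred = (0, 0, 2, 2)
near, far = (3, 0, 5, 2), (6, 0, 8, 2)  # both disjoint from pred
print(iou_loss(pred, near), iou_loss(pred, far))    # both 1.0: no gradient signal
print(giou_loss(pred, near), giou_loss(pred, far))  # about 1.2 and 1.5
```

The example shows the usual argument for GIOU. When a predicted box does not overlap the ground truth, the IOU loss is stuck at 1 no matter how far away the box is. The GIOU loss still grows with distance, which gives poorly localized boxes a useful training signal.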

Table 7. Effect of the coordinate attention module in the IOU supervision mechanism on accuracy (%)

| Coordinate attention | All R | All P | All AP | Inshore R | Inshore P | Inshore AP | Offshore R | Offshore P | Offshore AP |
|---|---|---|---|---|---|---|---|---|---|
| × | 93.59 | 86.32 | 92.74 | 80.81 | 70.20 | 76.64 | 99.47 | 94.42 | 99.24 |
| √ | 95.05 | 89.18 | 94.05 | 86.05 | 79.57 | 82.04 | 99.20 | 93.69 | 98.83 |
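This section does not say where the coordinate attention module sits in the IOU branch or how many channels it uses. The NumPy sketch below therefore shows only the generic coordinate attention operation of [24] on one feature map. It uses random weights and omits bias and BatchNorm. The hard-swish activation is an assumption, and the function name is hypothetical.

```python
import numpy as np


def h_swish(x):
    # Hard-swish nonlinearity (assumed choice for the shared transform)
    return x * np.clip(x + 3.0, 0.0, 6.0) / 6.0


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def coordinate_attention(x, w1, wh, ww):
    """Coordinate attention forward pass on one feature map.

    x  : (C, H, W) feature map
    w1 : (C_mid, C) shared 1x1 conv on the pooled features
    wh : (C, C_mid) 1x1 conv producing the height-direction gate
    ww : (C, C_mid) 1x1 conv producing the width-direction gate
    """
    h = x.shape[1]
    z_h = x.mean(axis=2)  # (C, H): average over the width direction
    z_w = x.mean(axis=1)  # (C, W): average over the height direction
    f = h_swish(w1 @ np.concatenate([z_h, z_w], axis=1))  # (C_mid, H + W)
    g_h = sigmoid(wh @ f[:, :h])  # (C, H): one gate per row
    g_w = sigmoid(ww @ f[:, h:])  # (C, W): one gate per column
    # Reweight every position by its row gate and its column gate
    return x * g_h[:, :, None] * g_w[:, None, :]


rng = np.random.default_rng(0)
x = rng.standard_normal((8, 5, 7))
y = coordinate_attention(
    x, rng.standard_normal((2, 8)), rng.standard_normal((8, 2)), rng.standard_normal((8, 2))
)
print(y.shape)  # (8, 5, 7)
```

The module pools separately along height and along width, so each output gate carries position information along one image axis. Both gates lie in $(0, 1)$, so the module can only attenuate responses. This is consistent with its stated role of suppressing clutter at specific rows and columns, such as harbor and land areas in inshore scenes.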

[1] LIU Fangjian and LI Yuan. SAR remote sensing image ship detection method NanoDet based on visual saliency[J]. Journal of Radars, 2021, 10(6): 885–894. doi: 10.12000/JR21105.

[2] ZHANG Tianwen, ZHANG Xiaoling, KE Xiao, et al. HOG-ShipCLSNet: A novel deep learning network with hog feature fusion for SAR ship classification[J]. IEEE Transactions on Geoscience and Remote Sensing, 2022, 60: 5210322. doi: 10.1109/TGRS.2021.3082759.

[3] ZHANG Tianwen and ZHANG Xiaoling. Injection of traditional hand-crafted features into modern CNN-based models for SAR ship classification: What, why, where, and how[J]. Remote Sensing, 2021, 13(11): 2091. doi: 10.3390/rs13112091.

[4] XU Xiaowo, ZHANG Xiaoling, ZHANG Tianwen, et al. Shadow-background-noise 3D spatial decomposition using sparse low-rank Gaussian properties for video-SAR moving target shadow enhancement[J]. IEEE Geoscience and Remote Sensing Letters, 2022, 19: 4516105. doi: 10.1109/LGRS.2022.3223514.

[5] ZHANG Tianwen and ZHANG Xiaoling. High-speed ship detection in SAR images based on a grid convolutional neural network[J]. Remote Sensing, 2019, 11(10): 1206. doi: 10.3390/rs11101206.

[6] ZHANG Tianwen, ZHANG Xiaoling, SHI Jun, et al. Balance scene learning mechanism for offshore and inshore ship detection in SAR images[J]. IEEE Geoscience and Remote Sensing Letters, 2022, 19: 4004905. doi: 10.1109/LGRS.2020.3033988.

[7] XU Cong'an, SU Hang, LI Jianwei, et al. RSDD-SAR: Rotated ship detection dataset in SAR images[J]. Journal of Radars, 2022, 11(4): 581–599. doi: 10.12000/JR22007.

[8] ZHANG Tianwen, ZHANG Xiaoling, SHI Jun, et al. Depthwise separable convolution neural network for high-speed SAR ship detection[J]. Remote Sensing, 2019, 11(21): 2483. doi: 10.3390/rs11212483.

[9] TANG Gang, ZHUGE Yichao, CLARAMUNT C, et al. N-YOLO: A SAR ship detection using noise-classifying and complete-target extraction[J]. Remote Sensing, 2021, 13(5): 871. doi: 10.3390/rs13050871.

[10] ZHANG Tianwen and ZHANG Xiaoling. HTC+ for SAR ship instance segmentation[J]. Remote Sensing, 2022, 14(10): 2395. doi: 10.3390/rs14102395.

[11] HE Bokun, ZHANG Qingyi, TONG Ming, et al. Oriented ship detector for remote sensing imagery based on pairwise branch detection head and SAR feature enhancement[J]. Remote Sensing, 2022, 14(9): 2177. doi: 10.3390/rs14092177.

[12] XU Xiaowo, ZHANG Xiaoling, and ZHANG Tianwen. Lite-YOLOv5: A lightweight deep learning detector for on-board ship detection in large-scene sentinel-1 SAR images[J]. Remote Sensing, 2022, 14(4): 1018. doi: 10.3390/rs14041018.

[13] ZHANG Tianwen, ZHANG Xiaoling, SHI Jun, et al. HyperLi-Net: A hyper-light deep learning network for high-accurate and high-speed ship detection from synthetic aperture radar imagery[J]. ISPRS Journal of Photogrammetry and Remote Sensing, 2020, 167: 123–153. doi: 10.1016/j.isprsjprs.2020.05.016.

[14] ZHANG Tianwen and ZHANG Xiaoling. A mask attention interaction and scale enhancement network for SAR ship instance segmentation[J]. IEEE Geoscience and Remote Sensing Letters, 2022, 19: 4511005. doi: 10.1109/LGRS.2022.3189961.

[15] XU Xiaowo, ZHANG Xiaoling, SHAO Zikang, et al. A group-wise feature enhancement-and-fusion network with dual-polarization feature enrichment for SAR ship detection[J]. Remote Sensing, 2022, 14(20): 5276. doi: 10.3390/rs14205276.

[16] LI Jianwei, XU Cong'an, SU Hang, et al. Deep learning for SAR ship detection: Past, present and future[J]. Remote Sensing, 2022, 14(11): 2712. doi: 10.3390/rs14112712.

[17] LIN T Y, GOYAL P, GIRSHICK R, et al. Focal loss for dense object detection[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2020, 42(2): 318–327. doi: 10.1109/TPAMI.2018.2858826.

[18] ZHANG Tianwen, ZHANG Xiaoling, KE Xiao, et al. LS-SSDD-v1.0: A deep learning dataset dedicated to small ship detection from large-scale Sentinel-1 SAR images[J]. Remote Sensing, 2020, 12(18): 2997. doi: 10.3390/rs12182997.

[19] KIM K and LEE H S. Probabilistic anchor assignment with IoU prediction for object detection[C]. 16th European Conference on Computer Vision, Glasgow, UK, 2020: 355–371.

[20] REYNOLDS D. Gaussian Mixture Models[M]. LI S Z and JAIN A. Encyclopedia of Biometrics. Boston, USA: Springer, 2009: 659–663.

[21] DEMPSTER A P, LAIRD N M, and RUBIN D B. Maximum likelihood from incomplete data via the EM algorithm[J]. Journal of the Royal Statistical Society: Series B (Methodological), 1977, 39(1): 1–22. doi: 10.1111/j.2517-6161.1977.tb01600.x.

[22] ZHANG Caiguang, XIONG Boli, LI Xiao, et al. TCD: Task-collaborated detector for oriented objects in remote sensing images[J]. IEEE Transactions on Geoscience and Remote Sensing, 2023, 61: 4700714. doi: 10.1109/TGRS.2023.3244953.

[23] ZHANG Tianwen and ZHANG Xiaoling. Squeeze-and-excitation Laplacian pyramid network with dual-polarization feature fusion for ship classification in SAR images[J]. IEEE Geoscience and Remote Sensing Letters, 2022, 19: 4019905. doi: 10.1109/LGRS.2021.3119875.

[24] HOU Qibin, ZHOU Daquan, and FENG Jiashi. Coordinate attention for efficient mobile network design[C]. 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, USA, 2021: 13708–13717.

[25] ZHANG Tianwen, ZHANG Xiaoling, LI Jianwei, et al. SAR ship detection dataset (SSDD): Official release and comprehensive data analysis[J]. Remote Sensing, 2021, 13(18): 3690. doi: 10.3390/rs13183690.

[26] KETKAR N. Introduction to PyTorch[M]. KETKAR N. Deep Learning with Python: A Hands-on Introduction. Berkeley, USA: Apress, 2017: 195–208.

[27] CHEN Kai, WANG Jiaqi, PANG Jiangmiao, et al. MMDetection: Open MMLab detection toolbox and benchmark[J]. arXiv: 1906.07155, 2019.

[28] ZHANG Shifeng, CHI Cheng, YAO Yongqiang, et al. Bridging the gap between anchor-based and anchor-free detection via adaptive training sample selection[C]. 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, USA, 2020: 9756–9765.

[29] ZHU Xizhou, HU Han, LIN S, et al. Deformable ConvNets V2: More deformable, better results[C]. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, USA, 2019: 9300–9308.

[30] LIU Shu, QI Lu, QIN Haifeng, et al. Path aggregation network for instance segmentation[J]. arXiv: 1803.01534, 2018.

[31] REN Shaoqing, HE Kaiming, GIRSHICK R, et al. Faster R-CNN: Towards real-time object detection with region proposal networks[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2017, 39(6): 1137–1149. doi: 10.1109/TPAMI.2016.2577031.

[32] CAI Zhaowei and VASCONCELOS N. Cascade R-CNN: Delving into high quality object detection[C]. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, USA, 2018: 6154–6162.

[33] ZHANG Hongkai, CHANG Hong, MA Bingpeng, et al. Dynamic R-CNN: Towards high quality object detection via dynamic training[C]. 16th European Conference on Computer Vision, Glasgow, UK, 2020: 260–275.

[34] WU Yue, CHEN Yinpeng, YUAN Lu, et al. Rethinking classification and localization for object detection[C]. 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, USA, 2020: 10183–10192.

[35] LIU Ze, LIN Yutong, CAO Yue, et al. Swin transformer: Hierarchical vision transformer using shifted windows[C]. 2021 IEEE/CVF International Conference on Computer Vision, Montreal, Canada, 2021: 9992–10002.

[36] PANG Jiangmiao, CHEN Kai, SHI Jianping, et al. Libra R-CNN: Towards balanced learning for object detection[C]. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, USA, 2019: 821–830.

[37] ZHAO Yan, ZHAO Lingjun, XIONG Boli, et al. Attention receptive pyramid network for ship detection in SAR images[J]. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2020, 13: 2738–2756. doi: 10.1109/JSTARS.2020.2997081.

[38] ZHANG Tianwen, ZHANG Xiaoling, and KE Xiao. Quad-FPN: A novel quad feature pyramid network for SAR ship detection[J]. Remote Sensing, 2021, 13(14): 2771. doi: 10.3390/rs13142771.

[39] WEI Shunjun, SU Hao, MING Jing, et al. Precise and robust ship detection for high-resolution SAR imagery based on HR-SDNet[J]. Remote Sensing, 2020, 12(1): 167. doi: 10.3390/rs12010167.

[40] LIN Zhao, JI Kefeng, LENG Xiangguang, et al. Squeeze and excitation rank faster R-CNN for ship detection in SAR images[J]. IEEE Geoscience and Remote Sensing Letters, 2019, 16(5): 751–755. doi: 10.1109/LGRS.2018.2882551.

[41] VO X T and JO K H. A review on anchor assignment and sampling heuristics in deep learning-based object detection[J]. Neurocomputing, 2022, 506: 96–116. doi: 10.1016/j.neucom.2022.07.003.

[42] SUN Xian, WANG Zhirui, SUN Yuanrui, et al. AIR-SARShip-1.0: High-resolution SAR ship detection dataset[J]. Journal of Radars, 2019, 8(6): 852–862. doi: 10.12000/JR19097.
