[1] BI Zhaoqiang, LI Jian, and LIU Zhengshe. Super resolution SAR imaging via parametric spectral estimation methods[J]. IEEE Transactions on Aerospace and Electronic Systems, 1999, 35(1): 267–281. doi: 10.1109/7.745697.

[2] GUPTA I J, BEALS M J, and MOGHADDAR A. Data extrapolation for high resolution radar imaging[J]. IEEE Transactions on Antennas and Propagation, 1994, 42(11): 1540–1545. doi: 10.1109/8.362783.

[3] BROWN L G. A survey of image registration techniques[J]. ACM Computing Surveys, 1992, 24(4): 325–376. doi: 10.1145/146370.146374.

[4] YANG Siyoung, KIM Y, and JEONG J. Fine edge-preserving technique for display devices[J]. IEEE Transactions on Consumer Electronics, 2008, 54(4): 1761–1769. doi: 10.1109/TCE.2008.4711232.

[5]

[6] YANG Jianchao, WRIGHT J, HUANG T S, et al. Image super-resolution via sparse representation[J]. IEEE Transactions on Image Processing, 2010, 19(11): 2861–2873. doi: 10.1109/TIP.2010.2050625.

[7] DONG Chao, LOY C C, HE Kaiming, et al. Image super-resolution using deep convolutional networks[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2016, 38(2): 295–307. doi: 10.1109/TPAMI.2015.2439281.

[8] KIM J, KWON LEE J, and MU LEE K. Accurate image super-resolution using very deep convolutional networks[C]. The IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, USA, 2016: 1646–1654. doi: 10.1109/CVPR.2016.182.

[9] HE Kaiming, ZHANG Xiangyu, REN Shaoqing, et al. Identity mappings in deep residual networks[C]. The 14th European Conference on Computer Vision, Amsterdam, The Netherlands, 2016: 630–645. doi: 10.1007/978-3-319-46493-0_38.

[10] WANG Longgang, ZHENG Mana, DU Wenbo, et al. Super-resolution SAR image reconstruction via generative adversarial network[C]. 2018 12th International Symposium on Antennas, Propagation and EM Theory, Hangzhou, China, 2018: 1–4.

[11] LI Zhen, YANG Jinglei, LIU Zheng, et al. Feedback network for image super-resolution[C]. The IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, USA, 2019: 3862–3871. doi: 10.1109/CVPR.2019.00399.

[12] KIM J, KWON LEE J, and MU LEE K. Deeply-recursive convolutional network for image super-resolution[C]. The IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, USA, 2016: 1637–1645. doi: 10.1109/CVPR.2016.181.

[13] TAI Ying, YANG Jian, and LIU Xiaoming. Image super-resolution via deep recursive residual network[C]. The IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, USA, 2017: 2790–2798. doi: 10.1109/CVPR.2017.298.

[14] LEDIG C, THEIS L, HUSZÁR F, et al. Photo-realistic single image super-resolution using a generative adversarial network[C]. The IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, USA, 2017: 105–114. doi: 10.1109/CVPR.2017.19.

| [15] |

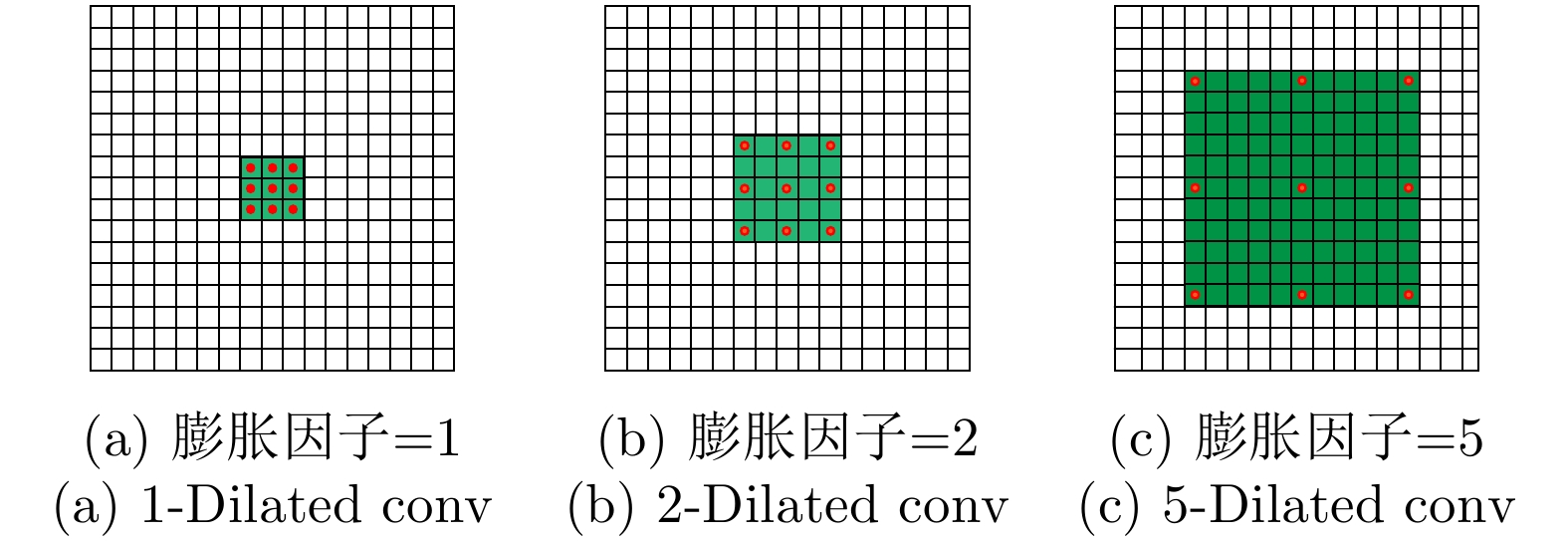

YU F and KOLTUN V. Multi-scale context aggregation by dilated convolutions[J]. arXiv preprint arXiv: 1511.07122, 2015.

|

[16] HUANG Gao, LIU Zhuang, VAN DER MAATEN L, et al. Densely connected convolutional networks[C]. The IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, USA, 2017: 4700–4708. doi: 10.1109/CVPR.2017.243.

[17] BENGIO Y, LECUN Y, NOHL C, et al. LeRec: A NN/HMM hybrid for on-line handwriting recognition[J]. Neural Computation, 1995, 7(6): 1289–1303. doi: 10.1162/neco.1995.7.6.1289.

[18] SIMONYAN K and ZISSERMAN A. Very deep convolutional networks for large-scale image recognition[J]. arXiv preprint arXiv: 1409.1556, 2014.

[19] JOHNSON J, ALAHI A, and LI Feifei. Perceptual losses for real-time style transfer and super-resolution[C]. The 14th European Conference on Computer Vision, Amsterdam, The Netherlands, 2016: 694–711. doi: 10.1007/978-3-319-46475-6_43.

[20] ZHANG Qiang, YUAN Qiangqiang, LI Jie, et al. Learning a dilated residual network for SAR image despeckling[J]. Remote Sensing, 2018, 10(2): 196. doi: 10.3390/rs10020196.

[21] WANG Panqu, CHEN Pengfei, YUAN Ye, et al. Understanding convolution for semantic segmentation[C]. 2018 IEEE Winter Conference on Applications of Computer Vision, Lake Tahoe, USA, 2018: 1451–1460. doi: 10.1109/WACV.2018.00163.

[22] SHI Wenzhe, CABALLERO J, HUSZÁR F, et al. Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network[C]. The IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, USA, 2016: 1874–1883. doi: 10.1109/CVPR.2016.207.

[23] ZEILER M D, TAYLOR G W, and FERGUS R. Adaptive deconvolutional networks for mid and high level feature learning[C]. 2011 International Conference on Computer Vision, Barcelona, Spain, 2011: 2018–2025. doi: 10.1109/ICCV.2011.6126474.

[24] NAIR V and HINTON G E. Rectified linear units improve restricted Boltzmann machines[C]. The 27th International Conference on Machine Learning, Haifa, Israel, 2010: 807–814.

[25] 王振. 基于学习策略的SAR图像超分辨[D]. [硕士论文], 西安电子科技大学, 2018.

WANG Zhen. SAR image super resolution based on learning strategy[D]. [Master dissertation], Xidian University, 2018.

[26] WANG Qiang and BI Sheng. Prediction of the PSNR quality of decoded images in fractal image coding[J]. Mathematical Problems in Engineering, 2016, 2016: 2159703.

[27] WANG Zhou, BOVIK A C, SHEIKH H R, et al. Image quality assessment: From error visibility to structural similarity[J]. IEEE Transactions on Image Processing, 2004, 13(4): 600–612. doi: 10.1109/TIP.2003.819861.

[28] 唐伶俐, 江平, 戴昌达, 等. 星载SAR图象斑点噪声消除方法效果的比较研究[J]. 环境遥感, 1996, 11(3): 206–211.

TANG Lingli, JIANG Ping, DAI Changda, et al. Evaluation of smoothing filters suppressing speckle noise on SAR images[J]. Remote Sensing of Environment China, 1996, 11(3): 206–211.
