Maritime Multimodal Data Resource System—Infrared-visible Dual-modal Dataset for Ship Detection


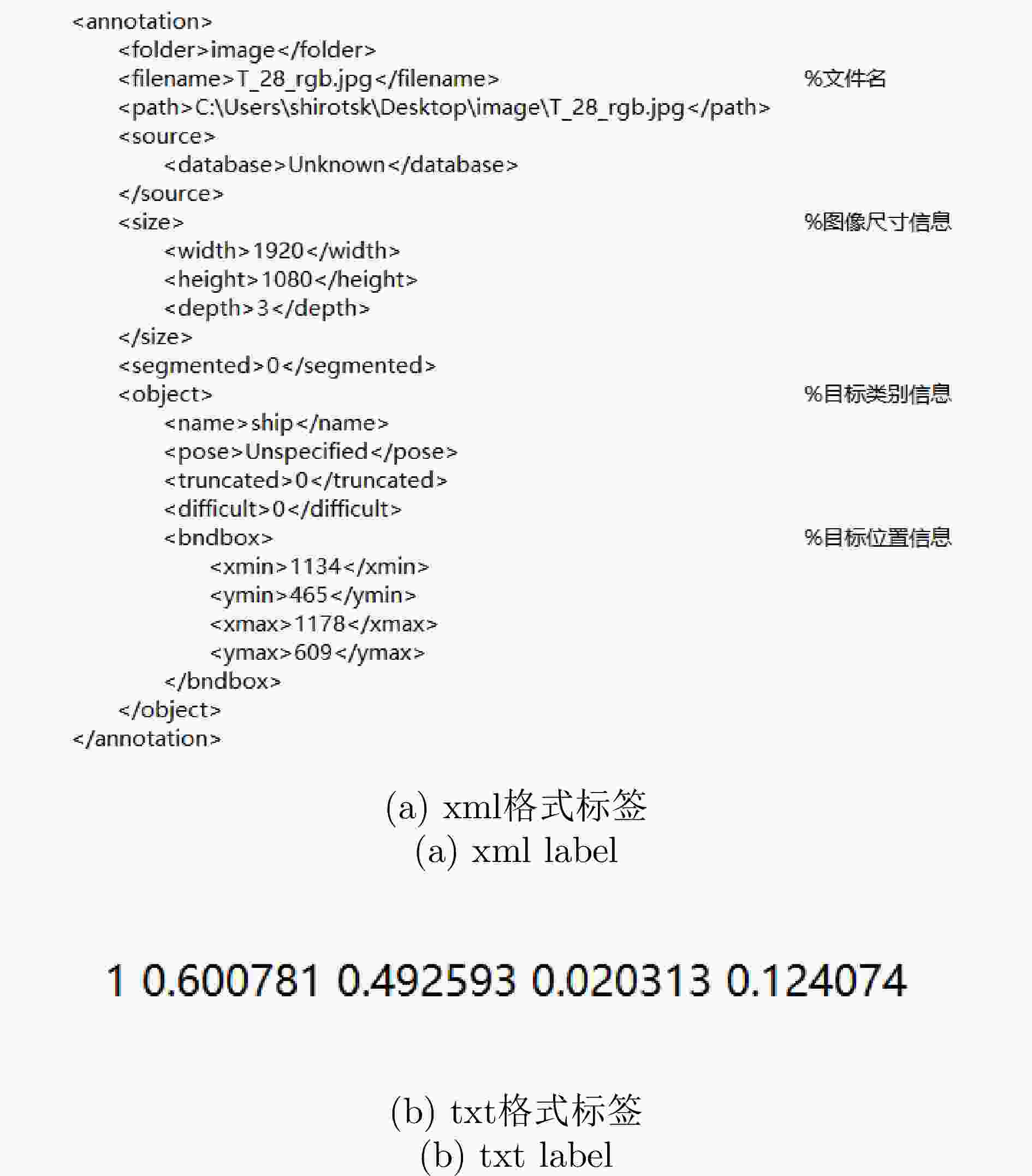

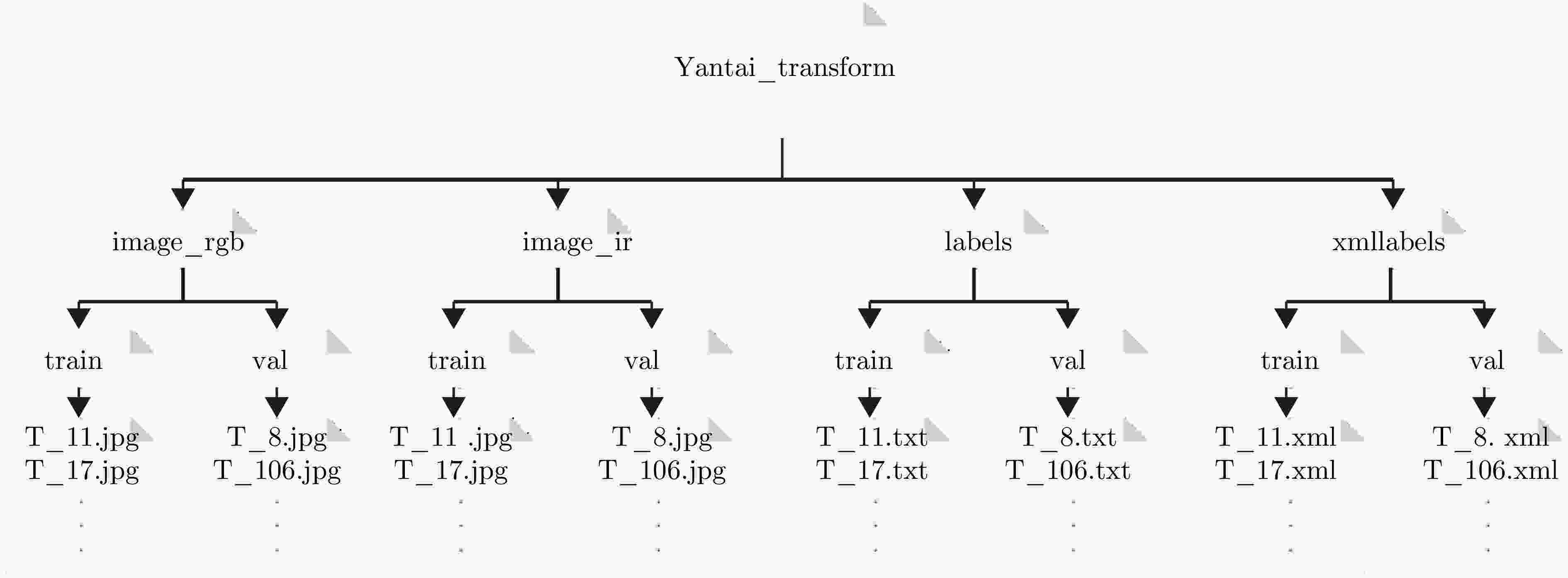

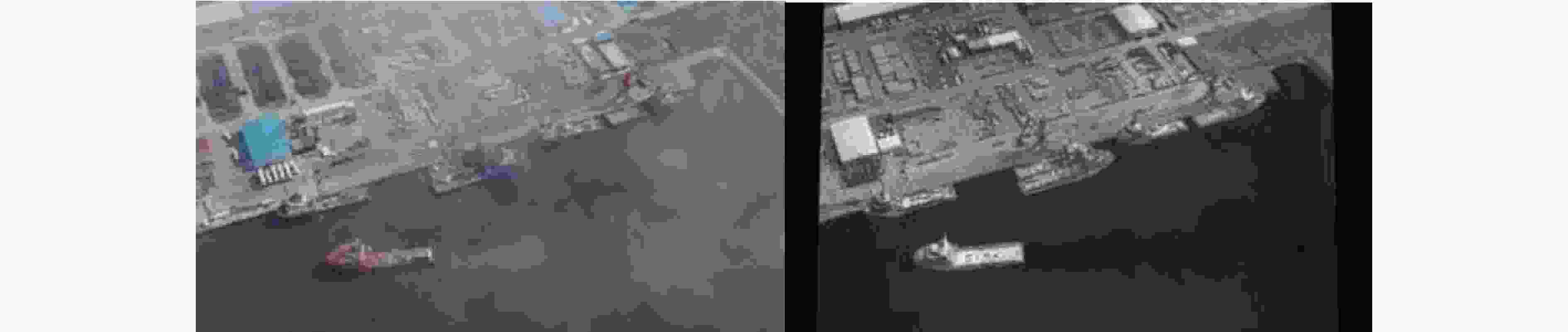

摘要: 海上多模态数据资源体系是支撑雷达、合成孔径雷达(SAR)、光电等多传感器协同探测,进而实现目标精细感知的基础,对推动算法落地应用、提高海上目标监视能力具有重要意义。为此,以渤海某港口附近海域为试验区域,利用岸基、空基等平台搭载的SAR、雷达、可见光、红外摄像头等设备,采集海上目标多源数据,并通过自动关联配准与人工修正相结合的方式进行标注,针对不同任务特点整编形成了多个多模态关联数据集,以期构建面向任务的海上多模态数据资源体系。本文所发布多模态船舶图像数据集(DMSD)是该体系的重要组成部分,共包含可见光与红外两类模态图像

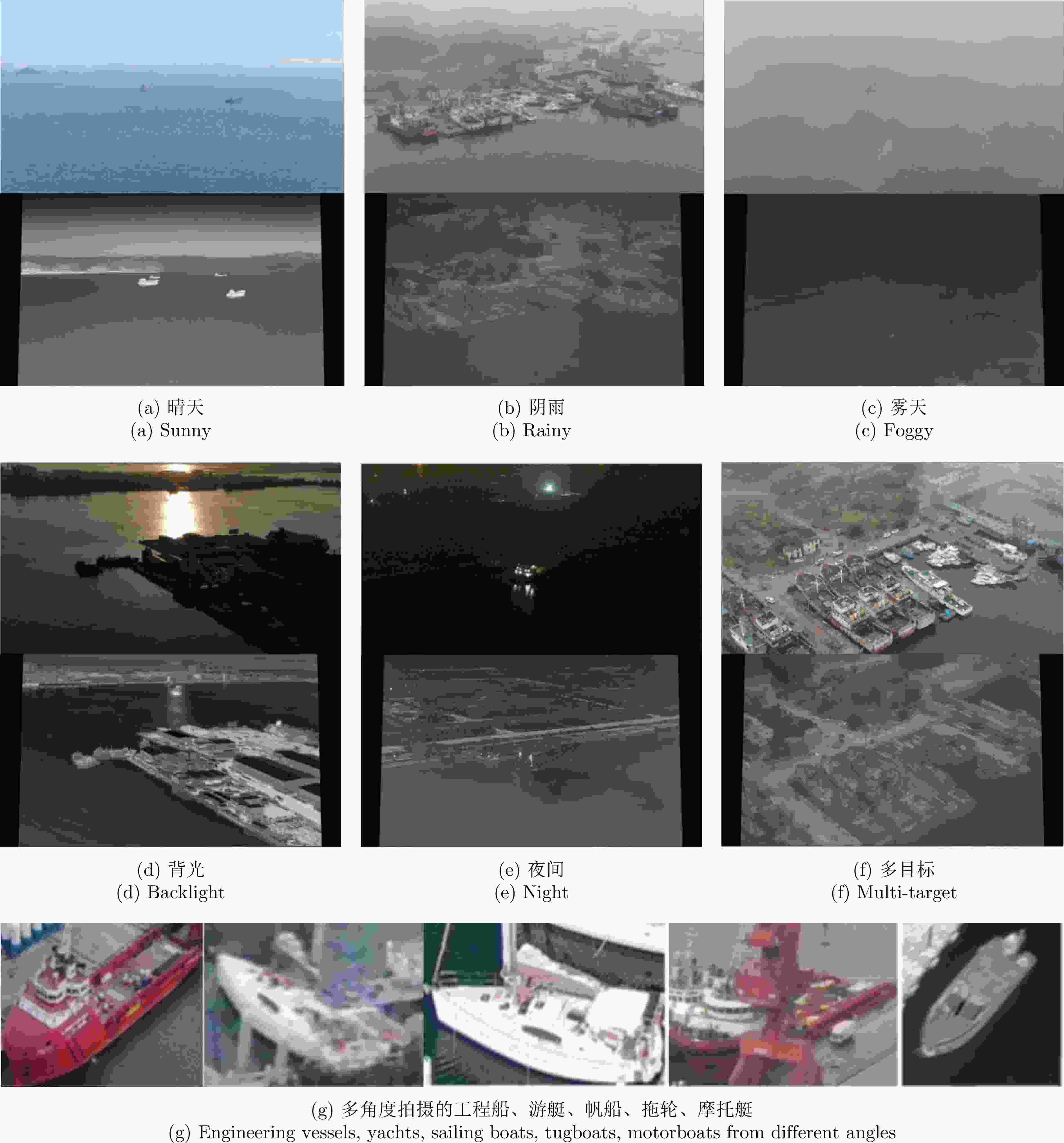

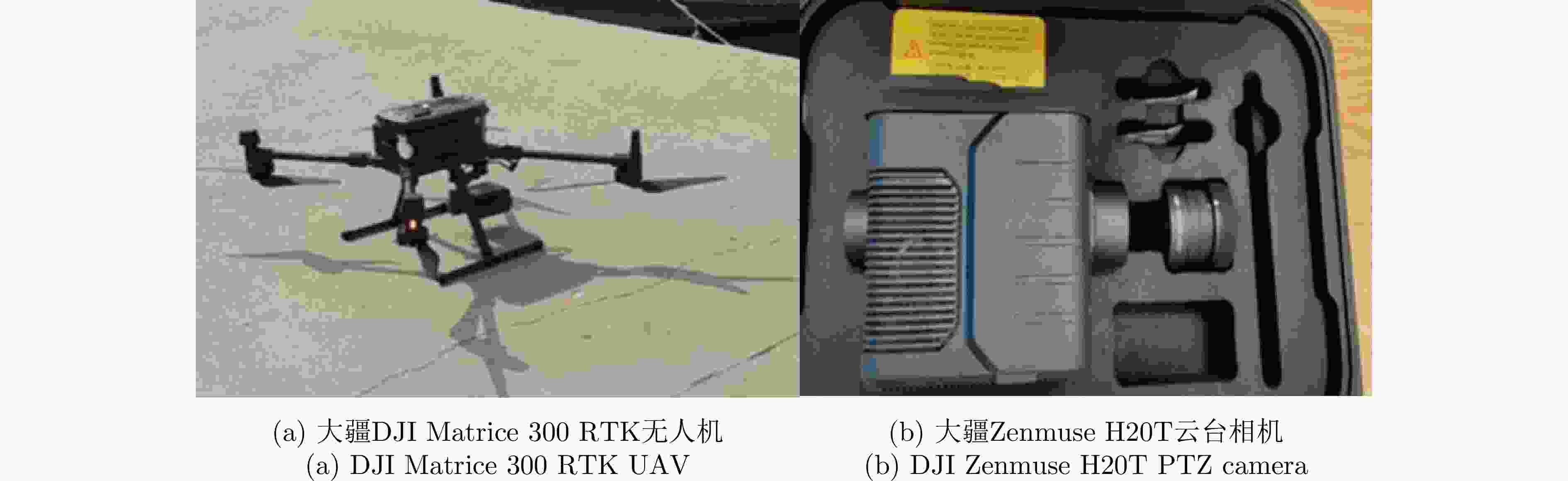

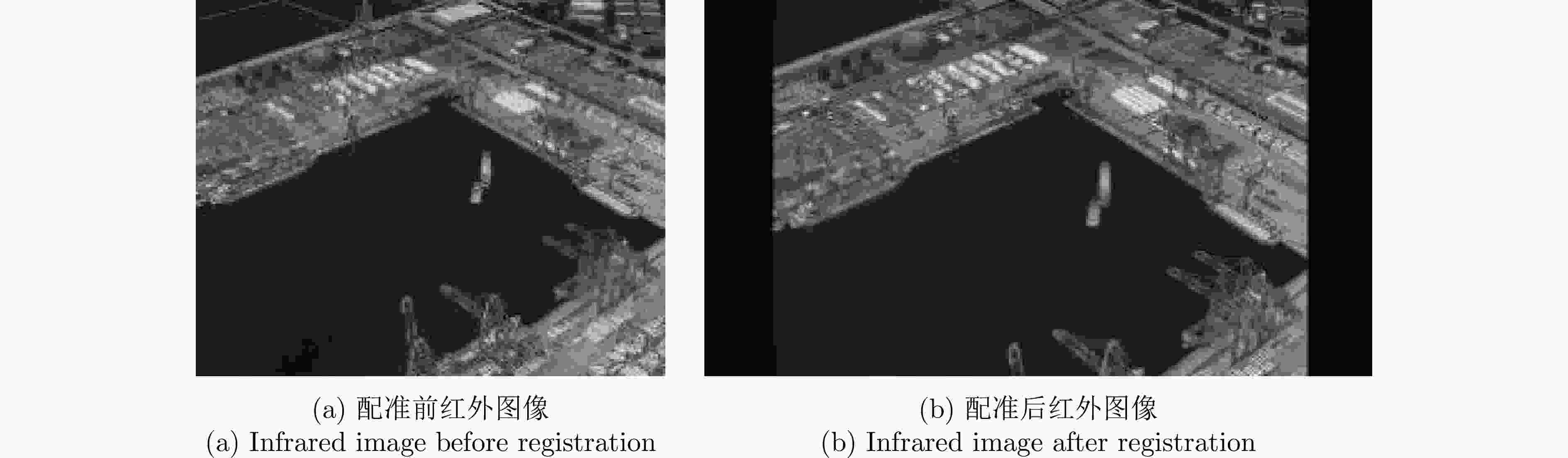

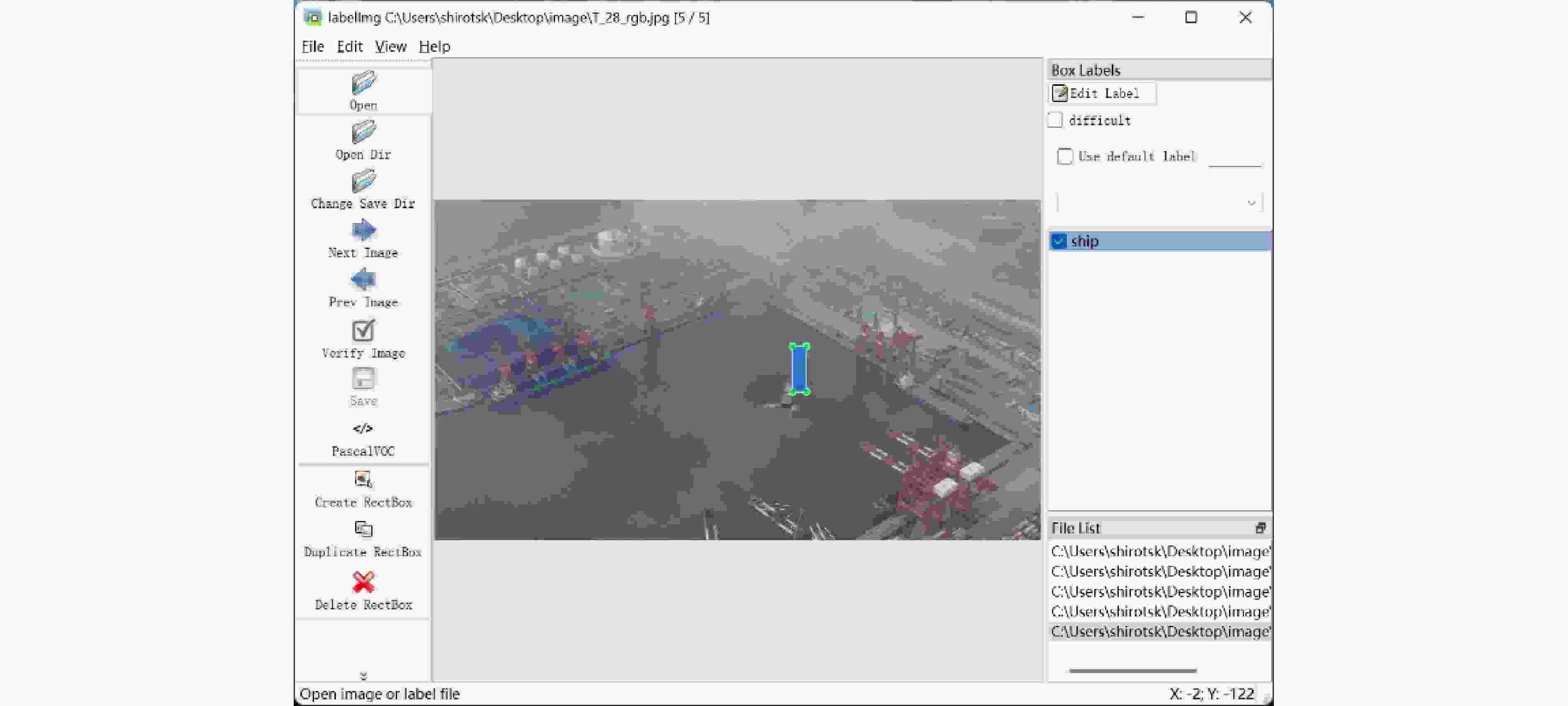

2163 对,涵盖云雨雾、逆光等多种条件,且通过仿射变换实现了模态间的图像配准。基于该数据集,该文在YOLO, CFT等算法上进行了实验验证,实验结果表明,该文数据集在YOLOv8算法上mAP50约为0.65,CFT算法上mAP50约为0.63,能够支撑相关学者开展双模态融合策略优化、复杂场景鲁棒性提升等研究。Abstract: A maritime multimodal data resource system provides a foundation for multisensor collaborative detection using radar, Synthetic Aperture Radar (SAR), and electro-optical sensors, enabling fine-grained target perception. Such systems are essential for advancing the practical application of detection algorithms and improving maritime target surveillance capabilities. To this end, this study constructs a maritime multimodal data resource system using multisource data collected from the sea area near a port in the Bohai Sea. Data were acquired using SAR, radar, visible-light cameras, infrared cameras, and other sensors mounted on shore-based and airborne platforms. The data were labeled by performing automatic correlation registration and manual correction. According to the requirements of different tasks, multiple task-oriented multimodal associated datasets were compiled. This paper focuses on one subset of the overall resource system, namely the Dual-Modal Ship Detection, which consists exclusively of visible-light and infrared image pairs. The dataset contains2163 registered image pairs, with intermodal alignment achieved through an affine transformation. All images were collected in real maritime environments and cover diverse sea conditions and backgrounds, including cloud, rain, fog, and backlighting. The dataset was evaluated using representative algorithms, including YOLO and CFT. Experimental results show that the dataset achieves an mAP@50 of approximately 0.65 with YOLOv8 and 0.63 with CFT, demonstrating its effectiveness in supporting research on optimizing bimodal fusion strategies and enhancing detection robustness in complex maritime scenarios.-
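The abstract states that the two modalities are registered by affine transformation but gives no parameters. As a minimal sketch of what such a registration involves, the code below solves for the six affine coefficients from three point correspondences and then maps an infrared bounding box into visible-image coordinates. The control points, the box, and all function names are hypothetical values chosen for illustration; they are not taken from the dataset or the paper.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Affine = Tuple[List[float], List[float]]


def det3(m: Sequence[Sequence[float]]) -> float:
    """Determinant of a 3x3 matrix."""
    return (
        m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
        - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
        + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0])
    )


def solve3(a: Sequence[Sequence[float]], b: Sequence[float]) -> List[float]:
    """Solve the 3x3 linear system a @ x = b with Cramer's rule."""
    d = det3(a)
    if abs(d) < 1e-12:
        raise ValueError("control points are collinear")
    solution = []
    for col in range(3):
        m = [list(row) for row in a]
        for r in range(3):
            m[r][col] = b[r]
        solution.append(det3(m) / d)
    return solution


def estimate_affine(src: Sequence[Point], dst: Sequence[Point]) -> Affine:
    """Return rows (a, b, c) and (d, e, f) with x' = a*x + b*y + c, y' = d*x + e*y + f."""
    a = [[x, y, 1.0] for x, y in src]
    return solve3(a, [p[0] for p in dst]), solve3(a, [p[1] for p in dst])


def apply_affine(affine: Affine, pt: Point) -> Point:
    (a, b, c), (d, e, f) = affine
    x, y = pt
    return (a * x + b * y + c, d * x + e * y + f)


def warp_box(affine: Affine, box: Tuple[float, float, float, float]):
    """Map an axis-aligned box (x1, y1, x2, y2); return the enclosing axis-aligned box."""
    x1, y1, x2, y2 = box
    corners = [apply_affine(affine, p) for p in [(x1, y1), (x2, y1), (x2, y2), (x1, y2)]]
    xs = [p[0] for p in corners]
    ys = [p[1] for p in corners]
    return (min(xs), min(ys), max(xs), max(ys))


# Hypothetical control points: infrared (640x512) -> visible (1920x1080).
IR_POINTS = [(0.0, 0.0), (640.0, 0.0), (0.0, 512.0)]
VIS_POINTS = [(320.0, 28.0), (1600.0, 28.0), (320.0, 1052.0)]

AFFINE = estimate_affine(IR_POINTS, VIS_POINTS)
VIS_BOX = warp_box(AFFINE, (100.0, 200.0, 180.0, 240.0))
```

With more than three correspondences, a least-squares fit would normally replace the exact solve used here; three points are used only to keep the sketch dependency-free.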

Key words: Ship dataset; Public dataset; Multimodal data resource system; Ship detection; Deep learning

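Table 1. Statistics of selected public datasets

| Dataset | Instances | Images | Image resolution | Modality | Target type |
| --- | --- | --- | --- | --- | --- |
| HRSC2016 | 2976 | 1070 | 300×300 to 1500×900 | Visible light | Ships |
| ShipRSImageNet | 3435 | 3435 | 930×930 | Visible light | Ships |
| FGSD | 5634 | 2612 | 930×930 | Visible light | Ships |
| ISDD | 3061 | 1284 | 768×512 to 5056×5056 | Infrared | Ships |
| MassMIND | 22364 | 2900 | 640×512 | Infrared | Ships |
| TNO Image Fusion Dataset | 261 | 261 pairs | 640×480 or 720×576 | Dual-modal | Urban scenes |
| LLVIP | approx. 14000 | 30976 | 1920×1080, 1280×720 | Dual-modal | Pedestrians |
| DMSD (this paper) | 19567 | 2163 pairs | 1920×1080, 640×512 | Dual-modal | Ships |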

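Table 2. Parameters of the visible-light and thermal imaging cameras

| Parameter | Visible-light camera | Thermal imaging camera |
| --- | --- | --- |
| Resolution (pixels) | 1920×1080 | 640×512 |
| Field of view (°) | 66.6 to 4 | 40.6 |
| Frame rate (fps) | 30 | 30 |
| Lens focal length (mm) | 6.83 to 119.94 | 13.5 |
| Lens aperture | f/2.8 to f/11 | f/1.0 |
| Wavelength range (μm) | Visible light | 8 to 14 |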
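Table 2 lists both focal length and field of view for each camera. Under an ideal pinhole model these are linked by FOV = 2·atan(w / 2f), where w is the sensor width. The sketch below inverts that relation to see what sensor width each table entry implies. It assumes that the listed angles are horizontal fields of view, that 66.6° pairs with the 6.83 mm end of the zoom range and 4° with the 119.94 mm end, and that lens distortion is negligible; the table itself states none of these, so the output is a plausibility check rather than a camera specification.

```python
import math


def implied_sensor_width_mm(focal_mm: float, fov_deg: float) -> float:
    """Sensor width implied by a focal length and a full field-of-view angle."""
    return 2.0 * focal_mm * math.tan(math.radians(fov_deg) / 2.0)


def fov_deg(sensor_width_mm: float, focal_mm: float) -> float:
    """Full field-of-view angle of a pinhole camera, in degrees."""
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_mm)))


# (focal length in mm, field of view in degrees) taken from Table 2.
# The pairing of zoom ends for the visible-light camera is an assumption.
CAMERAS = {
    "visible, wide end": (6.83, 66.6),
    "visible, tele end": (119.94, 4.0),
    "thermal": (13.5, 40.6),
}

WIDTHS = {name: implied_sensor_width_mm(f, fov) for name, (f, fov) in CAMERAS.items()}

for name, width in WIDTHS.items():
    print(f"{name}: implied sensor width = {width:.2f} mm")
```

Under these assumptions the two ends of the visible-light zoom range imply sensor widths of roughly 9.0 mm and 8.4 mm. The two values are close, which suggests the focal-length and field-of-view entries are mutually consistent to within what a simple pinhole model can capture.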

Table 3. Experimental results of different algorithms

| Model | Precision | Recall | mAP50 | mAP50-95 | Inference speed (fps) |
| --- | --- | --- | --- | --- | --- |
| YOLOv5n | 0.657 | 0.509 | 0.532 | 0.190 | 26.88 |
| CFT (YOLOv5l) | 0.705 | 0.644 | 0.635 | 0.216 | 17.25 |
| CFT (YOLOv5s) | 0.725 | 0.502 | 0.624 | 0.263 | 25.75 |
| FFODNet | 0.730 | 0.536 | 0.636 | 0.269 | 16.25 |
| SuperYOLO | 0.699 | 0.627 | 0.604 | 0.203 | 17.50 |
| YOLOv8n | 0.753 | 0.529 | 0.654 | 0.280 | 23.25 |
| YOLOv8x | 0.724 | 0.549 | 0.646 | 0.279 | 18.75 |
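Table 3 reports mAP50 and mAP50-95. In the usual detection-benchmark convention, mAP50 counts a prediction as correct when its intersection over union (IoU) with a ground-truth box is at least 0.5, while mAP50-95 averages over the thresholds 0.50, 0.55, ..., 0.95. The following sketch shows the IoU test that sits underneath both metrics. The boxes are made-up examples, and the evaluation code actually used for Table 3 is not described in this text.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), with x2 > x1 and y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(pred: Box, truth: Box, threshold: float = 0.5) -> bool:
    """A prediction matches a ground-truth box when IoU reaches the threshold."""
    return iou(pred, truth) >= threshold


# The ten IoU thresholds used by the mAP50-95 convention: 0.50, 0.55, ..., 0.95.
THRESHOLDS = [0.5 + 0.05 * i for i in range(10)]

# Hypothetical ground-truth and predicted boxes, for illustration only.
TRUTH: Box = (0.0, 0.0, 10.0, 10.0)
PRED: Box = (2.0, 0.0, 12.0, 10.0)

PASSED = sum(is_true_positive(PRED, TRUTH, t) for t in THRESHOLDS)
```

A prediction that is slightly offset, as in this example, can count as correct at the 0.5 threshold yet fail the stricter ones, which is one reason mAP50-95 values in Table 3 are much lower than the corresponding mAP50 values.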

Table 4. Experimental results of different modalities under the same algorithm

| Model | Visible-light mAP50 | Infrared mAP50 | Dual-modal mAP50 |
| --- | --- | --- | --- |
| YOLOv5n | 0.489 | 0.602 | 0.532 |
| YOLOv8n | 0.531 | 0.657 | 0.654 |
| SuperYOLO | 0.450 | 0.607 | 0.604 |
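One way to read Table 4 is to compare each model's dual-modal mAP50 with its single-modality scores. The short sketch below does this arithmetic on the values copied from the table. The "gain" quantities and function names are defined here for illustration and are not metrics reported by the authors.

```python
from typing import Dict, Tuple

# mAP50 values copied from Table 4: (visible light, infrared, dual-modal).
TABLE4: Dict[str, Tuple[float, float, float]] = {
    "YOLOv5n": (0.489, 0.602, 0.532),
    "YOLOv8n": (0.531, 0.657, 0.654),
    "SuperYOLO": (0.450, 0.607, 0.604),
}


def fusion_gain(visible: float, infrared: float, dual: float) -> float:
    """Dual-modal mAP50 minus the better of the two single-modality scores."""
    return dual - max(visible, infrared)


def summarize(table: Dict[str, Tuple[float, float, float]]) -> Dict[str, dict]:
    """Per-model comparison of dual-modal mAP50 against each single modality."""
    rows = {}
    for model, (vis, ir, dual) in table.items():
        rows[model] = {
            "best_single": "infrared" if ir >= vis else "visible",
            "gain_over_visible": round(dual - vis, 3),
            "gain_over_best_single": round(fusion_gain(vis, ir, dual), 3),
        }
    return rows


SUMMARY = summarize(TABLE4)

for model, row in SUMMARY.items():
    print(model, row)
```

On these numbers, dual-modal input improves on visible-light input for every model, while the infrared-only score is slightly higher than the dual-modal score in all three rows. That gap is consistent with the abstract's framing of fusion-strategy optimization as an open research direction for this dataset.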

[1] LIU Zikun, YUAN Liu, WENG Lubin, et al. A high resolution optical satellite image dataset for ship recognition and some new baselines[C]. The 6th International Conference on Pattern Recognition Applications and Methods (ICPRAM 2017), Porto, Portugal, 2017: 324–331. doi: 10.5220/0006120603240331.

[2] ZHANG Zhengning, ZHANG Lin, WANG Yue, et al. ShipRSImageNet: A large-scale fine-grained dataset for ship detection in high-resolution optical remote sensing images[J]. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2021, 14: 8458–8472. doi: 10.1109/JSTARS.2021.3104230.

[3] CHEN Kaiyan, WU Ming, LIU Jiaming, et al. FGSD: A dataset for fine-grained ship detection in high resolution satellite images[EB/OL]. https://arxiv.org/abs/2003.06832, 2020.

[4] HAN Yaqi, LIAO Jingwen, LU Tianshu, et al. KCPNet: Knowledge-driven context perception networks for ship detection in infrared imagery[J]. IEEE Transactions on Geoscience and Remote Sensing, 2023, 61: 5000219. doi: 10.1109/TGRS.2022.3233401.

[5] NIRGUDKAR S, DEFILIPPO M, SACARNY M, et al. MassMIND: Massachusetts maritime INfrared dataset[J]. The International Journal of Robotics Research, 2023, 42(1/2): 21–32. doi: 10.1177/02783649231153020.

[6] TOET A. TNO image fusion dataset[EB/OL]. https://figshare.com/articles/dataset/TNO_Image_Fusion_Dataset/1008029, 2022.

[7] JIA Xinyu, ZHU Chuang, LI Minzhen, et al. LLVIP: A visible-infrared paired dataset for low-light vision[C]. IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), Montreal, Canada, 2021: 3489–3497. doi: 10.1109/ICCVW54120.2021.00389.

[8] LI Yiming, LI Zhiheng, CHEN Nuo, et al. Multiagent multitraversal multimodal self-driving: Open MARS dataset[C]. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, USA, 2024: 22041–22051. doi: 10.1109/CVPR52733.2024.02081.

[9] HWANG S, PARK J, KIM N, et al. Multispectral pedestrian detection: Benchmark dataset and baseline[C]. The 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, USA, 2015: 1037–1045. doi: 10.1109/CVPR.2015.7298706.

[10] SU Li, CUI Shihao, and ZHANG Wen. An algorithm for enhancing low-light images at sea based on improved dark channel priors[J]. Journal of Naval Aviation University, 2024, 39(5): 576–586. doi: 10.7682/j.issn.2097-1427.2024.05.007.

[11] ZENG Wenfeng, LI Shushan, and WANG Jiang’an. Translation, rotation and scaling changes in image registration based affine transformation model[J]. Infrared and Laser Engineering, 2001, 30(1): 18–20, 17. doi: 10.3969/j.issn.1007-2276.2001.01.006.

[12] YU Lekai, CAO Zheng, SUN Yanli, et al. Visible and infrared images matching method for maritime ship targets[J]. Journal of Naval Aviation University, 2024, 39(6): 755–764, 772. doi: 10.7682/j.issn.2097-1427.2024.06.013.

[13] GOYAL P, DOLLÁR P, GIRSHICK R, et al. Accurate, large minibatch SGD: Training ImageNet in 1 hour[EB/OL]. https://arxiv.org/abs/1706.02677, 2018.

[14] FANG Qingyun, HAN Dapeng, WANG Zhaokui. Cross-modality fusion transformer for multispectral object detection[EB/OL]. https://arxiv.org/abs/2111.00273, 2022.

[15] BOCHKOVSKIY A, WANG C Y, LIAO H Y M. YOLOv4: Optimal speed and accuracy of object detection[EB/OL]. https://arxiv.org/abs/2004.10934, 2020.

[16] WANG Jinpeng, XU Cong’an, ZHAO Chunhui, et al. Multimodal object detection of UAV remote sensing based on joint representation optimization and specific information enhancement[J]. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2024, 17: 12364–12373. doi: 10.1109/JSTARS.2024.3373816.

[17] ZHANG Jiaqing, LEI Jie, XIE Weiying, et al. SuperYOLO: Super resolution assisted object detection in multimodal remote sensing imagery[J]. IEEE Transactions on Geoscience and Remote Sensing, 2023, 61: 5605415. doi: 10.1109/TGRS.2023.3258666.
