
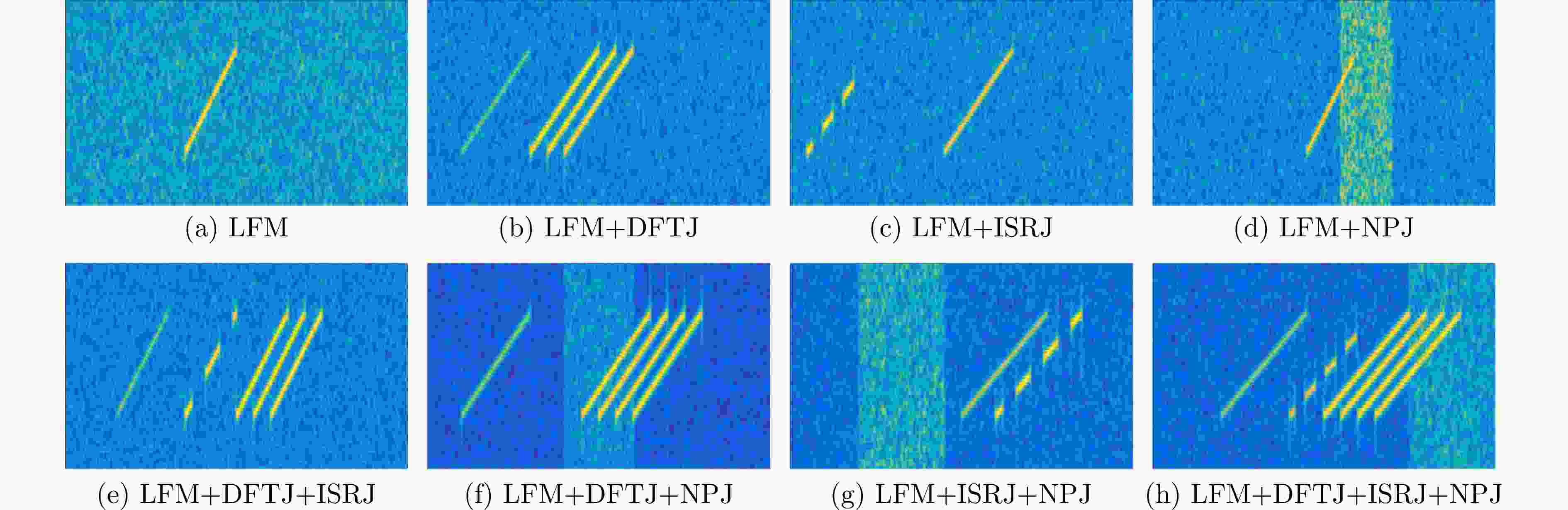

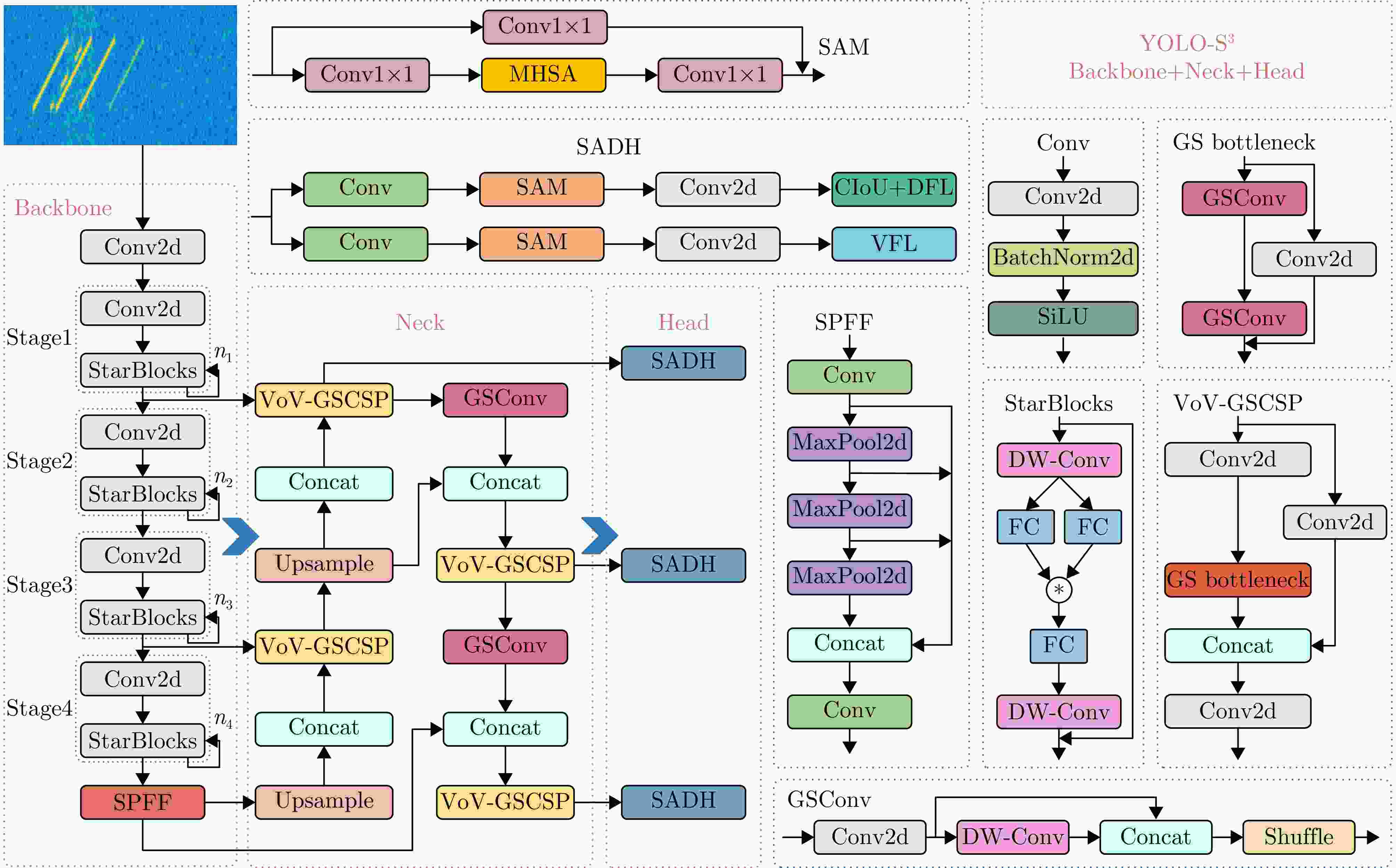

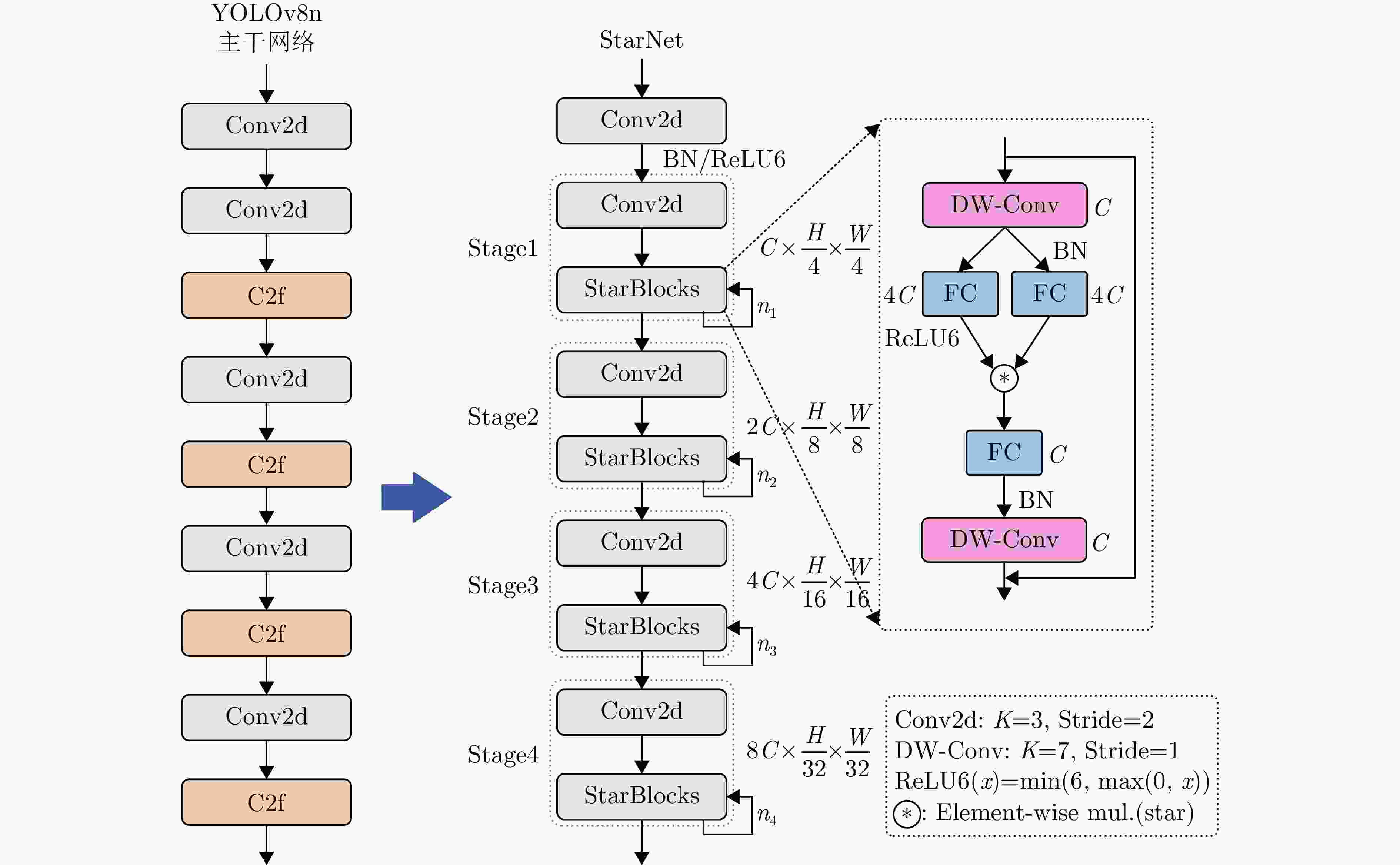

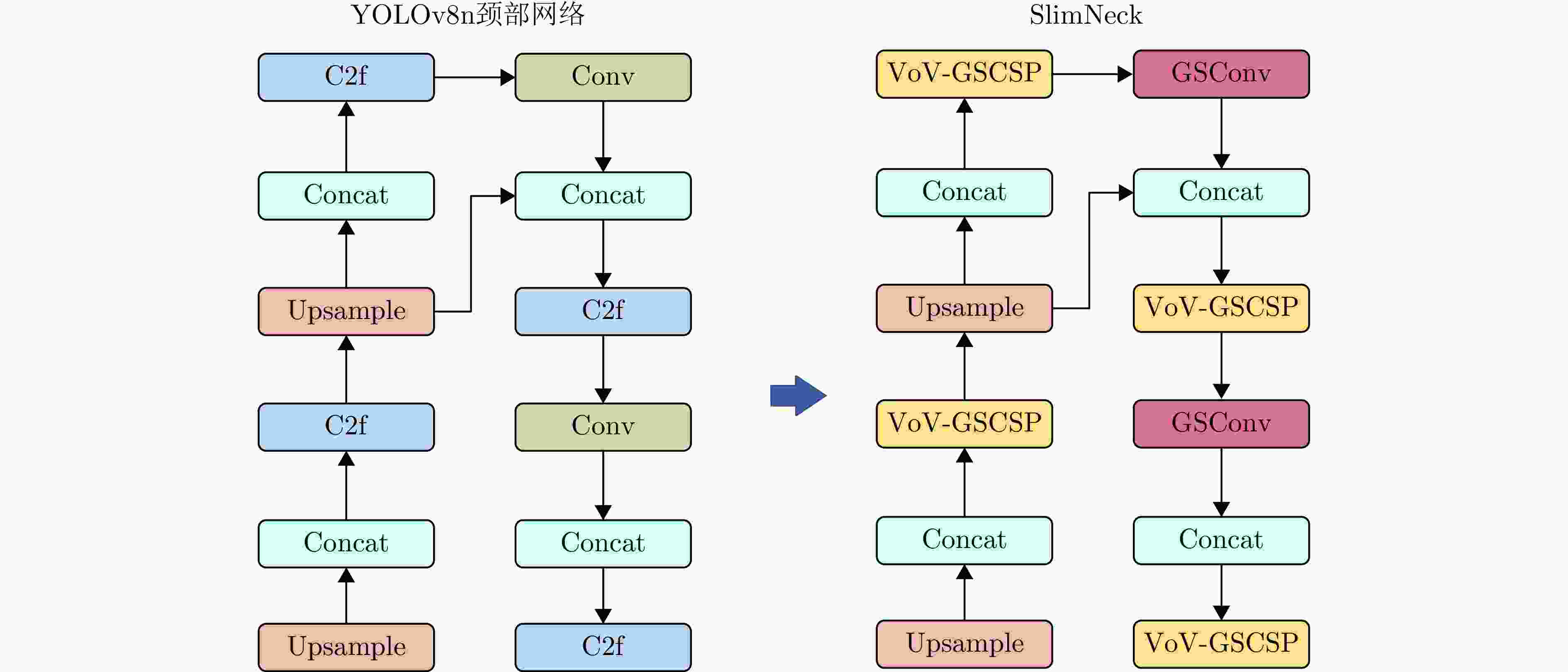

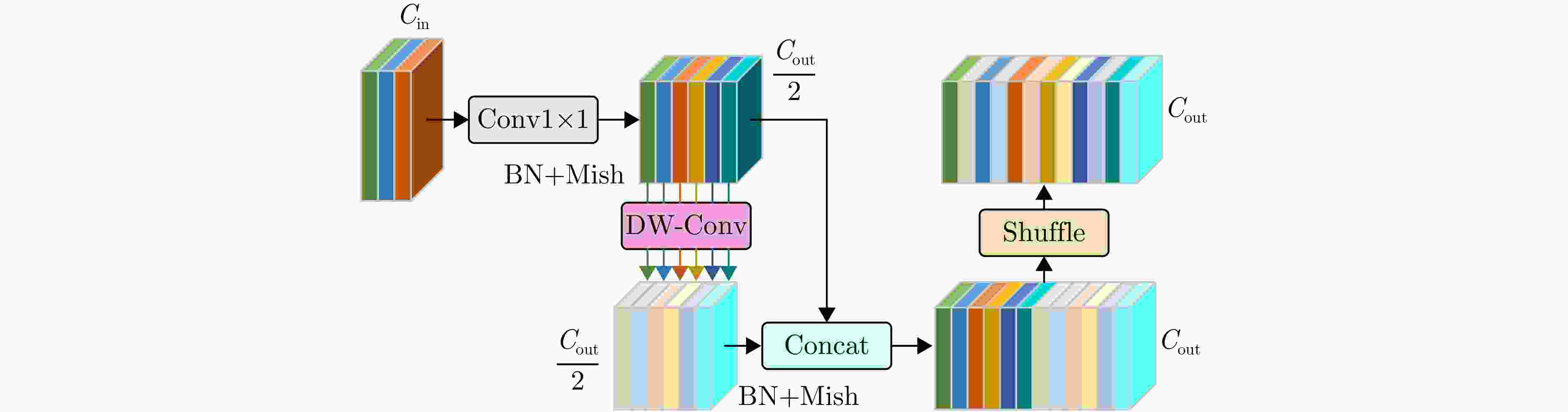

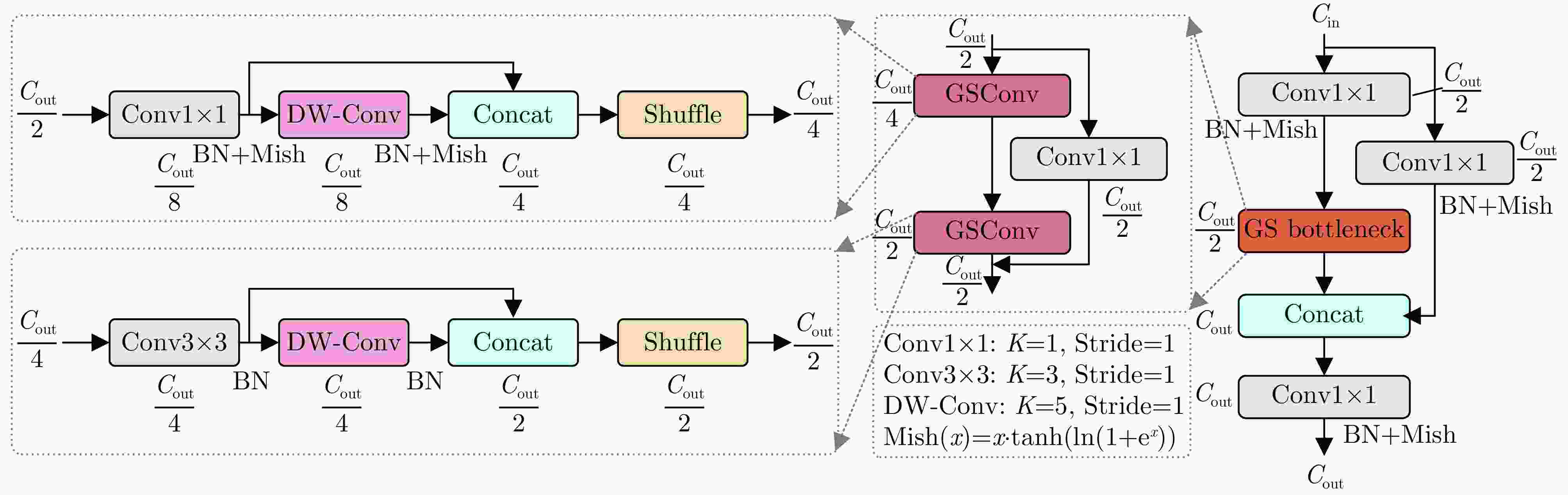

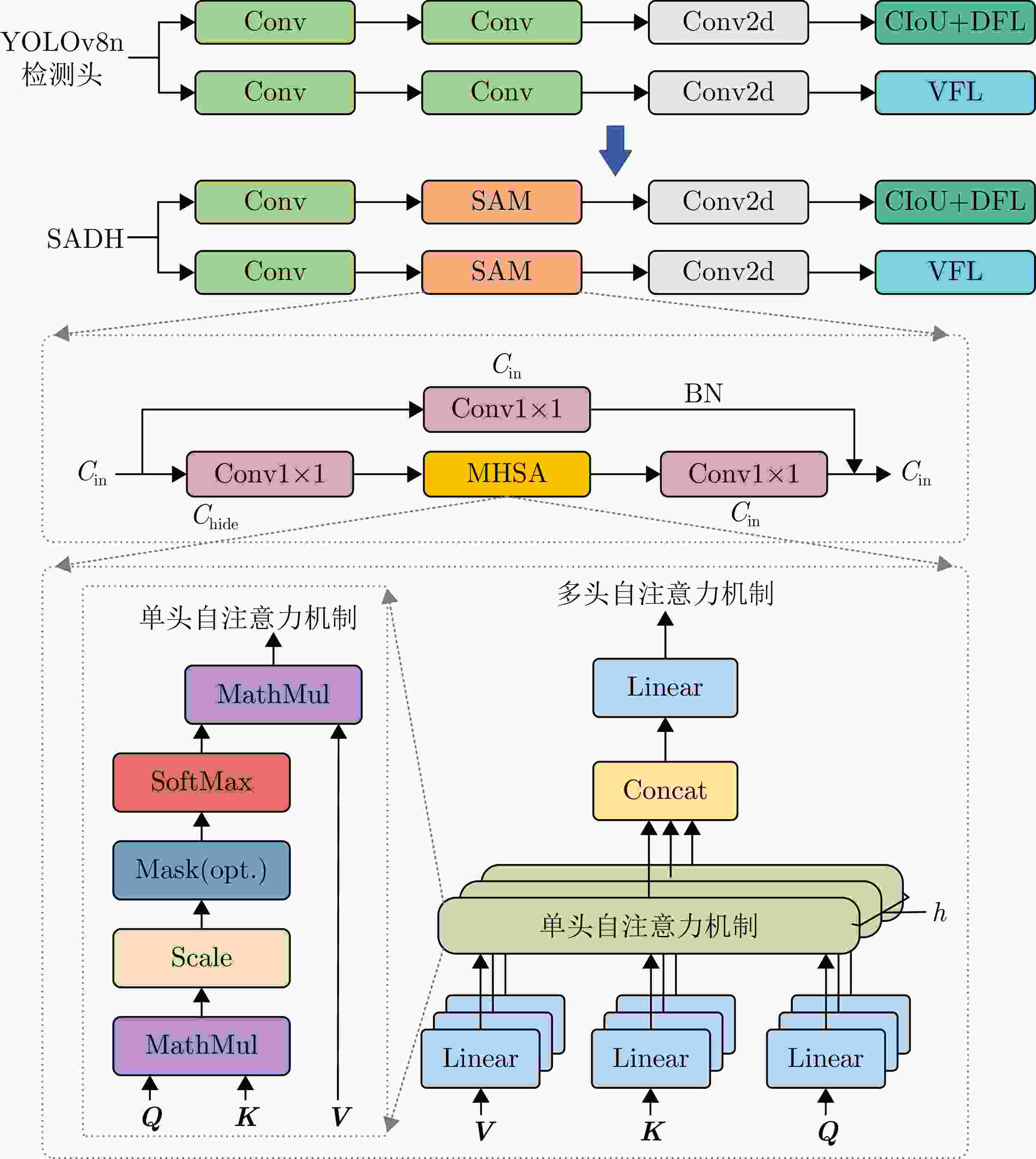

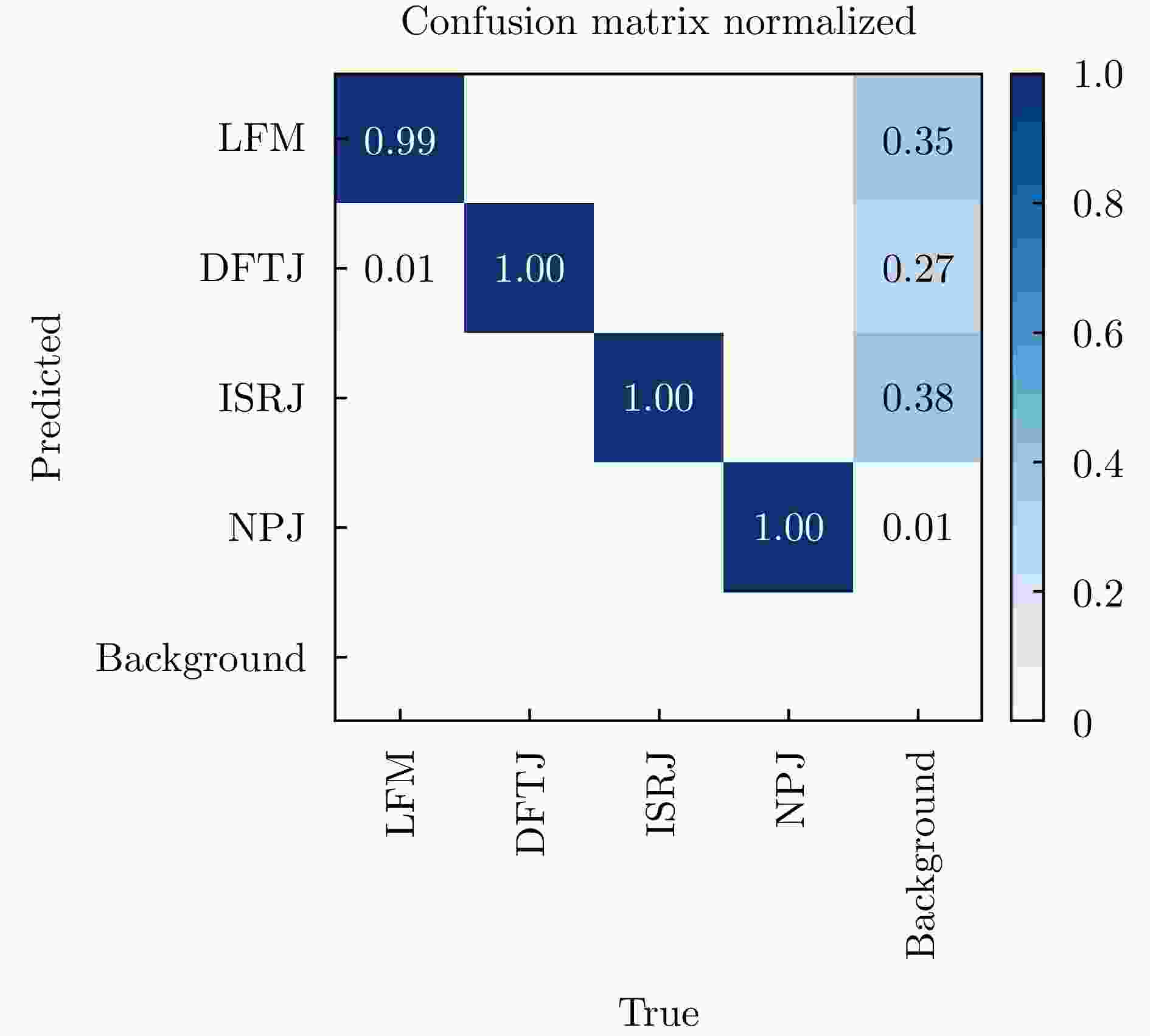

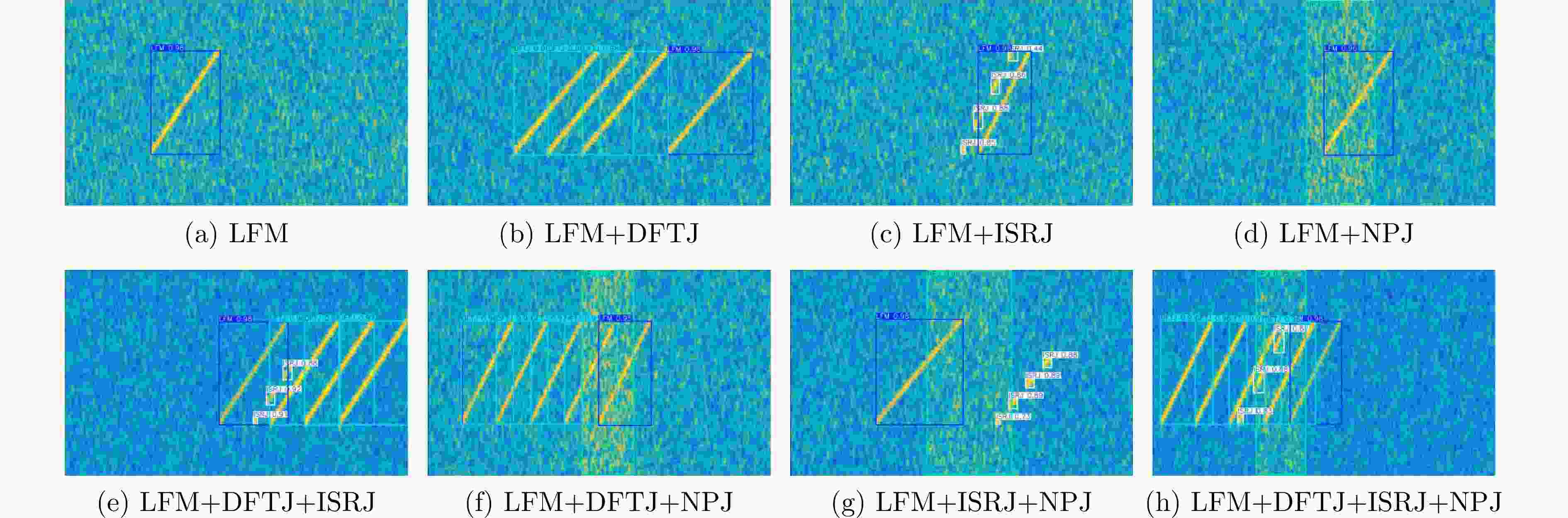

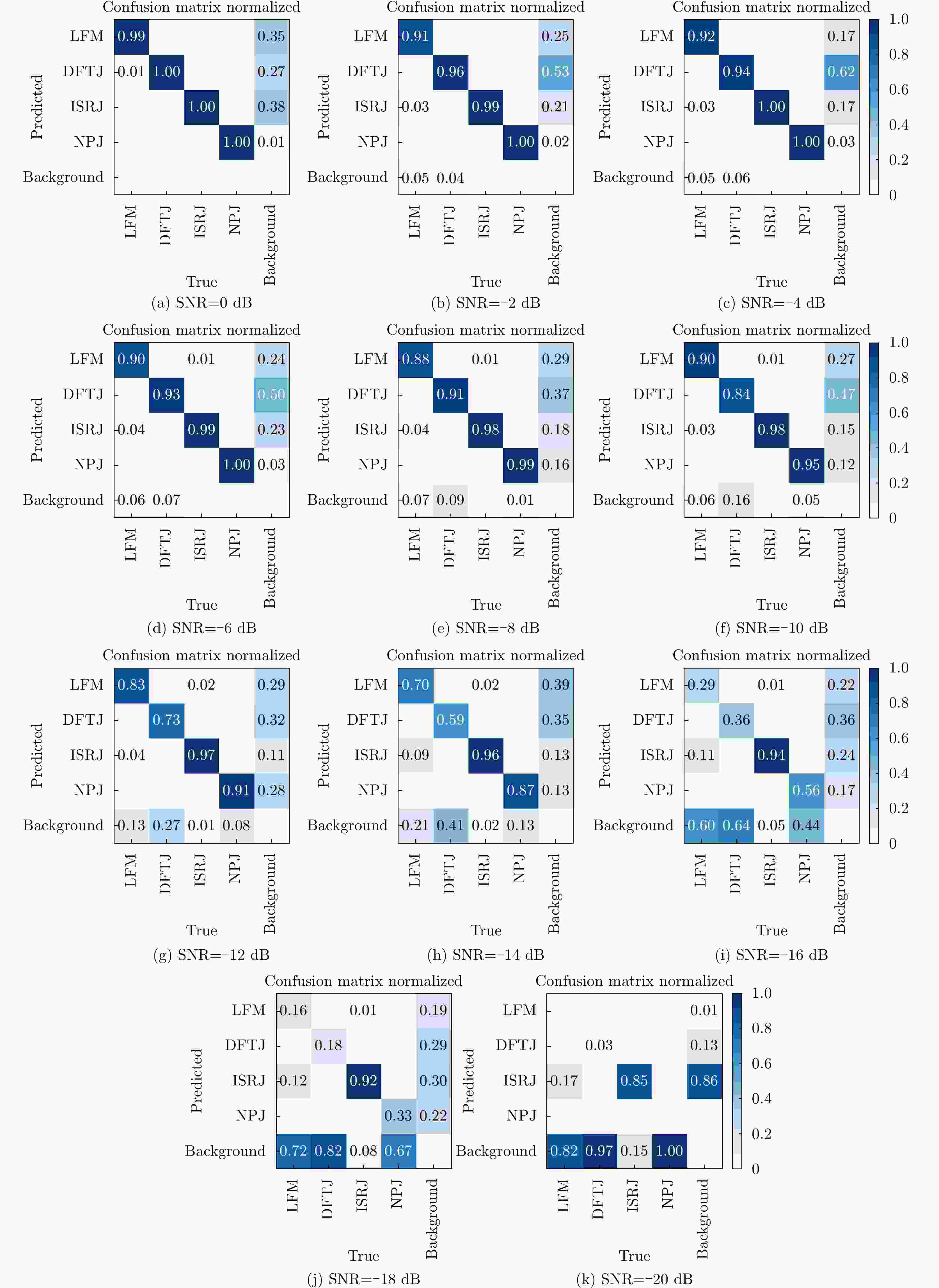

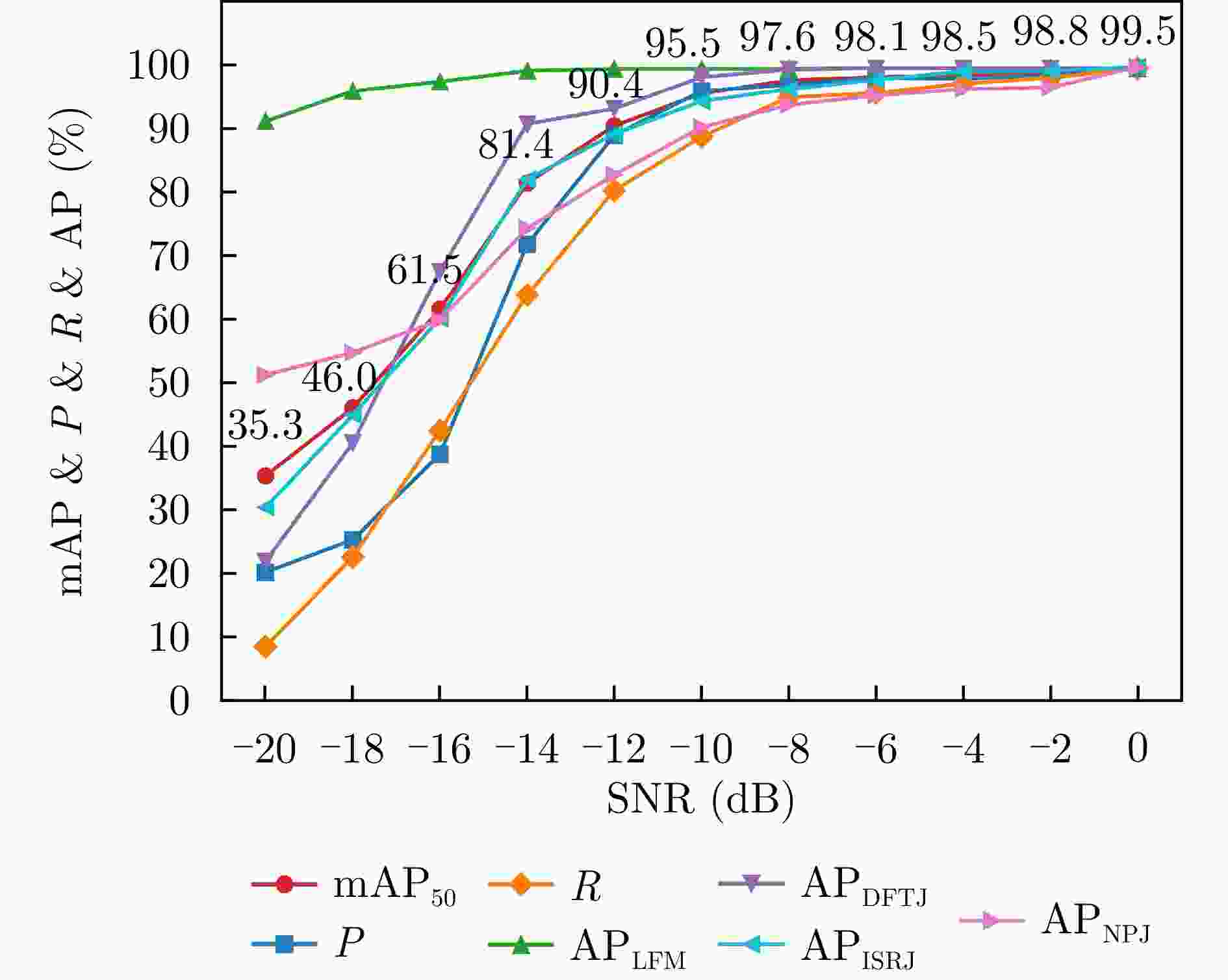

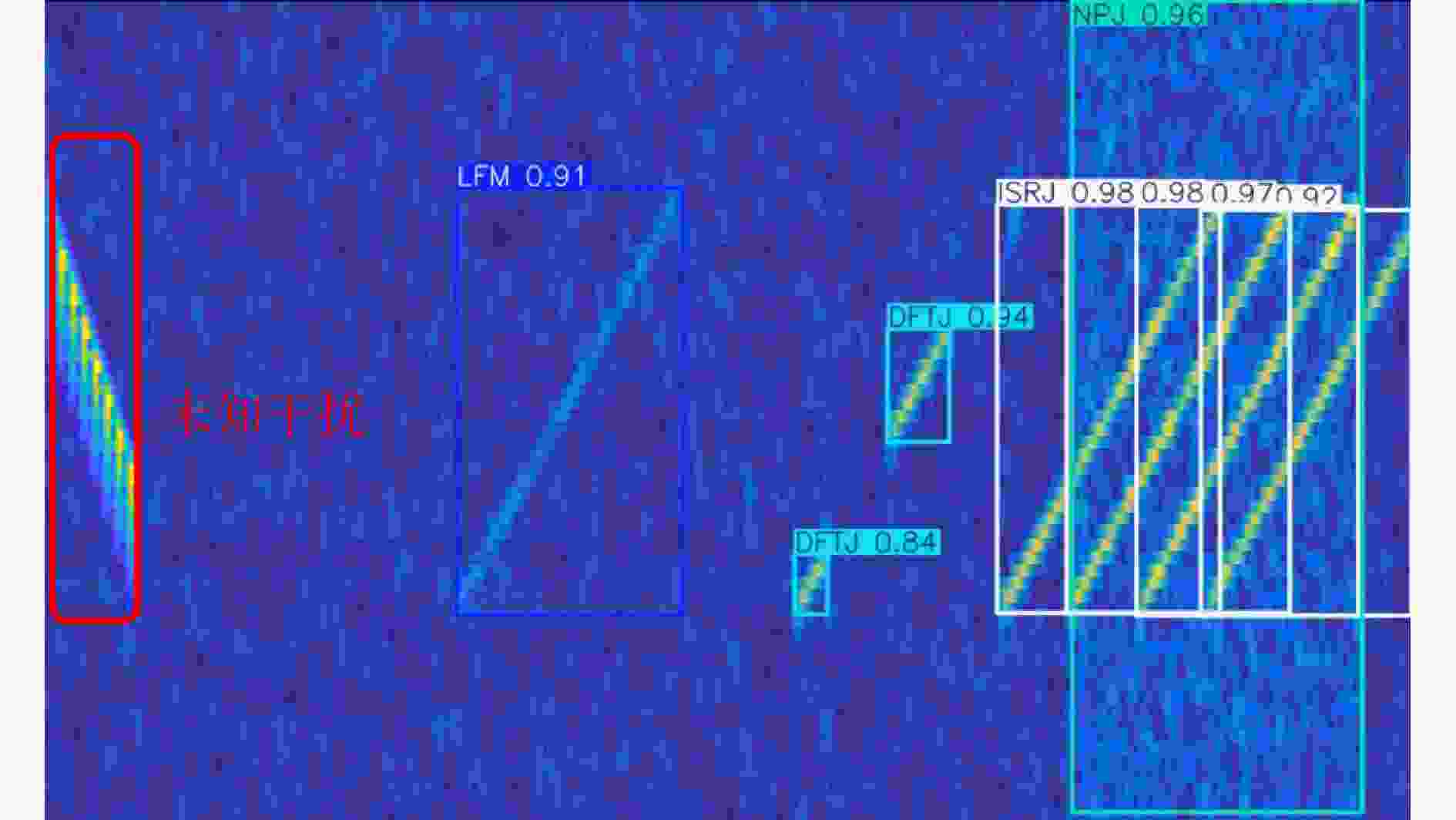

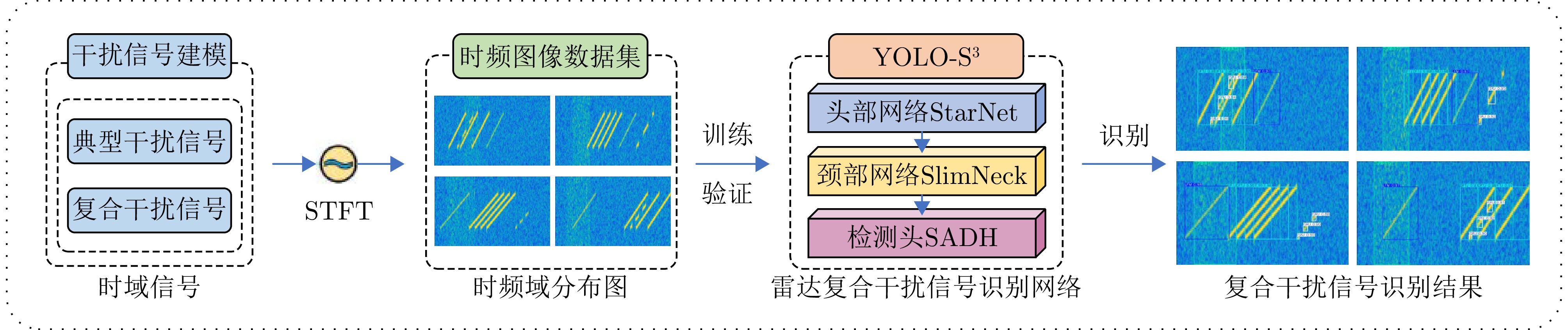

Abstract: To enhance the jamming recognition capability of radars in complex electromagnetic environments, this study proposes YOLO-S3, a lightweight network for recognizing composite jamming signals. YOLO-S3 is characterized by three core attributes: smartness, slimness, and high speed. First, a technical approach based on visual detection algorithms is introduced to identify 2D time-frequency representations of radar jamming signals, and an image dataset of composite jamming signals is constructed using signal modeling and simulation together with the short-time Fourier transform. Next, the backbone and neck networks of YOLOv8n are restructured by integrating StarNet and SlimNeck, and a Self-Attention Detect Head (SADH) is designed to enhance feature extraction. These modifications make the network lightweight without compromising recognition accuracy. Finally, the network's performance is validated through ablation and comparative experiments. The results show that YOLO-S3 has the most lightweight design among the compared networks. When the Signal-to-Jamming Ratio (SJR) is randomly distributed between −10 and 0 dB and the Signal-to-Noise Ratio (SNR) is ≥0 dB, the network achieves an average recognition accuracy of 99.5%. When the SNR decreases to −10 dB, it still maintains an average recognition accuracy of 95.5%, showing good robustness and generalization under low-SNR conditions. These findings provide a new technical approach for the real-time recognition of composite jamming signals on resource-constrained platforms such as airborne radar signal processors and portable electronic devices.


表 1 信号仿真参数

Table 1. Signal simulation parameters

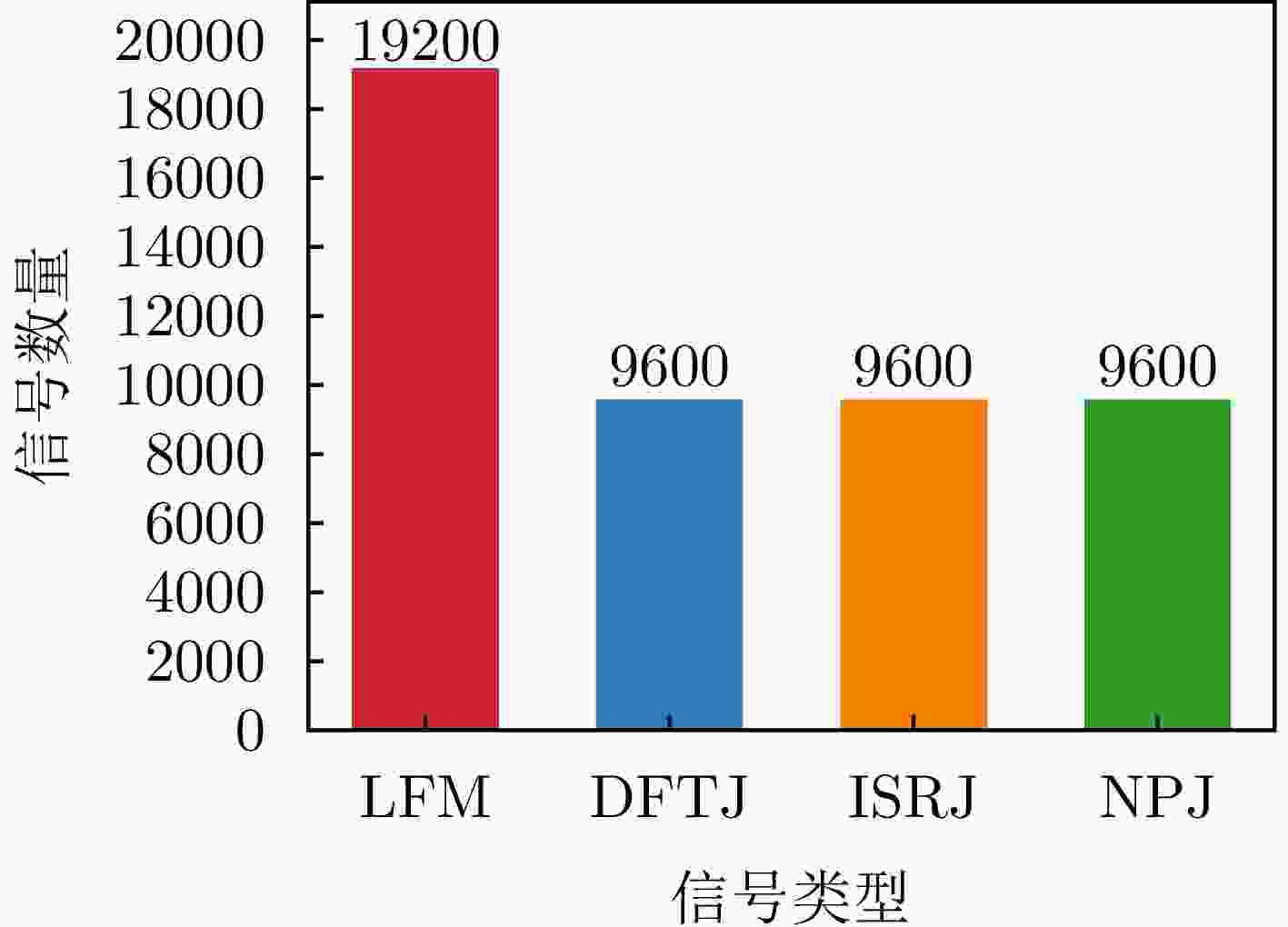

信号类型 参数 取值范围 LFM 起始频率 –10 MHz 终止频率 10 MHz 单信号总样本数 4000 点有效调制段样本数 [600, 800, 1000 ]点采样率 40 MHz SNR 0~10 dB LFM+DFTJ 假目标数量 [3, 4]个 假目标时延 [5, 7, 9] μs 对应样本数 [200, 280, 360]点 SNR 0~10 dB SJR –10~0 dB LFM+ISRJ 转发次数 [3, 4]个 采样时间 [5, 6, 8] μs 对应样本数 [200, 240, 320]点 SNR 0~10 dB SJR –10~0 dB LFM+NPJ SNR 0~10 dB SJR –10~0 dB LFM+DFTJ+ISRJ

LFM+DFTJ+NPJ

LFM+ISRJ+NPJ

LFM+DFTJ+ISRJ+NPJ假目标数量 [3, 4]个 假目标时延 [5, 7, 9] μs 转发次数 [3, 4]个 采样时间 [5, 6, 8] μs SNR 0~10 dB SJR –10~0 dB 表 2 JSID19200数据集详细信息
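The sample counts listed for the delays and sampling times in Table 1 follow from the 40 MHz sampling rate: a duration of t μs corresponds to t × 40 samples. The sketch below is only an illustration, not the authors' simulation code. It checks that conversion and builds a baseband LFM pulse with the Table 1 settings. The names `duration_to_samples` and `lfm_pulse`, and the choice to place the pulse in the middle of the 4000-sample record, are assumptions made for the example.

```python
import cmath
import math

FS_HZ = 40e6          # sampling rate from Table 1
TOTAL_SAMPLES = 4000  # total samples per signal from Table 1


def duration_to_samples(duration_us, fs_hz=FS_HZ):
    """Convert a duration in microseconds to a whole number of samples."""
    return round(duration_us * 1e-6 * fs_hz)


def lfm_pulse(n_mod, f_start_hz=-10e6, f_stop_hz=10e6,
              fs_hz=FS_HZ, total=TOTAL_SAMPLES):
    """Complex baseband LFM pulse of n_mod samples, zero-padded to `total`.

    Centring the pulse inside the record is an assumption of this sketch.
    """
    duration_s = n_mod / fs_hz
    chirp_rate = (f_stop_hz - f_start_hz) / duration_s  # Hz per second
    signal = [0j] * total
    offset = (total - n_mod) // 2
    for n in range(n_mod):
        t = n / fs_hz
        # Phase of a linear chirp: 2*pi*(f0*t + 0.5*k*t^2)
        phase = 2 * math.pi * (f_start_hz * t + 0.5 * chirp_rate * t * t)
        signal[offset + n] = cmath.exp(1j * phase)
    return signal


# Delay and sampling-time values from Table 1, in microseconds
dftj_delay_samples = [duration_to_samples(d) for d in (5, 7, 9)]
isrj_slice_samples = [duration_to_samples(d) for d in (5, 6, 8)]
pulse = lfm_pulse(n_mod=800)
```

Jamming components, noise at a chosen SNR, and the short-time Fourier transform would be applied on top of such a pulse. Those steps are omitted here because their exact form is not given in this section.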

Table 2. JSID19200 dataset specifications

| Signal type | Training + validation set size | Test set size |
| --- | --- | --- |
| LFM | 1200 | 1200 |
| LFM+DFTJ | 1200 | 1200 |
| LFM+ISRJ | 1200 | 1200 |
| LFM+NPJ | 1200 | 1200 |
| LFM+DFTJ+ISRJ | 1200 | 1200 |
| LFM+DFTJ+NPJ | 1200 | 1200 |
| LFM+ISRJ+NPJ | 1200 | 1200 |
| LFM+DFTJ+ISRJ+NPJ | 1200 | 1200 |
| Total | 9600 | 9600 |

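Table 3. Experimental environment and parameter configuration

| Environment | Configuration | Parameter | Value |
| --- | --- | --- | --- |
| Operating system | Windows 10 | Image size | 640 |
| CPU | AMD EPYC 7H12 64-Core Processor | Batch size | 16 |
| GPU | NVIDIA RTX A6000 | Number of iterations | 100 |
| GPU memory | 48 GB | Optimizer | SGD |
| Python | 3.9.2 | Initial learning rate | 0.01 |
| PyTorch | 1.12.0 | Final learning rate | 0.0001 |
| CUDA | 11.3 | Momentum | 0.937 |
| IDE | PyCharm 2020.1.3 | Weight decay | 0.0005 |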
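Table 3 gives an initial learning rate of 0.01 and a final learning rate of 0.0001 over 100 iterations, but this section does not state how the rate decays between them. As an illustration only, the sketch below assumes a linear decay, which is a common default in YOLO-style training scripts. The function `linear_lr` and the constant names are hypothetical, and reading "number of iterations" as training epochs is also an assumption.

```python
INITIAL_LR = 0.01   # Table 3
FINAL_LR = 0.0001   # Table 3
EPOCHS = 100        # Table 3, "number of iterations" read as epochs (assumption)


def linear_lr(epoch, lr0=INITIAL_LR, lr_final=FINAL_LR, epochs=EPOCHS):
    """Linearly interpolate from lr0 at epoch 0 to lr_final at the last epoch."""
    frac = min(max(epoch / epochs, 0.0), 1.0)  # clamp progress to [0, 1]
    return lr0 + (lr_final - lr0) * frac


# Learning rate at every epoch boundary, from 0 to EPOCHS inclusive
schedule = [linear_lr(e) for e in range(EPOCHS + 1)]
```

Under this assumed schedule the final rate is 1% of the initial rate. A cosine or step schedule would reach the same endpoints along a different path.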

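Table 4. Ablation study results

| StarNet | SlimNeck | SADH | Params (M) | FLOPs (GB) | Size (MB) | Dtotal (ms) | P (%) | R (%) | AP LFM (%) | AP DFTJ (%) | AP ISRJ (%) | AP NPJ (%) | mAP (%) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| -- | -- | -- | 3.0 | 8.2 | 6.3 | 46.4 | 99.8 | 99.7 | 99.5 | 99.5 | 99.5 | 99.5 | 99.5 |
| √ | -- | -- | 1.9 | 5.3 | 4.0 | 30.4 | 99.7 | 99.7 | 99.5 | 99.5 | 99.5 | 99.5 | 99.5 |
| √ | √ | -- | 2.0 | 4.2 | 4.2 | 34.7 | 99.8 | 99.7 | 99.5 | 99.5 | 99.5 | 99.5 | 99.5 |
| √ | √ | √ | 1.8 | 3.3 | 4.0 | 39.8 | 99.7 | 99.7 | 99.5 | 99.5 | 99.5 | 99.5 | 99.5 |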
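To make the ablation numbers in Table 4 easier to compare, the following sketch computes the relative reduction of the full configuration (StarNet + SlimNeck + SADH) against the unmodified baseline row. The dictionary keys and the helper `relative_reduction` are names chosen for this example, and the values are copied from the first and last rows of the table.

```python
# First row of Table 4 (no StarNet, SlimNeck, or SADH)
baseline = {"params_m": 3.0, "flops": 8.2, "size_mb": 6.3, "d_total_ms": 46.4}
# Last row of Table 4 (all three modifications enabled)
yolo_s3 = {"params_m": 1.8, "flops": 3.3, "size_mb": 4.0, "d_total_ms": 39.8}


def relative_reduction(before, after):
    """Percentage by which `after` is smaller than `before`."""
    return 100.0 * (before - after) / before


# Reduction per metric, rounded to one decimal place
reductions = {
    key: round(relative_reduction(baseline[key], yolo_s3[key]), 1)
    for key in baseline
}

for key, value in reductions.items():
    print(f"{key}: {value}% lower than the baseline")
```

With the tabulated values this gives reductions of about 40% in parameters, 60% in FLOPs, 37% in model size, and 14% in Dtotal, while P, R, and mAP stay within 0.1 percentage points of the baseline.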

Table 5. Comparative study results of state-of-the-art networks

| Network | Params (M) | FLOPs (GB) | Size (MB) | Dtotal (ms) | P (%) | R (%) | AP LFM (%) | AP DFTJ (%) | AP ISRJ (%) | AP NPJ (%) | mAP (%) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Faster RCNN[21] | 41.4 | 216.5 | 315.1 | 2437.5 | 95.5 | 94.0 | 99.0 | 84.1 | 99.0 | 99.8 | 95.5 |
| SSD[22] | 13.4 | 15.2 | 103.1 | 312.5 | 93.6 | 82.4 | 98.9 | 79.0 | 97.9 | 98.8 | 93.6 |
| RetinaNet[23] | 32.3 | 215.0 | 245.7 | 1920.3 | 96.8 | 94.1 | 99.6 | 89.2 | 99.0 | 99.4 | 96.8 |
| RT-DETRx[24] | 67.3 | 232.3 | 129.0 | 255.9 | 96.6 | 98.1 | 99.4 | 99.0 | 99.2 | 99.4 | 99.3 |
| YOLOv8n[25] | 3.0 | 8.2 | 6.3 | 46.4 | 99.8 | 99.7 | 99.5 | 99.5 | 99.5 | 99.5 | 99.5 |
| YOLOv8x | 68.2 | 258.1 | 136.7 | 214.3 | 99.8 | 99.9 | 99.5 | 99.5 | 99.5 | 99.5 | 99.5 |
| YOLOv9t[26] | 2.0 | 7.9 | 4.6 | 63.7 | 99.8 | 99.8 | 99.5 | 99.5 | 99.5 | 99.5 | 99.5 |
| YOLOv9e | 58.1 | 192.7 | 117.3 | 679.3 | 99.8 | 99.8 | 99.5 | 99.5 | 99.5 | 99.5 | 99.5 |
| YOLOv10n[27] | 2.7 | 8.4 | 5.8 | 49.4 | 99.3 | 99.5 | 99.4 | 99.5 | 99.5 | 99.5 | 99.5 |
| YOLOv10x | 31.7 | 170.0 | 64.1 | 229.9 | 99.8 | 99.8 | 99.5 | 99.5 | 99.5 | 99.5 | 99.5 |
| YOLOv11n[28] | 2.6 | 6.4 | 5.5 | 49.9 | 99.8 | 99.7 | 99.5 | 99.5 | 99.5 | 99.5 | 99.5 |
| YOLOv11x | 56.9 | 195.5 | 114.4 | 248.2 | 99.8 | 99.9 | 99.5 | 99.5 | 99.5 | 99.5 | 99.5 |
| YOLOv12n[29] | 2.6 | 6.5 | 5.5 | 63.2 | 99.8 | 99.8 | 99.5 | 99.5 | 99.5 | 99.5 | 99.5 |
| YOLOv12x | 59.1 | 199.8 | 119.1 | 343.6 | 99.8 | 99.9 | 99.5 | 99.5 | 99.5 | 99.5 | 99.5 |
| YOLO-S3 | 1.8 | 3.3 | 4.0 | 39.8 | 99.7 | 99.7 | 99.5 | 99.5 | 99.5 | 99.5 | 99.5 |
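The mAP column in Table 5 appears to be the arithmetic mean of the four per-class AP values (LFM, DFTJ, ISRJ, NPJ). That reading is an assumption, since the metric definition is not given in this section. The short check below tests it on a few rows copied from the table; `mean_ap` and the dictionary names are chosen for this example.

```python
# Per-class AP (LFM, DFTJ, ISRJ, NPJ) copied from selected rows of Table 5
per_class_ap = {
    "Faster RCNN": (99.0, 84.1, 99.0, 99.8),
    "SSD": (98.9, 79.0, 97.9, 98.8),
    "RetinaNet": (99.6, 89.2, 99.0, 99.4),
    "RT-DETRx": (99.4, 99.0, 99.2, 99.4),
    "YOLO-S3": (99.5, 99.5, 99.5, 99.5),
}

# mAP values reported in the same rows
reported_map = {
    "Faster RCNN": 95.5,
    "SSD": 93.6,
    "RetinaNet": 96.8,
    "RT-DETRx": 99.3,
    "YOLO-S3": 99.5,
}


def mean_ap(aps):
    """Arithmetic mean of the per-class AP values."""
    return sum(aps) / len(aps)


# Largest difference between the recomputed mean and the reported mAP
max_gap = max(
    abs(mean_ap(per_class_ap[name]) - reported_map[name])
    for name in per_class_ap
)
print(f"largest gap between recomputed and reported mAP: {max_gap:.3f}")
```

For these rows the recomputed mean agrees with the reported mAP to within rounding of the one-decimal table entries, which is consistent with the assumed definition.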

[1] CUI Guolong, YU Xianxiang, WEI Wenqiang, et al. An overview of antijamming methods and future works on cognitive intelligent radar[J]. Journal of Radars, 2022, 11(6): 974–1002. doi: 10.12000/JR22191.

[2] ZHANG Shunsheng, CHEN Shuang, CHEN Xiaoying, et al. Active deception jamming recognition method in multimodal radar based on small samples[J]. Journal of Radars, 2023, 12(4): 882–891. doi: 10.12000/JR23104.

[3] GUO Wenjie, WU Zhenhua, CAO Yice, et al. Multidomain characteristic-guided multimodal contrastive recognition method for active radar jamming[J]. Journal of Radars, 2024, 13(5): 1004–1018. doi: 10.12000/JR24129.

[4] WANG Zan, GUO Zhengwei, SHU Gaofeng, et al. Radar jamming recognition: Models, methods, and prospects[J]. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2025, 18: 3315–3343. doi: 10.1109/JSTARS.2024.3522951.

[5] LECUN Y, BENGIO Y, and HINTON G. Deep learning[J]. Nature, 2015, 521(7553): 436–444. doi: 10.1038/nature14539.

[6] ZHANG Haoyu, YU Lei, CHEN Yushi, et al. Fast complex-valued CNN for radar jamming signal recognition[J]. Remote Sensing, 2021, 13(15): 2867. doi: 10.3390/rs13152867.

[7] LIU Qiang and ZHANG Wei. Deep learning and recognition of radar jamming based on CNN[C]. The 12th International Symposium on Computational Intelligence and Design, Hangzhou, China, 2019: 208–212. doi: 10.1109/ISCID.2019.00054.

[8] CAI Yuan, SHI Kai, SONG Fei, et al. Jamming pattern recognition using spectrum waterfall: A deep learning method[C]. The 5th International Conference on Computer and Communications, Chengdu, China, 2019: 2113–2117. doi: 10.1109/ICCC47050.2019.9064207.

[9] WANG Jingyi, DONG Wenhao, SONG Zhiyong, et al. Identification of radar active interference types based on three-dimensional residual network[C]. The 3rd International Academic Exchange Conference on Science and Technology Innovation, Guangzhou, China, 2021: 167–172. doi: 10.1109/IAECST54258.2021.9695564.

[10] CHEN Siwei, CUI Xingchao, LI Mingdian, et al. SAR image active jamming type recognition based on deep CNN model[J]. Journal of Radars, 2022, 11(5): 897–908. doi: 10.12000/JR22143.

[11] QU Qizhe, WEI Shunjun, LIU Shan, et al. JRNet: Jamming recognition networks for radar compound suppression jamming signals[J]. IEEE Transactions on Vehicular Technology, 2020, 69(12): 15035–15045. doi: 10.1109/TVT.2020.3032197.

[12] LV Qinzhe, QUAN Yinghui, FENG Wei, et al. Radar deception jamming recognition based on weighted ensemble CNN with transfer learning[J]. IEEE Transactions on Geoscience and Remote Sensing, 2022, 60: 5107511. doi: 10.1109/TGRS.2021.3129645.

[13] LV Qinzhe, QUAN Yinghui, SHA Minghui, et al. Deep neural network-based interrupted sampling deceptive jamming countermeasure method[J]. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2022, 15: 9073–9085. doi: 10.1109/JSTARS.2022.3214969.

[14] ZHANG Jiaxiang, LIANG Zhennan, ZHOU Chao, et al. Radar compound jamming cognition based on a deep object detection network[J]. IEEE Transactions on Aerospace and Electronic Systems, 2023, 59(3): 3251–3263. doi: 10.1109/TAES.2022.3224695.

[15] ZHU Xuan, WU Hao, HE Fangmin, et al. YOLO-CJ: A lightweight network for compound jamming signal detection[J]. IEEE Transactions on Aerospace and Electronic Systems, 2024, 60(5): 6807–6821. doi: 10.1109/TAES.2024.3406491.

[16] MA Xu, DAI Xiyang, BAI Yue, et al. Rewrite the stars[C]. IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, USA, 2024: 5694–5703. doi: 10.1109/CVPR52733.2024.00544.

[17] LI Hulin, LI Jun, WEI Hanbing, et al. Slim-neck by GSConv: A lightweight-design for real-time detector architectures[J]. Journal of Real-Time Image Processing, 2024, 21(3): 62. doi: 10.1007/s11554-024-01436-6.

[18] YU Hongyuan, WAN Cheng, DAI Xiyang, et al. Real-time image segmentation via hybrid convolutional-transformer architecture search[EB/OL]. https://arxiv.org/abs/2403.10413, 2025.

[19] VASWANI A, SHAZEER N, PARMAR N, et al. Attention is all you need[C]. The 31st International Conference on Neural Information Processing Systems, Long Beach, USA, 2017: 6000–6010.

[20] ZHANG Haoyang, WANG Ying, DAYOUB F, et al. VarifocalNet: An IoU-aware dense object detector[C]. IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, USA, 2021: 8510–8519. doi: 10.1109/CVPR46437.2021.00841.

[21] REN Shaoqing, HE Kaiming, GIRSHICK R, et al. Faster R-CNN: Towards real-time object detection with region proposal networks[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2017, 39(6): 1137–1149. doi: 10.1109/TPAMI.2016.2577031.

[22] LIU Wei, ANGUELOV D, ERHAN D, et al. SSD: Single shot MultiBox detector[C]. The 14th European Conference on Computer Vision, Amsterdam, The Netherlands, 2016: 21–37. doi: 10.1007/978-3-319-46448-0_2.

[23] LIN T Y, GOYAL P, GIRSHICK R, et al. Focal loss for dense object detection[C]. IEEE International Conference on Computer Vision, Venice, Italy, 2017: 2999–3007. doi: 10.1109/ICCV.2017.324.

[24] ZHAO Yi’an, LV Wenyu, XU Shangliang, et al. DETRs beat YOLOs on real-time object detection[C]. IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, USA, 2024: 16965–16974. doi: 10.1109/CVPR52733.2024.01605.

[25] ZHAI Yongjie, TIAN Jiming, CHEN Penghui, et al. Algorithm for the target detection of phalaenopsis seedlings using lightweight YOLOv8n-Aerolite[J]. Transactions of the Chinese Society of Agricultural Engineering, 2025, 41(4): 220–229. doi: 10.11975/j.issn.1002-6819.202408192.

[26] WANG C Y, YEH I H, and LIAO H Y M. YOLOv9: Learning what you want to learn using programmable gradient information[C]. The 18th European Conference on Computer Vision, Milan, Italy, 2025: 1–21. doi: 10.1007/978-3-031-72751-1_1.

[27] WANG Ao, CHEN Hui, LIU Lihao, et al. YOLOv10: Real-time end-to-end object detection[C]. The 38th International Conference on Neural Information Processing Systems, Vancouver, Canada, 2024: 3429.

[28] ZHUO Shulong, BAI Hao, JIANG Lifeng, et al. SCL-YOLOv11: A lightweight object detection network for low-illumination environments[J]. IEEE Access, 2025, 13: 47653–47662. doi: 10.1109/ACCESS.2025.3550947.

[29] TIAN Yunjie, YE Qixiang, and DOERMANN D. YOLOv12: Attention-centric real-time object detectors[EB/OL]. https://arxiv.org/abs/2502.12524, 2025.
