Research on Ship Feature Recognition and Tracking Method Based on Long-line Array Single-photon LiDAR


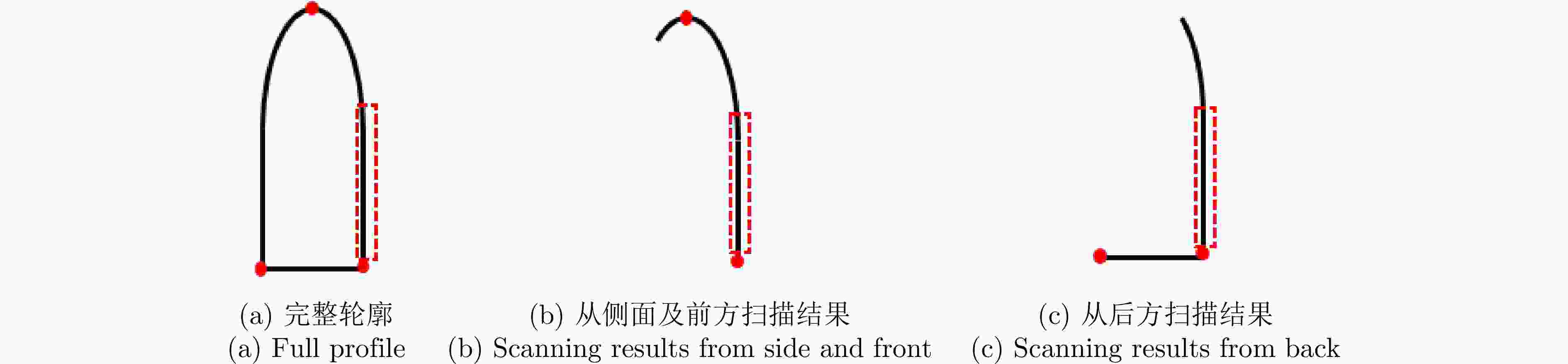

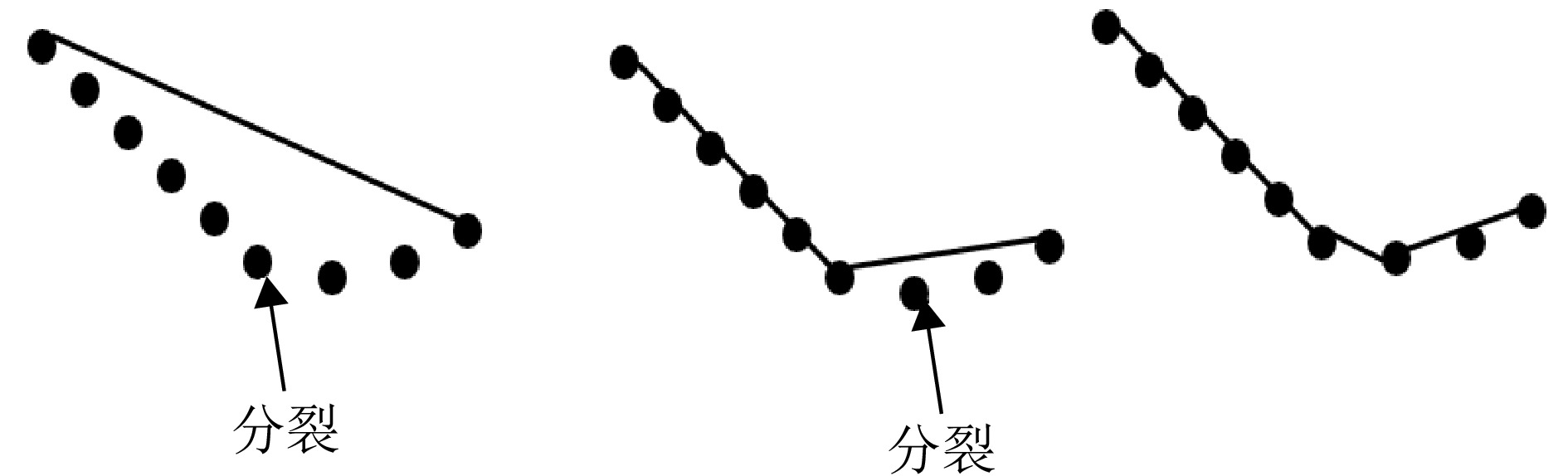

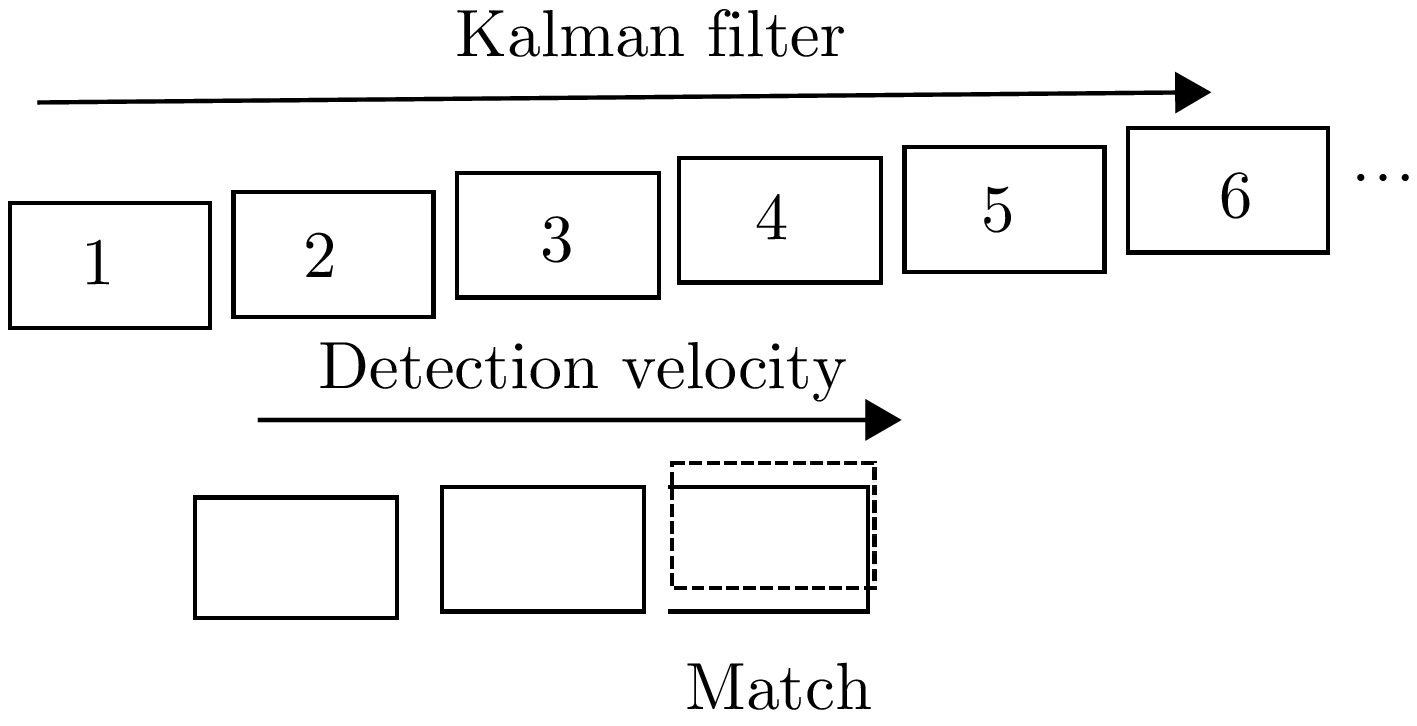

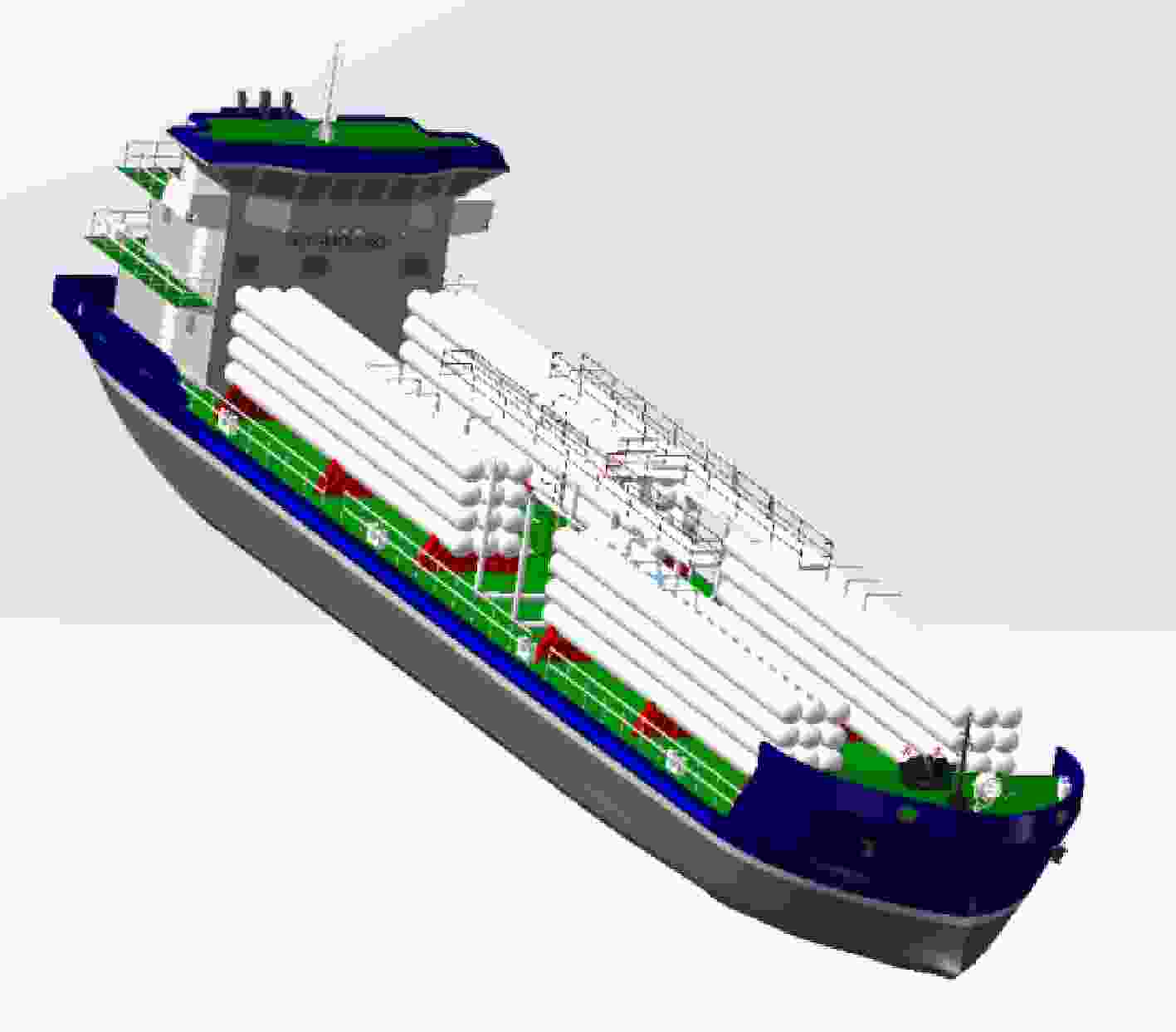

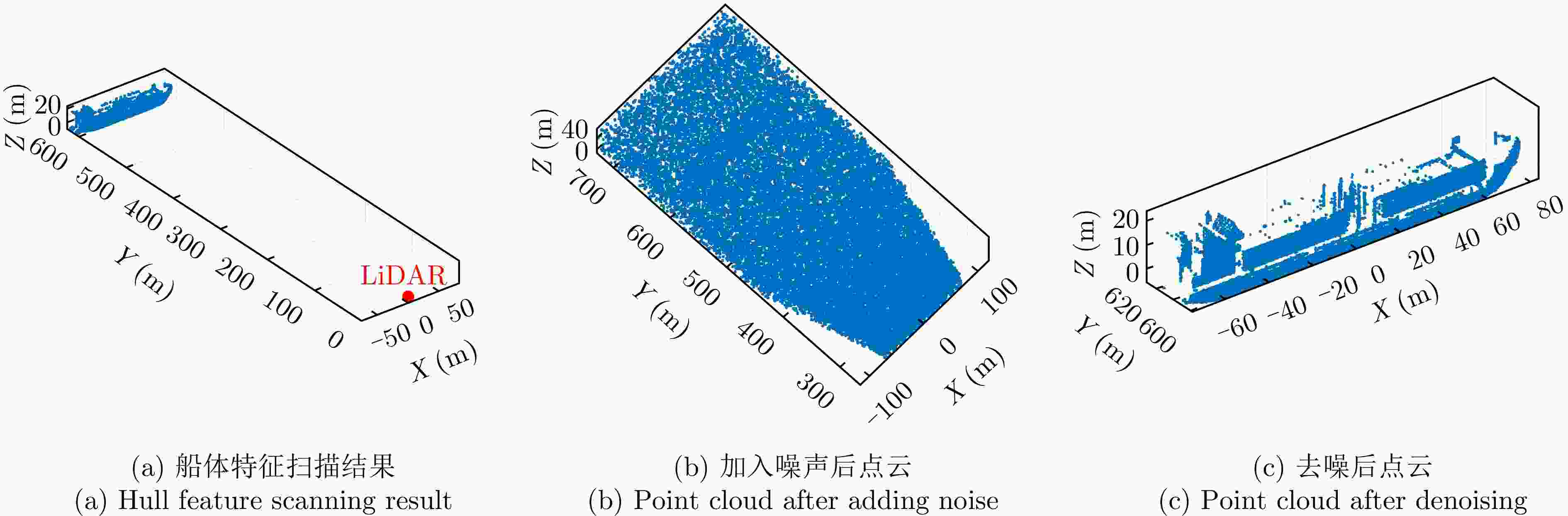

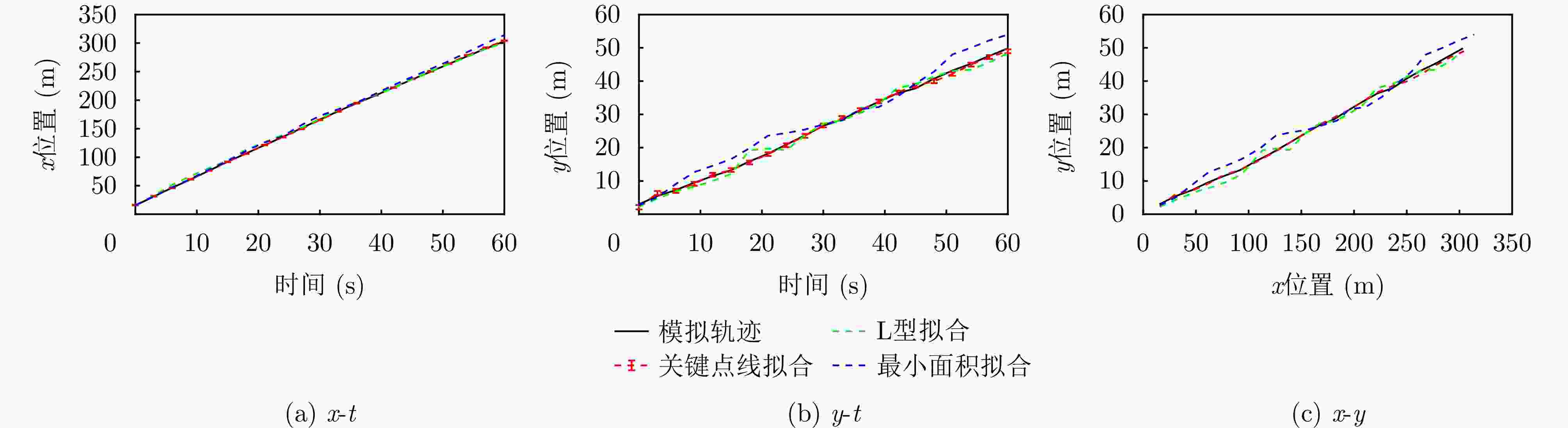

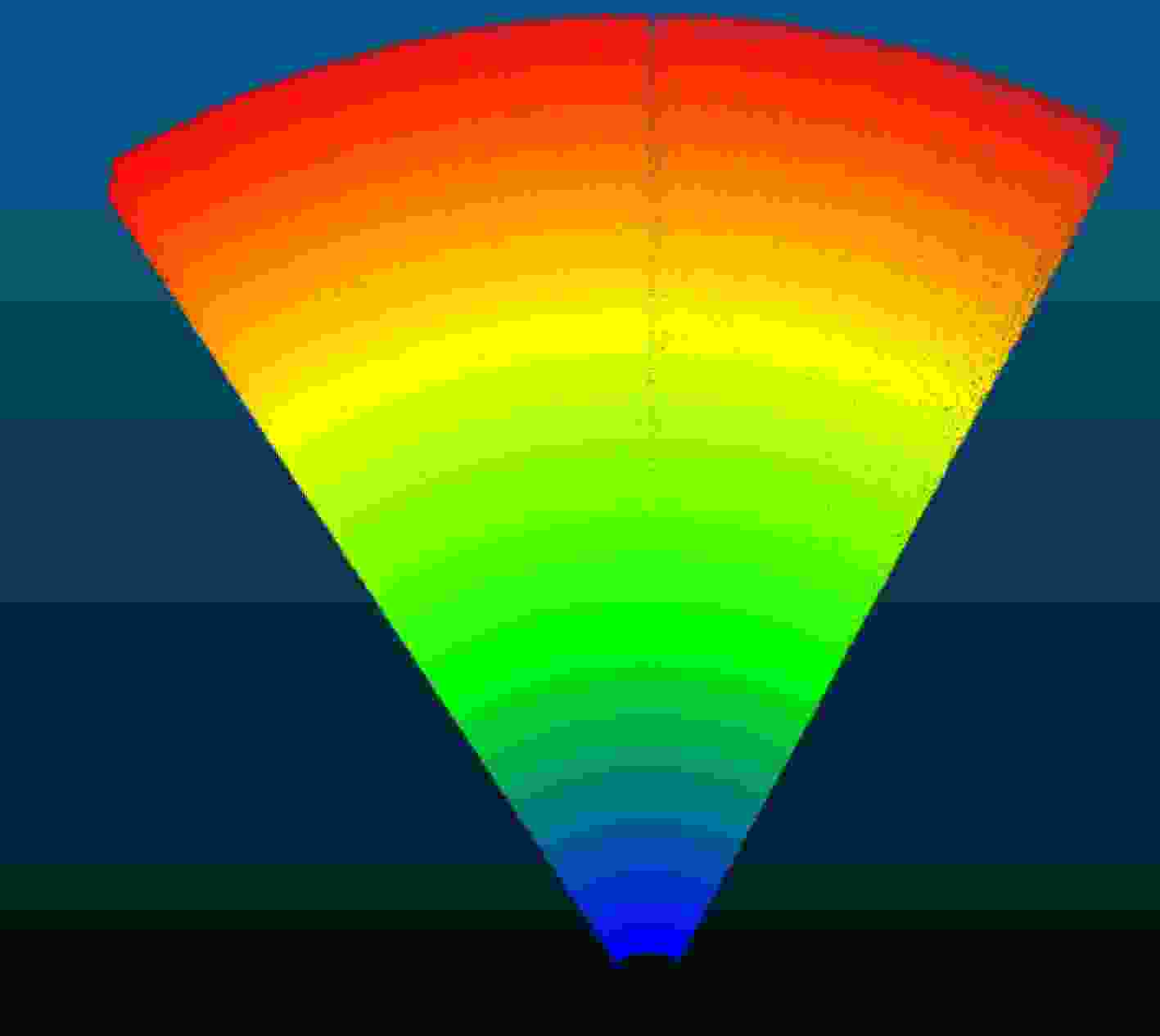

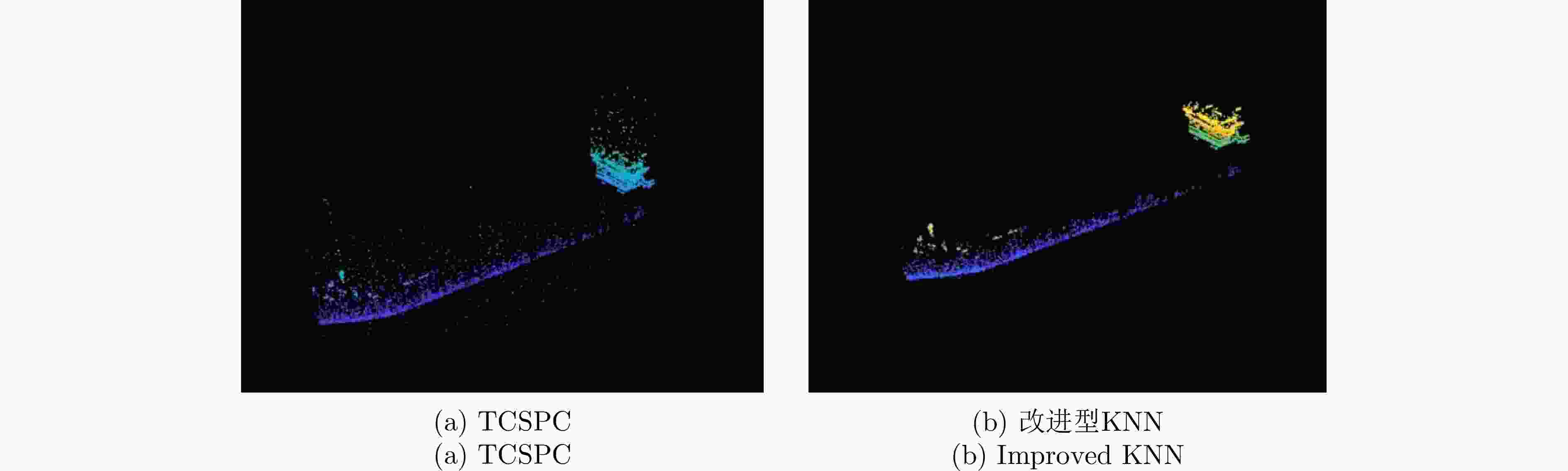

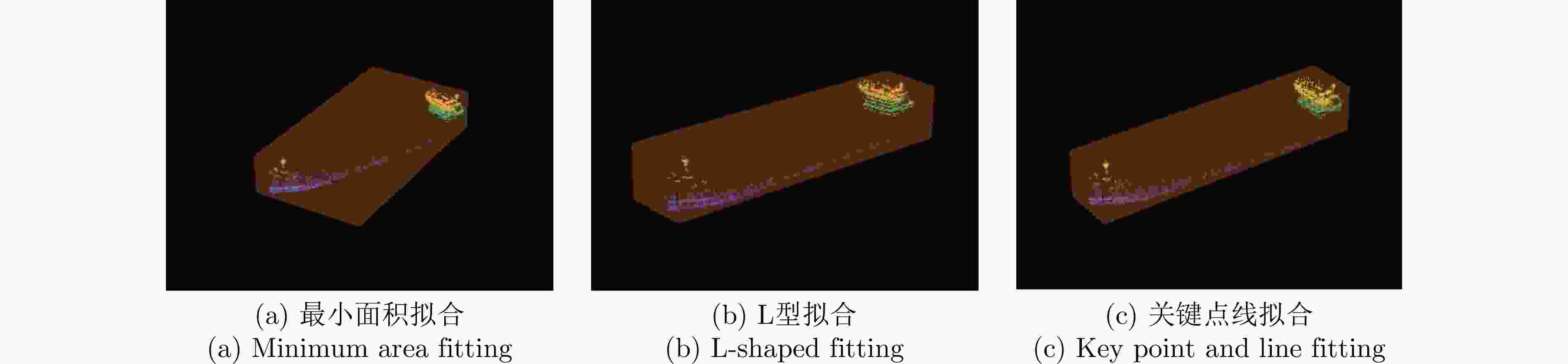

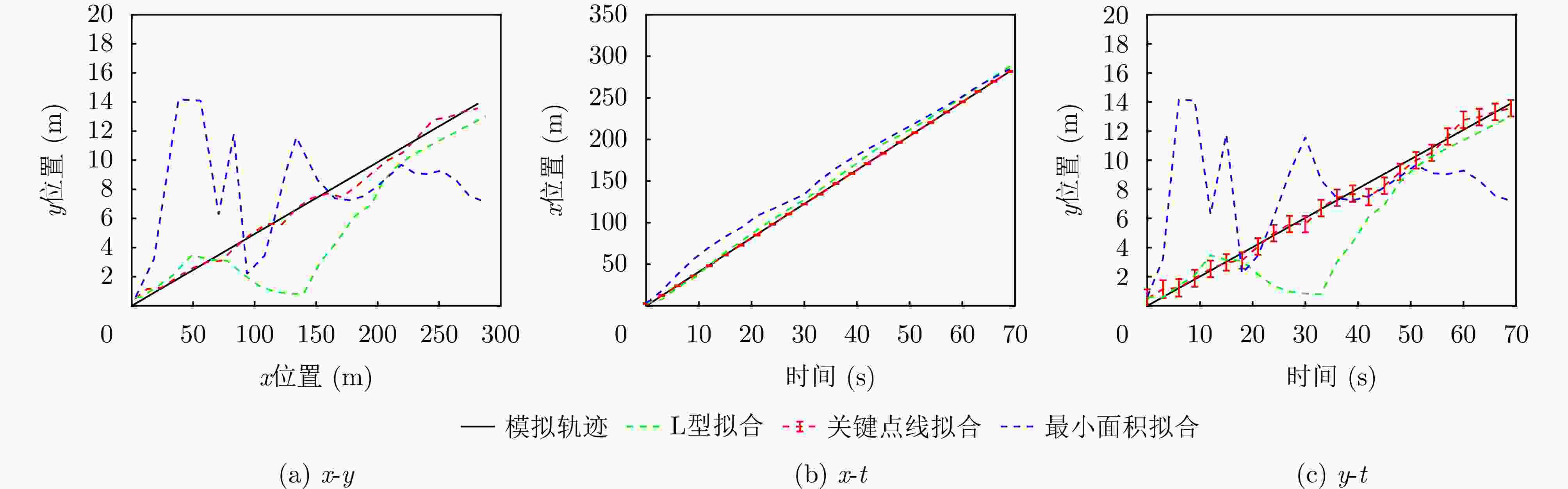

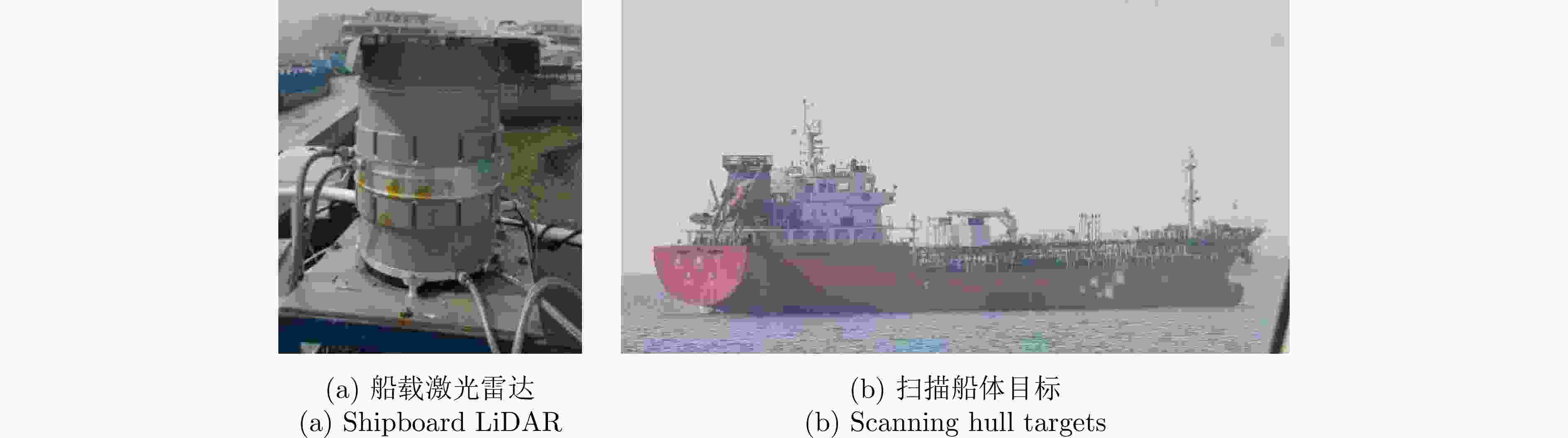

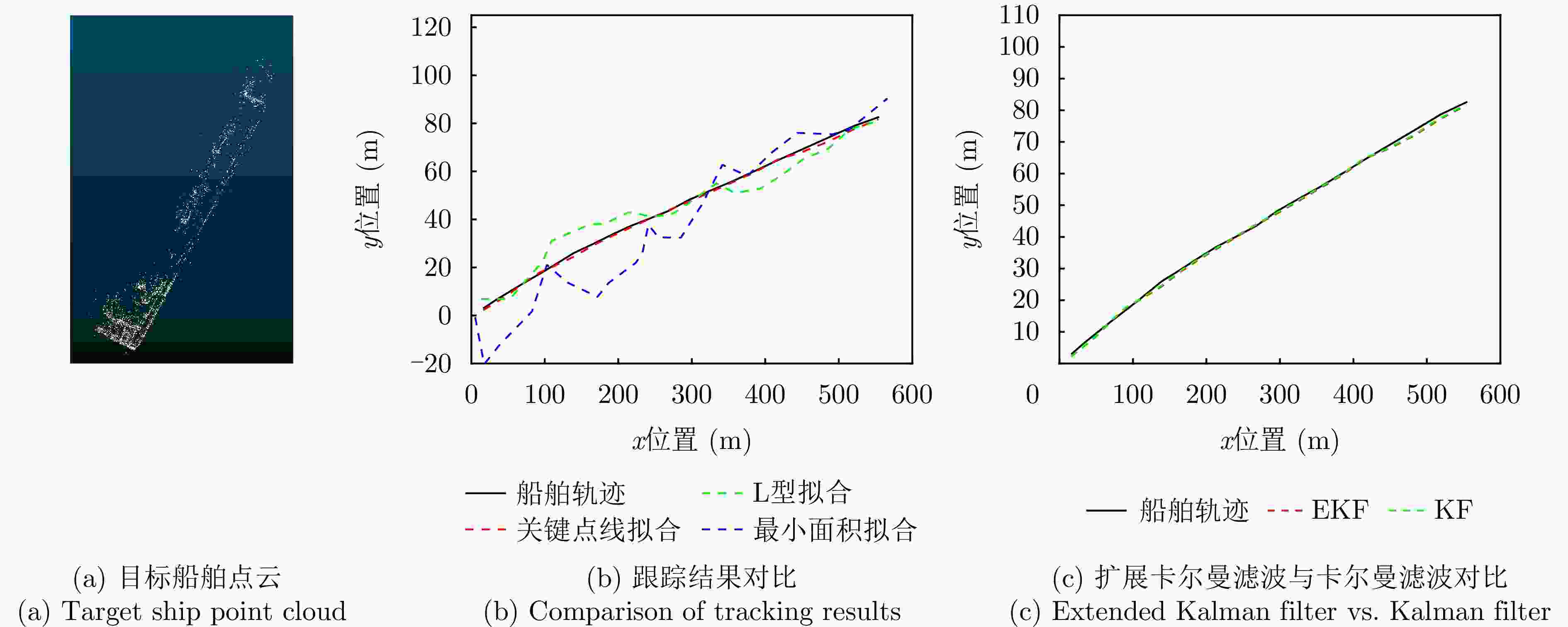

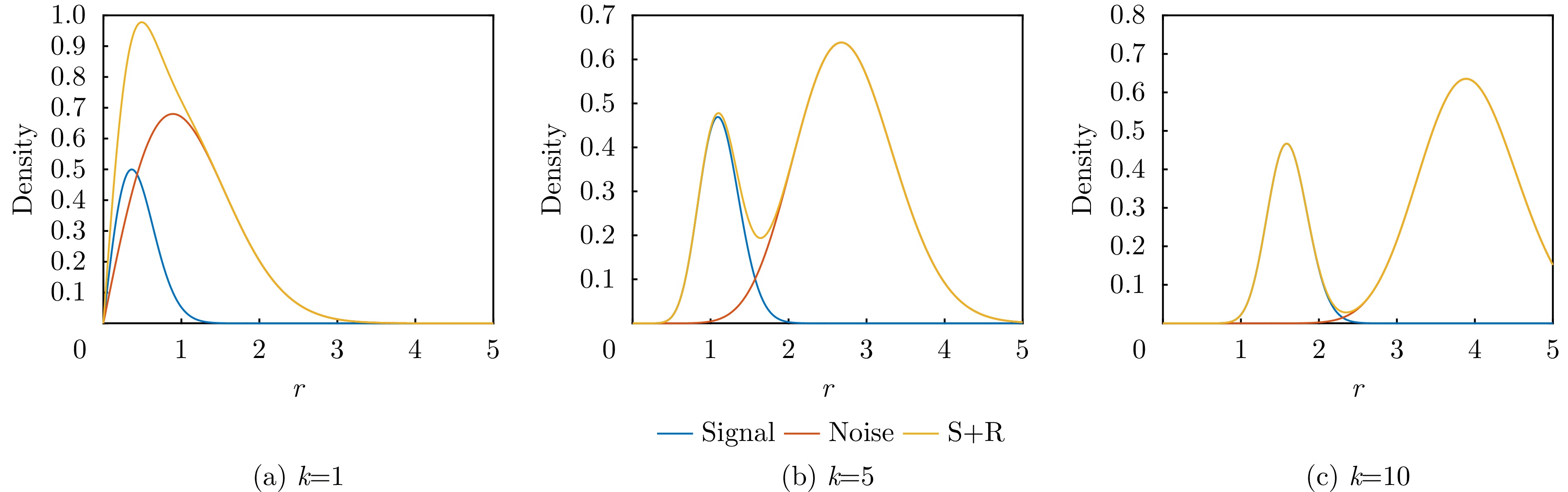

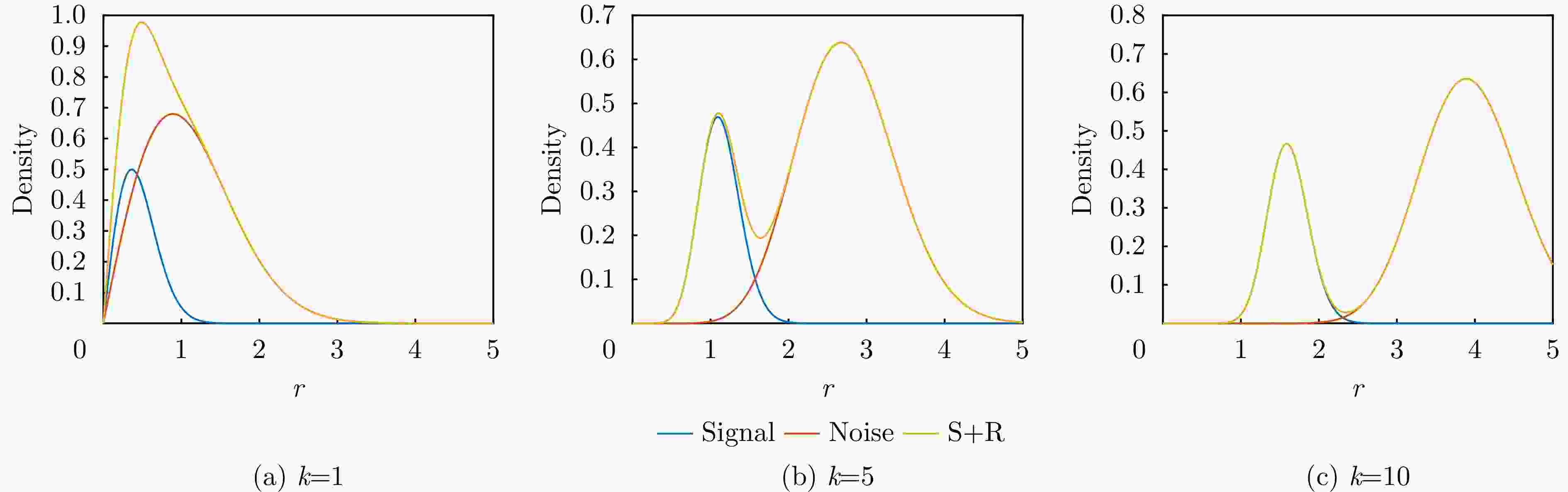

Abstract: In recent years, target tracking of surface ships has become an important problem in autonomous ship navigation. For three-dimensional environmental perception, LiDAR offers high resolution and high precision. By adding one-dimensional scanning, a long-line array LiDAR achieves a larger field of view than single-point and area-array LiDARs, which gives it distinct advantages in environmental perception. Surface ships differ in their characteristics from ground targets, and relevant datasets are scarce. As a result, commonly used fitting methods cannot effectively perceive the characteristics of surface targets. In this paper, an efficient ship target tracking method is proposed based on the characteristics of single-photon point clouds and long-range target detection. The method performs simultaneous clustering and denoising based on neighboring points. It then uses prior knowledge of ship geometry to fit each target by extracting ship feature points and surfaces, which further reduces the influence of noise. Combined with an extended Kalman filter and a velocity estimation method, it achieves real-time, stable trajectory tracking of targets within a range of 600 m. The tracking root mean square error (RMSE) is 0.5 m and the single-frame processing time is 1.02 s, which meets real-time engineering requirements.

The proposed method has also been tested in a complex environment, where it still tracks large ships well and outperforms commonly used fitting-based tracking methods. It provides more complete information for the subsequent autonomous navigation of intelligent ships, enabling better obstacle avoidance and path planning.
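The abstract combines an extended Kalman filter with velocity estimation but does not state the motion or measurement model. The filter below is therefore only a hedged sketch. It assumes a constant-velocity state [x, y, vx, vy] in the horizontal plane and treats the fitted ship center as a range-bearing measurement from the LiDAR. The noise levels (`sigma_r`, `sigma_b`, `sigma_a`), the class name `RangeBearingEKF` and the matrix helpers are hypothetical choices, not the paper's. In this sketch the velocity estimate is read from the filter state rather than produced by a separate estimator.

```python
import math

# Tiny list-of-lists matrix helpers (hypothetical, to stay dependency-free)
def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def transpose(A):
    return [list(r) for r in zip(*A)]

def madd(A, B, sign=1.0):
    return [[a + sign * b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]

def inv2(M):
    (a, b), (c, d) = M
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]

class RangeBearingEKF:
    """Constant-velocity EKF (assumed model): state [x, y, vx, vy], measurement [range, bearing]."""

    def __init__(self, z0, sigma_r=0.5, sigma_b=1e-3, sigma_a=0.1):
        r, b = z0
        # Initialize position from the first measurement, velocity unknown (zero, large variance)
        self.x = [[r * math.cos(b)], [r * math.sin(b)], [0.0], [0.0]]
        self.P = [[1.0, 0, 0, 0], [0, 1.0, 0, 0], [0, 0, 100.0, 0], [0, 0, 0, 100.0]]
        self.R = [[sigma_r ** 2, 0.0], [0.0, sigma_b ** 2]]
        self.sigma_a = sigma_a

    def predict(self, dt):
        F = [[1, 0, dt, 0], [0, 1, 0, dt], [0, 0, 1, 0], [0, 0, 0, 1]]
        q = self.sigma_a ** 2
        # Discrete white-noise acceleration process noise, applied per axis
        q11, q13, q33 = q * dt ** 4 / 4, q * dt ** 3 / 2, q * dt ** 2
        Q = [[q11, 0, q13, 0], [0, q11, 0, q13], [q13, 0, q33, 0], [0, q13, 0, q33]]
        self.x = matmul(F, self.x)
        self.P = madd(matmul(matmul(F, self.P), transpose(F)), Q)

    def update(self, z):
        px, py = self.x[0][0], self.x[1][0]
        r2 = px * px + py * py
        r = math.sqrt(r2)
        # Jacobian of h(x) = [sqrt(x^2 + y^2), atan2(y, x)] at the predicted state
        H = [[px / r, py / r, 0, 0], [-py / r2, px / r2, 0, 0]]
        d_b = z[1] - math.atan2(py, px)
        y = [[z[0] - r], [math.atan2(math.sin(d_b), math.cos(d_b))]]  # wrap bearing innovation
        Ht = transpose(H)
        S = madd(matmul(matmul(H, self.P), Ht), self.R)
        K = matmul(matmul(self.P, Ht), inv2(S))
        self.x = madd(self.x, matmul(K, y))
        I = [[float(i == j) for j in range(4)] for i in range(4)]
        self.P = matmul(madd(I, matmul(K, H), -1.0), self.P)
```

The range-bearing measurement is what makes the filter "extended": h(x) is nonlinear, so it is linearized through its Jacobian at every update. When the filter is fed noiseless measurements of a ship moving at a constant (-3, 2) m/s, the velocity components in `ekf.x` settle close to those values.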


Algorithm 1. Noise-containing target detection algorithm

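Input: point cloud P = {p1, p2, ···, pN}, k
Output: point-cloud clusters C = {C1, C2, ···, CM}
1: Build a k-d tree from all points and compute the nearest-neighbor distances of each point
2: Compute the point density and, from it, the threshold Pr_threshold
3: for i = 1 to N do
4:   if p_i has not been classified
5:     Compute the probability Pr that p_i is a signal point from its distances to its 10 nearest neighbors
6:     if Pr < Pr_threshold
7:       Label the point as noise
8:     else
9:       Keep the point as signal, create a new cluster C_j, and add the point and its nearest neighbors to C_j
10:      while (C_j contains neighbor points that have not yet been traversed)
11:        Find their 10 nearest neighbors and add the unclassified ones to C_j
12:      end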
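A minimal pure-Python sketch of the clustering-with-denoising loop in Algorithm 1 follows. The paper does not give the formula for the signal probability Pr or for Pr_threshold, so the sketch uses a hypothetical score, Pr = exp(-d_i / (alpha * d_ref)). Here d_i is the mean distance from a point to its k = 10 nearest neighbors, and d_ref is the median of d_i over the frame, standing in for the point density. With Pr_threshold = exp(-1), the score keeps points whose neighbors are, on average, closer than alpha * d_ref. Brute-force neighbor search replaces the k-d tree. Unlike step 11, the growth step only admits neighbors that themselves pass the threshold; this is an added assumption that keeps noise photons out of clusters. The names `knn`, `detect_targets` and `alpha` are illustrative.

```python
import math
from collections import deque

def knn(points, i, k):
    """Brute-force k nearest neighbors of points[i] as (distance, index); a k-d tree would replace this."""
    dists = sorted((math.dist(points[i], q), j) for j, q in enumerate(points) if j != i)
    return dists[:k]

def detect_targets(points, k=10, alpha=2.0):
    n = len(points)
    neighbors = [knn(points, i, k) for i in range(n)]                   # step 1
    mean_d = [sum(d for d, _ in nb) / len(nb) for nb in neighbors]
    d_ref = max(sorted(mean_d)[n // 2], 1e-12)   # median as a density proxy (assumed)
    pr = [math.exp(-d / (alpha * d_ref)) for d in mean_d]              # hypothetical Pr
    pr_threshold = math.exp(-1.0)                                       # step 2 (assumed)
    labels = [None] * n       # None: unclassified, -1: noise, >= 0: cluster id
    clusters = []
    for i in range(n):                                                  # step 3
        if labels[i] is not None:                                       # step 4
            continue
        if pr[i] < pr_threshold:                                        # steps 5-7
            labels[i] = -1
            continue
        cid = len(clusters)                                             # step 9
        labels[i] = cid
        cluster, queue = [i], deque([i])
        while queue:                                                    # step 10
            j = queue.popleft()
            for _, m in neighbors[j]:                                   # step 11
                # Gate on Pr as well (assumption beyond the algorithm text)
                if labels[m] is None and pr[m] >= pr_threshold:
                    labels[m] = cid
                    cluster.append(m)
                    queue.append(m)
        clusters.append(cluster)
    return clusters, labels
```

The brute-force `knn` costs O(N^2) per frame. A real implementation would build the k-d tree once, as in step 1, and query it for each point.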

Table 1. Main parameters of the single-photon LiDAR system

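| Parameter | Value |
| --- | --- |
| Wavelength | 1550 nm |
| Single-pulse energy | 100 μJ |
| Repetition rate | 20 kHz |
| Laser divergence angle | 0.15 mrad |
| Angle between adjacent beams | 1.37 mrad |
| Receiver optical aperture | 34 mm |
| Receiver field of view | 0.3 mrad |
| Ranging range | 150-3600 m |
| Ranging error | 20 cm |
| Frame period | 1-3 s |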
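The Table 1 parameters translate directly into sampling geometry. The sketch below uses the small-angle approximation to compute the spacing between adjacent beams and the laser footprint at a given range. It also computes the maximum unambiguous range set by the 20 kHz repetition rate, c / (2f). Treating the 0.15 mrad divergence as a full angle and ignoring the exit-aperture size are assumptions, and the function names are illustrative.

```python
C = 299_792_458.0  # speed of light, m/s

def beam_spacing(range_m, angle_rad=1.37e-3):
    """Small-angle distance between adjacent beams at a given range (Table 1: 1.37 mrad)."""
    return range_m * angle_rad

def footprint(range_m, divergence_rad=0.15e-3):
    """Approximate spot diameter, assuming a full-angle divergence and a negligible exit aperture."""
    return range_m * divergence_rad

def max_unambiguous_range(rep_rate_hz=20e3):
    """Range at which a return arrives just as the next pulse fires: c / (2 f)."""
    return C / (2.0 * rep_rate_hz)
```

At 600 m, for example, adjacent beams are about 0.82 m apart and the footprint is about 9 cm. The unambiguous range of roughly 7.5 km exceeds the 3600 m upper limit of the ranging range.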

Table 2. Tracking results for different types of ships

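| Ship type | Number | Key points and lines | L-shape fitting | Minimum-area fitting |
| --- | --- | --- | --- | --- |
| Large ship (~150 m) | 167 | 0.5 m | 2.4 m | 6.9 m |
| Small ship (~15 m) | 34 | 0.6 m | 1.1 m | 5.1 m |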
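Table 2 compares the proposed key point-and-line fitting with two baseline box-fitting methods. As an illustration of the minimum-area baseline only, the sketch below fits a rectangle to the top-down (x, y) projection of a cluster. It searches headings in [0°, 90°) and keeps the one with the smallest bounding-box area, which is the area criterion used in search-based L-shape fitting. The 1° search step and the function name are assumptions, and this is not the implementation used in the paper.

```python
import math

def min_area_rectangle(points, step_deg=1.0):
    """Angle search over [0, 90) deg; returns (area, heading_rad, length, width, center)."""
    best = None
    for s in range(int(round(90.0 / step_deg))):
        th = math.radians(s * step_deg)
        c, sn = math.cos(th), math.sin(th)
        u = [x * c + y * sn for x, y in points]    # projection on e1 = (cos, sin)
        v = [-x * sn + y * c for x, y in points]   # projection on e2 = (-sin, cos)
        du, dv = max(u) - min(u), max(v) - min(v)
        area = du * dv
        if best is None or area < best[0]:
            cu, cv = (max(u) + min(u)) / 2, (max(v) + min(v)) / 2
            # Rotate the box center back into the (x, y) frame
            center = (cu * c - cv * sn, cu * sn + cv * c)
            best = (area, th, du, dv, center)
    return best
```

The box depends only on the extreme projections along each axis. A few outlying noise points or a partially observed hull can therefore shift the fitted heading and center.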

Table 3. Average computation time of the algorithm

| Processing step | Duration (s) |
| --- | --- |
| Frame acquisition | 3 |
| Initial frame | 3.2 |
| Subsequent frames | 1.02 |

