AIR-PolSAR-Seg-2.0: Polarimetric SAR Ground Terrain Classification Dataset for Large-scale Complex Scenes


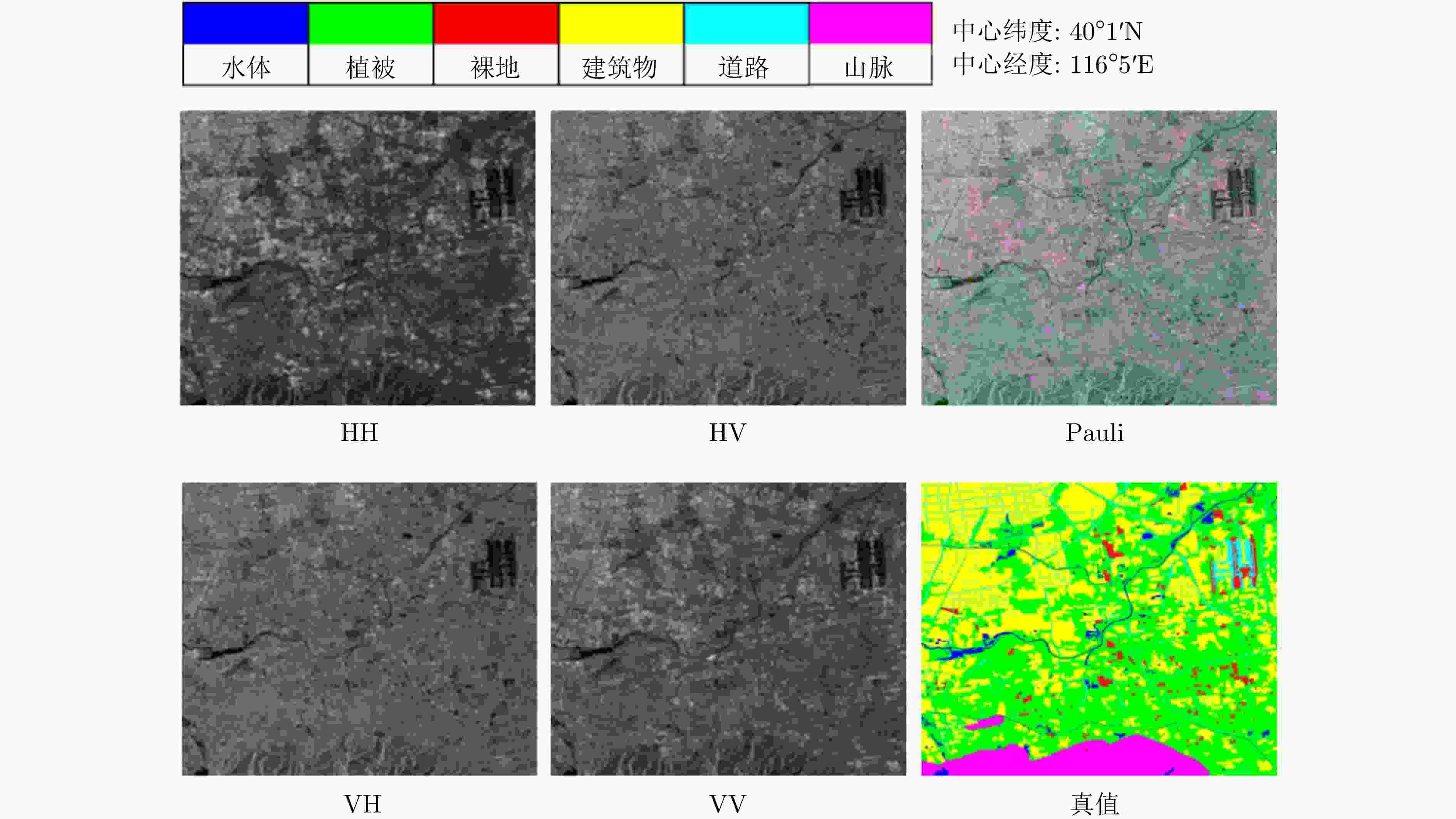

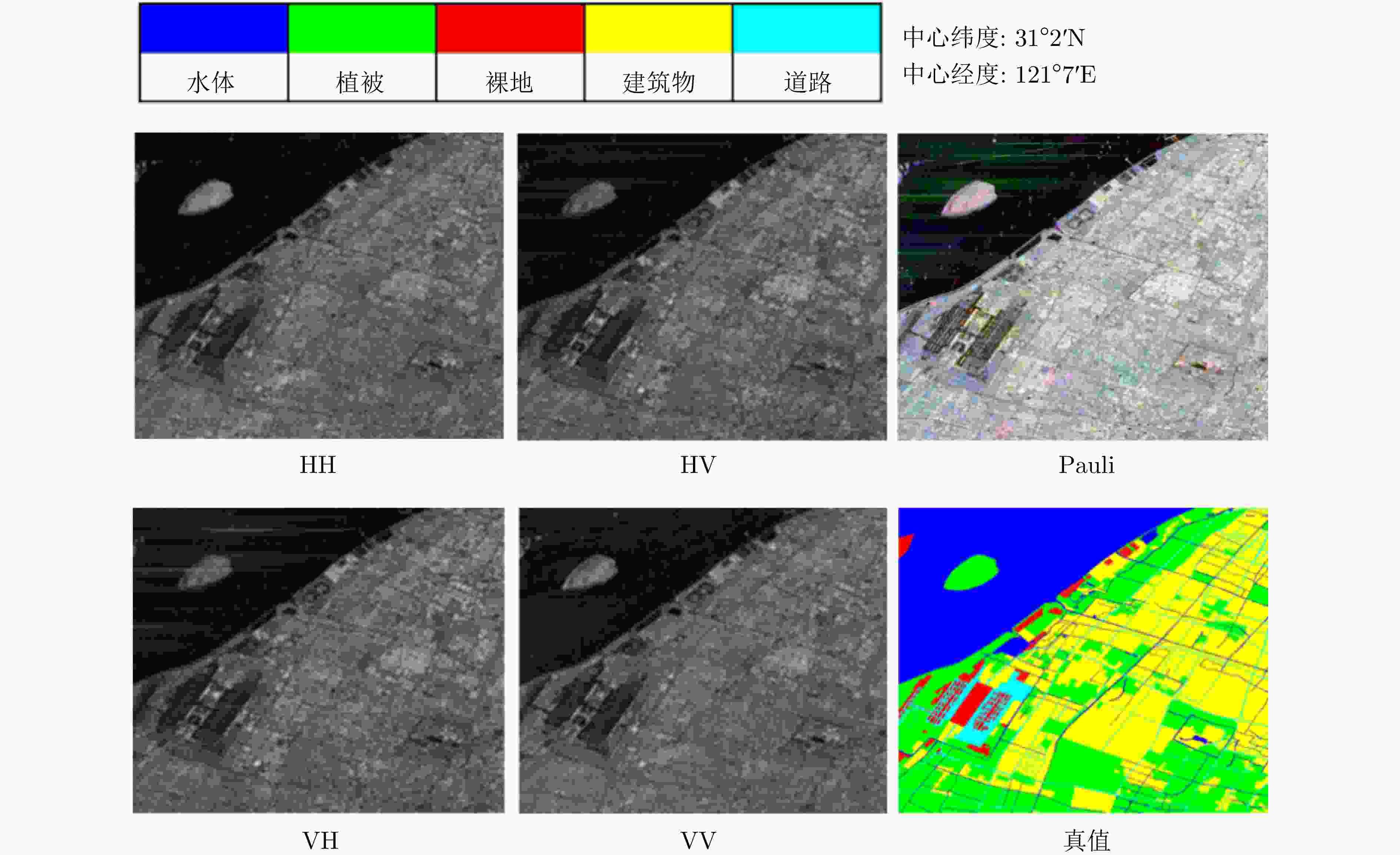

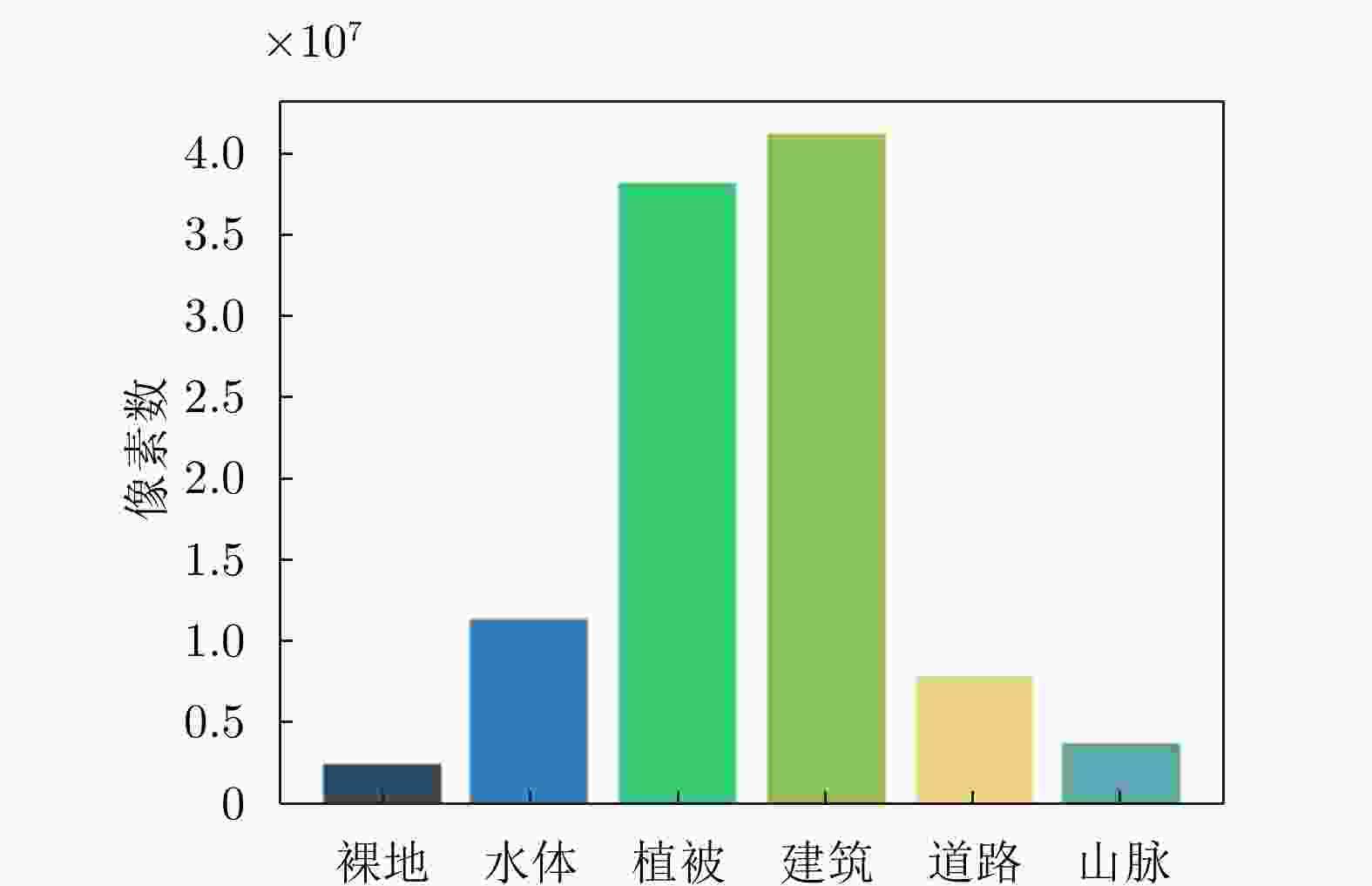

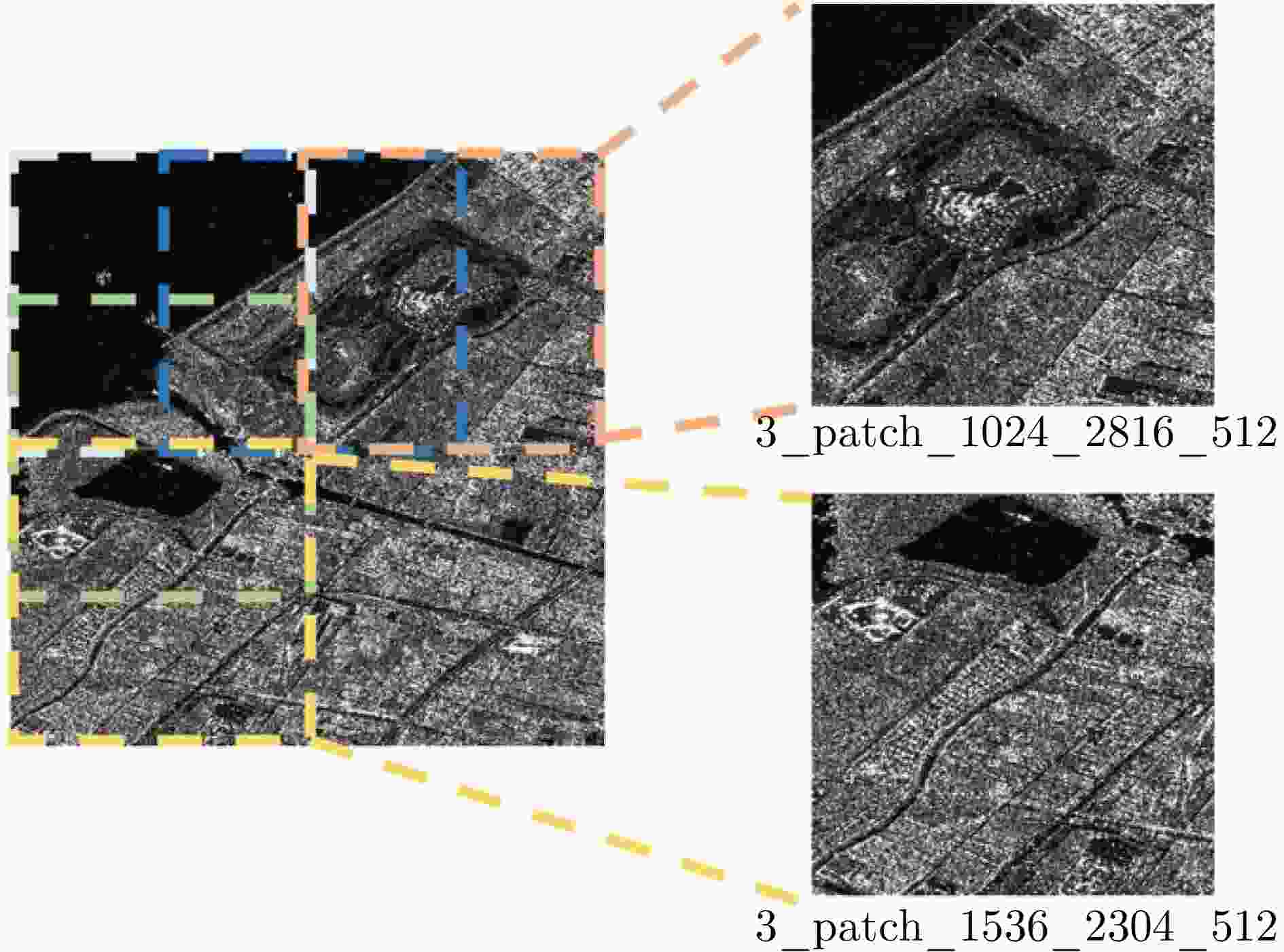

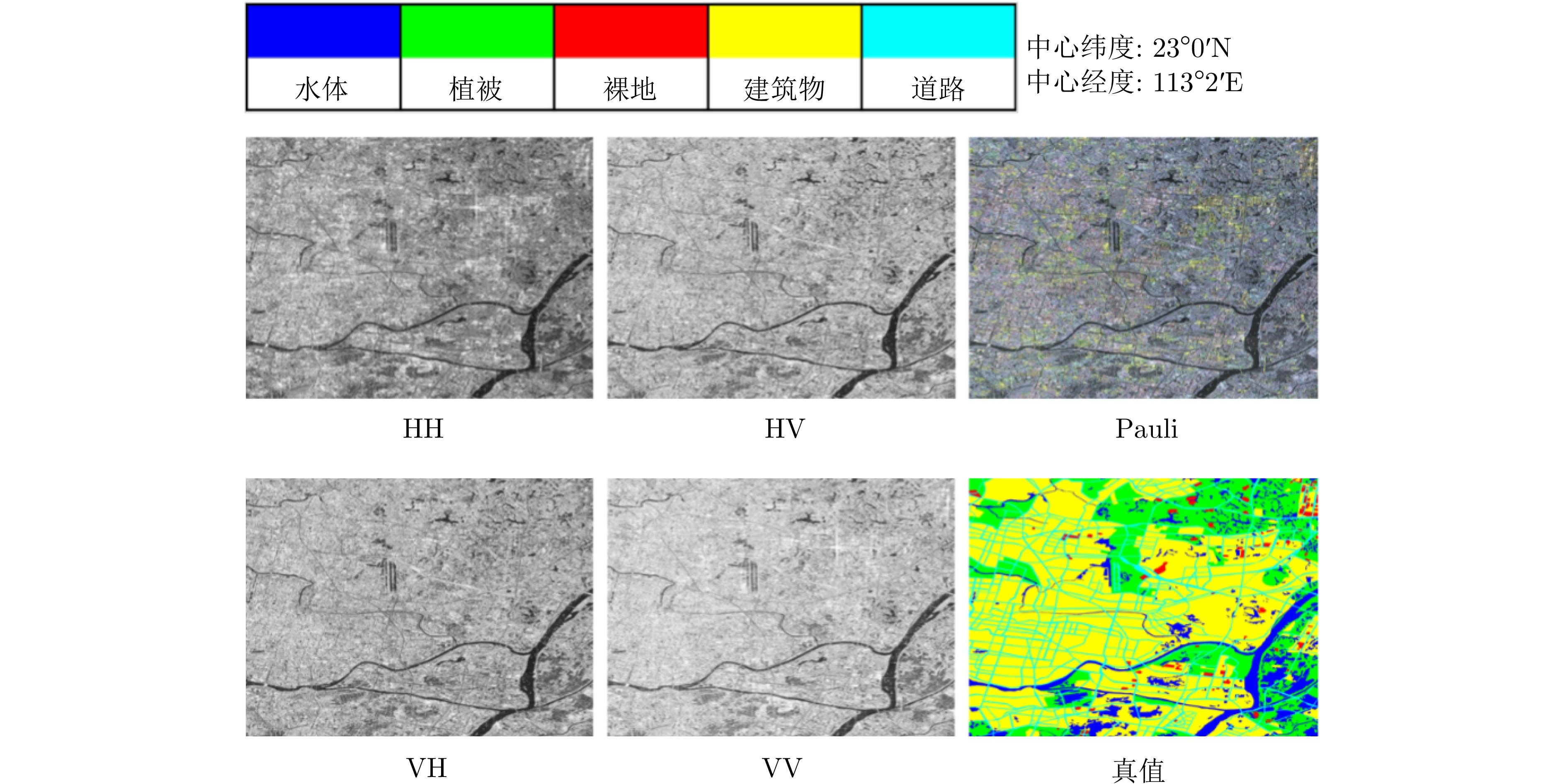

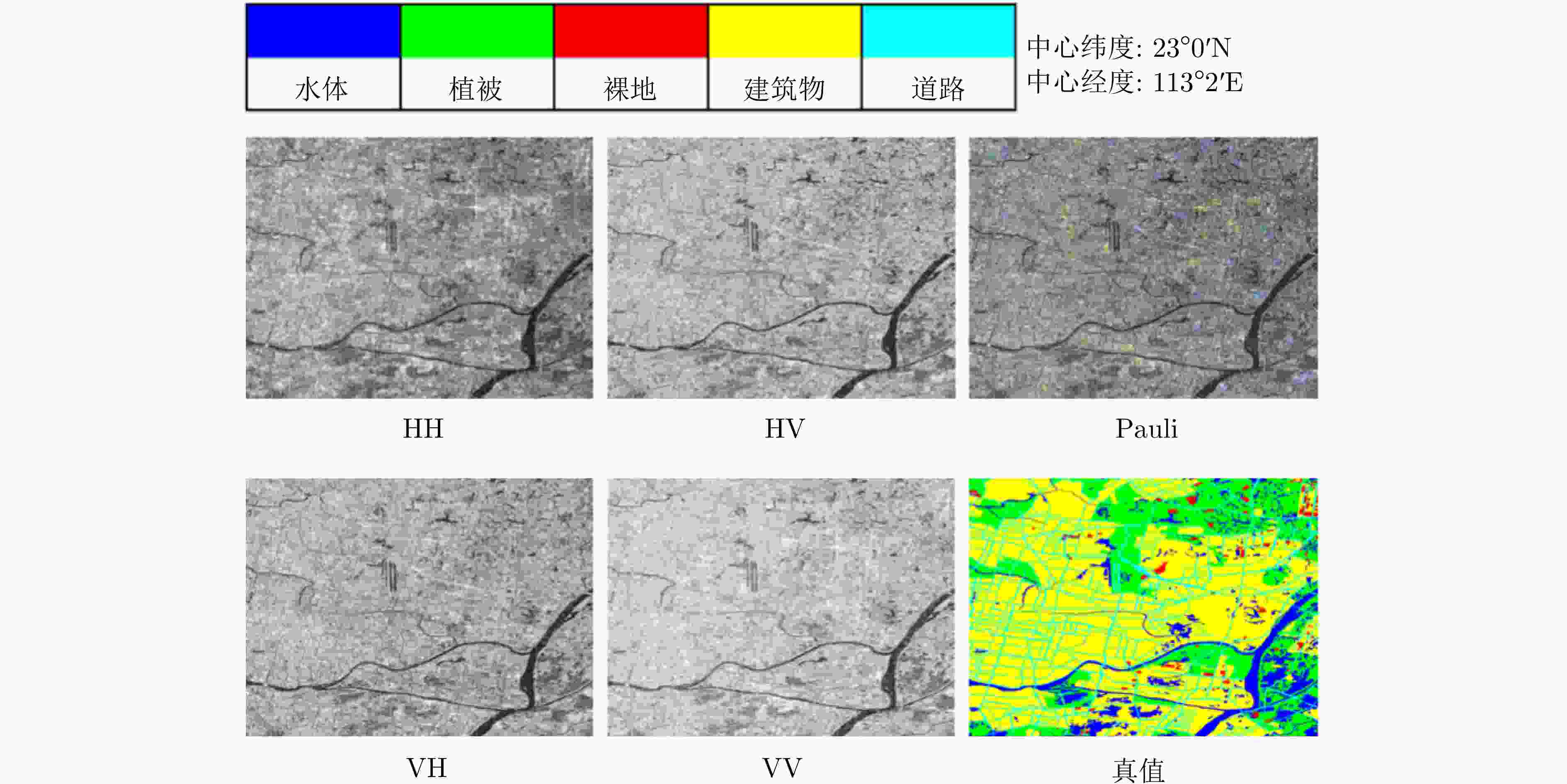

Abstract: Ground terrain classification of Polarimetric Synthetic Aperture Radar (PolSAR) imagery is an active research topic in intelligent SAR image interpretation. To further advance research in this field, this paper compiles and releases AIR-PolSAR-Seg-2.0, a PolSAR ground terrain classification dataset for large-scale complex scenes.

The dataset consists of three Level-1A (L1A) complex SAR images acquired by the Gaofen-3 satellite over different regions. The images have a spatial resolution of 8 m and four polarization channels (HH, HV, VH and VV). They cover six typical terrain categories: water, vegetation, bare land, buildings, roads and mountains. The dataset is characterized by:

- large, complex scenes;
- a mix of strong and weak scatterers;
- irregular class boundaries;
- widely varying object scales;
- an imbalanced class distribution.

To facilitate experimental validation, the three full scenes are cropped into 24,672 patches of 512×512 pixels, and a set of widely used deep learning segmentation methods is benchmarked on them. DANet, which is built on dual self-attention (position and channel attention), performs best. It reaches a mean Intersection over Union (mIoU) of 85.96% on amplitude data and 87.03% on amplitude-phase fusion data. The dataset and these baseline results are intended to support further research on PolSAR ground terrain classification.
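The abstract distinguishes amplitude data from amplitude-phase fusion data, both derived from the complex L1A pixels. The sketch below shows one way to obtain the two inputs from a complex sample with in-phase (I) and quadrature (Q) parts. The helper names are hypothetical. Fusing by stacking the four amplitudes and four phases into an 8-value vector is an assumption for illustration, not the paper's stated preprocessing.

```python
import cmath
import math

# Polarization channels listed in the dataset description.
POLS = ("HH", "HV", "VH", "VV")


def to_amplitude_phase(i: float, q: float) -> tuple[float, float]:
    """Return (amplitude, phase) of one complex sample z = I + jQ.

    The phase is in radians, in the range (-pi, pi].
    """
    z = complex(i, q)
    return abs(z), cmath.phase(z)


def fused_features(pixel: dict[str, complex]) -> list[float]:
    """Hypothetical amplitude-phase fusion for one pixel.

    Assumption: fusion is plain channel stacking, i.e. the four
    amplitudes followed by the four phases (8 values in total).
    """
    amplitudes = [abs(pixel[p]) for p in POLS]
    phases = [cmath.phase(pixel[p]) for p in POLS]
    return amplitudes + phases


if __name__ == "__main__":
    amp, ph = to_amplitude_phase(3.0, 4.0)
    print(amp, round(math.degrees(ph), 2))
```

SAR amplitudes span a large dynamic range, so they are often log-scaled (for example to dB) before training. This section does not say whether the benchmark does so.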


Table 1. Details of the image data for the three regions in the AIR-PolSAR-Seg-2.0 dataset

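| Region ID | Resolution (m) | Longitude | Latitude | Image size (pixels) | Acquisition date | Imaging mode | Polarizations |
|---|---|---|---|---|---|---|---|
| 1 | 8 | 113°2' | 23°0' | 5456 × 4708 | Nov. 2016 | Quad-polarization Stripmap I (QPSI) | HH, HV, VH, VV |
| 2 | 8 | 116°5' | 40°1' | 7820 × 6488 | Sep. 2016 | Quad-polarization Stripmap I (QPSI) | HH, HV, VH, VV |
| 3 | 8 | 121°7' | 31°2' | 6014 × 4708 | Jan. 2019 | Quad-polarization Stripmap I (QPSI) | HH, HV, VH, VV |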
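Table 1 gives the full scene sizes, and the abstract states that the scenes were cut into 512×512-pixel patches. A minimal tiling sketch follows.

This section does not give the stride or say whether patches overlap, so `stride` is a free parameter here. With non-overlapping tiles the three scenes yield only a few hundred patches, so this sketch does not try to reproduce the reported count of 24,672. Edge tiles are shifted inward so that every patch lies fully inside the image. That is one common choice among several; padding is another. The function names are hypothetical.

```python
def tile_origins(length: int, tile: int = 512, stride: int = 512) -> list[int]:
    """Start offsets along one axis so that tiles of size `tile` cover [0, length).

    The last tile is shifted back to end exactly at the image border,
    so it may overlap its neighbour. Axes shorter than one tile get a
    single origin at 0 (such images would need padding in practice).
    """
    if length <= tile:
        return [0]
    origins = list(range(0, length - tile + 1, stride))
    if origins[-1] + tile < length:
        origins.append(length - tile)
    return origins


def tile_grid(height: int, width: int, tile: int = 512,
              stride: int = 512) -> list[tuple[int, int]]:
    """Top-left (row, col) corners of all patches for one scene."""
    return [
        (y, x)
        for y in tile_origins(height, tile, stride)
        for x in tile_origins(width, tile, stride)
    ]


if __name__ == "__main__":
    # Scene sizes from Table 1; which number is height is an assumption
    # (it does not change the patch count).
    scenes = {1: (4708, 5456), 2: (6488, 7820), 3: (4708, 6014)}
    for region, (h, w) in scenes.items():
        print(region, len(tile_grid(h, w)))
```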

Table 2. Category IDs and color codes of the ground terrain categories in the AIR-PolSAR-Seg-2.0 dataset

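| Category ID | Region 1 (class, RGB) | Region 2 (class, RGB) | Region 3 (class, RGB) |
|---|---|---|---|
| C1 | Water (0, 0, 255) | Water (0, 0, 255) | Water (0, 0, 255) |
| C2 | Vegetation (0, 255, 0) | Vegetation (0, 255, 0) | Vegetation (0, 255, 0) |
| C3 | Bare land (255, 0, 0) | Bare land (255, 0, 0) | Bare land (255, 0, 0) |
| C4 | Road (0, 255, 255) | Road (0, 255, 255) | Road (0, 255, 255) |
| C5 | Building (255, 255, 0) | Building (255, 255, 0) | Building (255, 255, 0) |
| C6 | – | Mountain (255, 0, 255) | – |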
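Table 2 encodes each category as an RGB color in the label images. The minimal sketch below turns such a color mask into integer class indices.

Several choices here are assumptions for illustration:

- the integer indices run from 0 to 5 in the C1 to C6 order of Table 2;
- unlisted colors get the ignore value 255;
- the mask is a plain list of rows of RGB tuples.

For full-size scenes, a vectorized array implementation would be preferable.

```python
# Hypothetical palette: RGB codes from Table 2 mapped to integer indices
# 0-5 in the C1-C6 order of that table (the index choice is an assumption).
PALETTE: dict[tuple[int, int, int], int] = {
    (0, 0, 255): 0,      # C1 water
    (0, 255, 0): 1,      # C2 vegetation
    (255, 0, 0): 2,      # C3 bare land
    (0, 255, 255): 3,    # C4 road
    (255, 255, 0): 4,    # C5 building
    (255, 0, 255): 5,    # C6 mountain (present in region 2 only)
}
IGNORE_INDEX = 255  # assumed label for any color not listed in Table 2


def rgb_mask_to_indices(mask: list[list[tuple[int, int, int]]]) -> list[list[int]]:
    """Convert a row-major RGB label mask into a mask of class indices."""
    return [[PALETTE.get(tuple(px), IGNORE_INDEX) for px in row] for row in mask]


if __name__ == "__main__":
    demo = [[(0, 0, 255), (0, 255, 0)],
            [(255, 0, 255), (10, 10, 10)]]
    print(rgb_mask_to_indices(demo))
```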

Table 3. Comparison of AIR-PolSAR-Seg-2.0 and AIR-PolSAR-Seg datasets

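| Dataset | Data content | Resolution | Terrain categories | Scenes and image size | Number and size of samples |
|---|---|---|---|---|---|
| AIR-PolSAR-Seg-2.0 | L1A complex SAR data (amplitude and phase images); polarizations HH, HV, VH, VV | 8 m | 6 classes: water, vegetation, bare land, building, road, mountain | 3 scenes: 5456 × 4708, 7820 × 6488 and 6014 × 4708 pixels | 24,672 patches of 512 × 512 pixels |
| AIR-PolSAR-Seg | L2 SAR amplitude images; polarizations HH, HV, VH, VV | 8 m | 6 classes: housing area, industrial area, natural area, land-use area, water area, other | 1 scene: 9082 × 9805 pixels | 2,000 patches of 512 × 512 pixels |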

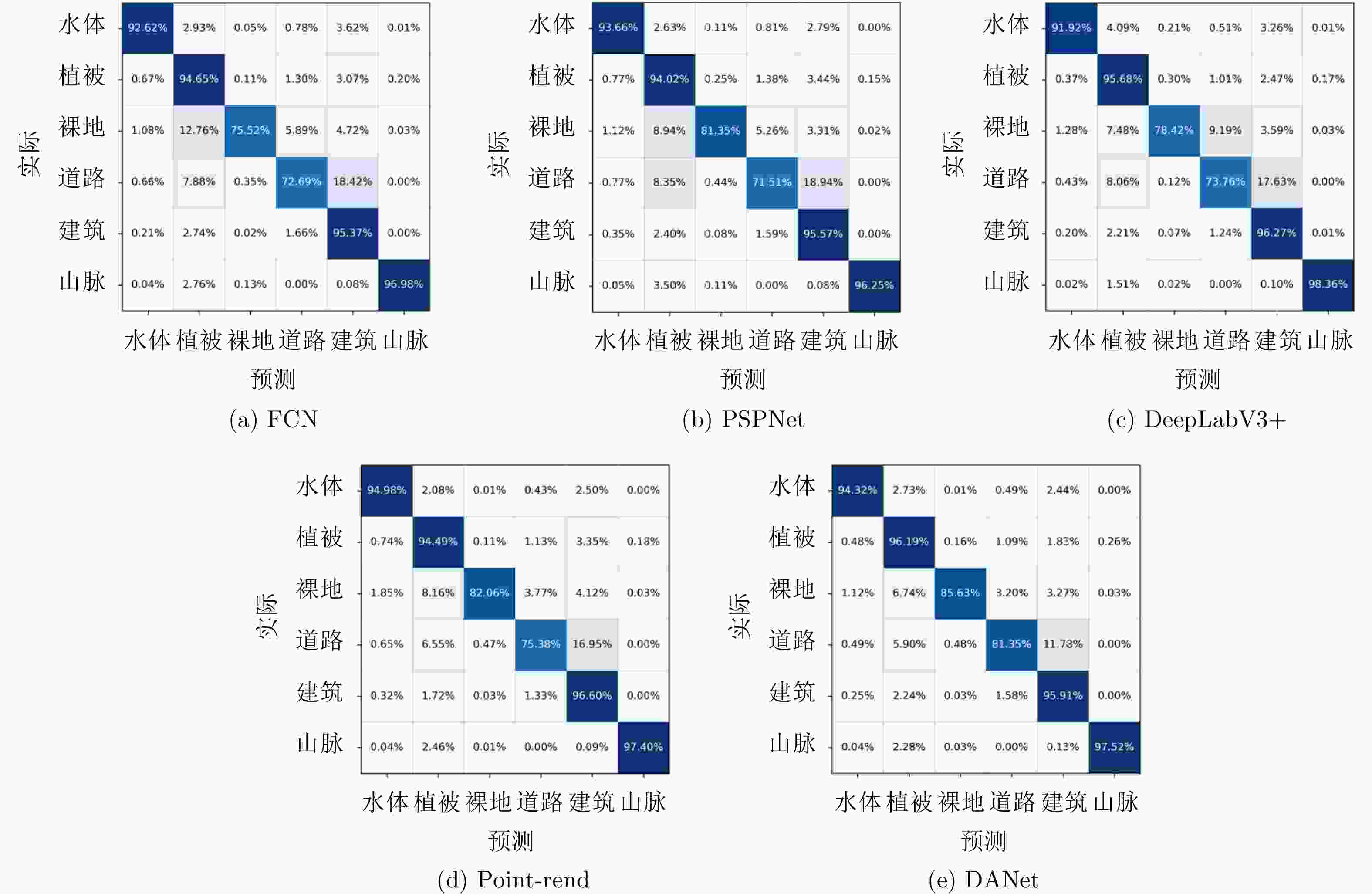

Table 4. Comparative results of different methods in experiments based on amplitude data (%)

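| Method | C0 (IoU/PA) | C1 (IoU/PA) | C2 (IoU/PA) | C3 (IoU/PA) | C4 (IoU/PA) | C5 (IoU/PA) | PA | mPA | mIoU | Kappa |
|---|---|---|---|---|---|---|---|---|---|---|
| FCN | 89.33/93.44 | 88.34/94.03 | 75.58/79.25 | 62.07/75.31 | 88.58/95.03 | 90.53/92.15 | 92.46 | 88.20 | 82.40 | 89.13 |
| PSPNet | 89.78/93.30 | 88.22/94.05 | 73.93/78.82 | 60.00/70.24 | 88.56/**95.88** | 92.55/93.19 | 92.41 | 87.58 | 82.17 | 89.02 |
| DeepLabV3+ | 90.44/93.11 | 89.54/95.18 | 69.66/73.98 | 63.15/76.61 | 89.93/95.44 | **95.98**/**98.17** | 93.12 | 88.75 | 83.12 | 90.08 |
| PointRend | 90.63/93.70 | 89.53/**96.06** | 79.87/84.31 | 62.19/70.82 | 89.57/95.32 | 95.43/96.65 | 93.23 | 89.48 | 84.54 | 90.21 |
| DANet | **91.06**/**94.49** | **90.51**/95.62 | **80.51**/**84.76** | **67.39**/**78.67** | **90.66**/95.59 | 95.62/97.28 | **93.92** | **91.07** | **85.96** | **91.24** |

Note: Bold entries indicate the best result in each column.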
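Table 4 reports per-class IoU and PA, plus overall PA, mPA, mIoU and the Kappa coefficient. This section does not define these metrics, so the sketch below uses the standard confusion-matrix definitions. It reads per-class PA as class-wise recall (correct pixels divided by the ground-truth pixels of that class) and Kappa as Cohen's kappa. Treat both readings as assumptions. The function name is hypothetical.

```python
from statistics import mean


def segmentation_metrics(cm: list[list[int]]) -> dict:
    """Pixel-level metrics from a square confusion matrix.

    cm[i][j] is the number of pixels whose ground-truth class is i and
    whose predicted class is j.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    tp = [cm[k][k] for k in range(n)]
    gt = [sum(cm[k]) for k in range(n)]                          # row sums
    pred = [sum(cm[i][k] for i in range(n)) for k in range(n)]   # column sums

    # IoU = TP / (TP + FP + FN) = TP / (gt + pred - TP). Classes absent from
    # both ground truth and prediction score 0.0 here; many toolkits skip them.
    iou = [tp[k] / (gt[k] + pred[k] - tp[k]) if gt[k] + pred[k] - tp[k] else 0.0
           for k in range(n)]
    # Per-class PA taken as recall: TP / ground-truth pixels (assumption).
    class_pa = [tp[k] / gt[k] if gt[k] else 0.0 for k in range(n)]

    pa = sum(tp) / total                                      # overall pixel accuracy
    pe = sum(gt[k] * pred[k] for k in range(n)) / total ** 2  # chance agreement
    kappa = (pa - pe) / (1 - pe)                              # Cohen's kappa

    return {
        "IoU": iou,
        "classPA": class_pa,
        "PA": pa,
        "mPA": mean(class_pa),
        "mIoU": mean(iou),
        "Kappa": kappa,
    }


if __name__ == "__main__":
    print(segmentation_metrics([[3, 1], [2, 4]]))
```

As a consistency check, averaging DANet's six per-class IoU values in Table 4 gives 85.96%, which matches its reported mIoU. This is consistent with mIoU being the unweighted mean over classes. The same holds for its mPA of 91.07%.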

Table 5. Comparative results of different methods in experiments based on amplitude and phase fusion data (%)

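| Method | C0 (IoU/PA) | C1 (IoU/PA) | C2 (IoU/PA) | C3 (IoU/PA) | C4 (IoU/PA) | C5 (IoU/PA) | PA | mPA | mIoU | Kappa |
|---|---|---|---|---|---|---|---|---|---|---|
| FCN | 89.62/92.62 | 88.39/94.65 | 73.16/75.52 | 61.84/72.69 | 88.58/95.37 | 94.60/96.98 | 92.55 | 87.97 | 82.70 | 89.23 |
| PSPNet | 89.87/93.66 | 88.31/94.02 | 76.38/81.35 | 60.90/71.51 | 88.70/95.57 | 94.51/96.25 | 92.57 | 88.73 | 83.11 | 89.27 |
| DeepLabV3+ | 89.82/91.92 | 89.93/95.68 | 73.78/78.42 | 64.25/73.76 | 90.14/96.27 | **96.23**/**98.36** | 93.39 | 89.07 | 84.02 | 90.44 |
| PointRend | 91.25/**94.98** | 90.03/94.49 | 79.49/82.06 | 66.19/75.38 | 90.06/**96.60** | 95.23/97.40 | 93.66 | 90.15 | 85.38 | 90.84 |
| DANet | **91.73**/94.32 | **91.17**/**96.19** | **82.38**/**85.63** | **70.86**/**81.35** | **91.48**/95.91 | 94.54/97.52 | **94.46** | **91.82** | **87.03** | **92.03** |

Note: Bold entries indicate the best result in each column.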
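Tables 4 and 5 evaluate the same methods with the same metrics, so the effect of adding phase information can be read off directly. The snippet below copies the mIoU column from both tables and computes the per-method gain in percentage points. It is plain arithmetic on the reported numbers, not a re-run of the experiments. The dictionary and function names are hypothetical.

```python
# mIoU (%) copied from Table 4 (amplitude) and Table 5 (amplitude-phase fusion).
MIOU_AMPLITUDE = {"FCN": 82.40, "PSPNet": 82.17, "DeepLabV3+": 83.12,
                  "PointRend": 84.54, "DANet": 85.96}
MIOU_FUSION = {"FCN": 82.70, "PSPNet": 83.11, "DeepLabV3+": 84.02,
               "PointRend": 85.38, "DANet": 87.03}


def fusion_gain(amp: dict[str, float], fused: dict[str, float]) -> dict[str, float]:
    """Percentage-point change in mIoU when phase is added to amplitude."""
    return {method: round(fused[method] - amp[method], 2) for method in amp}


if __name__ == "__main__":
    for method, gain in fusion_gain(MIOU_AMPLITUDE, MIOU_FUSION).items():
        print(f"{method}: {gain:+.2f} pp")
```

All five methods improve when phase is added, by 0.30 to 1.07 percentage points. DANet gains the most.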
