HRRP Unsupervised Target Feature Extraction Method Based on Multiple Contrastive Loss in Radar Sensor Networks


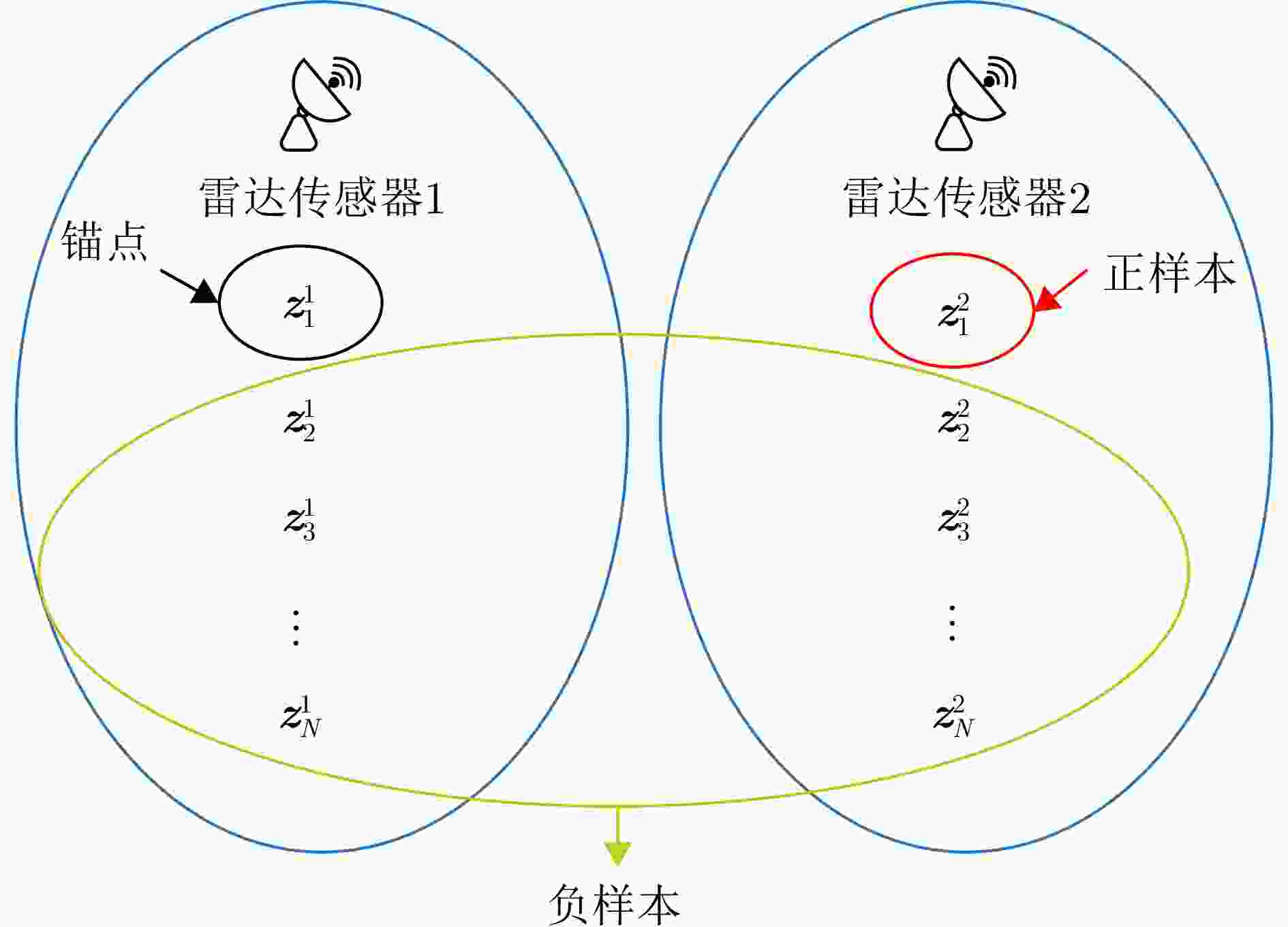

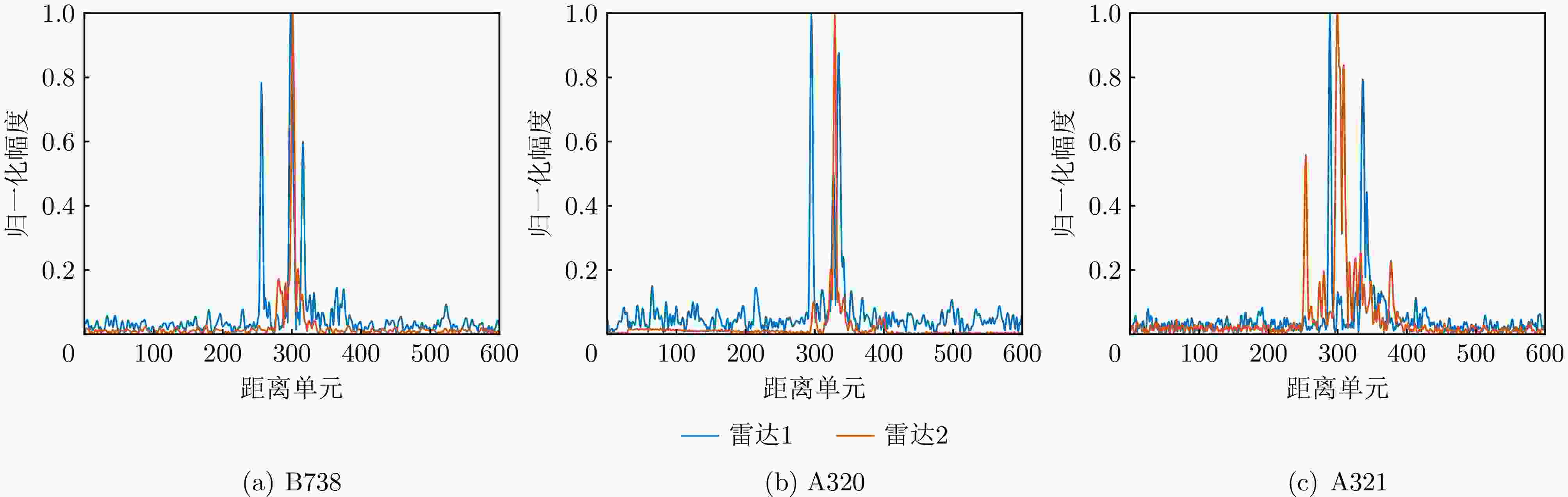

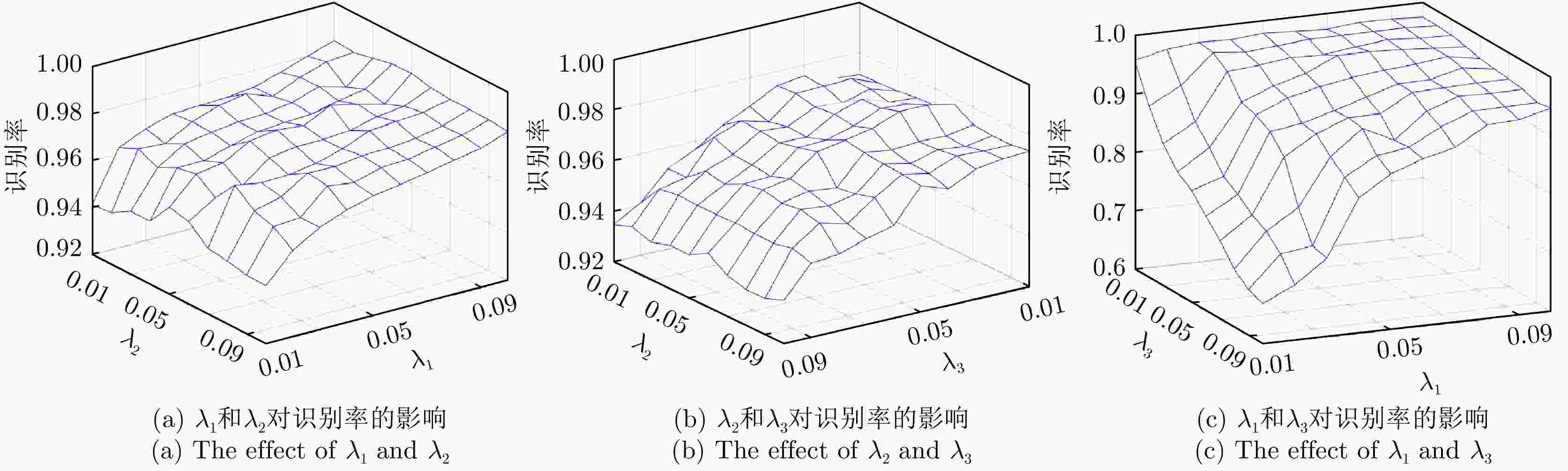

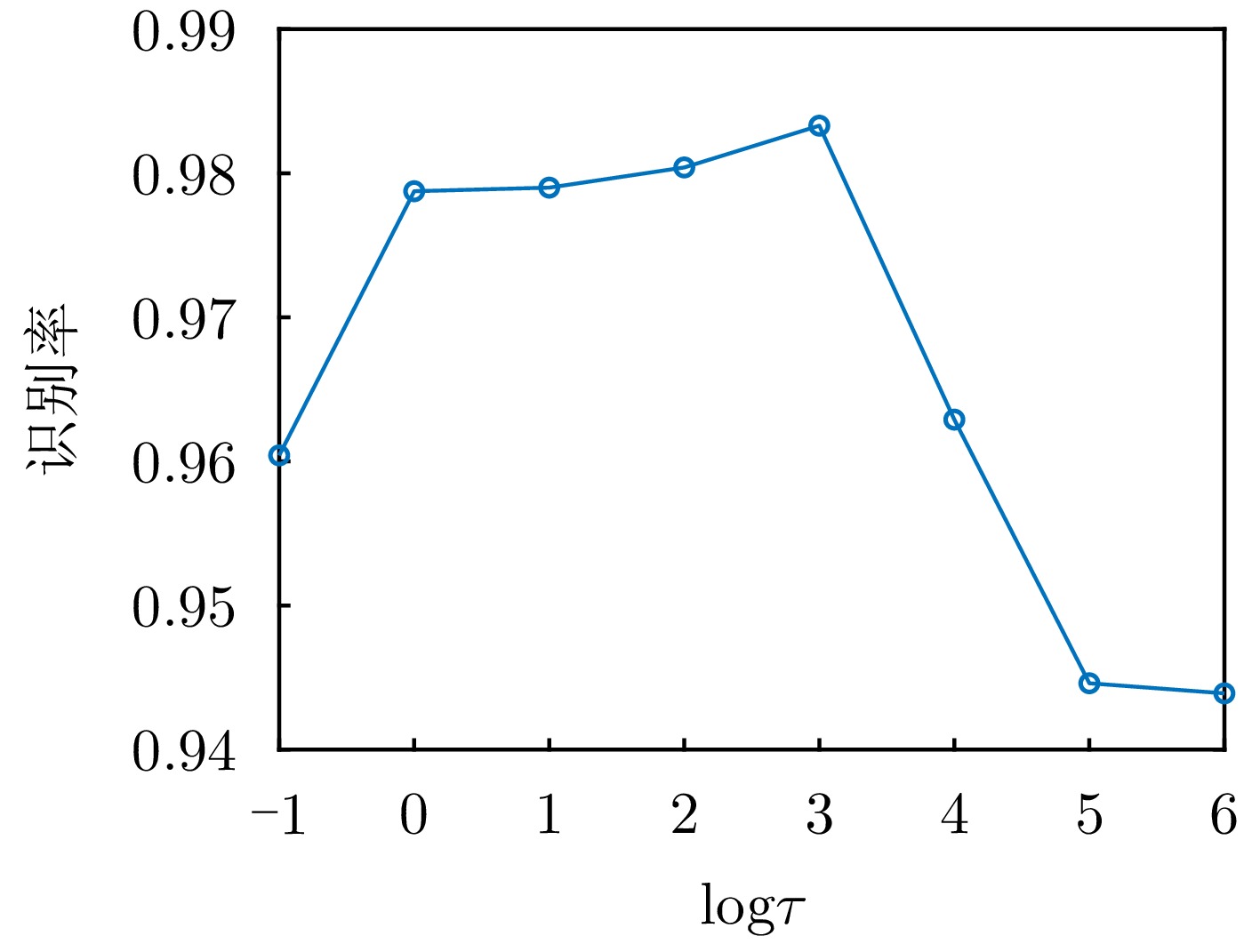

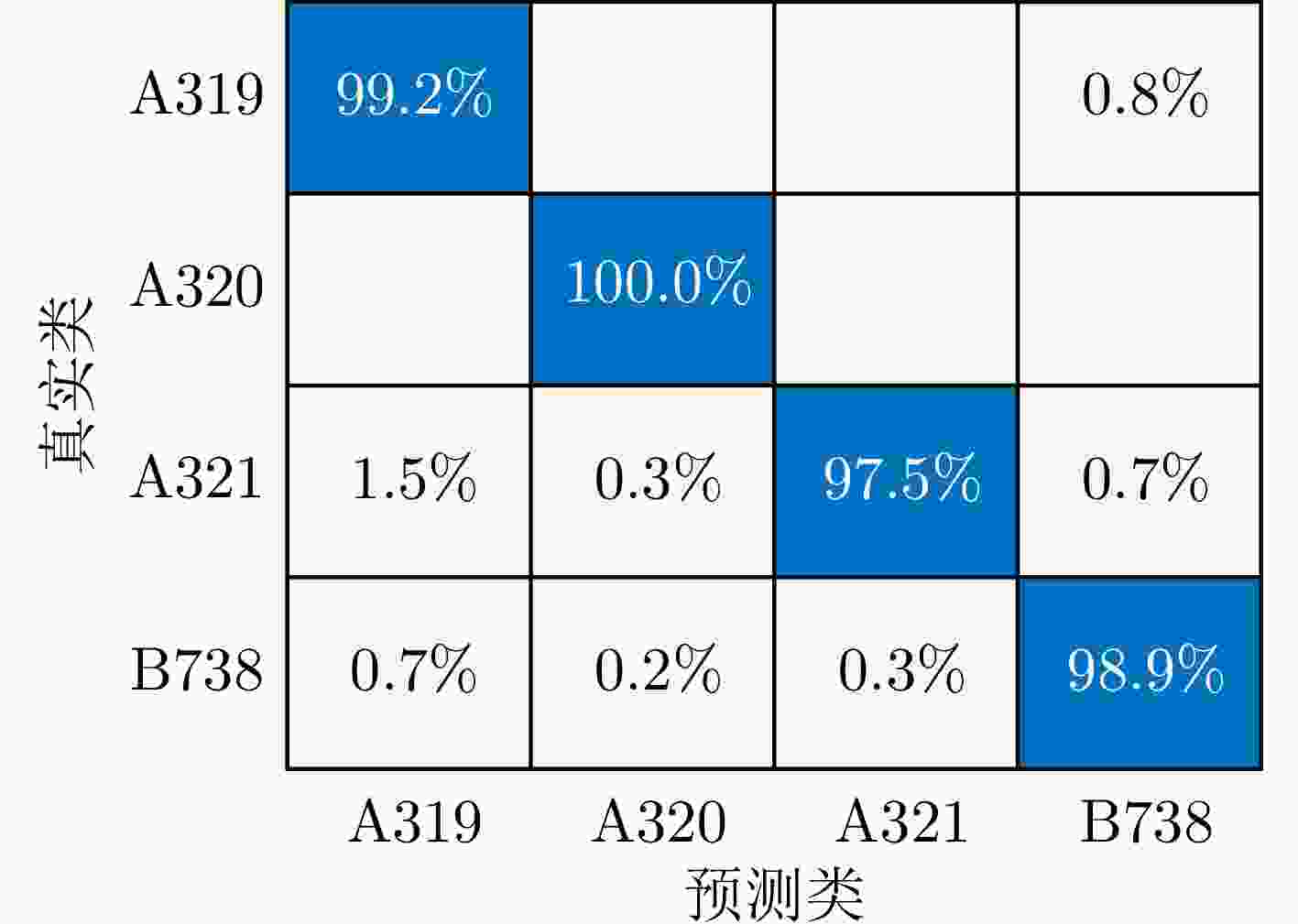

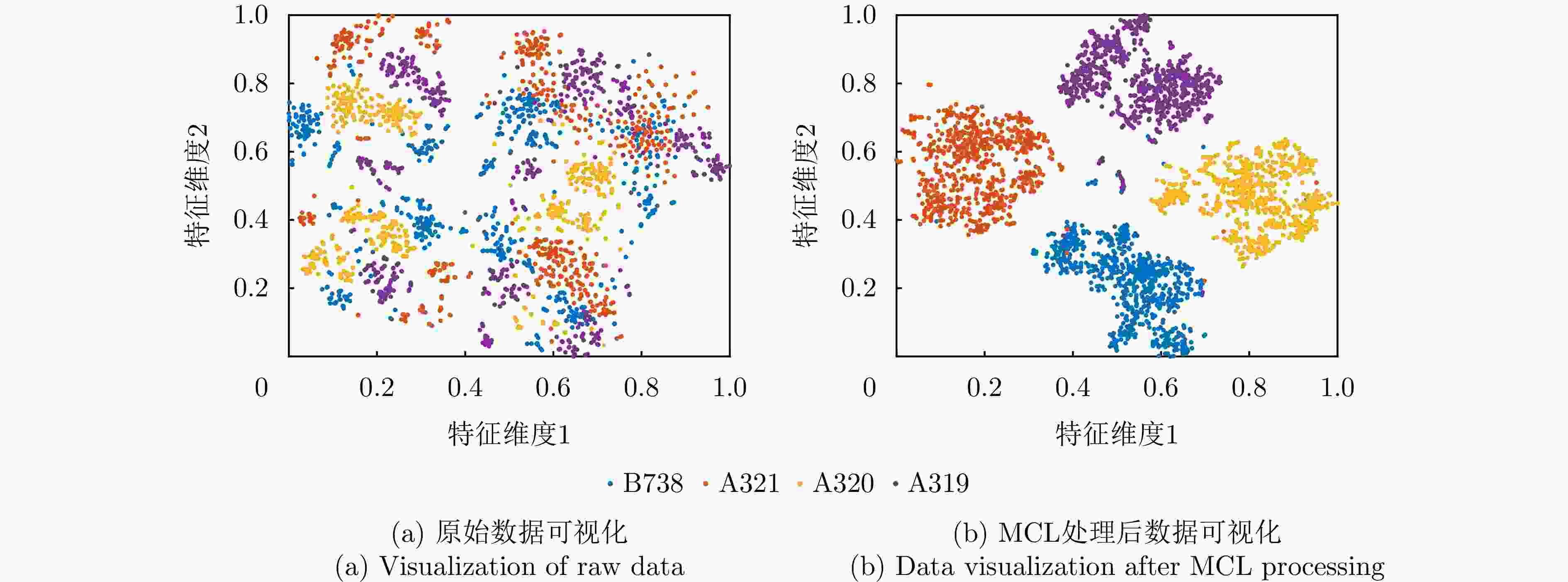

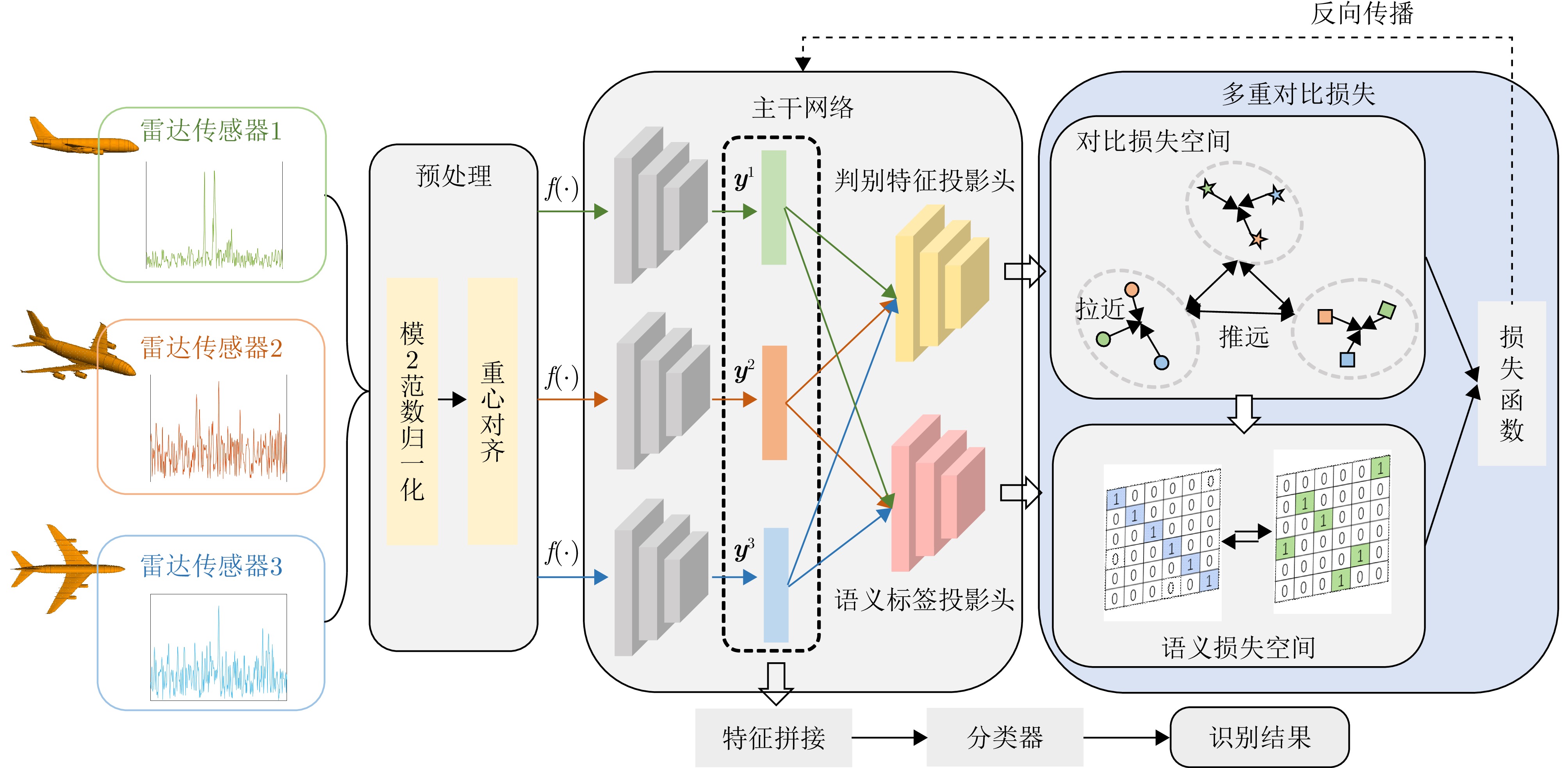

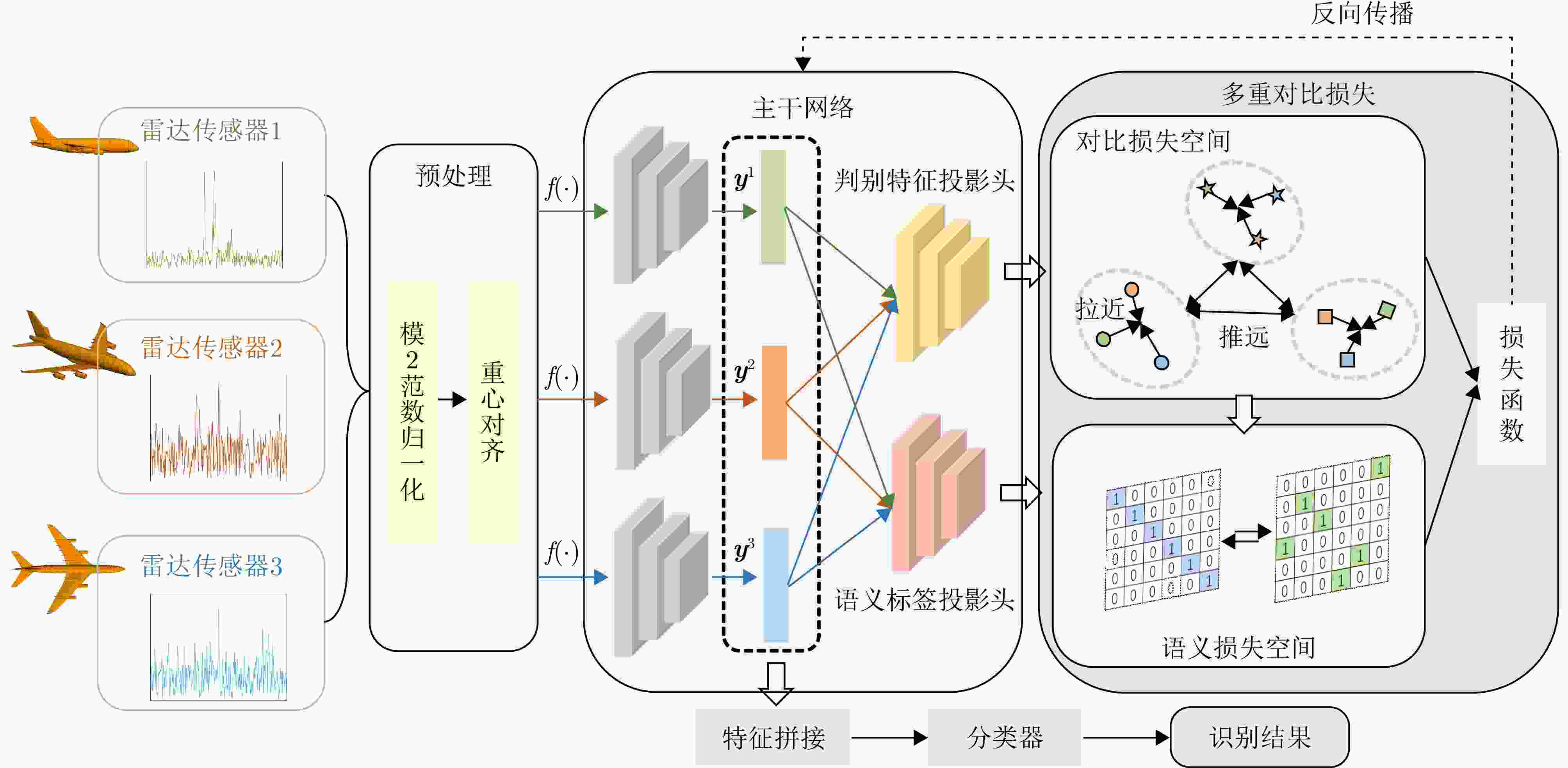

Abstract: In recent years, target recognition systems based on radar sensor networks have been widely studied in the field of automatic target recognition. Such systems observe a target from multiple angles to achieve robust recognition, which raises the problem of how to exploit both the correlated and the differing information in the echo data of multiple radar sensors. Furthermore, most existing studies rely on large-scale labeled data to obtain prior knowledge of the target, while a large amount of unlabeled data goes unused in target recognition tasks. This paper therefore proposes an HRRP unsupervised target feature extraction method based on Multiple Contrastive Loss (MCL) for radar sensor networks. The method jointly applies three loss constraints, namely an instance-level loss, a Fisher loss, and a semantic consistency loss, to find feature vectors that are consistent and discriminative across the echoes of multiple radar sensors; these features are then used in subsequent target recognition tasks. Specifically, the original echo data are mapped into a contrastive loss space and a semantic label space. In the contrastive loss space, contrastive losses constrain the similarity and aggregation of samples, so that the relative and absolute distances between echoes of the same target obtained by different sensors are reduced, while those between echoes of different targets are increased. In the semantic label space, the extracted discriminative features are used to constrain the semantic labels, so that the semantic information is consistent with the discriminative features. Experiments on a measured civil aircraft dataset show that MCL improves target recognition accuracy by 0.4 and 1.4 percentage points over the state-of-the-art unsupervised algorithm CC and the supervised target recognition algorithm PNN, respectively. Furthermore, MCL effectively improves target recognition performance when multiple radar sensors operate cooperatively.
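The abstract describes two constraints in the contrastive loss space: an instance-level loss that pulls together echoes of the same target seen by different sensors, and a Fisher loss that tightens the absolute spread of each target's echoes. Eqs. (11) and (12) are not reproduced in this section, so the NumPy sketch below is only an illustration under stated assumptions. The instance-level term is written as the SimCLR-style NT-Xent loss (Table 3 lists SimCLR as the instance-level-loss method), and `fisher_style_loss` is a hypothetical Fisher-criterion ratio that treats the M sensor views of one sample as a group. Neither function is claimed to be the paper's exact formulation.

```python
import numpy as np


def l2_normalize(z, eps=1e-12):
    """Scale each vector (last axis) to unit L2 norm so dot products are cosine similarities."""
    return z / (np.linalg.norm(z, axis=-1, keepdims=True) + eps)


def instance_level_loss(z_a, z_b, tau=0.5):
    """SimCLR-style NT-Xent loss between two sensors' embeddings (assumed form of Eq. (11)).

    Row i of z_a and row i of z_b are echoes of the same target seen by two
    sensors (a positive pair); every other row in the batch is a negative.
    """
    n = z_a.shape[0]
    z = l2_normalize(np.concatenate([z_a, z_b], axis=0))      # (2n, d)
    sim = z @ z.T / tau                                         # temperature-scaled cosine similarity
    np.fill_diagonal(sim, -np.inf)                              # a sample is never its own negative
    pos = np.concatenate([np.arange(n, 2 * n), np.arange(n)])   # index of each row's partner
    row_max = sim.max(axis=1, keepdims=True)                    # numerically stable log-softmax
    log_prob = sim - row_max - np.log(np.exp(sim - row_max).sum(axis=1, keepdims=True))
    return -log_prob[np.arange(2 * n), pos].mean()


def fisher_style_loss(views, eps=1e-8):
    """Hypothetical Fisher-criterion loss: within-sample scatter / between-sample scatter.

    views has shape (M, n, d): M sensors' embeddings of the same n samples.
    Without labels, the M views of one sample are treated as one group.
    """
    views = l2_normalize(views)
    sample_mean = views.mean(axis=0)                            # (n, d) centre of each sample's views
    global_mean = sample_mean.mean(axis=0)                      # (d,) centre of all samples
    within = ((views - sample_mean) ** 2).sum(axis=-1).mean()   # spread of views around their sample
    between = ((sample_mean - global_mean) ** 2).sum(axis=-1).mean()
    return within / (between + eps)
```

Both functions return lower values when the sensors' embeddings of the same sample agree and different samples stay apart, which is the behaviour the abstract asks of the contrastive loss space.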


Algorithm 1. MCL algorithm

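Input: radar sensor network observations $\boldsymbol{x}_i^m$, number of target classes $C$, temperature parameter $\tau$, balance parameter $\lambda$.

Output: feature representation $\boldsymbol{y}_i^m$ of each observed sample.

1: Preprocess the HRRP data using Eqs. (4) and (5).

2: Compute the feature representations, discriminative feature vectors, and semantic label vectors of the radar sensor network observations using Eqs. (6), (7), and (8).

3: Compute the instance-level loss, Fisher loss, and semantic loss using Eqs. (11), (12), and (18), respectively.

4: Optimize the network weights $f$ and $W$ with the Adam algorithm.

5: Compute the feature representation $\boldsymbol{y}_i^m$ of each observed sample using Eq. (6).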
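Steps 2 and 3 of Algorithm 1 produce semantic label vectors (Eq. (8)) and a semantic loss (Eq. (18)); neither equation is reproduced in this section. As one plausible reading, the sketch below follows the cluster-level loss of CC [26], which Table 3 describes as combining instance-level and label losses. Each sensor's semantic label vectors are softmax outputs over the C classes, the C columns of the two sensors' probability matrices are contrasted with NT-Xent, and an entropy term discourages assigning every sample to one class. The function names and the temperature default are assumptions chosen for illustration.

```python
import numpy as np


def softmax(logits):
    """Row-wise softmax: turns per-sample logits into C-class semantic label vectors."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(shifted)
    return e / e.sum(axis=1, keepdims=True)


def nt_xent(a, b, tau):
    """NT-Xent over rows: row i of a and row i of b form the positive pair."""
    k = a.shape[0]
    z = np.concatenate([a, b], axis=0)
    z = z / (np.linalg.norm(z, axis=1, keepdims=True) + 1e-12)
    sim = z @ z.T / tau
    np.fill_diagonal(sim, -np.inf)                               # exclude self-similarity
    pos = np.concatenate([np.arange(k, 2 * k), np.arange(k)])
    row_max = sim.max(axis=1, keepdims=True)
    log_prob = sim - row_max - np.log(np.exp(sim - row_max).sum(axis=1, keepdims=True))
    return -log_prob[np.arange(2 * k), pos].mean()


def semantic_consistency_loss(logits_a, logits_b, tau=1.0):
    """CC-style cluster-level loss on two sensors' semantic label vectors (hypothetical Eq. (18)).

    Columns of the (n, C) probability matrices act as class representations:
    column c from sensor a should match column c from sensor b.
    """
    p_a, p_b = softmax(logits_a), softmax(logits_b)              # (n, C) each
    contrast = nt_xent(p_a.T, p_b.T, tau)                         # contrast the C columns
    entropy = 0.0
    for p in (p_a, p_b):
        marginal = p.sum(axis=0) / p.sum()                        # share of samples per class
        entropy -= (marginal * np.log(marginal + 1e-12)).sum()
    return contrast - entropy                                     # high entropy lowers the loss
```

With identical, class-balanced assignments from two sensors the loss is lower than when one sensor's assignments are shifted to different classes or when every sample collapses into a single class.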

Table 1. Physical parameters of the targets

| Aircraft type | Length (m) | Wingspan (m) | Height (m) |
|---|---|---|---|
| A321 | 44.51 | 34.10 | 11.76 |
| A320 | 37.57 | 34.10 | 11.76 |
| A319 | 33.84 | 34.10 | 11.76 |
| B738 | 39.50 | 35.79 | 12.50 |

Table 2. Description of the measured HRRP dataset

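| Parameter | Value |
|---|---|
| Bandwidth | 200 MHz |
| Target type | Civil aircraft |
| Number of target types | 4 |
| Training samples per radar | 4800 |
| Test samples per radar | 2400 |
| Sample dimension | 600 |
| Observation angle, radar 1 | 0°–15° |
| Observation angle, radar 2 | 45°–60° |
| Observation angle, radar 3 | 80°–95° |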
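Step 1 of Algorithm 1 preprocesses each 600-dimensional profile in Table 2 with Eqs. (4) and (5), which are not shown in this section. The sketch below assumes a common HRRP pipeline rather than the paper's exact equations: take the magnitude of each profile, circularly shift it so that its energy centroid sits in the middle range cell (reducing time-shift sensitivity), and divide it by its L2 norm (reducing amplitude-scale sensitivity). The function names are hypothetical.

```python
import numpy as np


def centroid_align(x):
    """Circularly shift each profile so its energy centroid lands on the central range cell."""
    n_cells = x.shape[-1]
    cells = np.arange(n_cells)
    out = x.copy()
    for i, profile in enumerate(x):
        energy = profile ** 2
        total = energy.sum()
        if total == 0:
            continue  # an all-zero profile has no centroid; leave it unchanged
        centroid = int(round(float((cells * energy).sum() / total)))
        out[i] = np.roll(profile, n_cells // 2 - centroid)
    return out


def l2_normalize_profile(x, eps=1e-12):
    """Amplitude normalization: divide each profile by its L2 norm."""
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)


def preprocess(x):
    """Hypothetical stand-in for Eqs. (4)-(5): magnitude, centroid alignment, L2 normalization."""
    return l2_normalize_profile(centroid_align(np.abs(x)))
```

For an input of shape (number of samples, 600), every output profile has unit norm and its energy centred at range cell 300.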

Table 3. Recognition rates of various HRRP recognition methods on the measured HRRP dataset

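Unsupervised contrastive learning methods

| Method | Strategy | Pe = 0.01 | Pe = 0.05 | Pe = 0.10 | Parameters (M) |
|---|---|---|---|---|---|
| Baseline | Frozen network; only the classification head is trained | 0.591 | 0.676 | 0.729 | 0.065 |
| MoCo[24] | Dictionary lookup and momentum update | 0.926 | 0.934 | 0.949 | 0.298 |
| SwAV[25] | Prototype clustering prediction | 0.947 | 0.951 | 0.963 | 0.301 |
| SimCLR[22] | Instance-level loss | 0.967 | 0.979 | 0.984 | 0.298 |
| CC[26] | Instance-level and label losses | 0.969 | 0.980 | 0.985 | 0.314 |
| MCL (ours) | Triple contrastive loss | 0.977 | 0.986 | 0.989 | 0.308 |
| MCL (label) | Contrastive loss with label constraints | 0.985 | 0.992 | 0.993 | 0.308 |

Supervised HRRP learning methods

| Method | Strategy | Recognition rate | Parameters (M) |
|---|---|---|---|
| Baseline | Cross-entropy loss | 0.927 | 0.226 |
| AMM[27] | Network nesting and dynamic adjustment | 0.969 | 0.051 |
| TACNN[28] | Local feature attention | 0.956 | 0.998 |
| PNN[29] | Parallel neural network | 0.975 | 1.712 |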
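The gains quoted in the abstract can be checked against Table 3. Assuming (an inference; the abstract does not say) that they use the Pe = 0.10 column for MCL and CC, and PNN's single reported rate, they are absolute differences in recognition rate, i.e. percentage points:

```python
# Recognition rates copied from Table 3 (MCL and CC at Pe = 0.10; PNN's single supervised rate).
mcl = 0.989
cc = 0.985
pnn = 0.975

gain_over_cc = round((mcl - cc) * 100, 1)    # percentage points over CC
gain_over_pnn = round((mcl - pnn) * 100, 1)  # percentage points over PNN
print(gain_over_cc, gain_over_pnn)           # prints: 0.4 1.4
```

The same subtraction at Pe = 0.01 gives a 0.8-point gain over CC (0.977 versus 0.969).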

Table 4. Results of the ablation experiment

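| Loss terms used | Pe = 0.01 | Pe = 0.05 | Pe = 0.10 |
|---|---|---|---|
| None (baseline) | 0.591 | 0.676 | 0.729 |
| Instance-level | 0.967 | 0.979 | 0.984 |
| Fisher | 0.937 | 0.944 | 0.957 |
| Semantic | 0.657 | 0.809 | 0.859 |
| Two of the three | 0.968 | 0.982 | 0.986 |
| Two of the three | 0.943 | 0.953 | 0.961 |
| Two of the three | 0.962 | 0.976 | 0.984 |
| Instance-level + Fisher + semantic | 0.977 | 0.986 | 0.989 |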

Table 5. Influence of the number of radar sensors on the recognition rate

| Number of radars | Pe = 0.01 | Pe = 0.05 | Pe = 0.10 |
|---|---|---|---|
| 2 | 0.753 | 0.772 | 0.785 |
| 3 | 0.955 | 0.966 | 0.972 |
| 4 | 0.985 | 0.986 | 0.988 |
| 5 | 0.990 | 0.993 | 0.995 |

[1] CHEN Jian, DU Lan, and LIAO Leiyao. Survey of radar HRRP target recognition based on parametric statistical model[J]. Journal of Radars, 2022, 11(6): 1020–1047. doi: 10.12000/JR22127.

[2] DONG Ganggang and LIU Hongwei. A hierarchical receptive network oriented to target recognition in SAR images[J]. Pattern Recognition, 2022, 126: 108558. doi: 10.1016/j.patcog.2022.108558.

[3] ZHANG Yukun, GUO Xiansheng, LEUNG H, et al. Cross-task and cross-domain SAR target recognition: A meta-transfer learning approach[J]. Pattern Recognition, 2023, 138: 109402. doi: 10.1016/j.patcog.2023.109402.

[4] CHEN Bo, LIU Hongwei, CHAI Jing, et al. Large margin feature weighting method via linear programming[J]. IEEE Transactions on Knowledge and Data Engineering, 2009, 21(10): 1475–1488. doi: 10.1109/TKDE.2008.238.

[5] MOLCHANOV P, EGIAZARIAN K, ASTOLA J, et al. Classification of aircraft using micro-Doppler bicoherence-based features[J]. IEEE Transactions on Aerospace and Electronic Systems, 2014, 50(2): 1455–1467. doi: 10.1109/TAES.2014.120266.

[6] LI Xiaoxiong, ZHANG Shuning, ZHU Yuying, et al. Supervised contrastive learning for vehicle classification based on the IR-UWB radar[J]. IEEE Transactions on Geoscience and Remote Sensing, 2022, 60: 5117312. doi: 10.1109/TGRS.2022.3203468.

[7] CHEN Jian, DU Lan, HE Hua, et al. Convolutional factor analysis model with application to radar automatic target recognition[J]. Pattern Recognition, 2019, 87: 140–156. doi: 10.1016/j.patcog.2018.10.014.

[8] FENG Bo, CHEN Bo, and LIU Hongwei. Radar HRRP target recognition with deep networks[J]. Pattern Recognition, 2017, 61: 379–393. doi: 10.1016/j.patcog.2016.08.012.

[9] DU Lan, LIU Hongwei, WANG Penghui, et al. Noise robust radar HRRP target recognition based on multitask factor analysis with small training data size[J]. IEEE Transactions on Signal Processing, 2012, 60(7): 3546–3559. doi: 10.1109/TSP.2012.2191965.

[10] XU Bin, CHEN Bo, WAN Jinwei, et al. Target-aware recurrent attentional network for radar HRRP target recognition[J]. Signal Processing, 2019, 155: 268–280. doi: 10.1016/j.sigpro.2018.09.041.

[11] SHI Lei, WANG Penghui, LIU Hongwei, et al. Radar HRRP statistical recognition with local factor analysis by automatic Bayesian Ying-Yang harmony learning[J]. IEEE Transactions on Signal Processing, 2011, 59(2): 610–617. doi: 10.1109/TSP.2010.2088391.

[12] LIAO Leiyao, DU Lan, and CHEN Jian. Class factorized complex variational auto-encoder for HRR radar target recognition[J]. Signal Processing, 2021, 182: 107932. doi: 10.1016/j.sigpro.2020.107932.

[13] MAO Chengchen and LIANG Jing. HRRP recognition in radar sensor network[J]. Ad Hoc Networks, 2017, 58: 171–178. doi: 10.1016/j.adhoc.2016.09.001.

[14] LUNDÉN J and KOIVUNEN V. Deep learning for HRRP-based target recognition in multistatic radar systems[C]. 2016 IEEE Radar Conference, Philadelphia, USA, 2016: 1–6. doi: 10.1109/RADAR.2016.7485271.

[15] ZHANG Pengfei, LI Gang, HUO Chaoying, et al. Classification of drones based on micro-Doppler radar signatures using dual radar sensors[J]. Journal of Radars, 2018, 7(5): 557–564. doi: 10.12000/JR18061.

[16] GUO Shuai, CHEN Ting, WANG Penghui, et al. Multistation cooperative radar target recognition based on an angle-guided transformer fusion network[J]. Journal of Radars, 2023, 12(3): 516–528. doi: 10.12000/JR23014.

[17] LYU Xiaoling, QIU Xiaolan, YU Wenming, et al. Simulation-assisted SAR target classification based on unsupervised domain adaptation and model interpretability analysis[J]. Journal of Radars, 2022, 11(1): 168–182. doi: 10.12000/JR21179.

[18] WU Zhirong, XIONG Yuanjun, YU S X, et al. Unsupervised feature learning via non-parametric instance discrimination[C]. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, USA, 2018: 3733–3742. doi: 10.1109/CVPR.2018.00393.

[19] ÖZDEMIR C. Inverse Synthetic Aperture Radar Imaging with MATLAB Algorithms[M]. 2nd ed. Hoboken: John Wiley & Sons, 2021: 167–170.

[20] DU Lan, WANG Penghui, LIU Hongwei, et al. Bayesian spatiotemporal multitask learning for radar HRRP target recognition[J]. IEEE Transactions on Signal Processing, 2011, 59(7): 3182–3196. doi: 10.1109/TSP.2011.2141664.

[21] LIAO Kuo, SI Jinxiu, ZHU Fangqi, et al. Radar HRRP target recognition based on concatenated deep neural networks[J]. IEEE Access, 2018, 6: 29211–29218. doi: 10.1109/ACCESS.2018.2842687.

[22] CHEN Ting, KORNBLITH S, NOROUZI M, et al. A simple framework for contrastive learning of visual representations[C]. The 37th International Conference on Machine Learning, Virtual Event, 2020: 149.

[23] WANG Feng and LIU Huaping. Understanding the behaviour of contrastive loss[C]. 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, USA, 2021: 2495–2504. doi: 10.1109/CVPR46437.2021.00252.

[24] HE Kaiming, FAN Haoqi, WU Yuxin, et al. Momentum contrast for unsupervised visual representation learning[C]. 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, USA, 2020: 9729–9738. doi: 10.1109/CVPR42600.2020.00975.

[25] CARON M, MISRA I, MAIRAL J, et al. Unsupervised learning of visual features by contrasting cluster assignments[C]. The 34th International Conference on Neural Information Processing Systems, Vancouver, Canada, 2020: 831.

[26] LI Yunfan, HU Peng, LIU Zitao, et al. Contrastive clustering[C]. The 35th AAAI Conference on Artificial Intelligence, Virtual Event, 2021: 8547–8555. doi: 10.1609/aaai.v35i10.17037.

[27] PAN Mian, LIU Ailin, YU Yanzhen, et al. Radar HRRP target recognition model based on a stacked CNN–Bi-RNN with attention mechanism[J]. IEEE Transactions on Geoscience and Remote Sensing, 2022, 60: 5100814. doi: 10.1109/TGRS.2021.3055061.

[28] CHEN Jian, DU Lan, GUO Guanbo, et al. Target-attentional CNN for radar automatic target recognition with HRRP[J]. Signal Processing, 2022, 196: 108497. doi: 10.1016/j.sigpro.2022.108497.

[29] WU Lingang, HU Shengliang, XU Jianghu, et al. Ship HRRP target recognition against decoy jamming based on CNN-BiLSTM-SE model[J]. IET Radar, Sonar & Navigation, 2024, 18(2): 361–378. doi: 10.1049/rsn2.12507.
