Multidomain Characteristic-guided Multimodal Contrastive Recognition Method for Active Radar Jamming

-

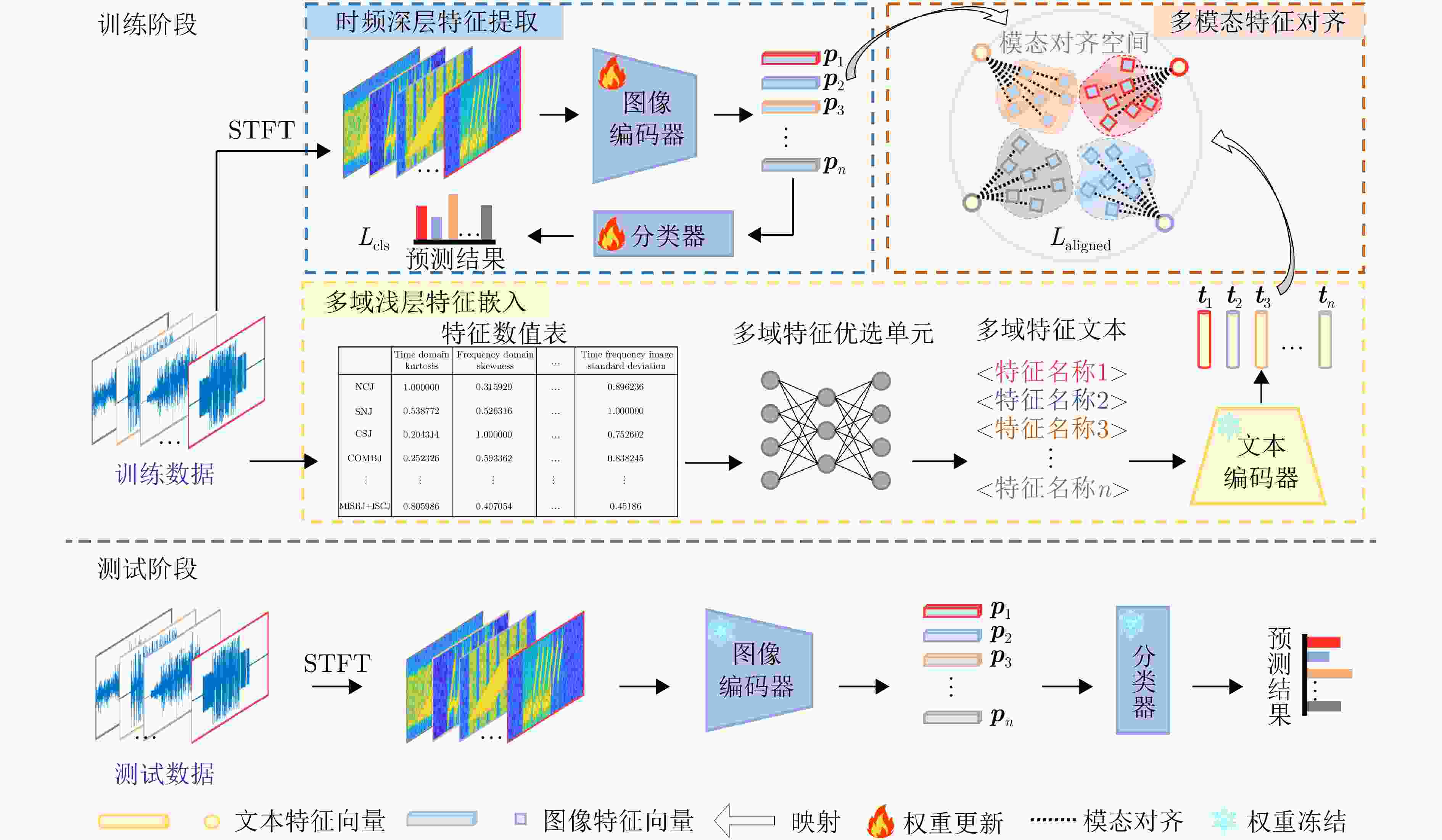

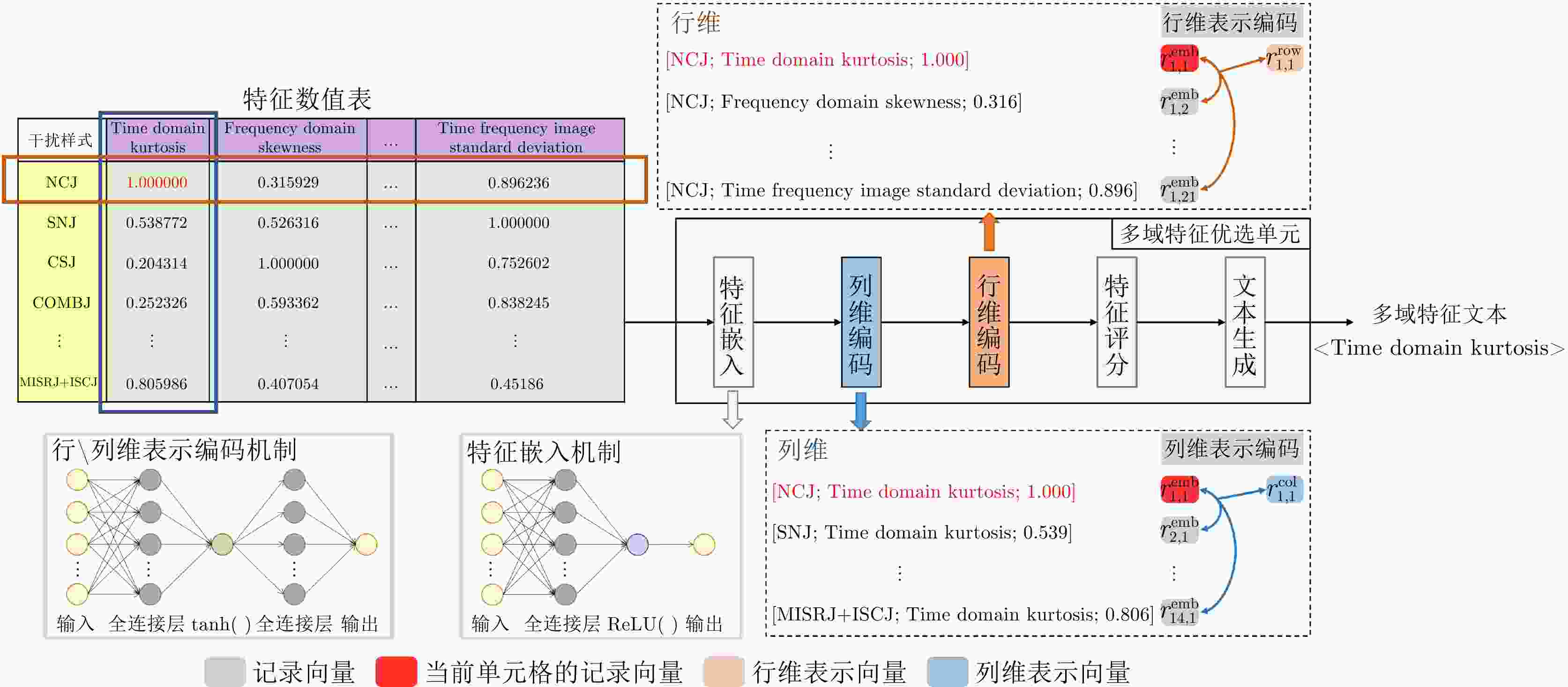

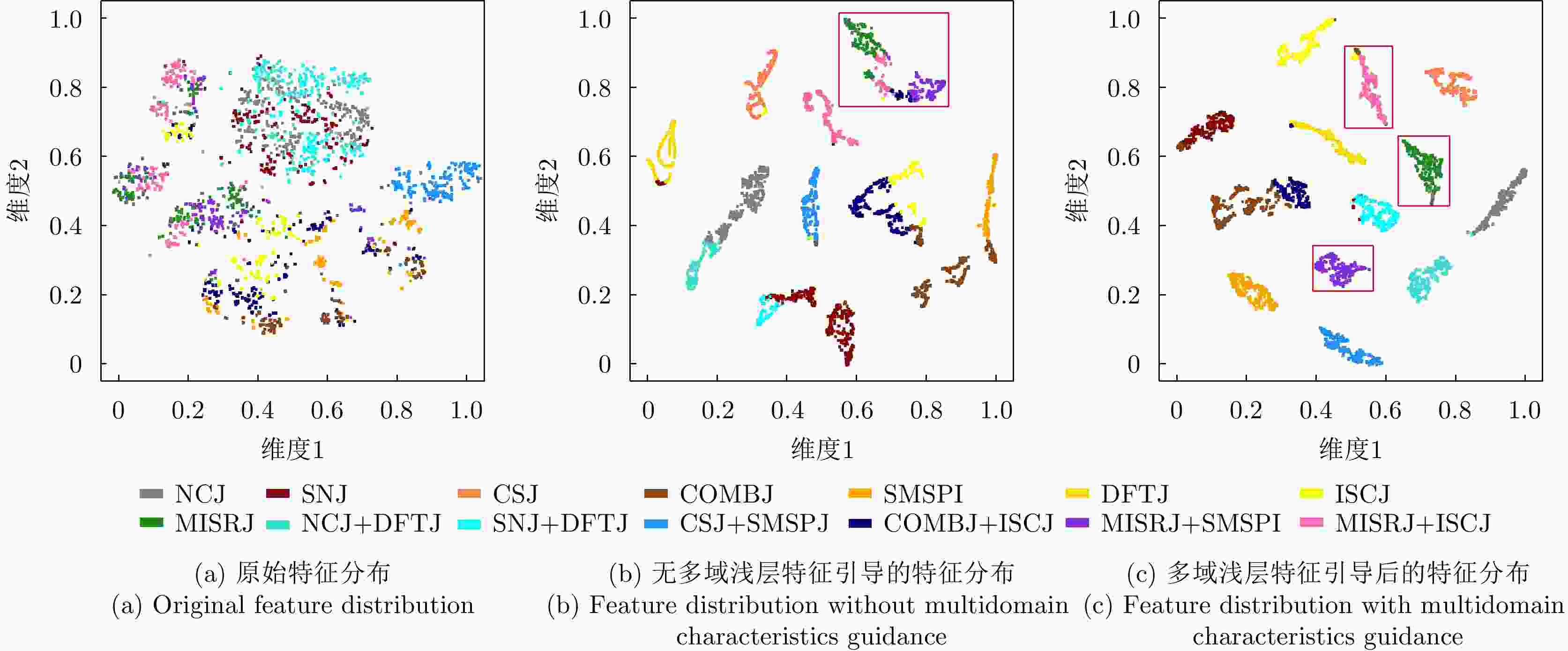

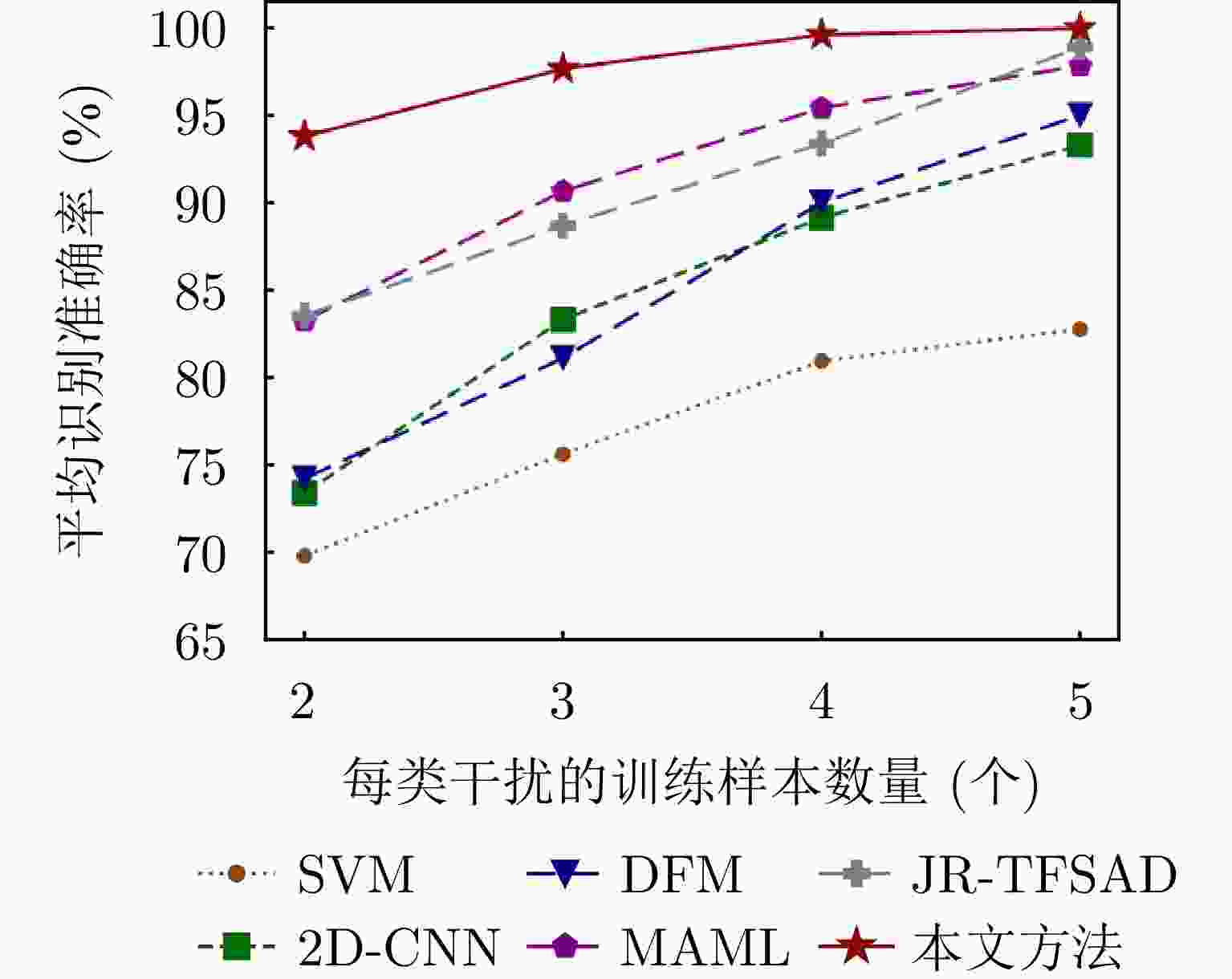

Abstract: In active radar jamming recognition, two problems remain open: how to robustly combine shallow multidomain characteristics with deep time-frequency network features, and how to maintain high recognition accuracy with extremely few training samples. To address them, this paper proposes a multidomain characteristic-guided multimodal contrastive recognition method for active radar jamming. The method first extracts the multidomain characteristics of active jamming, then uses an optimization unit to automatically select the effective characteristics and generate a text modality that carries implicit expert knowledge. The text modality and the time-frequency image are fed into a text encoder and an image encoder, respectively, to build multimodal feature pairs that are mapped into a high-dimensional space for modal alignment. With the text features as anchors, contrastive learning guides the time-frequency image features of the same jamming class to aggregate around them. This optimizes the representation capability of the image encoder and yields recognition features that are more compact within classes and more separated between classes. Experiments show that, compared with existing methods that directly combine multidomain characteristics and deep-network features, the proposed guided combination processes the two kinds of features differently, which improves the discriminability and generalization of the recognition features. Moreover, under extremely small-sample conditions (2~3 training samples per jamming type), the accuracy of the proposed method is 9.84% higher than that of advanced comparison methods, demonstrating its effectiveness and robustness.
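The text-anchored contrastive step described in the abstract can be sketched as follows. This is a minimal illustration under stated assumptions, not the paper's implementation: it assumes one text anchor per jamming class, cosine similarity, and a softmax cross-entropy (InfoNCE-style) loss with temperature `tau`. The function names and toy vectors are hypothetical, and the default `tau=0.75` is simply the best-performing value listed in Table 5.

```python
import math


def l2_normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def text_anchored_loss(image_feats, labels, text_anchors, tau=0.75):
    """Mean cross-entropy that pulls each image feature toward its class's text anchor.

    image_feats : image-encoder outputs, one vector per sample
    labels      : class index of each sample
    text_anchors: one text-encoder output per class (assumed layout)
    tau         : temperature; smaller values sharpen the softmax
    """
    anchors = [l2_normalize(a) for a in text_anchors]
    total = 0.0
    for feat, label in zip(image_feats, labels):
        f = l2_normalize(feat)
        logits = [sum(x * y for x, y in zip(f, a)) / tau for a in anchors]
        # Log-sum-exp with max subtraction for numerical stability.
        m = max(logits)
        log_z = m + math.log(sum(math.exp(z - m) for z in logits))
        total += log_z - logits[label]
    return total / len(image_feats)


# Toy example: two classes, 2-D features (hypothetical values).
anchors = [[1.0, 0.0], [0.0, 1.0]]
aligned = text_anchored_loss([[0.9, 0.1], [0.2, 0.8]], [0, 1], anchors)
swapped = text_anchored_loss([[0.9, 0.1], [0.2, 0.8]], [1, 0], anchors)
print(f"aligned: {aligned:.4f}, swapped: {swapped:.4f}")
```

The loss is lower when each image feature already points toward its own class anchor, which is the behavior that drives same-class features to cluster.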


Table 1. Multidomain characteristics of radar jamming

| Domain | Jamming characteristic |
|---|---|
| Time domain | Time-domain skewness |
| | Time-domain kurtosis |
| | Envelope undulation |
| | Fast intra-pulse modulation recognition parameters |
| Frequency domain | Frequency-domain skewness |
| | Frequency-domain kurtosis |
| | Carrier factor |
| | Additive Gaussian white noise factor |
| Time-frequency domain | Centroid of time-frequency image |
| | Time-frequency image standard deviation |
| Bispectral domain | Bispectral variance |
| | Bispectral mean |
| Wavelet domain | Variance |
| | Mean value |
| | Maximum |
| | Scale center of gravity |
| | Maximum singular value |
| | Central moment feature |
| Statistical domain | Information entropy |
| | Exponential entropy |
| | Norm entropy |
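Several Table 1 entries are standard statistics. As a rough sketch, the skewness, kurtosis, and information (Shannon) entropy of a sample sequence can be computed as below. The definitions used are the textbook ones (population moments, and entropy of the normalized energy distribution); the paper's exact definitions are not given in this section and may differ. All function names and sample values are hypothetical.

```python
import math


def skewness(x):
    """Third standardized moment (population form)."""
    n = len(x)
    mean = sum(x) / n
    m2 = sum((v - mean) ** 2 for v in x) / n
    m3 = sum((v - mean) ** 3 for v in x) / n
    return m3 / m2 ** 1.5


def kurtosis(x):
    """Fourth standardized moment (population form, not excess kurtosis)."""
    n = len(x)
    mean = sum(x) / n
    m2 = sum((v - mean) ** 2 for v in x) / n
    m4 = sum((v - mean) ** 4 for v in x) / n
    return m4 / m2 ** 2


def information_entropy(x):
    """Shannon entropy (nats) of the sequence's normalized energy distribution."""
    energy = [v * v for v in x]
    total = sum(energy)
    probs = [e / total for e in energy if e > 0]
    return -sum(p * math.log(p) for p in probs)


envelope = [0.0, 1.0, 2.0, 3.0, 4.0]  # hypothetical envelope samples
print(skewness(envelope), kurtosis(envelope), information_entropy(envelope))
```

The same functions can be applied to a time-domain envelope or to a magnitude spectrum, depending on which domain the characteristic belongs to.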

Table 2. EffcientNetV2 architecture

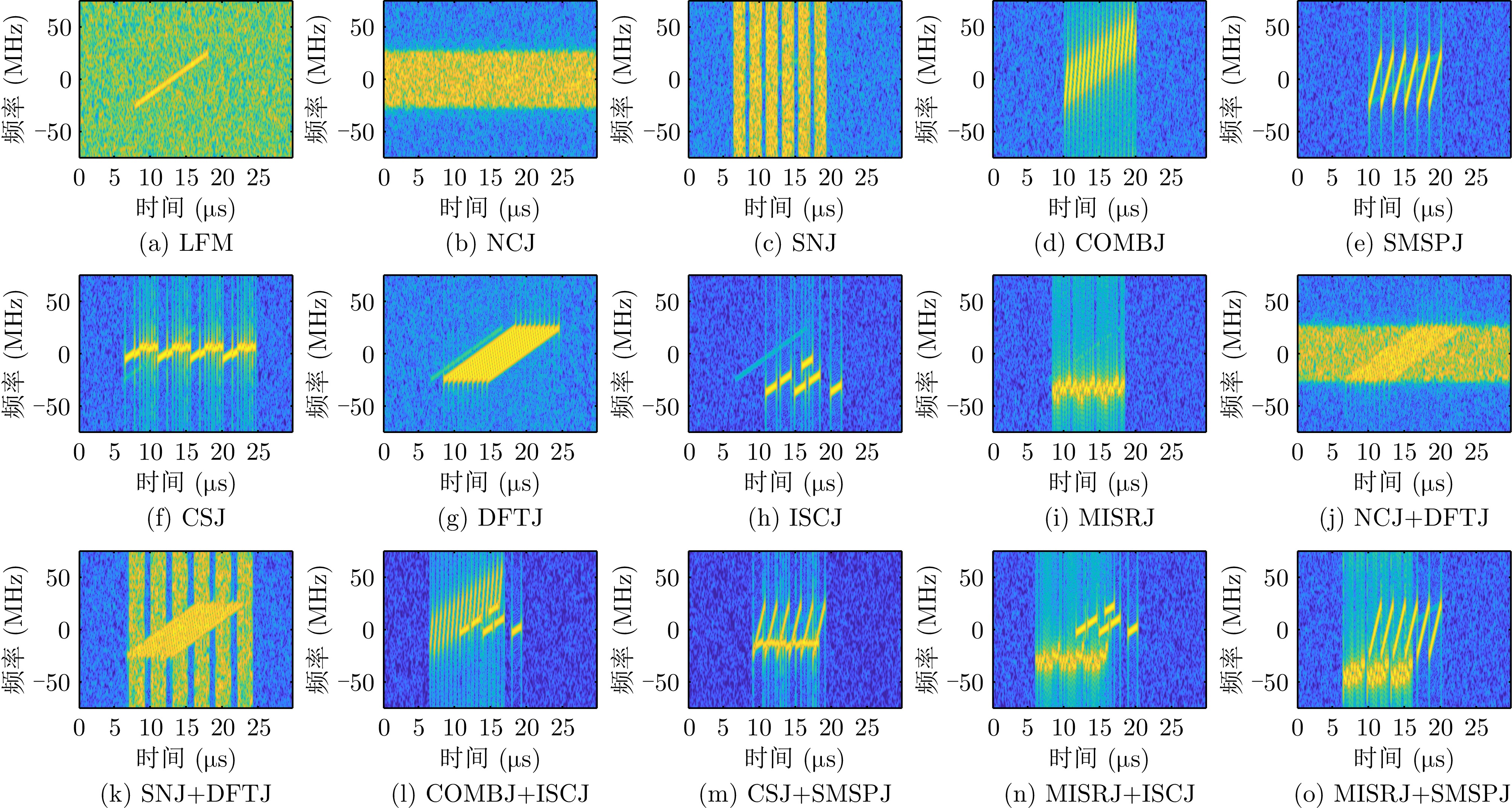

阶段 名称 通道数 层数 状态 0 Conv 24 1 冻结 1 Fused-MBConv 24 2 冻结 2 Fused-MBConv 48 4 冻结 3 Fused-MBConv 64 4 冻结 4 MBConv 128 6 冻结 5 MBConv 160 9 训练 6 MBConv 272 15 训练 7 Conv & Pooling & FC 1792 1 训练 表 3 雷达发射信号与干扰参数设置

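Table 3. Parameter settings of the transmitted signal and jamming

| Signal type | Parameter | Value |
|---|---|---|
| LFM | Pulse width | 10 μs |
| | Bandwidth | 50 MHz |
| | Sampling frequency | 125 MHz |
| $J_0$ NCJ | Jamming bandwidth | 60 MHz |
| | Gaussian white noise | N(0, 1) |
| $J_1$ SNJ | Number of jamming components | 4~7 |
| | Jamming pulse width | 1.5~2.5 μs |
| | Jamming duty cycle | 0.8 |
| | Gaussian white noise | N(0, 1) |
| $J_2$ CSJ | Number of retransmissions | 2~4 |
| | Slice width | 2.5~5.0 μs |
| | Jamming duty cycle | 0.9 |
| | Jamming delay | 0.5 μs |
| $J_3$ COMBJ | Number of jamming components | 9~17 |
| | Jamming bandwidth | 10~40 MHz |
| $J_4$ SMSPJ | Number of jamming components | 4~7 |
| | Jamming delay | 1~3 μs |
| $J_5$ DFTJ | Number of false targets | 5~10 |
| | False-target delay | 1~3 μs |
| $J_6$ ISCJ | Number of retransmissions | 2~4 |
| | Number of jamming components | 1~4 |
| | Slice width | 1~3 μs |
| | Jamming delay | 0.5~1.0 μs |
| $J_7$ MISRJ | Number of retransmissions | 3~5 |
| | Slice width | 6~8 μs |
| | Jamming duty cycle | 0.95 |
| | Jamming delay | 0.5~1.0 μs |
| $J_8$ NCJ+DFTJ | Determined by the parameters of the single jamming types | |
| $J_9$ SNJ+DFTJ | | |
| $J_{10}$ CSJ+SMSPJ | | |
| $J_{11}$ COMBJ+ISCJ | | |
| $J_{12}$ MISRJ+SMSPJ | | |
| $J_{13}$ MISRJ+ISCJ | | |

Note: "N(0, 1)" denotes the normal distribution with mean 0 and standard deviation 1.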
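With the LFM parameters in Table 3, one transmitted pulse contains 10 μs × 125 MHz = 1250 samples and sweeps at a chirp rate of 50 MHz / 10 μs = 5 × 10^12 Hz/s. The sketch below generates such a pulse as a complex baseband chirp centered at zero frequency. The baseband model and the function name `lfm_pulse` are assumptions, since the signal model is not given in this section.

```python
import cmath
import math


def lfm_pulse(pulse_width=10e-6, bandwidth=50e6, fs=125e6):
    """Complex baseband LFM pulse, s(t) = exp(j*pi*k*t^2), for t in [-T/2, T/2)."""
    n = round(pulse_width * fs)          # number of samples in the pulse
    k = bandwidth / pulse_width          # chirp rate in Hz/s
    samples = []
    for i in range(n):
        t = i / fs - pulse_width / 2     # time axis centered on the pulse
        samples.append(cmath.exp(1j * math.pi * k * t * t))
    return samples


pulse = lfm_pulse()
print(len(pulse))  # 1250 samples for the Table 3 settings
```

The instantaneous frequency k·t spans roughly ±25 MHz, which stays inside the ±62.5 MHz band allowed by the 125 MHz sampling frequency.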

Table 4. Effect of the hyperparameter $\lambda$ on the recognition accuracy of the proposed method (%)

| $\lambda$ | Accuracy |
|---|---|
| 0.2 | 85.17 |
| 0.4 | 88.79 |
| 0.6 | 91.80 |
| 0.8 | **93.55** |
| 1.0 | <u>93.09</u> |

Note: Bold marks the best result in this experiment and underline marks the second-best.

Table 5. Effect of the hyperparameter $\tau$ on the recognition accuracy of the proposed method (%)

| $\tau$ | Accuracy |
|---|---|
| 0.25 | 60.26 |
| 0.50 | 85.17 |
| 0.75 | **93.55** |
| 1.00 | <u>88.83</u> |
| 1.25 | 88.13 |
| 1.50 | 73.11 |
| 1.75 | 70.44 |
| 2.00 | 65.60 |

Note: Bold marks the best result in this experiment and underline marks the second-best.

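Table 6. Results of the effectiveness verification experiment

| Multidomain characteristics | Deep time-frequency image features | Feature combination | Accuracy (%) | Single training time (s) |
|---|---|---|---|---|
| × | √ | None | 80.11 | **0.203** |
| √ | √ | Feature concatenation | <u>85.63</u> | 0.470 |
| √ | √ | Feature contrast (proposed method) | **93.55** | <u>0.317</u> |

Note: "×" means the feature is not used and "√" means it is used. Bold marks the best result and underline marks the second-best.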

Table 7. Experimental results of jamming recognition performance evaluation between the proposed method and the comparative methods (mean ± standard deviation)

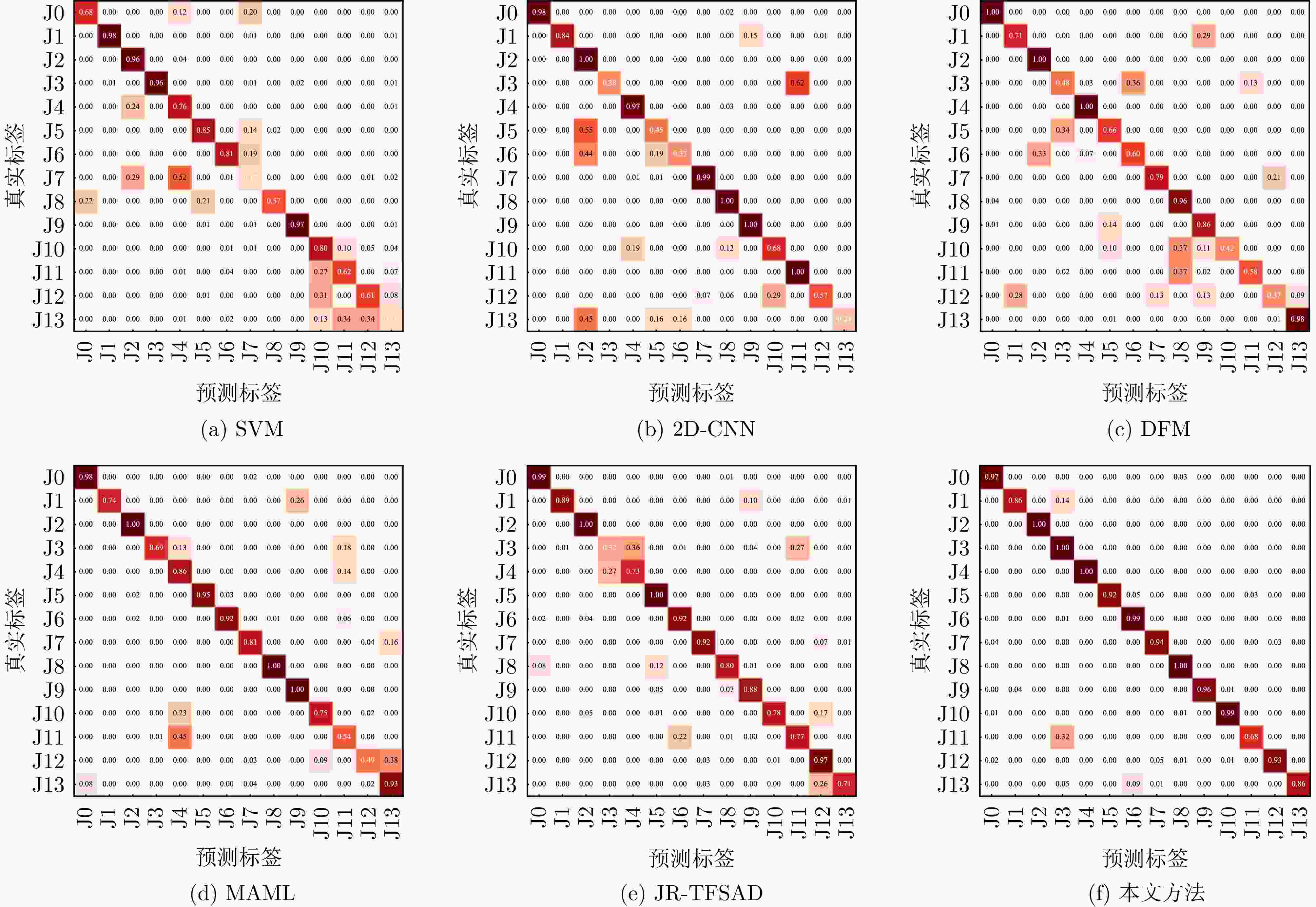

干扰类别和性能评价指标 干扰识别方法(%) SVM 2D-CNN DFM MAML JR-TFSAD 本文方法 干扰类别 NCJ 61.57±8.97 97.74±0.34 100.00±0.00 98.33±0.15 98.97±0.00 96.92±0.37 SNJ 94.55±6.74 82.97±1.50 71.23±0.15 73.67±0.36 89.23±0.15 86.15±1.45 CSJ 96.16±6.02 100.00±0.00 100.00±0.00 100.00±0.00 100.00±0.00 100.00±0.00 COMBJ 89.44±4.67 37.95±0.00 48.21±0.27 68.99±0.56 31.79±0.73 100.00±0.00 SMSPJ 91.67±9.64 90.15±4.86 100.00±0.00 85.67±0.39 73.33±0.00 100.00±0.00 DFTJ 48.23±7.81 45.13±0.00 65.85±0.25 94.98±0.19 100.00±0.00 92.30±0.80 ISCJ 77.22±7.11 37.44±0.00 60.00±0.13 92.00±0.20 92.31±0.24 99.49±0.09 MISRJ 39.29±4.13 99.03±0.15 78.97±0.11 80.67±0.50 92.31±0.00 93.85±0.57 NCJ+DFTJ 62.12±9.82 100.00±0.00 95.90±0.05 100.00±0.00 80.00±0.00 100.00±0.00 SNJ+DFTJ 91.97±8.29 100.00±0.00 84.77±0.83 99.67±0.23 97.95±0.00 95.90±0.55 CSJ+SMSPJ 64.39±8.36 68.21±0.00 41.54±0.98 75.33±0.20 88.21±0.25 98.97±0.15 COMBJ+ISCJ 56.21±9.95 100.00±0.00 58.15±0.25 54.02±0.47 77.95±0.00 67.70±2.08 MISRJ+SMSPJ 40.96±8.05 57.03±1.60 36.56±0.33 49.00±0.48 77.44±0.25 92.82±0.81 MISRJ+ISCJ 63.38±8.84 23.59±6.62 97.95±0.13 93.33±0.17 71.28±0.26 85.64±1.65 识别结果 准确率(%) 69.80±1.42 73.41±0.42 74.23±0.26 83.26±0.45 83.55±0.22 93.55±0.29 召回率(%) 69.97±1.57 73.74±0.34 74.64±0.22 83.35±0.39 83.64±0.23 93.61±0.27 F1(%) 68.23±1.74 72.75±0.39 73.15±0.15 83.59±0.56 82.98±0.22 93.14±0.28 精准率(%) 70.36±1.43 71.14±0.29 76.01±0.25 86.79±0.36 84.05±0.21 94.79±0.30 Kappa(×100) 67.47±1.45 72.25±0.45 72.24±0.37 82.07±0.39 82.29±0.22 93.05±0.31 模型

复杂度浮点运算次(109) – 1.456 2.816 0.273 11.978 3.819 模型参数量(106) – 15.471 18.862 1.076 18.370 21.254 单次训练平均时间(s) – 0.197 0.270 0.127 51.200 0.317 单次测试平均时间(s) – 13.738 18.798 2.100 12.029 9.933 注:每类干扰训练样本量为2;表中使用粗体标出了最优的结果,下划线标出了次优的结果。 表 8 不同方法在不同训练样本下的平均识别准确率±标准差比较(%)
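Table 7 reports accuracy alongside the Kappa coefficient. A minimal sketch of how both follow from a confusion matrix is given below, using the standard definition of Cohen's kappa. The function name is hypothetical, and the matrix values are made up for illustration; they are not from the paper.

```python
def accuracy_and_kappa(confusion):
    """Return (accuracy, Cohen's kappa) for a square confusion matrix.

    confusion[i][j] counts samples of true class i predicted as class j.
    """
    total = sum(sum(row) for row in confusion)
    observed = sum(confusion[i][i] for i in range(len(confusion))) / total
    # Chance agreement: sum over classes of (row share) * (column share).
    expected = sum(
        (sum(confusion[i]) / total) * (sum(row[i] for row in confusion) / total)
        for i in range(len(confusion))
    )
    kappa = (observed - expected) / (1 - expected)
    return observed, kappa


# Hypothetical 3-class confusion matrix.
cm = [[9, 1, 0],
      [0, 8, 2],
      [1, 0, 9]]
acc, kappa = accuracy_and_kappa(cm)
print(f"accuracy = {acc:.4f}, kappa (x100) = {100 * kappa:.2f}")
```

If the 14 classes are equally represented in the test set, the chance agreement is 1/14 and kappa reduces to (accuracy − 1/14) / (1 − 1/14). For an accuracy of 93.55% this gives about 93.05, which agrees with the Kappa row of Table 7.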

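Table 8. Mean recognition accuracy ± standard deviation of different methods for different numbers of training samples (%)

| Jamming recognition method | 2 samples per class (1%) | 3 samples per class | 4 samples per class | 5 samples per class |
|---|---|---|---|---|
| SVM | 69.80±1.42 | 75.63±0.96 | 80.95±0.36 | 82.78±0.46 |
| 2D-CNN | 73.41±0.42 | 83.33±0.31 | 89.13±0.03 | 93.32±0.12 |
| DFM | 74.23±0.06 | 81.12±0.47 | 90.03±0.56 | 95.02±0.28 |
| MAML | 83.26±0.42 | <u>90.67±0.35</u> | <u>95.43±0.21</u> | 97.85±0.26 |
| JR-TFSAD | <u>83.55±0.12</u> | 88.70±0.30 | 93.40±0.29 | <u>98.91±0.41</u> |
| Proposed method | **93.55±0.29** | **97.68±0.16** | **99.62±0.03** | **100.00±0.00** |

Note: Bold marks the best result and underline marks the second-best.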

