Target Recognition Method Based on Graph Structure Perception of Invariant Features for SAR Images


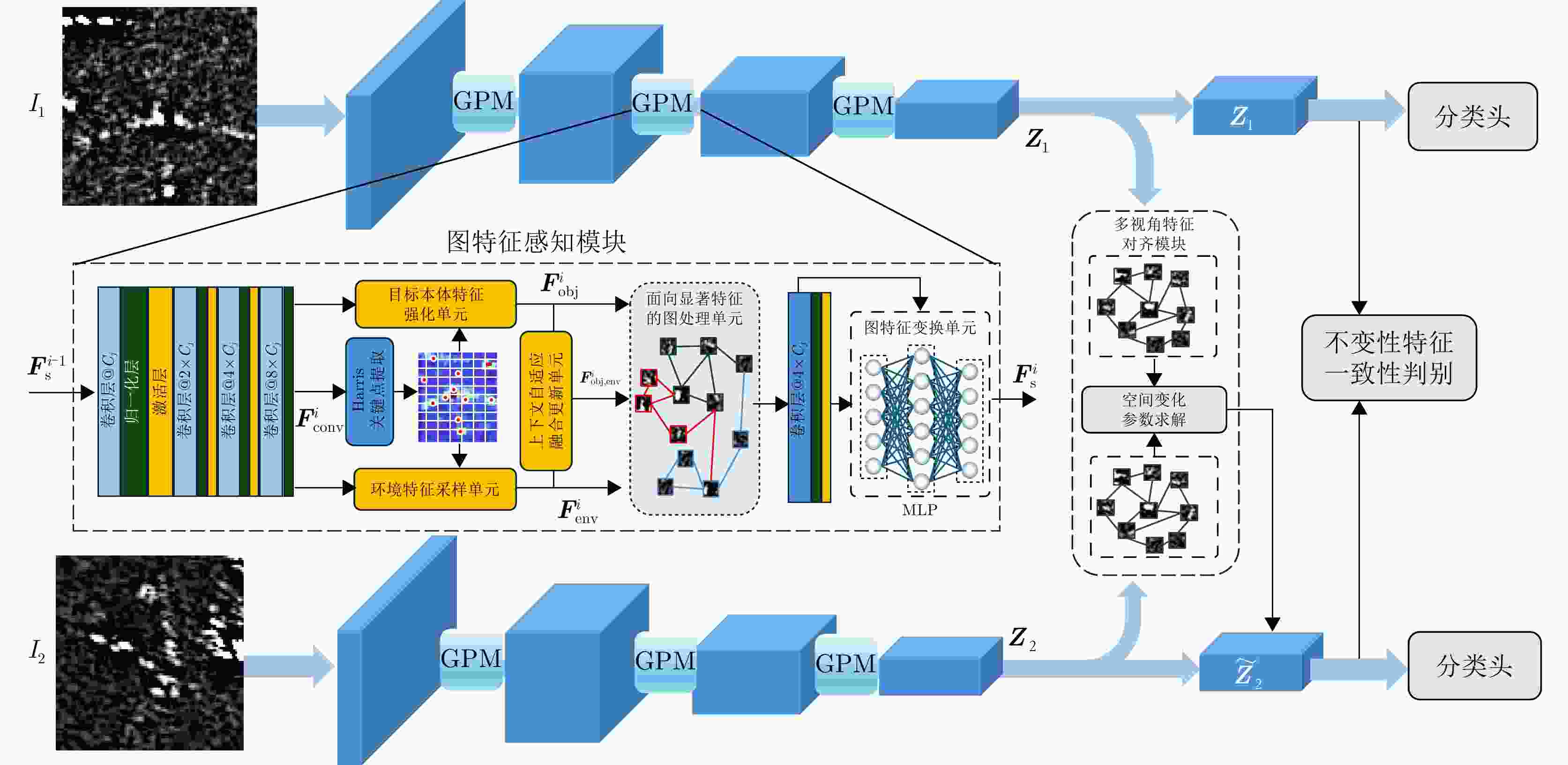

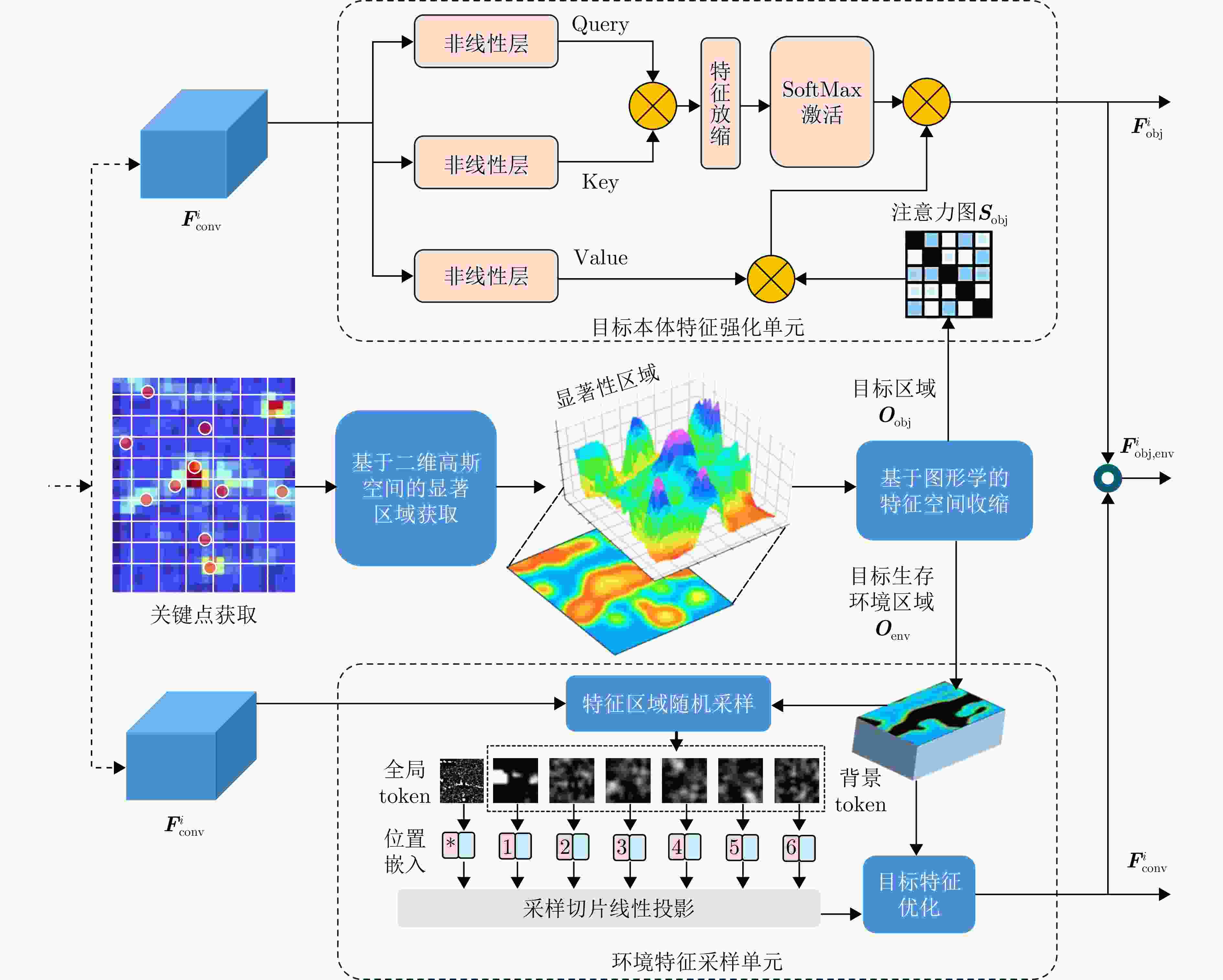

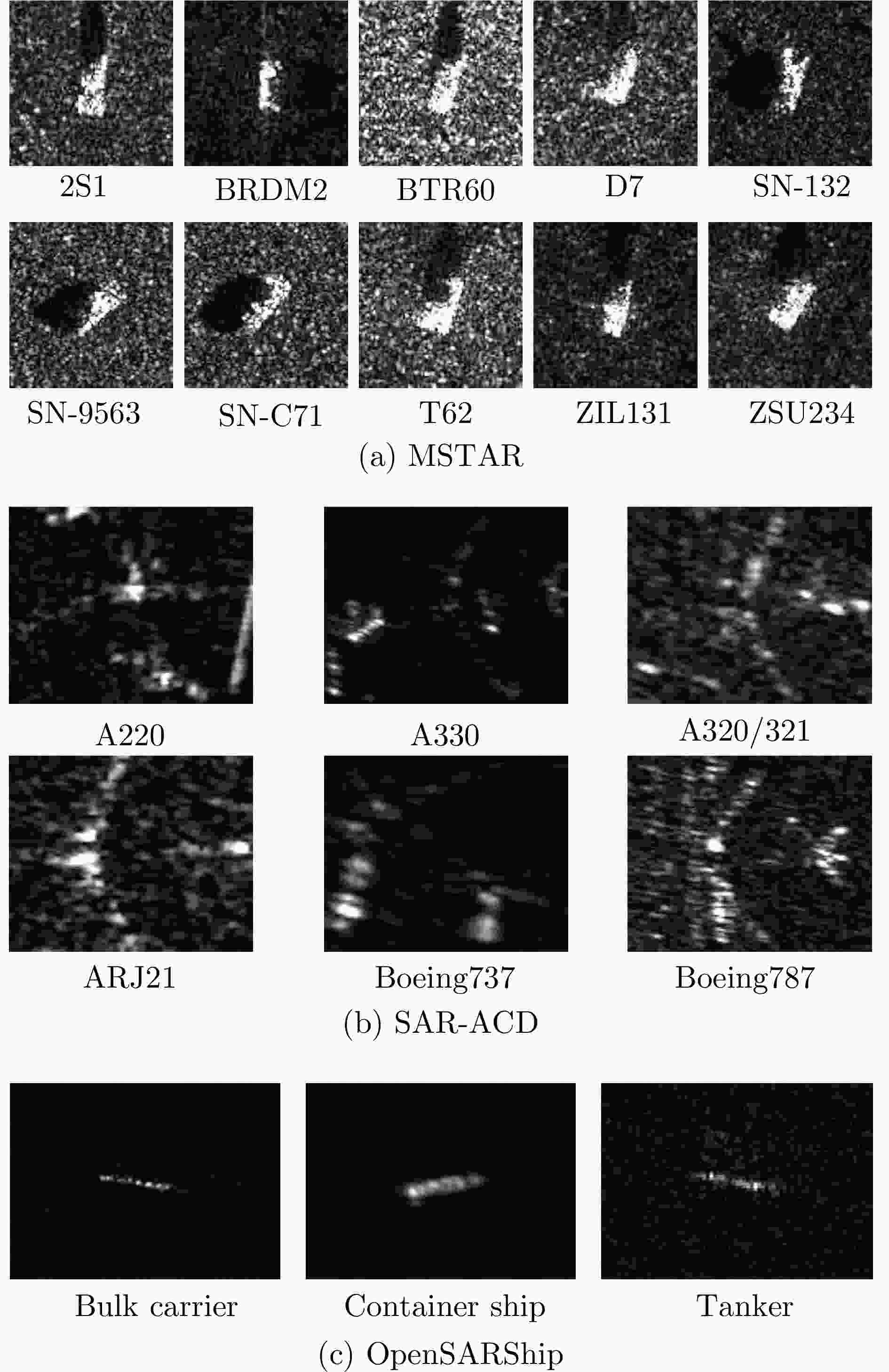

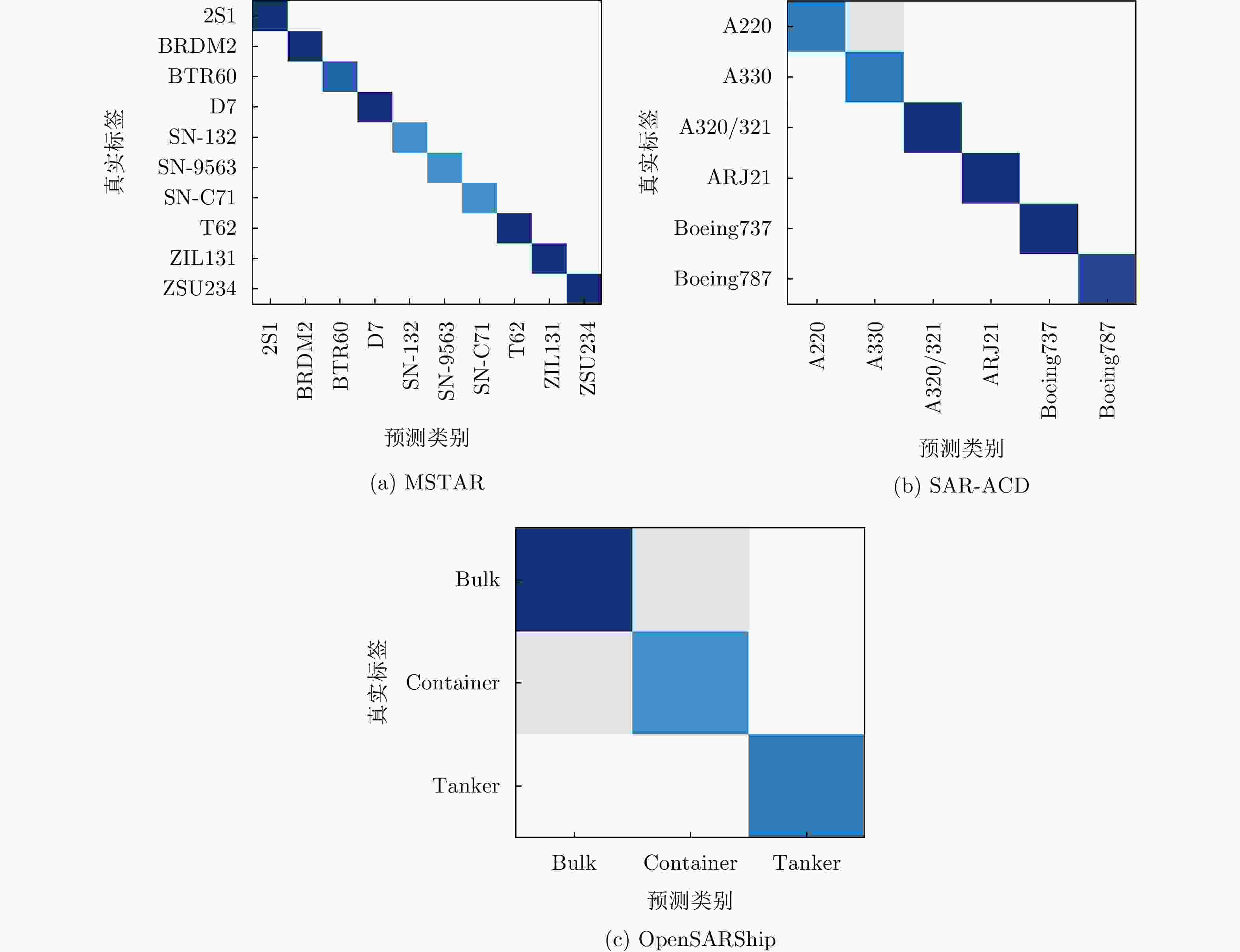

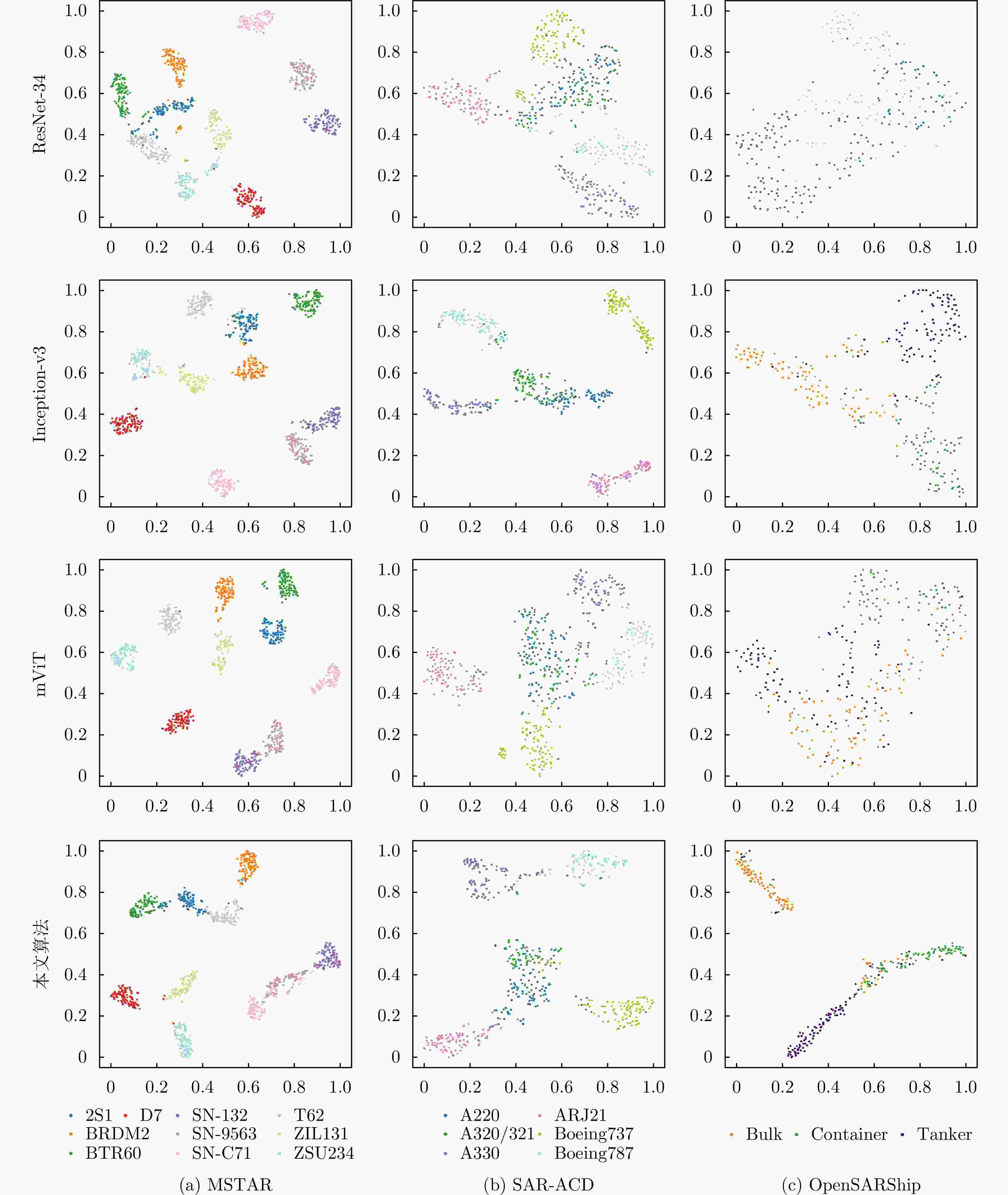

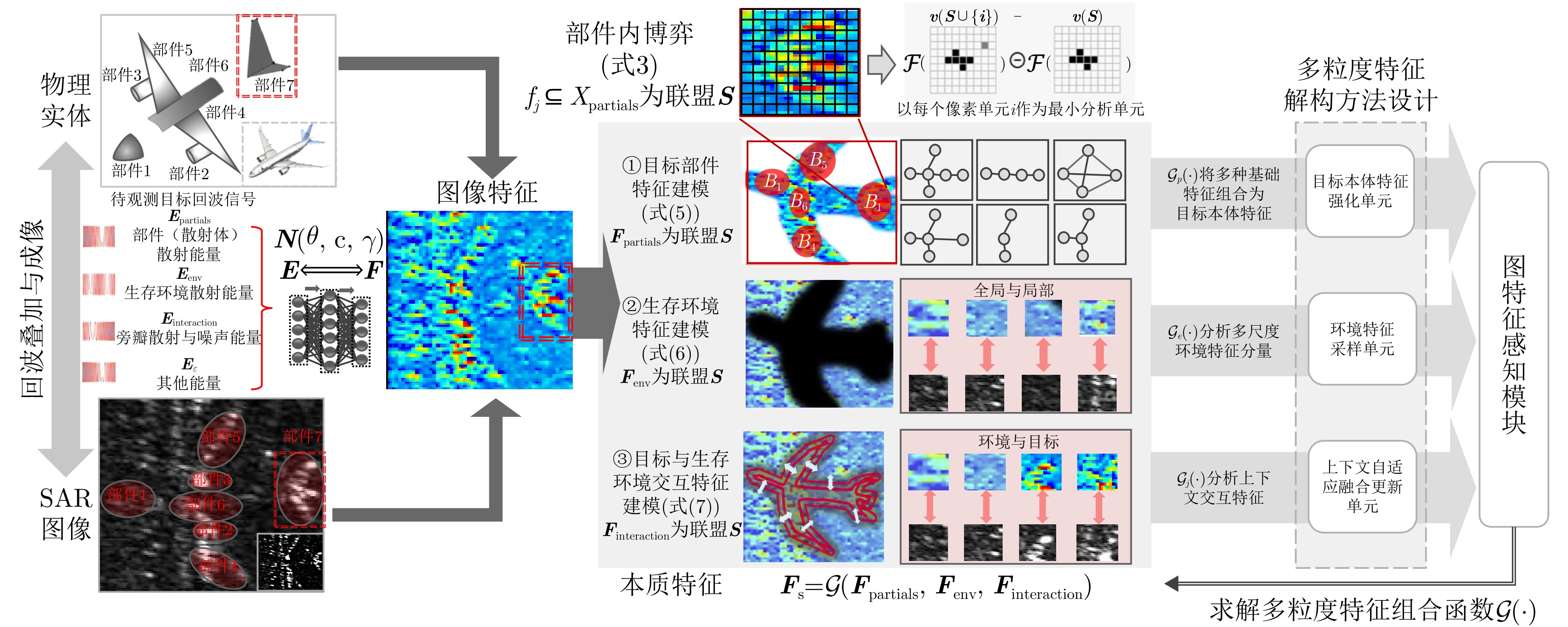

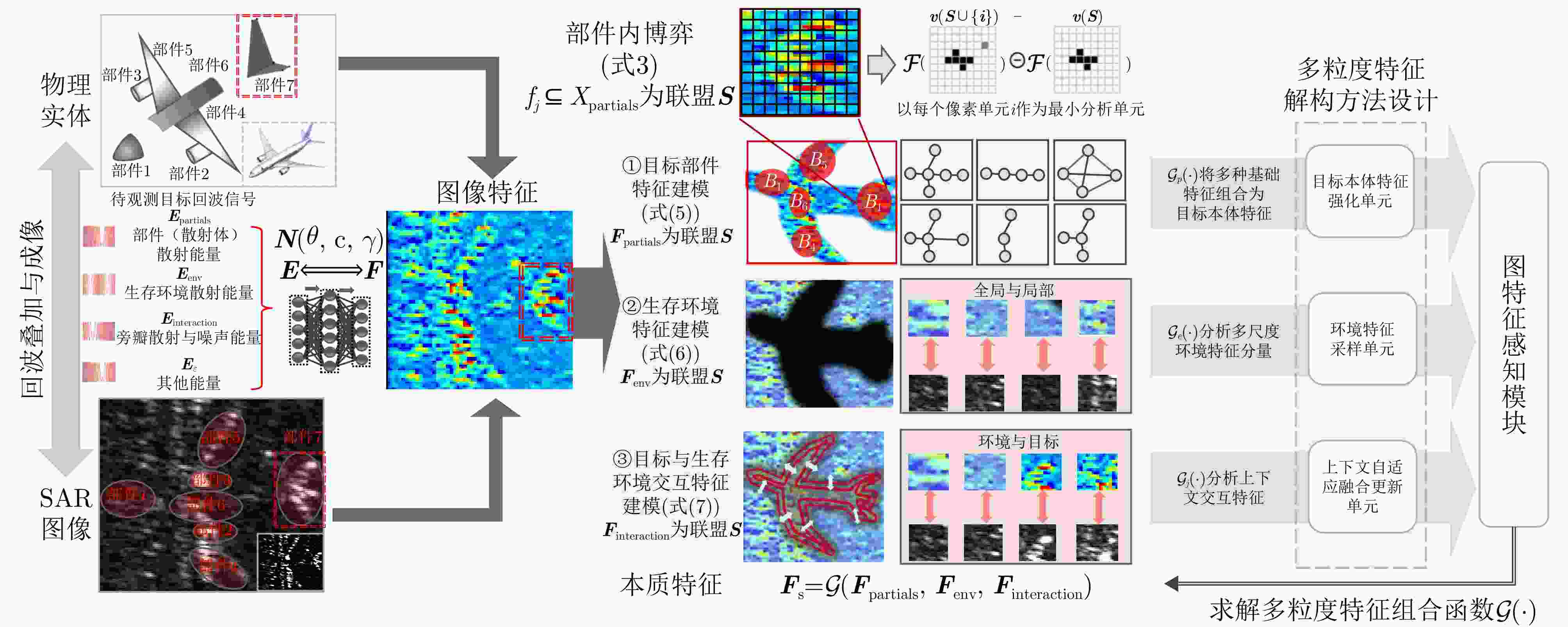

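Abstract: Deep-learning-based target recognition in Synthetic Aperture Radar (SAR) images is maturing. However, scattering characteristics, noise interference, and other factors cause the SAR imaging results of targets in the same class to differ. To meet the need for high-precision target recognition, this paper abstracts the combination of invariant features in the target entity, its surrounding environment, and their interaction space as the target's essential features, and proposes a SAR image target recognition method based on graph networks and invariant feature perception. The method processes multiview SAR images with a dual-branch network and uses a rotation-learnable unit to align the features of the two branches and reinforce rotation-immune invariant features. To extract essential features at multiple granularities, a target ontology feature enhancement unit, an environment feature sampling unit, and a context-based adaptive fusion update unit are designed; their fused output is analyzed with a graph neural network to build a topology of essential features and output a target category vector. The t-SNE method is used to qualitatively evaluate the algorithm's class discrimination ability, metrics such as accuracy are used to quantitatively analyze the key units and the overall network, and class activation map visualization is used to verify the invariant feature extraction ability of each stage and each branch. The proposed method reaches average recognition accuracies of 98.56%, 94.11%, and 86.20% on the MSTAR vehicle, SAR-ACD aircraft, and OpenSARShip ship datasets, respectively. The experimental results show that the algorithm can extract essential target features in SAR image target recognition and is highly robust in multi-class target recognition.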


Keywords:

- Synthetic Aperture Radar (SAR)

- Target recognition

- Invariant feature extraction

- Essential features

- Deep learning

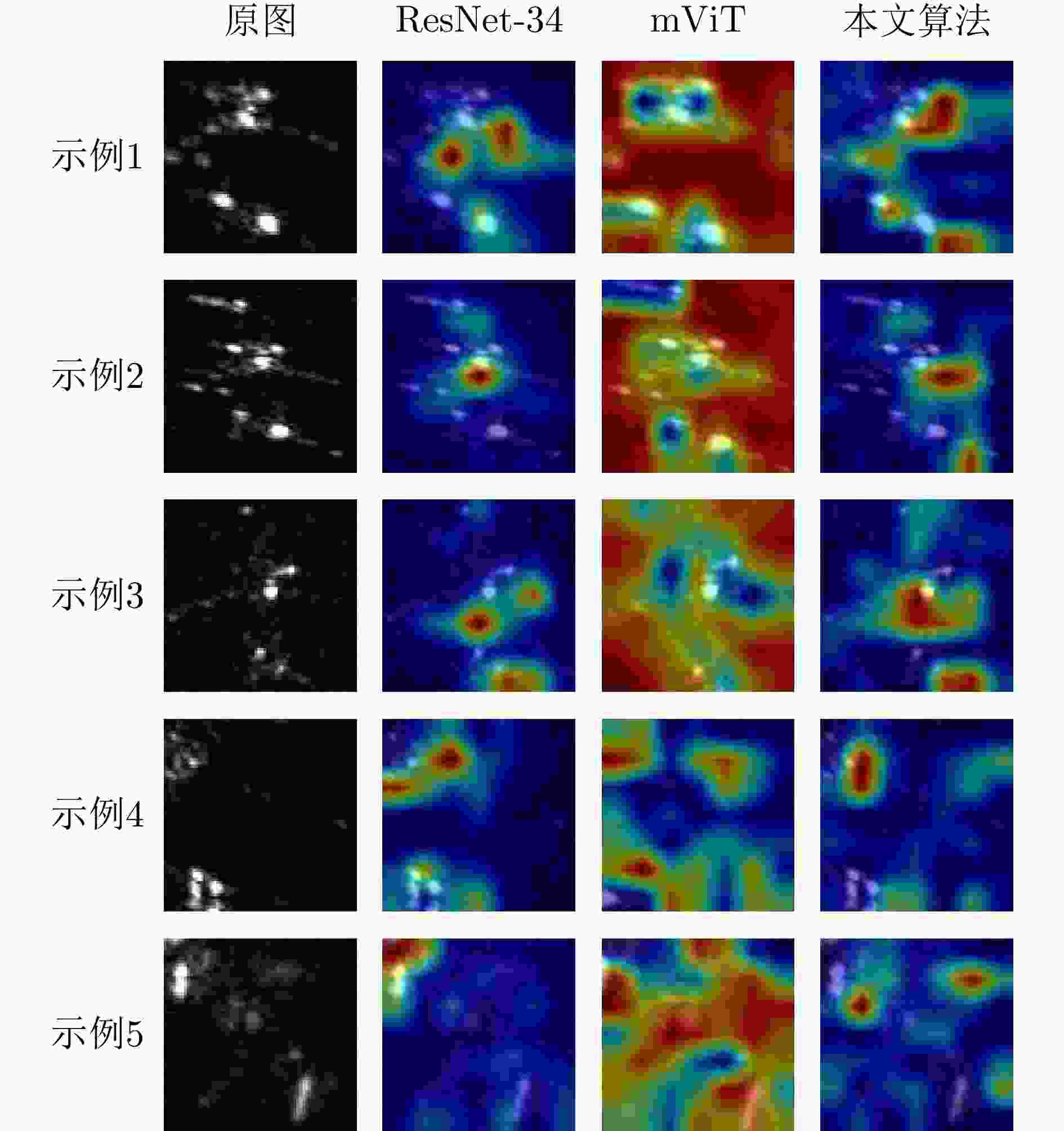

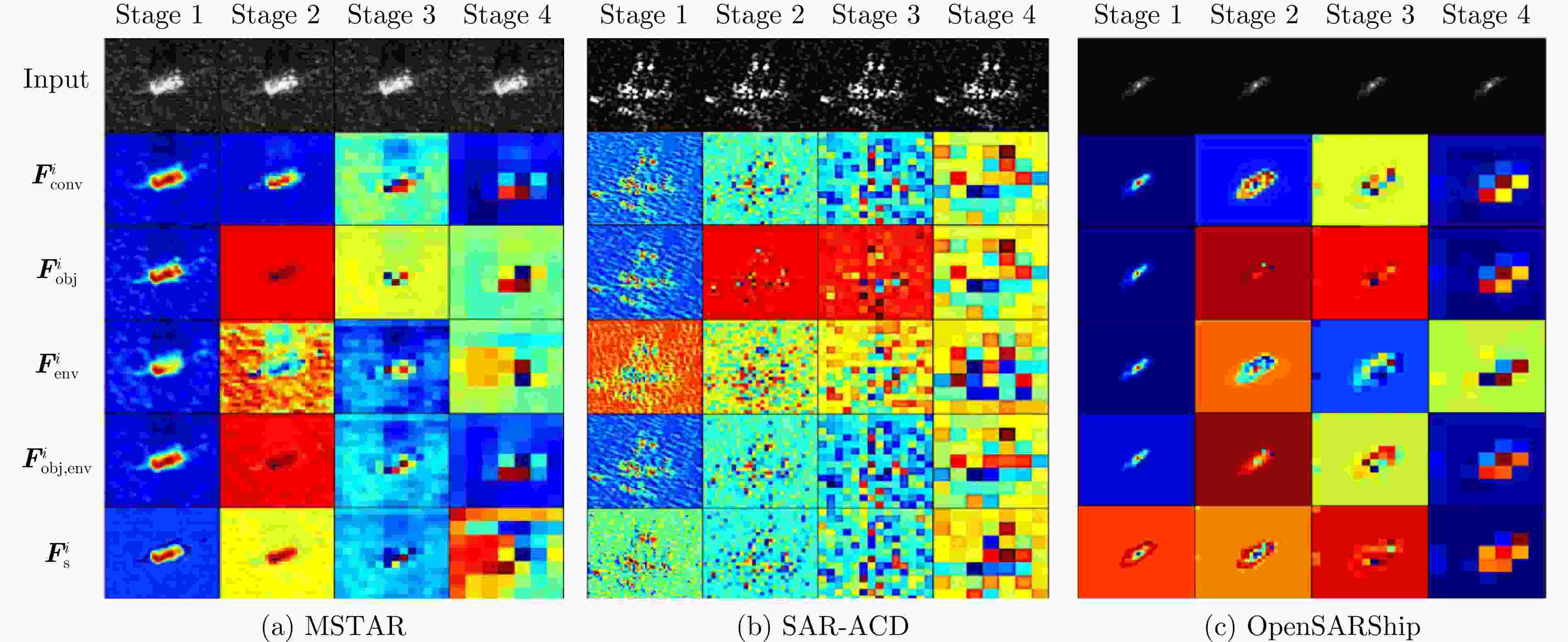

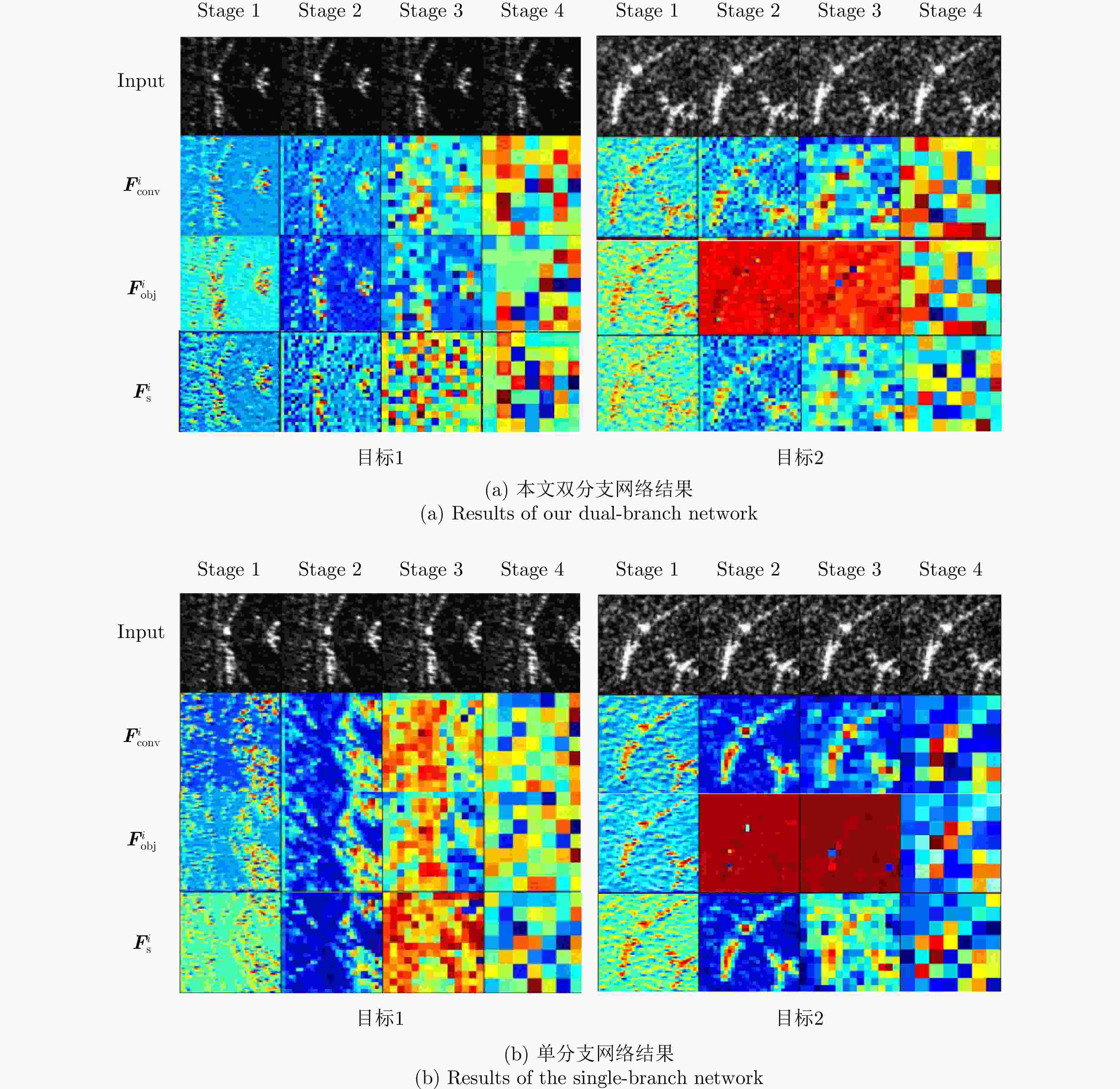

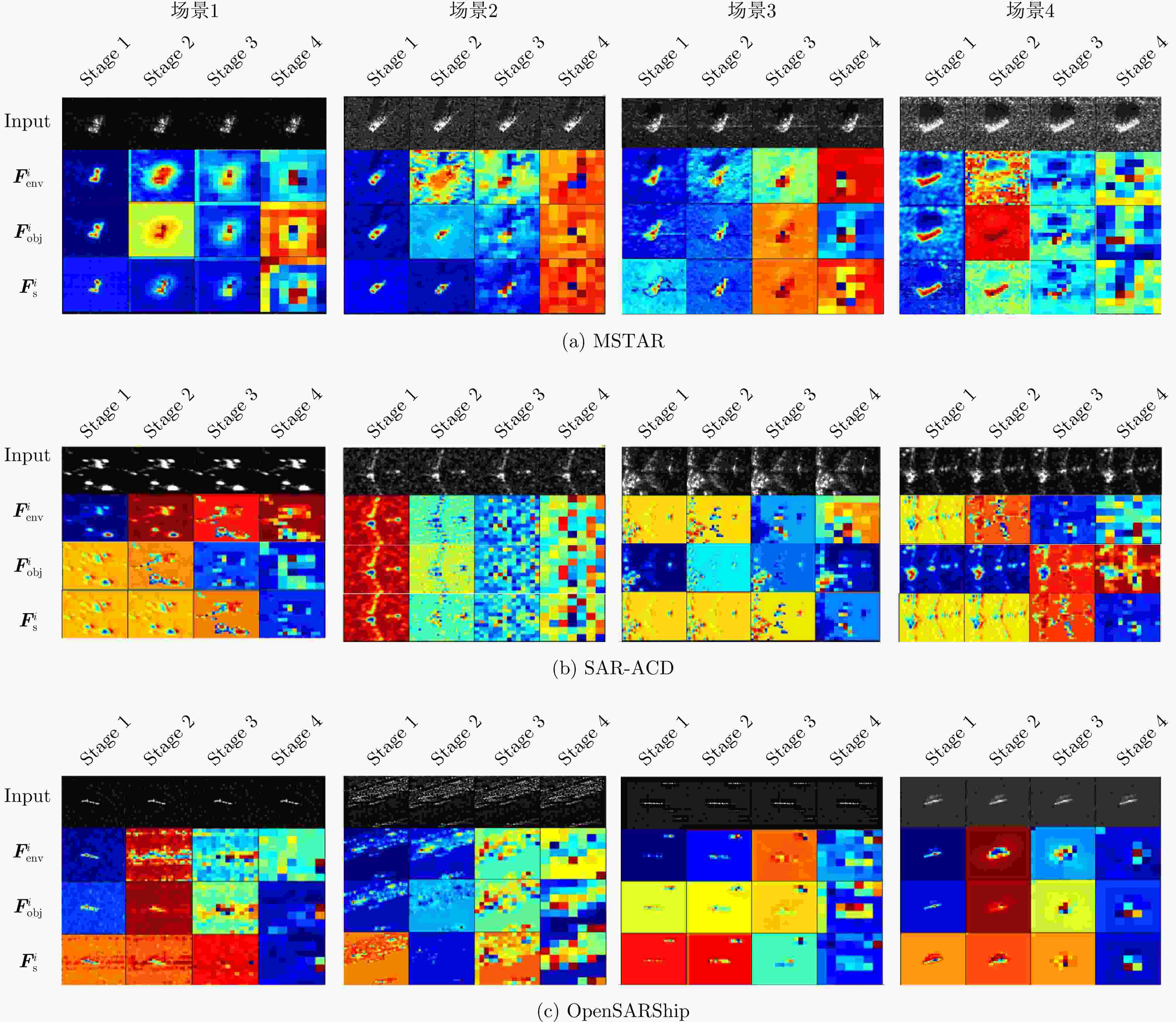

Abstract: Synthetic Aperture Radar (SAR) image target recognition technology based on deep learning has matured. However, challenges remain because scattering phenomena and noise interference cause significant intraclass variability in imaging results. Invariant features, which represent the essential attributes of a specific target class with consistent expressions, are crucial for high-precision recognition. We define the invariant features of the entity, its surrounding environment, and their combined context as the target's essential features. Guided by multilevel essential feature modeling theory, we propose a SAR image target recognition method based on graph networks and invariant feature perception. The method employs a dual-branch network to process multiview SAR images simultaneously, using a rotation-learnable unit to adaptively align the dual-branch features and to reinforce invariant features with rotational immunity by minimizing intraclass feature differences. To support essential feature extraction in each branch, we design a feature-guided graph feature perception module based on multilevel essential feature modeling. This module uses salient points for target feature analysis and comprises a target ontology feature enhancement unit, an environment feature sampling unit, and a context-based adaptive fusion update unit. The outputs are analyzed with a graph neural network and assembled into a topological representation of essential features, which yields a target category vector. The t-Distributed Stochastic Neighbor Embedding (t-SNE) method is used to qualitatively evaluate the algorithm's classification ability, while metrics such as accuracy, recall, and F1 score are used to quantitatively analyze the key units and the overall network. In addition, class activation map visualization is used to validate the extraction and analysis of invariant features at different stages and in different branches. The proposed method achieves recognition accuracies of 98.56% on the MSTAR dataset, 94.11% on the SAR-ACD dataset, and 86.20% on the OpenSARShip dataset, demonstrating its effectiveness in extracting essential target features.
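To make the idea of aligning two branches by minimizing their feature difference concrete, here is a minimal sketch. It is not the paper's rotation-learnable unit, whose internals are not given in the abstract: the learnable rotation is replaced by a brute-force search over the four right-angle rotations of a small square feature grid. The names `rot90`, `squared_gap`, and `align_by_rotation` are hypothetical.

```python
from typing import List, Tuple

Grid = List[List[float]]


def rot90(grid: Grid) -> Grid:
    """Rotate a square 2D grid by 90 degrees counter-clockwise."""
    # Transpose the grid, then reverse the order of the rows.
    return [list(row) for row in zip(*grid)][::-1]


def squared_gap(a: Grid, b: Grid) -> float:
    """Sum of squared element-wise differences between two equal-size grids."""
    return sum((x - y) ** 2 for ra, rb in zip(a, b) for x, y in zip(ra, rb))


def align_by_rotation(ref: Grid, other: Grid) -> Tuple[int, Grid]:
    """Find k in {0, 1, 2, 3} so that rotating `other` by k * 90 degrees
    minimizes its squared gap to `ref`.

    Assumption: a discrete search stands in for the learnable rotation
    described in the abstract; both grids must be square and equal in size.
    """
    best_k, best_grid, best_gap = 0, other, squared_gap(ref, other)
    candidate = other
    for k in range(1, 4):
        candidate = rot90(candidate)
        gap = squared_gap(ref, candidate)
        if gap < best_gap:
            best_k, best_grid, best_gap = k, candidate, gap
    return best_k, best_grid


if __name__ == "__main__":
    branch_a = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
    # Simulate a second view that is rotated relative to the first.
    branch_b = rot90(rot90(rot90(branch_a)))
    k, aligned = align_by_rotation(branch_a, branch_b)
    print(k, aligned == branch_a)
```

A learnable unit would typically estimate a continuous rotation and be trained end to end; the exhaustive search here only illustrates the alignment objective.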

Table 1. Recognition performance of the proposed method on different datasets

| Dataset | Class | Precision | Recall | F1 |
| --- | --- | --- | --- | --- |
| MSTAR | 2S1 | 0.9863 | 0.9632 | 0.9746 |
| MSTAR | BRDM2 | 0.9515 | 0.9866 | 0.9687 |
| MSTAR | BTR60 | 0.9844 | 0.9844 | 0.9844 |
| MSTAR | D7 | 0.9966 | 0.9933 | 0.9950 |
| MSTAR | SN-132 | 0.9750 | 0.9949 | 0.9848 |
| MSTAR | SN-C71 | 0.9947 | 0.9692 | 0.9818 |
| MSTAR | SN-9563 | 0.9949 | 1.0000 | 0.9975 |
| MSTAR | T62 | 0.9933 | 0.9866 | 0.9899 |
| MSTAR | ZIL131 | 0.9862 | 0.9565 | 0.9711 |
| MSTAR | ZSU234 | 0.9933 | 0.9967 | 0.9950 |
| SAR-ACD | A220 | 0.9024 | 0.7957 | 0.8457 |
| SAR-ACD | A320/321 | 0.9036 | 0.7353 | 0.8108 |
| SAR-ACD | A330 | 0.9444 | 0.9903 | 0.9668 |
| SAR-ACD | ARJ21 | 0.9619 | 0.9806 | 0.9712 |
| SAR-ACD | Boeing737 | 0.9636 | 1.0000 | 0.9815 |
| SAR-ACD | Boeing787 | 0.9706 | 0.9802 | 0.9754 |
| OpenSARShip | Bulk | 0.8628 | 0.8089 | 0.8350 |
| OpenSARShip | Container | 0.8035 | 0.8164 | 0.8099 |
| OpenSARShip | Tanker | 0.9198 | 0.8883 | 0.9038 |
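The F1 column of Table 1 can be cross-checked against the Precision and Recall columns, because F1 is the harmonic mean of the two. The short sketch below does this for a few rows copied from the table; the helper name `f1_score` is only illustrative, and small discrepancies in the last digit are expected since the tabulated inputs are already rounded.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) copied from Table 1.
TABLE_1_ROWS = {
    "2S1": (0.9863, 0.9632, 0.9746),
    "BTR60": (0.9844, 0.9844, 0.9844),
    "A220": (0.9024, 0.7957, 0.8457),
    "Tanker": (0.9198, 0.8883, 0.9038),
}

if __name__ == "__main__":
    for name, (p, r, reported) in TABLE_1_ROWS.items():
        # Print the recomputed value next to the reported one.
        print(f"{name}: recomputed={f1_score(p, r):.4f} reported={reported:.4f}")
```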

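Table 2. Performance of different methods on the three classification datasets

| Method | MSTAR Precision | MSTAR Recall | MSTAR F1 | MSTAR MAP | SAR-ACD Precision | SAR-ACD Recall | SAR-ACD F1 | SAR-ACD MAP | OpenSARShip Precision | OpenSARShip Recall | OpenSARShip F1 | OpenSARShip MAP |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| ConvNeXt-v2[12] | 0.9605 | 0.8480 | 0.8955 | 0.9774 | 0.9146 | 0.4582 | 0.5909 | 0.8624 | 0.6743 | 0.6983 | 0.6861 | 0.7089 |
| mViT[17] | 0.8535 | 0.7788 | 0.8082 | 0.9750 | 0.9070 | 0.7955 | 0.8385 | 0.9245 | 0.8616 | 0.7033 | 0.7690 | 0.8598 |
| GraphSAGE[21] | 0.7325 | 0.5226 | 0.5880 | 0.7050 | 0.8968 | 0.8734 | 0.8799 | 0.9504 | 0.6888 | 0.4165 | 0.5112 | 0.6407 |
| VGG19[52] | 0.9750 | 0.9824 | 0.9786 | 0.9970 | 0.8644 | 0.8476 | 0.8503 | 0.9194 | 0.7514 | 0.8032 | 0.7677 | 0.8217 |
| ResNet-34[53] | 0.9761 | 0.9721 | 0.9733 | 0.9978 | 0.8890 | 0.8124 | 0.7879 | 0.9322 | 0.7972 | 0.7538 | 0.7722 | 0.8175 |
| Inception-v3[54] | 0.9456 | 0.9250 | 0.9312 | 0.9893 | 0.8742 | 0.8636 | 0.8683 | 0.9286 | 0.8030 | 0.7536 | 0.7735 | 0.8311 |
| Swin Transformer[55] | 0.8188 | 0.6626 | 0.6978 | 0.8286 | 0.8964 | 0.5126 | 0.5924 | 0.8251 | 0.7053 | 0.7377 | 0.7211 | 0.7292 |
| ◆CAR[49], PLANE[51], SHIP[44] | – | – | – | 0.9984 | – | – | – | **0.9720** | 0.8386 | **0.8597** | 0.8490 | 0.8566 |
| Proposed method | **0.9856** | **0.9831** | **0.9843** | **0.9987** | **0.9411** | **0.9137** | **0.9252** | 0.9708 | **0.8620** | 0.8379 | **0.8495** | **0.9198** |

Note: ◆ marks the current SOTA algorithms; CAR refers to the MSTAR dataset, PLANE to the SAR-ACD dataset, and SHIP to the OpenSARShip dataset. Bold values are the best in each column.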
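The Precision values of the proposed method in Table 2 (and the headline 98.56%, 94.11%, and 86.20% figures) coincide with the unweighted mean of the per-class precision in Table 1. The source does not state the averaging rule, so treating these as macro averages is an inference from the tabulated numbers; the sketch below simply reproduces the arithmetic with values copied from Table 1.

```python
from statistics import mean

# Per-class precision of the proposed method, copied from Table 1.
PER_CLASS_PRECISION = {
    "MSTAR": [0.9863, 0.9515, 0.9844, 0.9966, 0.9750,
              0.9947, 0.9949, 0.9933, 0.9862, 0.9933],
    "SAR-ACD": [0.9024, 0.9036, 0.9444, 0.9619, 0.9636, 0.9706],
    "OpenSARShip": [0.8628, 0.8035, 0.9198],
}


def macro_average(values: list) -> float:
    """Unweighted mean over classes, rounded to four decimals like the tables."""
    return round(mean(values), 4)


MACRO_PRECISION = {name: macro_average(vals)
                   for name, vals in PER_CLASS_PRECISION.items()}

if __name__ == "__main__":
    for name, value in MACRO_PRECISION.items():
        print(f"{name}: macro precision = {value:.4f}")
```

Because the per-class inputs are themselves rounded, the same check on other columns can differ from the reported value in the last digit.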

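Table 3. Module ablation results on the SAR-ACD dataset

| Ontology feature enhancement | Environment feature sampling | Multiview feature alignment | Precision | Recall | F1 | MAP |
| --- | --- | --- | --- | --- | --- | --- |
| × | × | √ | 0.9234 | 0.8972 | 0.9080 | 0.9559 |
| × | √ | √ | 0.9267 | 0.9113 | 0.9178 | 0.9638 |
| √ | × | √ | 0.9255 | 0.9018 | 0.9123 | 0.9643 |
| √ | √ | × | 0.8626 | 0.8411 | 0.8492 | 0.9211 |
| √ | √ | √ | **0.9411** | **0.9137** | **0.9252** | **0.9708** |

Note: Bold values are the best in each column.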
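One way to read the ablation results in Table 3 is to subtract each ablated configuration from the full model and rank the configurations by the resulting drop. The sketch below does this with the values copied from the table; the configuration labels and the helper names `metric_drop` and `rank_by_drop` are illustrative, not the authors' terminology.

```python
METRICS = ("Precision", "Recall", "F1", "MAP")

# Full model (all three units enabled), copied from the last row of Table 3.
FULL = dict(zip(METRICS, (0.9411, 0.9137, 0.9252, 0.9708)))

# Ablated configurations, copied from the remaining rows of Table 3.
ABLATED = {
    "no enhancement, no sampling": dict(zip(METRICS, (0.9234, 0.8972, 0.9080, 0.9559))),
    "no ontology feature enhancement": dict(zip(METRICS, (0.9267, 0.9113, 0.9178, 0.9638))),
    "no environment feature sampling": dict(zip(METRICS, (0.9255, 0.9018, 0.9123, 0.9643))),
    "no multiview feature alignment": dict(zip(METRICS, (0.8626, 0.8411, 0.8492, 0.9211))),
}


def metric_drop(full: dict, ablated: dict) -> dict:
    """Per-metric difference (full minus ablated), rounded to four decimals."""
    return {m: round(full[m] - ablated[m], 4) for m in METRICS}


def rank_by_drop(metric: str = "F1") -> list:
    """Configurations sorted by how much the chosen metric falls, largest first."""
    drops = [(name, metric_drop(FULL, row)[metric]) for name, row in ABLATED.items()]
    return sorted(drops, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for name, drop in rank_by_drop("F1"):
        print(f"{name}: F1 drop = {drop:.4f}")
```

On these numbers, removing multiview feature alignment costs far more F1 than removing either of the other two units.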

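Table 4. Similarity of same-class target feature maps under different methods

| Dataset | Class | ConvNeXt[12] | Inception-v3[54] | mViT[17] | Proposed method |
| --- | --- | --- | --- | --- | --- |
| MSTAR | 2S1 | 0.8098 | **0.9909** | 0.8672 | 0.8803 |
| MSTAR | BRDM2 | 0.8526 | **0.8886** | 0.8700 | 0.8466 |
| MSTAR | BTR60 | 0.8243 | 0.8919 | 0.8708 | **0.9099** |
| MSTAR | D7 | 0.8873 | 0.8883 | 0.8717 | **0.9777** |
| MSTAR | SN-132 | 0.8611 | 0.8973 | 0.8694 | **0.9229** |
| MSTAR | SN-C71 | 0.8381 | 0.8957 | 0.8701 | **0.9508** |
| MSTAR | SN-9563 | 0.8973 | 0.8955 | 0.8738 | **0.9253** |
| MSTAR | T62 | 0.8248 | **0.8930** | 0.8629 | 0.8052 |
| MSTAR | ZIL131 | 0.8816 | 0.8919 | 0.8482 | **0.9203** |
| MSTAR | ZSU234 | 0.8531 | 0.8904 | 0.8609 | **0.9338** |
| SAR-ACD | A220 | 0.8040 | 0.8564 | **0.8976** | 0.8661 |
| SAR-ACD | A320/321 | 0.8402 | 0.8542 | 0.7312 | **0.9231** |
| SAR-ACD | A330 | 0.8385 | 0.8581 | 0.5092 | **0.9094** |
| SAR-ACD | ARJ21 | 0.7956 | 0.8565 | 0.7777 | **0.9081** |
| SAR-ACD | Boeing737 | 0.6519 | 0.8551 | **0.8954** | 0.8644 |
| SAR-ACD | Boeing787 | 0.8609 | 0.8538 | 0.8971 | **0.9021** |
| OpenSARShip | Bulk | 0.8086 | 0.8426 | 0.8470 | **0.8813** |
| OpenSARShip | Container | 0.8013 | 0.8345 | **0.8457** | 0.8444 |
| OpenSARShip | Tanker | 0.8190 | 0.8215 | 0.8473 | **0.8989** |

Note: Bold values are the best in each row.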
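Table 4 reports a similarity score between feature maps of targets in the same class, but this excerpt does not say which similarity measure was used. As an illustration only, the sketch below assumes cosine similarity between flattened feature vectors and averages it over all pairs within a class; the function names are hypothetical and the toy vectors are not data from the paper.

```python
import math
from itertools import combinations
from typing import Sequence


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def mean_intraclass_similarity(features: Sequence[Sequence[float]]) -> float:
    """Average pairwise cosine similarity over all feature vectors of one class.

    Assumption: each feature map has already been flattened to a vector.
    """
    pairs = list(combinations(features, 2))
    if not pairs:
        raise ValueError("need at least two feature vectors")
    return sum(cosine_similarity(u, v) for u, v in pairs) / len(pairs)


if __name__ == "__main__":
    # Toy feature vectors standing in for three images of the same class.
    same_class = [[1.0, 0.9, 0.1], [0.9, 1.0, 0.2], [1.1, 0.8, 0.0]]
    print(round(mean_intraclass_similarity(same_class), 4))
```

Under this reading, a higher score means the network maps different images of the same class to more consistent features, which is the property the table is meant to compare across methods.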

[1] YU P, QIN Kai, and CLAUSI D A. Unsupervised polarimetric SAR image segmentation and classification using region growing with edge penalty[J]. IEEE Transactions on Geoscience and Remote Sensing, 2012, 50(4): 1302–1317. doi: 10.1109/TGRS.2011.2164085.

[2] SHANG Ronghua, LIU Mengmeng, JIAO Licheng, et al. Region-level SAR image segmentation based on edge feature and label assistance[J]. IEEE Transactions on Geoscience and Remote Sensing, 2022, 60: 5237216. doi: 10.1109/TGRS.2022.3217053.

[3] GAO Gui. Statistical modeling of SAR images: A survey[J]. Sensors, 2010, 10(1): 775–795. doi: 10.3390/s100100775.

[4] QIN Xianxiang, ZOU Huanxin, ZHOU Shilin, et al. Region-based classification of SAR images using kullback-leibler distance between generalized gamma distributions[J]. IEEE Geoscience and Remote Sensing Letters, 2015, 12(8): 1655–1659. doi: 10.1109/LGRS.2015.2418217.

[5] LOPES A, LAUR H, and NEZRY E. Statistical distribution and texture in multilook and complex sar images[C]. The 10th Annual International Symposium on Geoscience and Remote Sensing, College Park, MD, USA, 1990: 2427–2430. doi: 10.1109/IGARSS.1990.689030.

[6] MENON M V. Estimation of the shape and scale parameters of the weibull distribution[J]. Technometrics, 1963, 5(2): 175–182. doi: 10.1080/00401706.1963.10490073.

[7] DU Peijun, SAMAT A, WASKE B, et al. Random forest and rotation forest for fully polarized SAR image classification using polarimetric and spatial features[J]. ISPRS Journal of Photogrammetry and Remote Sensing, 2015, 105: 38–53. doi: 10.1016/j.isprsjprs.2015.03.002.

[8] PARIKH H, PATEL S, and PATEL V. Classification of SAR and PolSAR images using deep learning: A review[J]. International Journal of Image and Data Fusion, 2020, 11(1): 1–32. doi: 10.1080/19479832.2019.1655489.

[9] ZHANG Yipeng, LU Dongdong, QIU Xiaolan, et al. Few-shot ship classification of SAR images via scattering point topology and dual-branch convolutional neural network[J]. Journal of Radars, 2024, 13(2): 411–427. doi: 10.12000/JR23172.

[10] LIU Fang, JIAO Licheng, HOU Biao, et al. POL-SAR image classification based on wishart DBN and local spatial information[J]. IEEE Transactions on Geoscience and Remote Sensing, 2016, 54(6): 3292–3308. doi: 10.1109/TGRS.2016.2514504.

[11] GENG Jie, FAN Jianchao, WANG Hongyu, et al. High-resolution SAR image classification via deep convolutional autoencoders[J]. IEEE Geoscience and Remote Sensing Letters, 2015, 12(11): 2351–2355. doi: 10.1109/LGRS.2015.2478256.

[12] ZHU Yimin, YUAN Kexin, ZHONG Wenlong, et al. Spatial-spectral ConvNeXt for hyperspectral image classification[J]. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2023, 16: 5453–5463. doi: 10.1109/JSTARS.2023.3282975.

[13] DAS A and CHANDRAN S. Transfer learning with Res2Net for remote sensing scene classification[C]. 2021 11th International Conference on Cloud Computing, Data Science & Engineering (Confluence), Noida, India, 2021: 796–801. doi: 10.1109/Confluence51648.2021.9377148.

[14] NIU Chaoyang, GAO Ouyang, LU Wanjie, et al. Reg-SA-UNet++: A lightweight landslide detection network based on single-temporal images captured postlandslide[J]. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2022, 15: 9746–9759. doi: 10.1109/JSTARS.2022.3219897.

[15] SIVASUBRAMANIAN A, VR P, HARI T, et al. Transformer-based convolutional neural network approach for remote sensing natural scene classification[J]. Remote Sensing Applications: Society and Environment, 2024, 33: 101126. doi: 10.1016/j.rsase.2023.101126.

[16] WANG Haitao, CHEN Jie, HUANG Zhixiang, et al. FPT: Fine-grained detection of driver distraction based on the feature pyramid vision transformer[J]. IEEE Transactions on Intelligent Transportation Systems, 2022, 24(2): 1594–1608. doi: 10.1109/TITS.2022.3219676.

[17] DAI Yuqi, ZHENG Tie, XUE Changbin, et al. SegMarsViT: Lightweight mars terrain segmentation network for autonomous driving in planetary exploration[J]. Remote Sensing, 2022, 14(24): 6297. doi: 10.3390/rs14246297.

[18] CHEN Yihan, GU Xingyu, LIU Zhen, et al. A fast inference vision transformer for automatic pavement image classification and its visual interpretation method[J]. Remote Sensing, 2022, 14(8): 1877. doi: 10.3390/rs14081877.

[19] HUAN Hai and ZHANG Bo. FDAENet: Frequency domain attention encoder-decoder network for road extraction of remote sensing images[J]. Journal of Applied Remote Sensing, 2024, 18(2): 024510. doi: 10.1117/1.JRS.18.024510.

[20] ZHANG Si, TONG Hanghang, XU Jiejun, et al. Graph convolutional networks: A comprehensive review[J]. Computational Social Networks, 2019, 6(1): 11. doi: 10.1186/s40649-019-0069-y.

[21] HAMILTON W L, YING R, and LESKOVEC J. Inductive representation learning on large graphs[C]. The 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 2017: 1025–1035.

[22] LIU Chuang, ZHAN Yibing, WU Jia, et al. Graph pooling for graph neural networks: Progress, challenges, and opportunities[C]. The Thirty-Second International Joint Conference on Artificial Intelligence, Macao, China, 2023: 6712–6722. doi: 10.24963/ijcai.2023/752.

[23] VELIČKOVIĆ P, CUCURULL G, CASANOVA A, et al. Graph attention networks[C]. The Sixth International Conference on Learning Representation, Vancouver, BC, Canada, 2018: 1–12.

[24] OVEIS A H, GIUSTI E, GHIO S, et al. A survey on the applications of convolutional neural networks for synthetic aperture radar: Recent advances[J]. IEEE Aerospace and Electronic Systems Magazine, 2022, 37(5): 18–42. doi: 10.1109/MAES.2021.3117369.

[25] WANG Ru, NIE Yinju, and GENG Jie. Multiscale superpixel-guided weighted graph convolutional network for polarimetric SAR image classification[J]. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2024, 17: 3727–3741. doi: 10.1109/JSTARS.2024.3355290.

[26] ZHANG Yawei, CAO Yu, FENG Xubin, et al. SAR object detection encounters deformed complex scenes and aliased scattered power distribution[J]. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2022, 15: 4482–4495. doi: 10.1109/JSTARS.2022.3157749.

[27] WANG Siyuan, WANG Yinghua, LIU Hongwei, et al. A few-shot SAR target recognition method by unifying local classification with feature generation and calibration[J]. IEEE Transactions on Geoscience and Remote Sensing, 2024, 62: 5200319. doi: 10.1109/TGRS.2023.3337856.

[28] FENG Yunxiang, YOU Yanan, TIAN Jing, et al. OEGR-DETR: A novel detection transformer based on orientation enhancement and group relations for SAR object detection[J]. Remote Sensing, 2023, 16(1): 106. doi: 10.3390/rs16010106.

[29] LYU Yixuan, WANG Zhirui, WANG Peijin, et al. Scattering information and meta-learning based SAR images interpretation for aircraft target recognition[J]. Journal of Radars, 2022, 11(4): 652–665. doi: 10.12000/JR22044.

[30] ZHANG Rui, WANG Ziqi, LI Yang, et al. Task-aware few-shot SAR image classification method based on multi-scale attention mechanism[J]. Computer Science, 2024, 51(8): 160–167. doi: 10.11896/jsjkx.230500171.

[31] SHI Baodai, ZHANG Qin, LI Yuhuan, et al. SAR image target recognition based on hybrid attention mechanism[J]. Electronics Optics & Control, 2023, 30(4): 45–49. doi: 10.3969/j.issn.1671-637X.2023.04.009.

[32] LUO Ru, ZHAO Lingjun, HE Qishan, et al. Intelligent technology for aircraft detection and recognition through SAR imagery: Advancements and prospects[J]. Journal of Radars, 2024, 13(2): 307–330. doi: 10.12000/JR23056.

[33] KANG Yuzhuo, WANG Zhirui, ZUO Haoyu, et al. ST-Net: Scattering topology network for aircraft classification in high-resolution SAR images[J]. IEEE Transactions on Geoscience and Remote Sensing, 2023, 61: 5202117. doi: 10.1109/TGRS.2023.3236987.

[34] YI Yonghao, YOU Yanan, LI Chao, et al. EFM-Net: An essential feature mining network for target fine-grained classification in optical remote sensing images[J]. IEEE Transactions on Geoscience and Remote Sensing, 2023, 61: 5606416. doi: 10.1109/TGRS.2023.3265669.

[35] WEN Gongjian, MA Conghui, DING Baiyuan, et al. SAR target physics interpretable recognition method based on three dimensional parametric electromagnetic part model[J]. Journal of Radars, 2020, 9(4): 608–621. doi: 10.12000/JR20099.

[36] POTTER L C and MOSES R L. Attributed scattering centers for SAR ATR[J]. IEEE Transactions on Image Processing, 1997, 6(1): 79–91. doi: 10.1109/83.552098.

[37] DATCU M, HUANG Zhongling, ANGHEL A, et al. Explainable, physics-aware, trustworthy artificial intelligence: A paradigm shift for synthetic aperture radar[J]. IEEE Geoscience and Remote Sensing Magazine, 2023, 11(1): 8–25. doi: 10.1109/MGRS.2023.3237465.

[38] ZHU Kaiwen, YOU Yanan, CAO Jingyi, et al. Hybrid-Gird: An explainable method for fine-grained classification of remote sensing images[J]. National Remote Sensing Bulletin, 2024, 28(7): 1722–1734. doi: 10.11834/jrs.20243252.

[39] DENG Huiqi, ZOU Na, DU Mengnan, et al. Unifying fourteen post-hoc attribution methods with taylor interactions[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2024, 46(7): 4625–4640. doi: 10.1109/TPAMI.2024.3358410.

[40] DING Baiyuan, WEN Gongjian, YU Liansheng, et al. Matching of attributed scattering center and its application to synthetic aperture radar automatic target recognition[J]. Journal of Radars, 2017, 6(2): 157–166. doi: 10.12000/JR16104.

[41] HUANG Zhongling, YAO Xiwen, and HAN Junwei. Progress and perspective on physically explainable deep learning for synthetic aperture radar image interpretation[J]. Journal of Radars, 2022, 11(1): 107–125. doi: 10.12000/JR21165.

[42] FENG Sijia, JI Kefeng, WANG Fulai, et al. Electromagnetic Scattering Feature (ESF) module embedded network based on ASC model for robust and interpretable SAR ATR[J]. IEEE Transactions on Geoscience and Remote Sensing, 2022, 60: 5235415. doi: 10.1109/TGRS.2022.3208333.

[43] ZHANG Chen, WANG Yinghua, LIU Hongwei, et al. VSFA: Visual and scattering topological feature fusion and alignment network for unsupervised domain adaptation in SAR target recognition[J]. IEEE Transactions on Geoscience and Remote Sensing, 2023, 61: 5216920. doi: 10.1109/TGRS.2023.3317828.

[44] WANG Ruiqiu, SU Tao, XU Dan, et al. MIGA-Net: Multi-view image information learning based on graph attention network for SAR target recognition[J]. IEEE Transactions on Circuits and Systems for Video Technology, 2024, 34(11): 10779–10792. doi: 10.1109/TCSVT.2024.3418979.

[45] JADERBERG M, SIMONYAN K, ZISSERMAN A, et al. Spatial transformer networks[C]. The 28th International Conference on Neural Information Processing Systems, Montreal, Canada, 2015: 2017–2025.

[46] KEYDEL E R, LEE S W, and MOORE J T. MSTAR extended operating conditions: A tutorial[C]. The SPIE 2757, Algorithms for Synthetic Aperture Radar Imagery III, Orlando, FL, US, 1996: 228–242. doi: 10.1117/12.242059.

[47] SUN Xian, LV Yixuan, WANG Zhirui, et al. SCAN: Scattering characteristics analysis network for few-shot aircraft classification in high-resolution SAR images[J]. IEEE Transactions on Geoscience and Remote Sensing, 2022, 60: 5226517. doi: 10.1109/TGRS.2022.3166174.

[48] ZHAO Juanping, ZHANG Zenghui, YAO Wei, et al. OpenSARUrban: A sentinel-1 SAR image dataset for urban interpretation[J]. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2020, 13: 187–203. doi: 10.1109/JSTARS.2019.2954850.

[49] ZHOU Xiaoqian, LUO Cai, REN Peng, et al. Multiscale complex-valued feature attention convolutional neural network for SAR automatic target recognition[J]. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2024, 17: 2052–2066. doi: 10.1109/JSTARS.2023.3342986.

[50] JIANG Chunyun, ZHANG Huiqiang, ZHAN Ronghui, et al. Open-set recognition model for SAR target based on capsule network with the KLD[J]. Remote Sensing, 2024, 16(17): 3141. doi: 10.3390/rs16173141.

[51] ZHANG Tong, TONG Xiaobao, and WANG Yong. Semantics-assisted multiview fusion for SAR automatic target recognition[J]. IEEE Geoscience and Remote Sensing Letters, 2024, 21: 4007005. doi: 10.1109/LGRS.2024.3374375.

[52] SIMONYAN K and ZISSERMAN A. Very deep convolutional networks for large-scale image recognition[EB/OL]. https://arxiv.org/abs/1409.1556, 2015.

[53] HE Kaiming, ZHANG Xiangyu, REN Shaoqing, et al. Deep residual learning for image recognition[C]. 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 2016: 770–778. doi: 10.1109/CVPR.2016.90.

[54] SZEGEDY C, VANHOUCKE V, IOFFE S, et al. Rethinking the inception architecture for computer vision[C]. 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 2016: 2818–2826. doi: 10.1109/CVPR.2016.308.

[55] LIU Ze, LIN Yutong, CAO Yue, et al. Swin transformer: Hierarchical vision transformer using shifted windows[C]. 2021 IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 2021: 9992–10002. doi: 10.1109/ICCV48922.2021.00986.

[56] LI Miaoge, CHEN Bo, WANG Dongsheng, et al. CNN model visualization method for SAR image target classification[J]. Journal of Radars, 2024, 13(2): 359–373. doi: 10.12000/JR23107.

[57] CHEN Hongkai, LUO Zixin, ZHANG Jiahui, et al. Learning to match features with seeded graph matching network[C]. 2021 IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 2021: 6281–6290. doi: 10.1109/ICCV48922.2021.00624.

[58] LOWE D G. Distinctive image features from scale-invariant keypoints[J]. International Journal of Computer Vision, 2004, 60(2): 91–110. doi: 10.1023/B:VISI.0000029664.99615.94.

[59] RADFORD A, KIM J W, HALLACY C, et al. Learning transferable visual models from natural language supervision[EB/OL]. https://arxiv.org/abs/2103.00020, 2021.
