Physically Explainable Intelligent Perception and Application of SAR Target Characteristics Based on Time-frequency Analysis


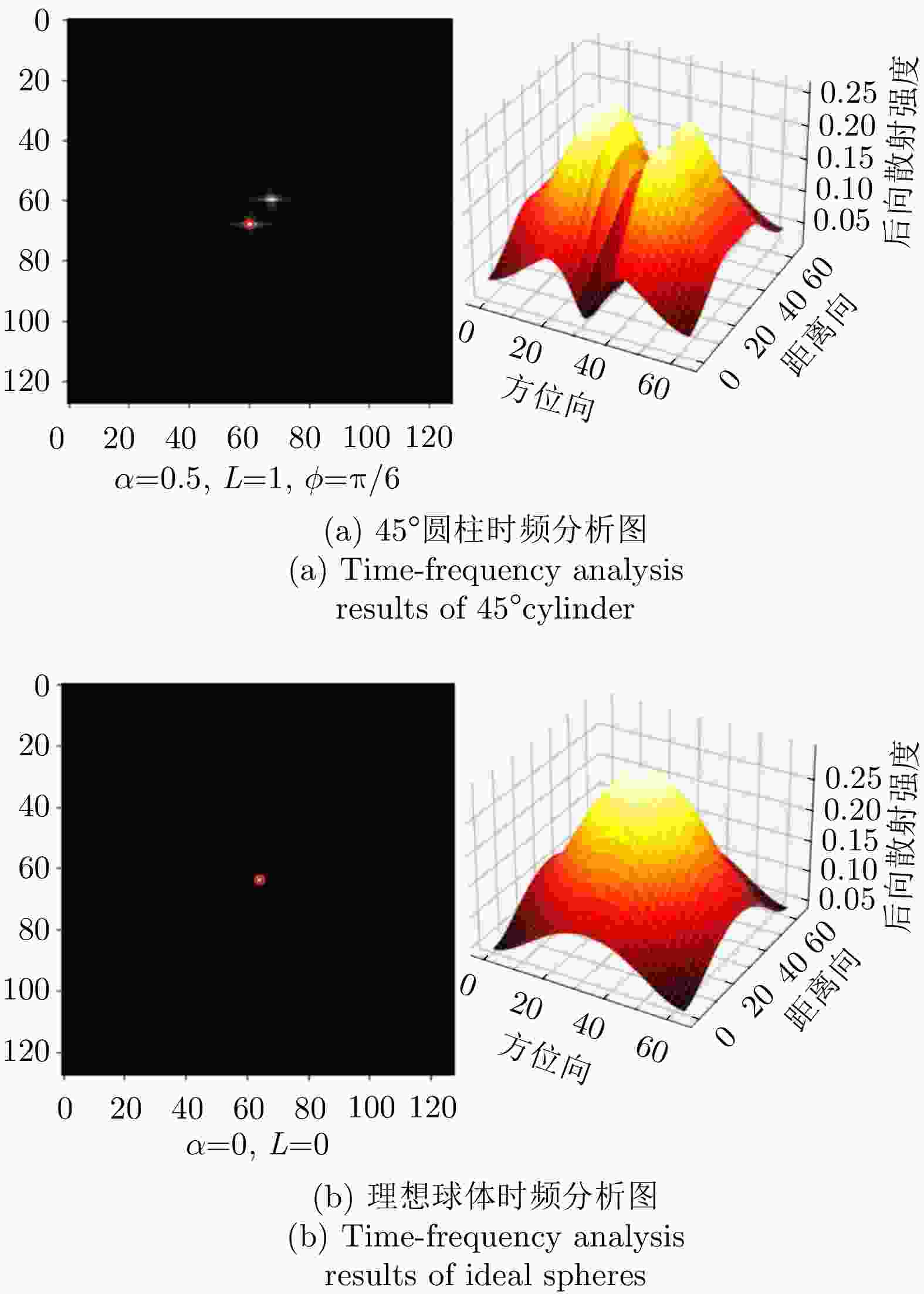

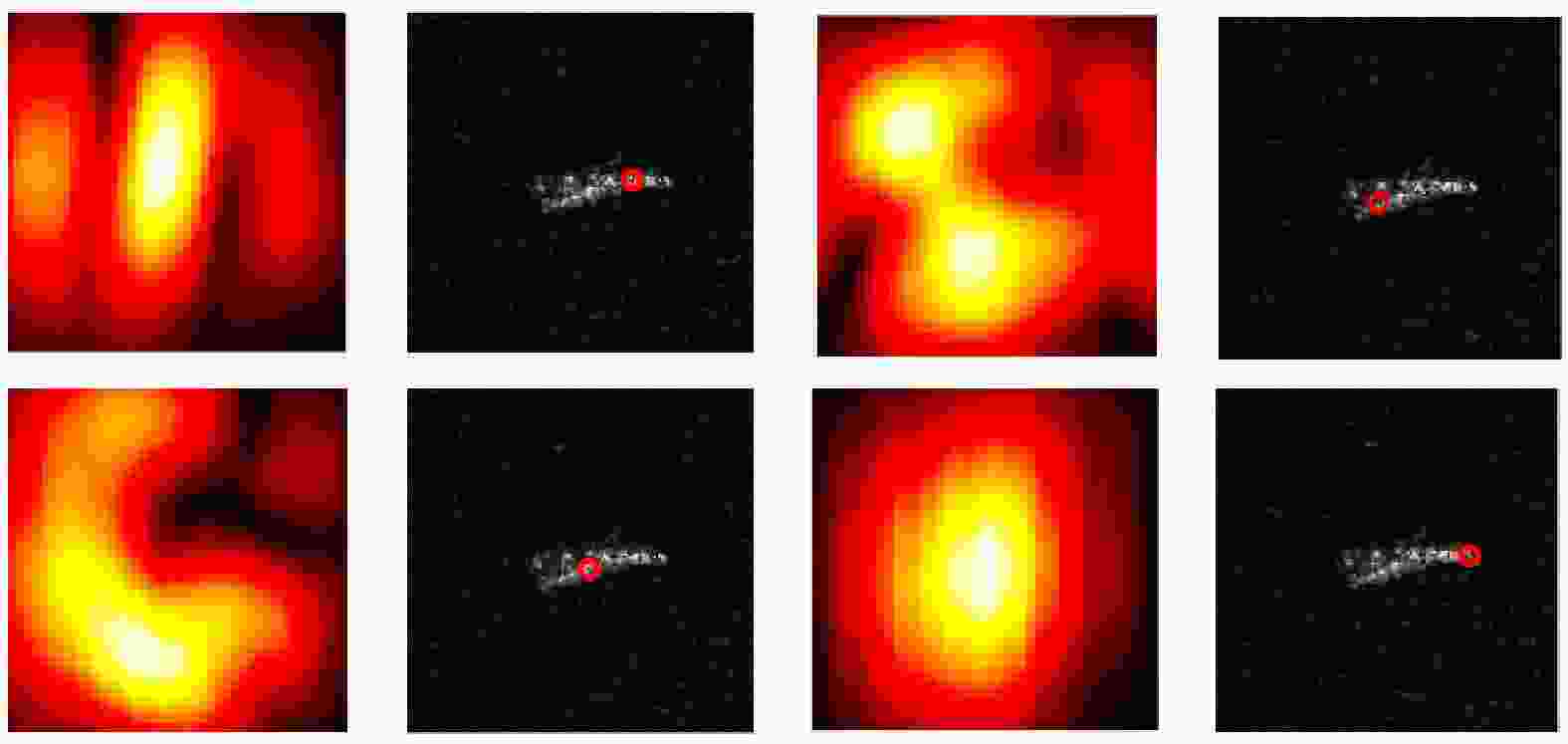

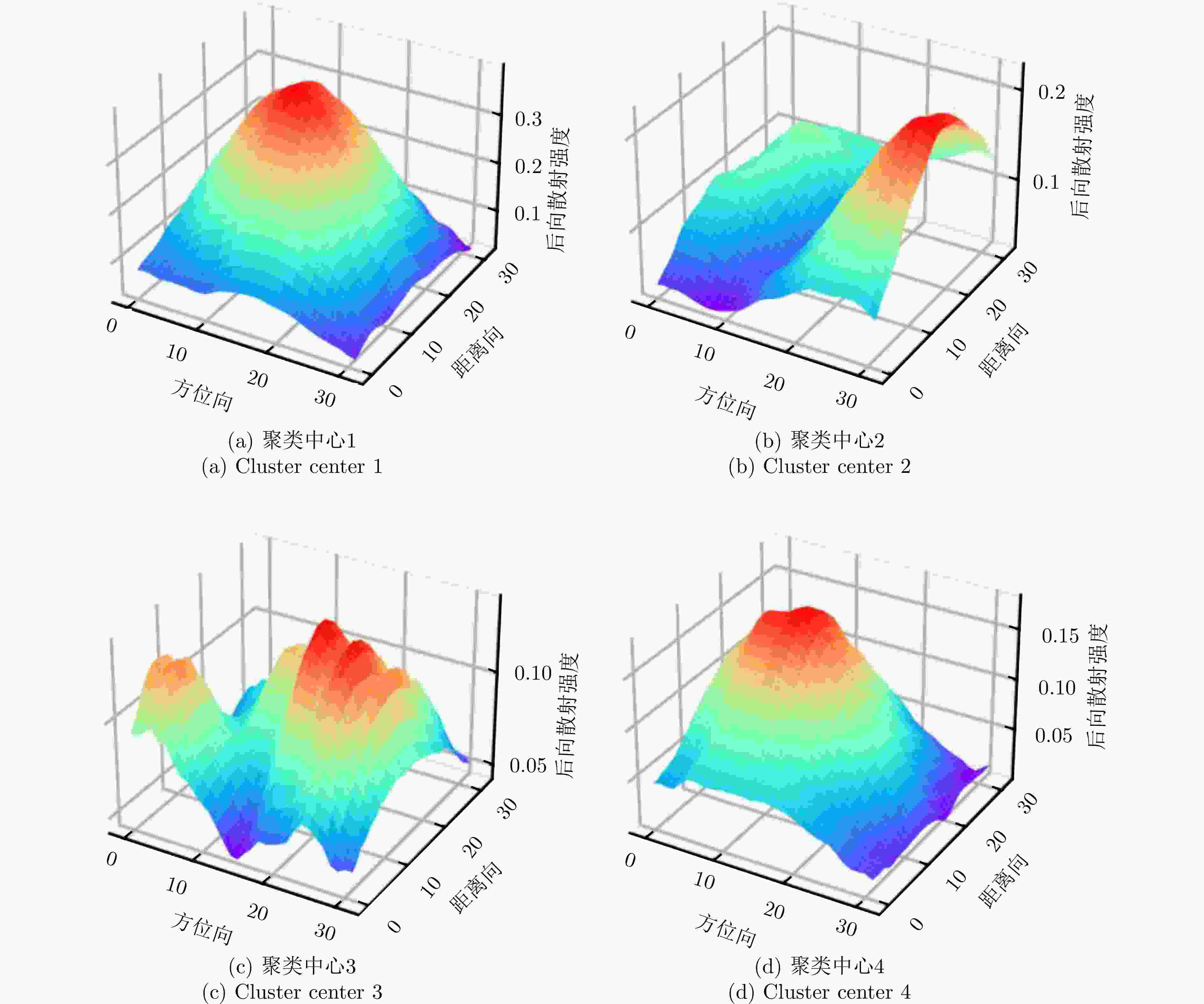

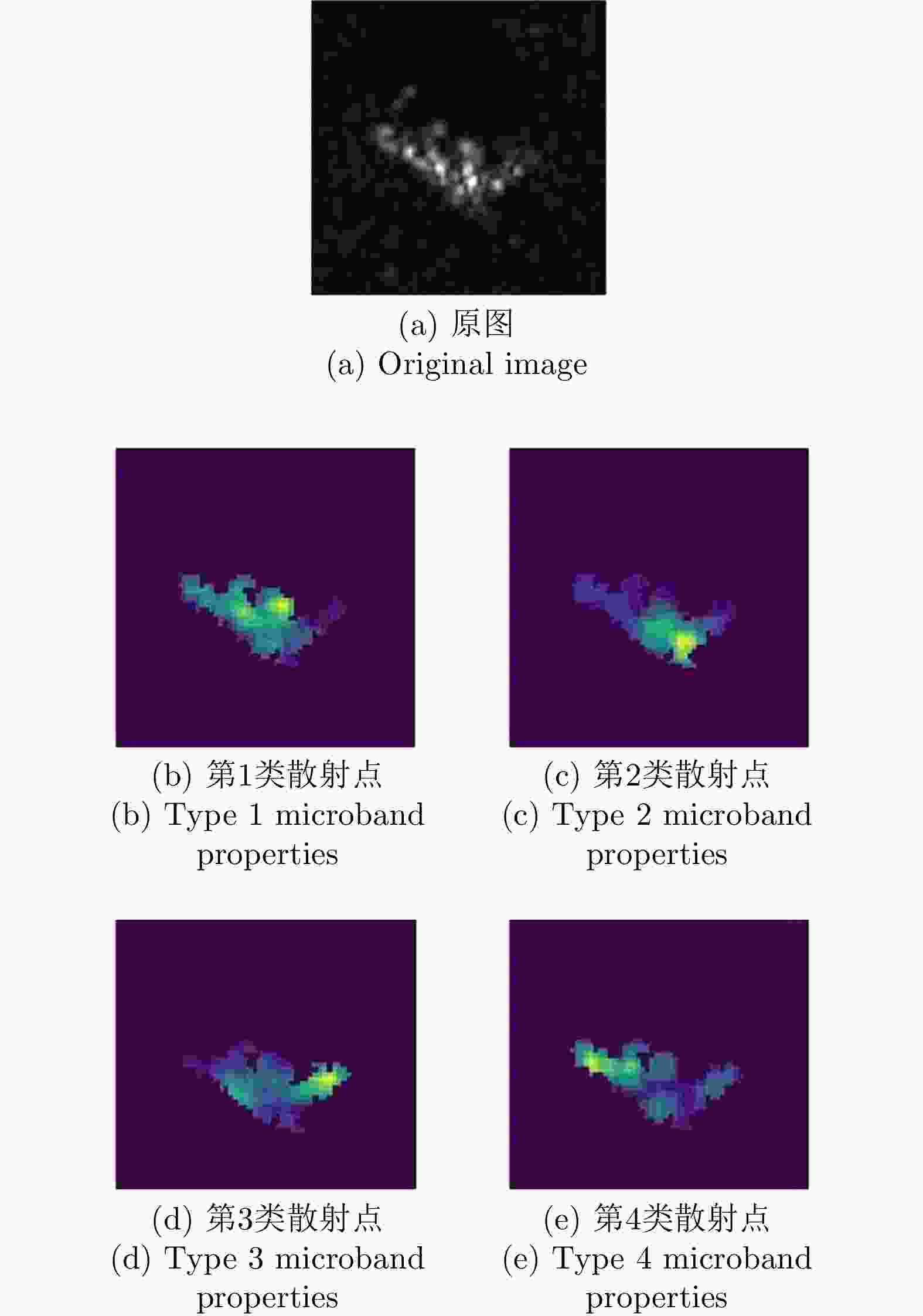

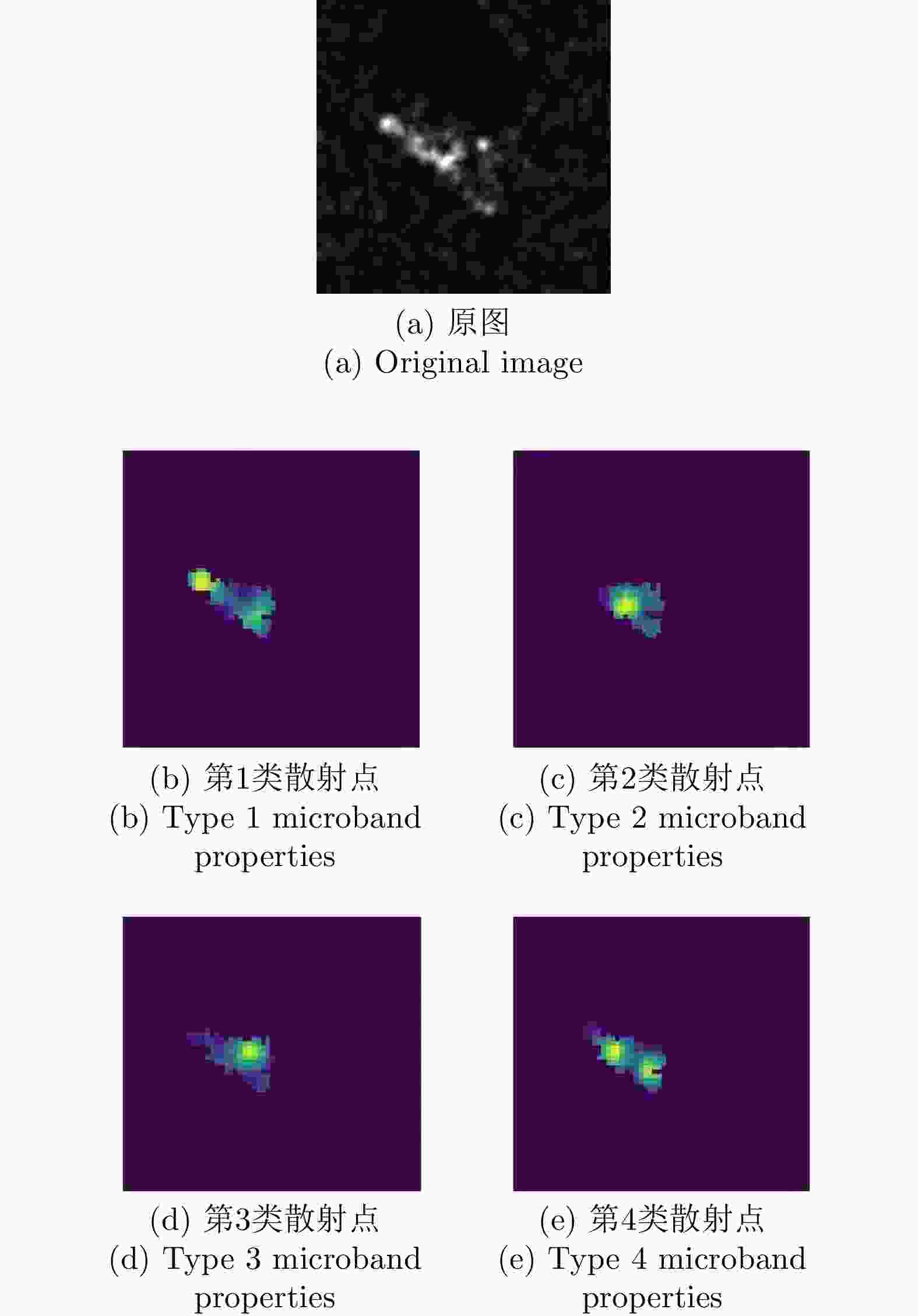

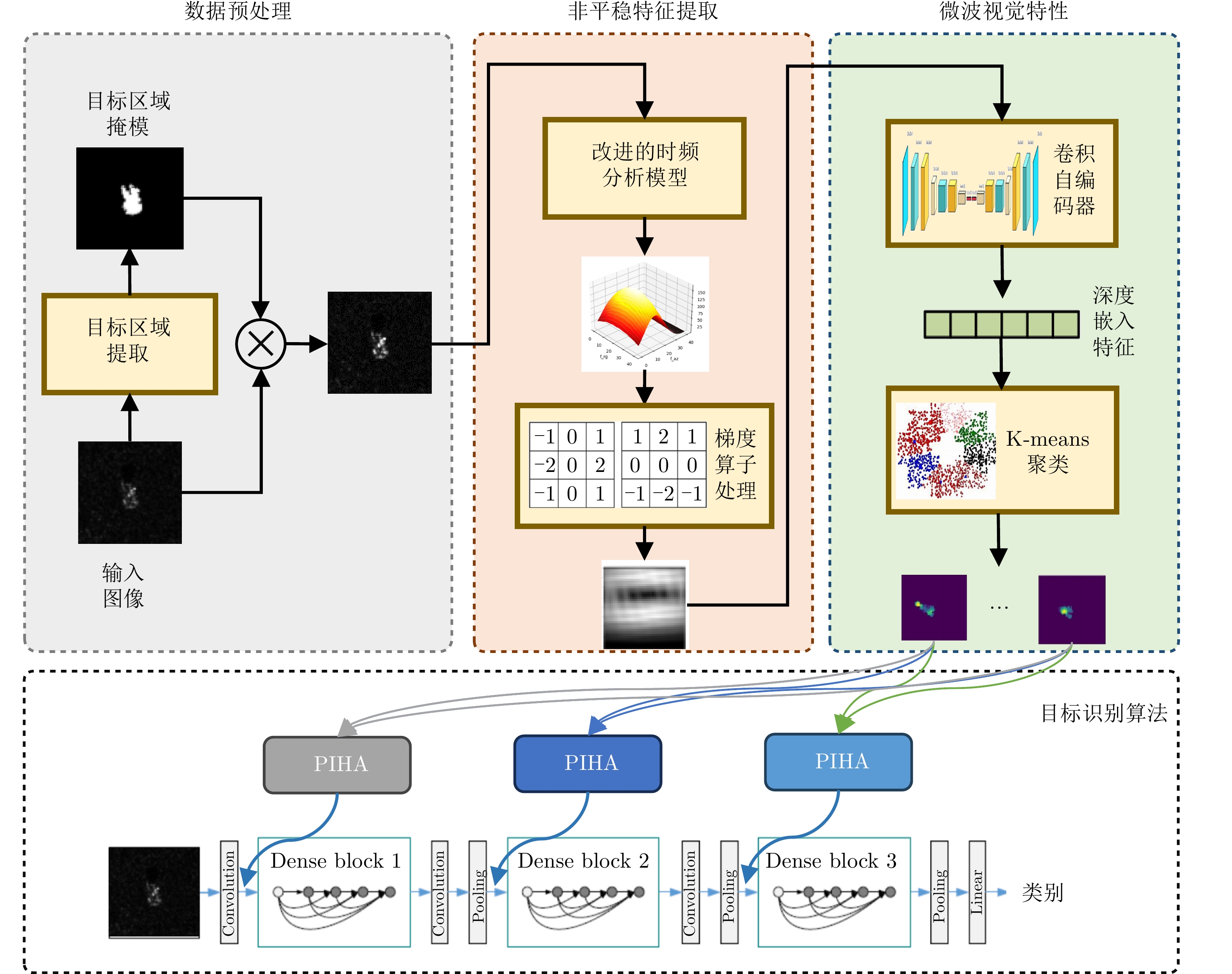

Abstract: Intelligent target recognition algorithms for Synthetic Aperture Radar (SAR) still lack robustness, generalizability, and interpretability. A current research focus is to understand the microwave properties of SAR targets and combine them with advanced deep learning algorithms to achieve efficient and robust SAR target recognition. SAR target characteristic inversion methods usually have high computational complexity, which makes them difficult to combine with deep neural networks for end-to-end, real-time prediction. Promoting the use of SAR target physical properties in intelligent recognition tasks therefore requires microwave physical property perception methods that are efficient, intelligent, and interpretable. This paper treats the nonstationary behavior of high-resolution SAR targets as a typical microwave vision characteristic and proposes an improved intelligent perception method for target characteristics based on Time-Frequency Analysis (TFA). The method optimizes the processing flow and the computational efficiency, making it better suited to SAR target recognition scenarios, and it is further integrated into a deep neural network for SAR target recognition, where it yields consistent performance gains. The proposed method generalizes well, is computationally efficient, and produces physically interpretable classification results for SAR target characteristics. The performance gain it brings to target recognition algorithms is comparable to that of the Attributed Scattering Center (ASC) model.

Keywords:

- Synthetic Aperture Radar (SAR)

- Target recognition

- Target characteristics

- Microwave vision

- Time-Frequency Analysis (TFA)
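To make the idea of time-frequency analysis on a complex SAR chip concrete, the following is a minimal sketch of a sub-band decomposition: the 2-D spectrum is cut into overlapping windows, and each window is transformed back to the image domain, so that every pixel receives a small grid of sub-band responses. It is an illustration under stated assumptions, not the authors' implementation. The function name `subband_spectrogram`, the Hann taper, and the `win` and `stride` values are hypothetical choices, and the sketch does not reproduce the optimized processing flow that the abstract mentions.

```python
import numpy as np

def subband_spectrogram(chip, win=(16, 16), stride=(8, 8)):
    """Return sub-band magnitude images of a complex SAR chip.

    chip: 2-D complex array (azimuth x range).
    Output shape: (n_azimuth_bands, n_range_bands, H, W).
    win and stride are hypothetical settings, given in spectral bins.
    """
    H, W = chip.shape
    # Centered 2-D spectrum of the complex image.
    spec = np.fft.fftshift(np.fft.fft2(chip))
    # Separable Hann taper applied inside every spectral window.
    taper = np.outer(np.hanning(win[0]), np.hanning(win[1]))
    az_starts = range(0, H - win[0] + 1, stride[0])
    rg_starts = range(0, W - win[1] + 1, stride[1])
    out = np.empty((len(az_starts), len(rg_starts), H, W))
    for i, a in enumerate(az_starts):
        for j, r in enumerate(rg_starts):
            # Keep one tapered window of the spectrum, zero elsewhere.
            sub = np.zeros_like(spec)
            sub[a:a + win[0], r:r + win[1]] = spec[a:a + win[0], r:r + win[1]] * taper
            # Back to the image domain: one low-resolution sub-band image.
            out[i, j] = np.abs(np.fft.ifft2(np.fft.ifftshift(sub)))
    return out

# Usage: an ideal point scatterer has a flat spectrum, so its response
# is the same in every sub-band (stationary behavior).
chip = np.zeros((64, 64), dtype=complex)
chip[32, 32] = 1.0
sb = subband_spectrogram(chip)
print(sb.shape)
print(sb[:, :, 32, 32].std())
```

A scatterer whose response varies strongly across the sub-band grid would be a candidate nonstationary target in this picture, which is the kind of behavior the abstract refers to.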

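Table 1. OFA evaluation method settings for the MSTAR dataset

| Class | Train/val serial | Train/val depression | Train/val number | OFA-1 serial | OFA-1 depression | OFA-1 number | OFA-2 serial | OFA-2 depression | OFA-2 number | OFA-3 serial | OFA-3 depression | OFA-3 number |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| BMP-2 | 9563 | 17° | 233 | 9563 | 15° | 195 | 9563 | 15° | 195 | / | / | / |
| | / | / | / | / | / | / | 9566 | 15° | 196 | / | / | / |
| | / | / | / | / | / | / | C21 | 15° | 196 | / | / | / |
| T-72 | 132 | 17° | 232 | 132 | 15° | 196 | 132 | 15° | 196 | / | / | / |
| | / | / | / | / | / | / | 812 | 15° | 195 | / | / | / |
| | / | / | / | / | / | / | S7 | 15° | 191 | / | / | / |
| BTR-70 | C71 | 17° | 233 | C71 | 15° | 196 | C71 | 15° | 196 | / | / | / |
| BTR-60 | k10yt7532 | 17° | 256 | k10yt7532 | 15° | 195 | k10yt7532 | 15° | 195 | / | / | / |
| 2S1 | b01 | 17° | 299 | b01 | 15° | 274 | b01 | 15° | 274 | b01 | 15°/30°/45° | 274/288/303 |
| BRDM-2 | E-71 | 17° | 298 | E-71 | 15° | 274 | E-71 | 15° | 274 | E-71 | 15°/30°/45° | 274/420/423 |
| D7 | 92v13015 | 17° | 299 | 92v13015 | 15° | 274 | 92v13015 | 15° | 274 | / | / | / |
| T-62 | A51 | 17° | 299 | A51 | 15° | 273 | A51 | 15° | 273 | / | / | / |
| ZIL-131 | E12 | 17° | 299 | E12 | 15° | 274 | E12 | 15° | 274 | / | / | / |
| ZSU-234 | d08 | 17° | 299 | d08 | 15° | 274 | d08 | 15° | 274 | d08 | 15°/30°/45° | 274/406/422 |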

Table 2. Comparison of different numbers of cluster centers (%)

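| Number of clusters | 90 / OFA-1 | 90 / OFA-2 | 90 / OFA-3 | 50 / OFA-1 | 50 / OFA-2 | 50 / OFA-3 |
|---|---|---|---|---|---|---|
| 2 | 96.49±1.61 | <u>93.99±0.81</u> | 62.32±2.11 | 94.54±0.98 | <u>90.57±0.98</u> | **61.96±0.92** |
| 4 | **97.40±0.38** | **94.38±0.74** | **64.55±1.54** | **94.79±1.30** | **91.27±1.95** | <u>61.30±1.30</u> |
| 8 | <u>96.95±0.86</u> | 93.44±0.90 | <u>63.26±3.34</u> | <u>94.58±1.39</u> | 90.53±2.08 | 59.14±4.45 |

| Number of clusters | 30 / OFA-1 | 30 / OFA-2 | 30 / OFA-3 | 10 / OFA-1 | 10 / OFA-2 | 10 / OFA-3 |
|---|---|---|---|---|---|---|
| 2 | 88.48±0.91 | <u>84.90±1.20</u> | **60.95±2.39** | 69.58±1.02 | <u>64.31±1.65</u> | **54.78±2.61** |
| 4 | <u>89.10±1.36</u> | **85.05±1.49** | <u>60.86±1.03</u> | <u>70.23±1.94</u> | 63.92±2.13 | <u>54.24±1.29</u> |
| 8 | **89.13±1.20** | 84.56±1.68 | 56.42±2.66 | **70.85±1.82** | **64.85±1.46** | 53.41±2.43 |

Note: bold and underline mark the best and second-best results, respectively.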
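Table 2 varies the number of cluster centers (2, 4, 8) used to group target characteristics. This excerpt does not name the clustering algorithm, so the sketch below assumes plain k-means (Lloyd's algorithm) on per-pixel feature vectors. The function name `kmeans`, its parameters, and the synthetic features are hypothetical and only illustrate how the number of centers enters as a parameter.

```python
import numpy as np

def kmeans(features, k, n_iter=50, seed=0):
    """Plain k-means (Lloyd's algorithm); an assumed stand-in clustering step.

    features: array of shape (n_samples, n_dims), e.g. one vector per pixel.
    Returns (labels, centers).
    """
    rng = np.random.default_rng(seed)
    # Initialize the centers with k distinct samples.
    centers = features[rng.choice(len(features), size=k, replace=False)].copy()
    for _ in range(n_iter):
        # Assign every sample to its nearest center.
        dist = np.linalg.norm(features[:, None, :] - centers[None, :, :], axis=2)
        labels = dist.argmin(axis=1)
        # Move each center to the mean of its members.
        for c in range(k):
            members = features[labels == c]
            if len(members):  # keep the old center if a cluster is empty
                centers[c] = members.mean(axis=0)
    # Final assignment, consistent with the returned centers.
    dist = np.linalg.norm(features[:, None, :] - centers[None, :, :], axis=2)
    return dist.argmin(axis=1), centers

# Usage with synthetic features: try the three settings compared in Table 2.
rng = np.random.default_rng(1)
feats = np.vstack([rng.normal(m, 0.1, size=(50, 4)) for m in (0.0, 5.0, 10.0)])
for k in (2, 4, 8):
    labels, centers = kmeans(feats, k)
    print(k, np.bincount(labels, minlength=k))
```

A small number of centers gives coarse but easily interpretable classes, while a larger number splits the data more finely, which is the trade-off a comparison like Table 2 examines.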

Table 3. Comparative experiments of PIHA based on ASC and microwave visual characteristics (%)

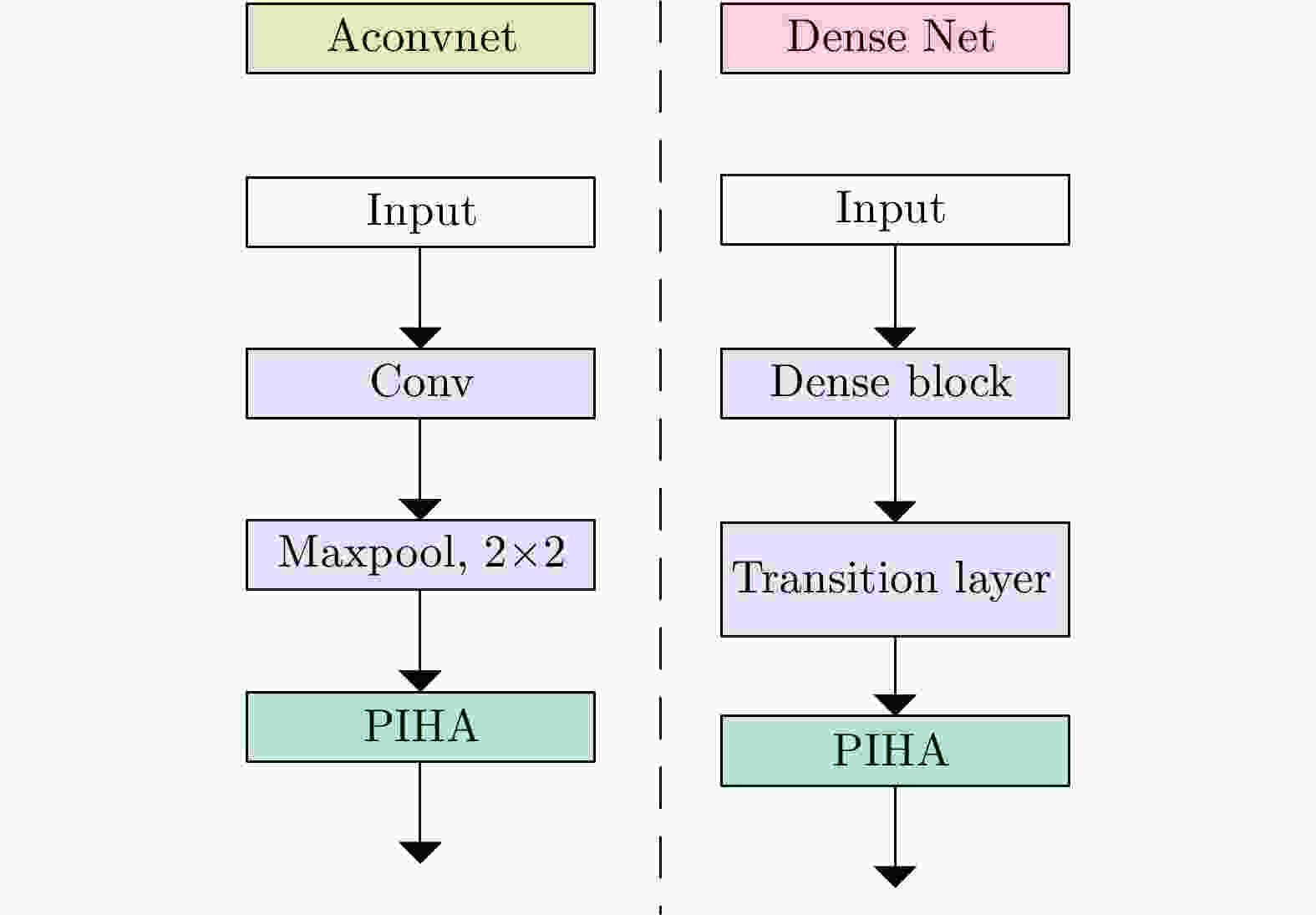

| Model | 90 / OFA-1 | 90 / OFA-2 | 90 / OFA-3 | 50 / OFA-1 | 50 / OFA-2 | 50 / OFA-3 |
|---|---|---|---|---|---|---|
| DenseNet-121 | 95.37±1.04 | 91.73±0.76 | 60.37±2.66 | 91.60±1.82 | 88.45±1.58 | 60.18±1.57 |
| +PIHA (ASC) | **97.41±0.99** | **94.40±1.66** | 62.07±2.40 | **95.32±1.30** | **91.97±1.72** | **61.81±1.16** |
| +PIHA (TFA) | 97.40±0.38 | 94.38±0.74 | **64.55±1.54** | 94.79±1.30 | 91.27±1.95 | 61.30±1.30 |
| A-ConvNet | 86.95±5.69 | 84.51±6.06 | 57.16±3.07 | 92.48±5.00 | 88.88±4.82 | **62.80±2.31** |
| +PIHA (ASC) | **94.96±2.84** | **93.02±3.24** | 57.91±1.80 | **93.13±3.24** | 89.06±3.54 | 59.11±2.89 |
| +PIHA (TFA) | 88.78±4.12 | 86.46±4.24 | **61.37±3.69** | 92.81±4.43 | **89.45±3.87** | 60.15±1.16 |

| Model | 30 / OFA-1 | 30 / OFA-2 | 30 / OFA-3 | 10 / OFA-1 | 10 / OFA-2 | 10 / OFA-3 |
|---|---|---|---|---|---|---|
| DenseNet-121 | 84.72±1.13 | 80.29±1.21 | 57.44±2.56 | 65.58±3.49 | 59.32±3.21 | **56.63±3.18** |
| +PIHA (ASC) | **90.20±1.43** | **85.98±1.73** | **61.91±1.45** | **71.97±1.97** | **65.89±2.08** | 53.87±1.85 |
| +PIHA (TFA) | 89.10±1.36 | 85.05±1.49 | 60.86±1.03 | 70.23±1.94 | 63.92±2.13 | 54.24±1.29 |
| A-ConvNet | 87.65±2.39 | 83.04±3.32 | 58.63±1.73 | 72.02±1.70 | 64.76±2.27 | 52.71±2.28 |
| +PIHA (ASC) | **91.18±2.19** | **86.49±2.49** | 58.78±1.03 | 76.11±1.93 | 69.60±2.95 | **53.22±1.56** |
| +PIHA (TFA) | 90.39±2.58 | 85.98±2.83 | **59.88±2.13** | **77.80±4.85** | **72.34±4.35** | 52.35±1.91 |

Note: bold marks the best result for each backbone; the parentheses give the physical information used.

Table 4. Comparative experiments between the proposed method and existing algorithms (%)

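| Method | Model description | Input | 90 / OFA-1 | 90 / OFA-2 | 90 / OFA-3 | 50 / OFA-1 | 50 / OFA-2 | 50 / OFA-3 |
|---|---|---|---|---|---|---|---|---|
| A-ConvNet | Data-driven | Amplitude | 86.95±5.69 | 84.51±6.06 | 57.16±3.07 | 92.48±5.00 | 88.88±4.82 | **62.80±2.31** |
| FEC | Data + physical model | Complex | 92.32±4.41 | 86.22±5.03 | 58.76±5.18 | 86.23±5.62 | 81.34±5.83 | 55.03±2.35 |
| MS-CVNets | Data-driven | Complex | <u>96.77±1.74</u> | <u>94.34±1.22</u> | **66.83±3.96** | <u>93.43±1.89</u> | <u>90.78±1.83</u> | <u>62.56±3.71</u> |
| Proposed method | Data + physical model | Complex | **97.40±0.38** | **94.38±0.74** | <u>64.55±0.15</u> | **94.79±1.30** | **91.27±1.95** | 61.30±1.30 |

| Method | Model description | Input | 30 / OFA-1 | 30 / OFA-2 | 30 / OFA-3 | 10 / OFA-1 | 10 / OFA-2 | 10 / OFA-3 |
|---|---|---|---|---|---|---|---|---|
| A-ConvNet | Data-driven | Amplitude | <u>87.65±2.39</u> | <u>83.04±3.32</u> | <u>58.63±1.73</u> | **72.02±1.70** | **64.76±2.27** | <u>52.71±2.28</u> |
| FEC | Data + physical model | Complex | 68.43±7.72 | 64.48±6.16 | 51.12±1.81 | 57.84±3.47 | 54.04±3.91 | 43.44±5.97 |
| MS-CVNets | Data-driven | Complex | 81.33±0.95 | 77.50±0.97 | 56.02±1.11 | 49.11±4.27 | 44.63±3.74 | 38.96±5.78 |
| Proposed method | Data + physical model | Complex | **89.10±1.36** | **85.05±1.49** | **60.86±1.03** | <u>70.23±1.94</u> | <u>63.92±2.13</u> | **54.24±1.29** |

Note: bold and underline mark the best and second-best results, respectively.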
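The table entries have the form mean±std, and the table note ranks methods as best and second-best. As a minimal sketch of how such entries could be produced and ranked, the example below formats repeated-run accuracies and picks the two highest means. The number of runs and the use of the sample standard deviation are assumptions, since this excerpt does not state them, and the helper names `summarize` and `best_two` are hypothetical.

```python
import statistics

def summarize(accuracies):
    """Format repeated-run accuracies (%) as 'mean±std'."""
    mean = statistics.mean(accuracies)
    std = statistics.stdev(accuracies)  # assumption: sample standard deviation
    return f"{mean:.2f}±{std:.2f}"

def best_two(mean_by_method):
    """Return the names with the highest and second-highest mean accuracy."""
    ranked = sorted(mean_by_method, key=mean_by_method.get, reverse=True)
    return ranked[0], ranked[1]

# Means copied from the 90 / OFA-1 column of Table 4.
ofa1_at_90 = {
    "A-ConvNet": 86.95,
    "FEC": 92.32,
    "MS-CVNets": 96.77,
    "Proposed method": 97.40,
}
print(best_two(ofa1_at_90))

# Two made-up run accuracies, only to show the output format.
print(summarize([97.0, 98.0]))
```

Ranking by the mean alone ignores the spread, so two entries whose intervals overlap, such as 97.40±0.38 and 96.77±1.74, are better read as comparable than as strictly ordered.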

