Human Anomalous Gait Termination Recognition via Through-the-wall Radar Based on Micro-Doppler Corner Features and Non-Local Mechanism


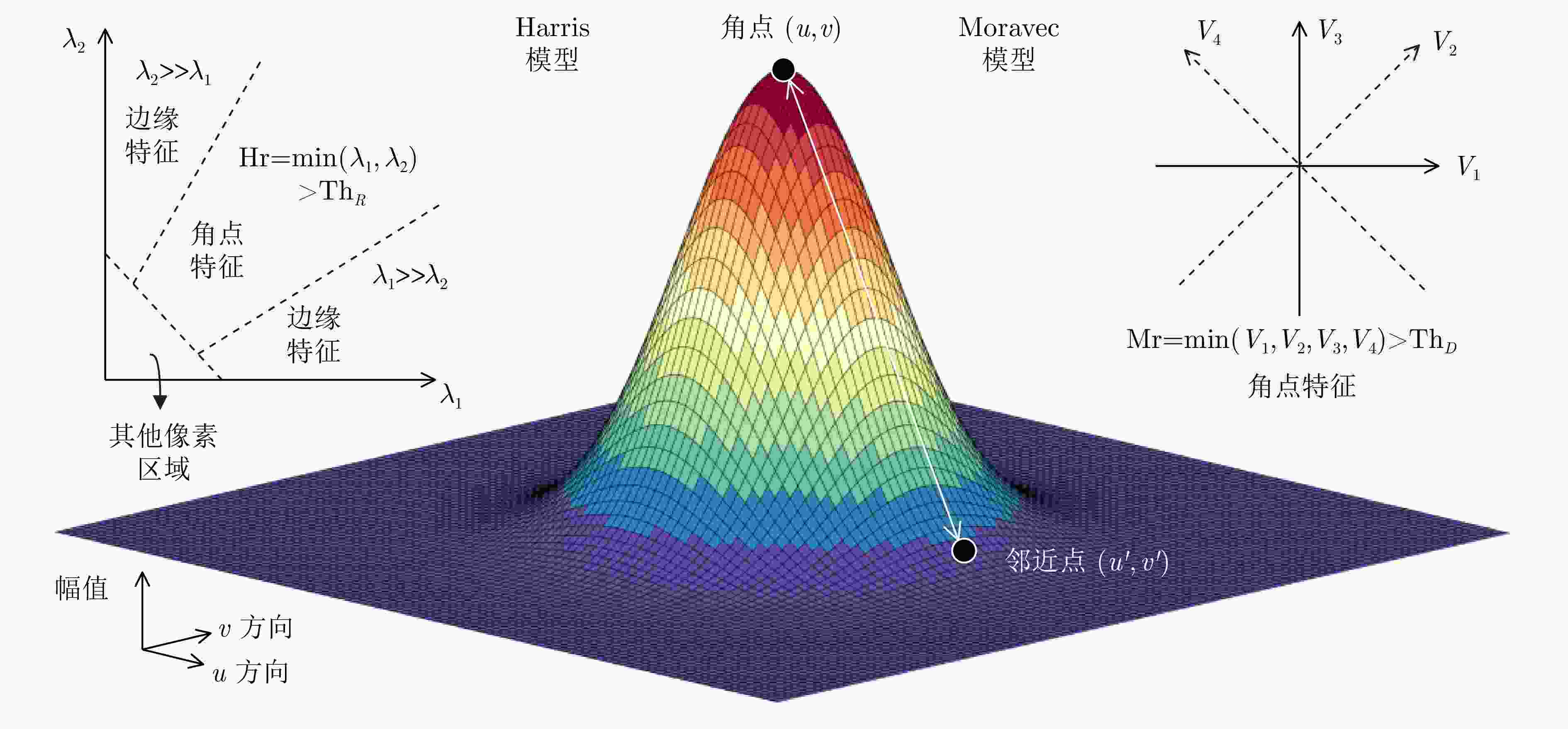

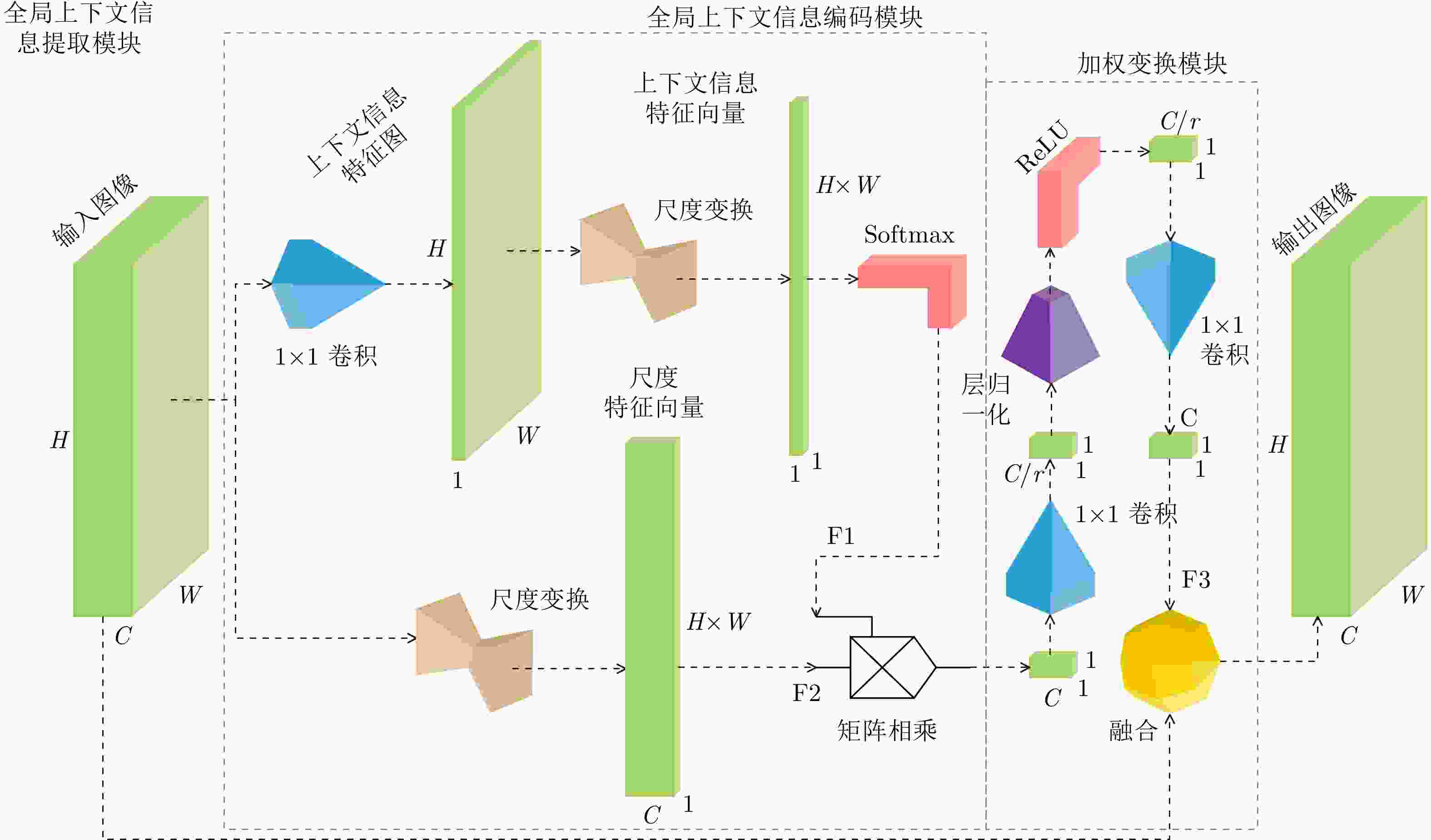

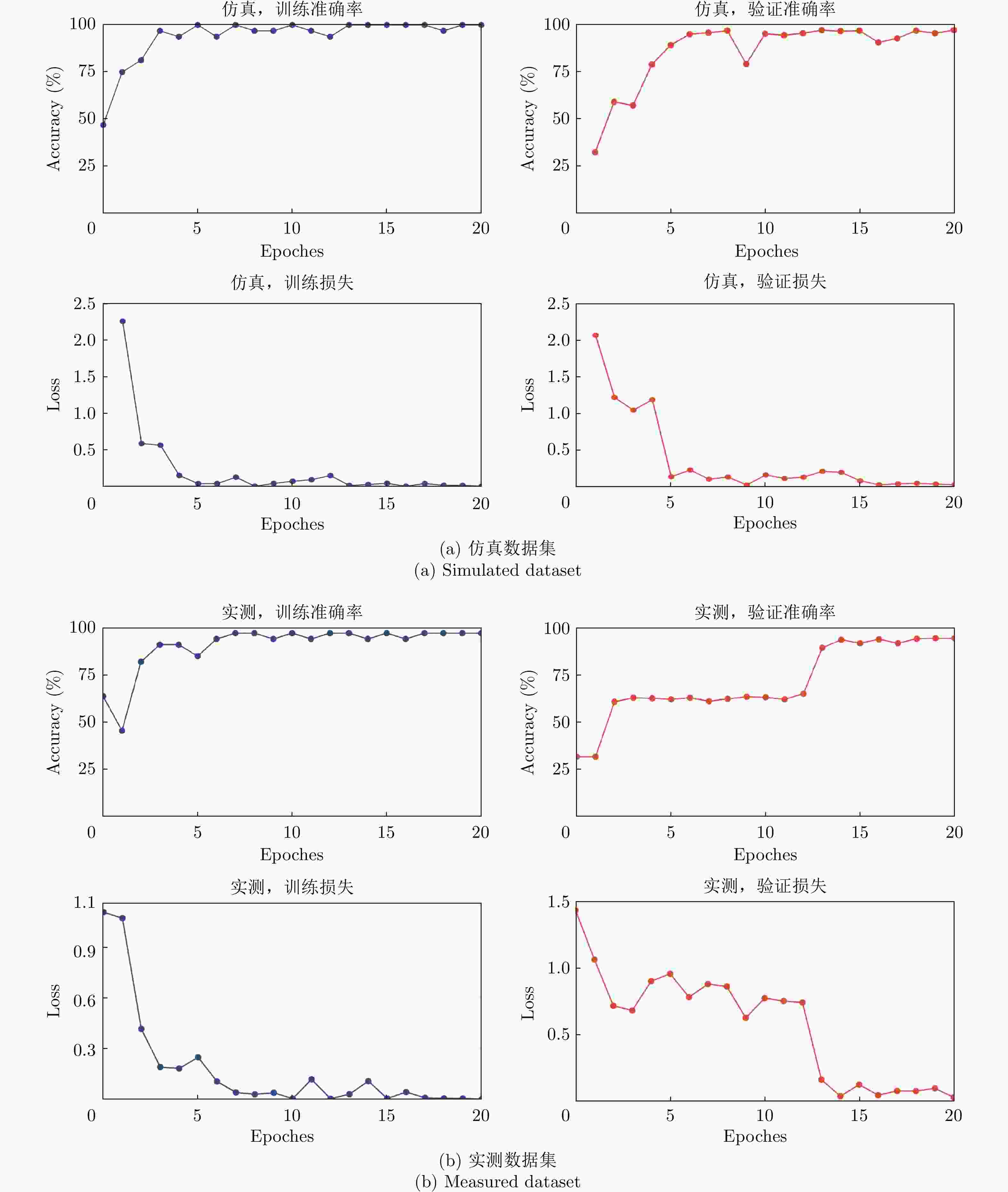

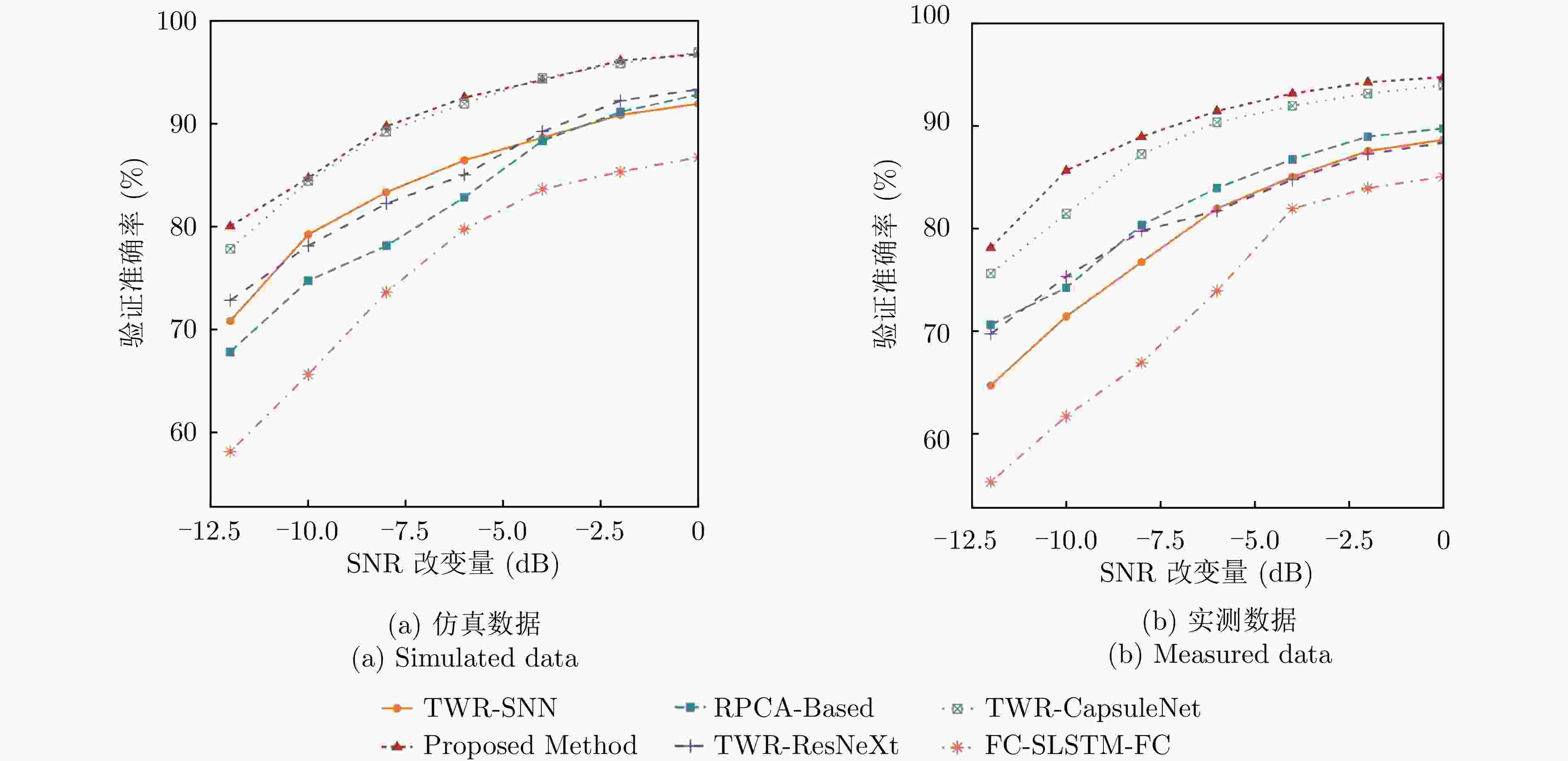

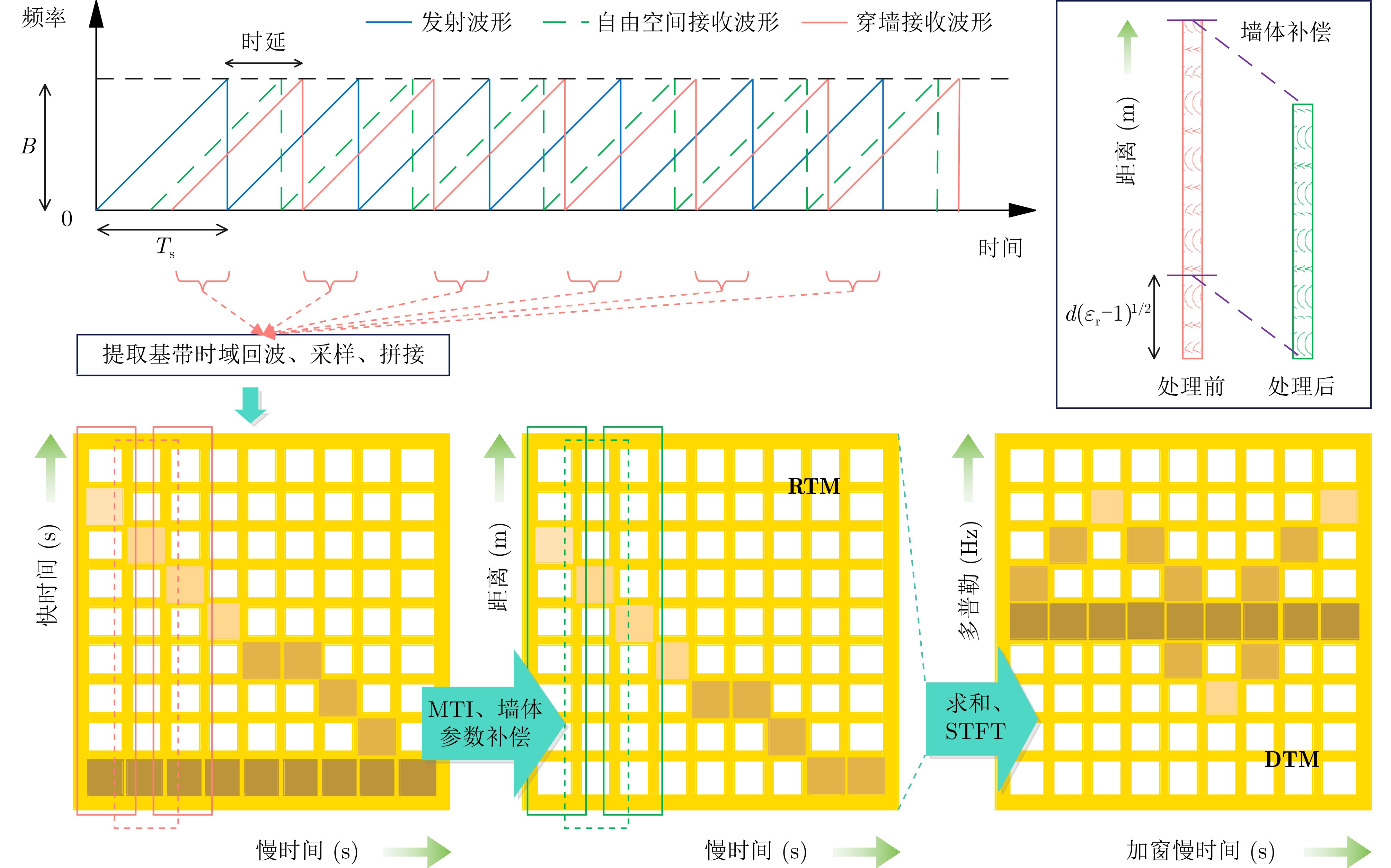

Abstract: Through-the-wall radar can penetrate building walls and detect human targets indoors. Deep learning is commonly used to extract the micro-Doppler signatures of different limb nodes, which makes it possible to identify human activities behind obstacles. However, when the subjects who generate the test set differ from those who generate the training and validation sets, deep-learning-based recognition methods tend to reach a test accuracy noticeably lower than their validation accuracy, which indicates poor generalization. This study therefore proposes a method for recognizing anomalous human gait termination with through-the-wall radar, based on micro-Doppler corner features and the Non-Local mechanism. The method uses Harris and Moravec detectors to extract corner features from the radar images and builds a corner feature dataset from them. Multilink parallel convolutions and the Non-Local mechanism are then combined into a global contextual information extraction network that learns the global distribution characteristics of the image pixels. Stacking this network four times yields the corner semantic feature maps, and a multilayer perceptron outputs the predicted probability of each activity. Numerical simulations and experiments show that the proposed method effectively identifies sudden anomalous gait termination activities, such as sitting down, lying down, and falling, that occur while a person is walking indoors. It improves recognition accuracy and robustness while keeping the generalization accuracy error (the gap between validation and test accuracy) to no more than $ 6.4\% $.
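The abstract names the Harris and Moravec detectors without giving their formulas. The sketch below shows the textbook form of both corner responses on a small synthetic image. It is an illustration only, not the authors' implementation: the function names, the 3×3 window, the constant `k = 0.04`, and the test image are all assumptions made here.

```python
def moravec_response(img, window=1):
    """Moravec response: smallest sum of squared differences (SSD) between
    a window and the same window shifted by one pixel in 8 directions."""
    h, w = len(img), len(img[0])
    shifts = [(-1, -1), (-1, 0), (-1, 1), (0, -1), (0, 1), (1, -1), (1, 0), (1, 1)]
    margin = window + 1
    resp = [[0.0] * w for _ in range(h)]
    for y in range(margin, h - margin):
        for x in range(margin, w - margin):
            best = float("inf")
            for dy, dx in shifts:
                ssd = 0.0
                for v in range(-window, window + 1):
                    for u in range(-window, window + 1):
                        d = img[y + v + dy][x + u + dx] - img[y + v][x + u]
                        ssd += d * d
                best = min(best, ssd)
            resp[y][x] = best
    return resp


def harris_response(img, window=1, k=0.04):
    """Harris response R = det(M) - k * trace(M)^2, where M sums the
    gradient products over a window (central-difference gradients)."""
    h, w = len(img), len(img[0])
    ix = [[0.0] * w for _ in range(h)]
    iy = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            ix[y][x] = (img[y][x + 1] - img[y][x - 1]) / 2.0
            iy[y][x] = (img[y + 1][x] - img[y - 1][x]) / 2.0
    margin = window + 1
    resp = [[0.0] * w for _ in range(h)]
    for y in range(margin, h - margin):
        for x in range(margin, w - margin):
            sxx = syy = sxy = 0.0
            for v in range(-window, window + 1):
                for u in range(-window, window + 1):
                    gx, gy = ix[y + v][x + u], iy[y + v][x + u]
                    sxx += gx * gx
                    syy += gy * gy
                    sxy += gx * gy
            resp[y][x] = sxx * syy - sxy * sxy - k * (sxx + syy) ** 2
    return resp


def peak_positions(resp):
    """Return the set of (row, col) positions that hold the maximum response."""
    best = max(max(row) for row in resp)
    return {(y, x) for y, row in enumerate(resp) for x, v in enumerate(row) if v == best}


# Demo (assumed test image): a bright 8x8 square on a dark 16x16 background.
image = [[1.0 if 4 <= y <= 11 and 4 <= x <= 11 else 0.0 for x in range(16)]
         for y in range(16)]
moravec = moravec_response(image)
harris = harris_response(image)
moravec_peaks = peak_positions(moravec)
harris_peaks = peak_positions(harris)
```

On this image both detectors peak at the four corners of the square. Along a straight edge the Moravec response is zero and the Harris response is negative, which is why corner points are a sparser descriptor than edge maps.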

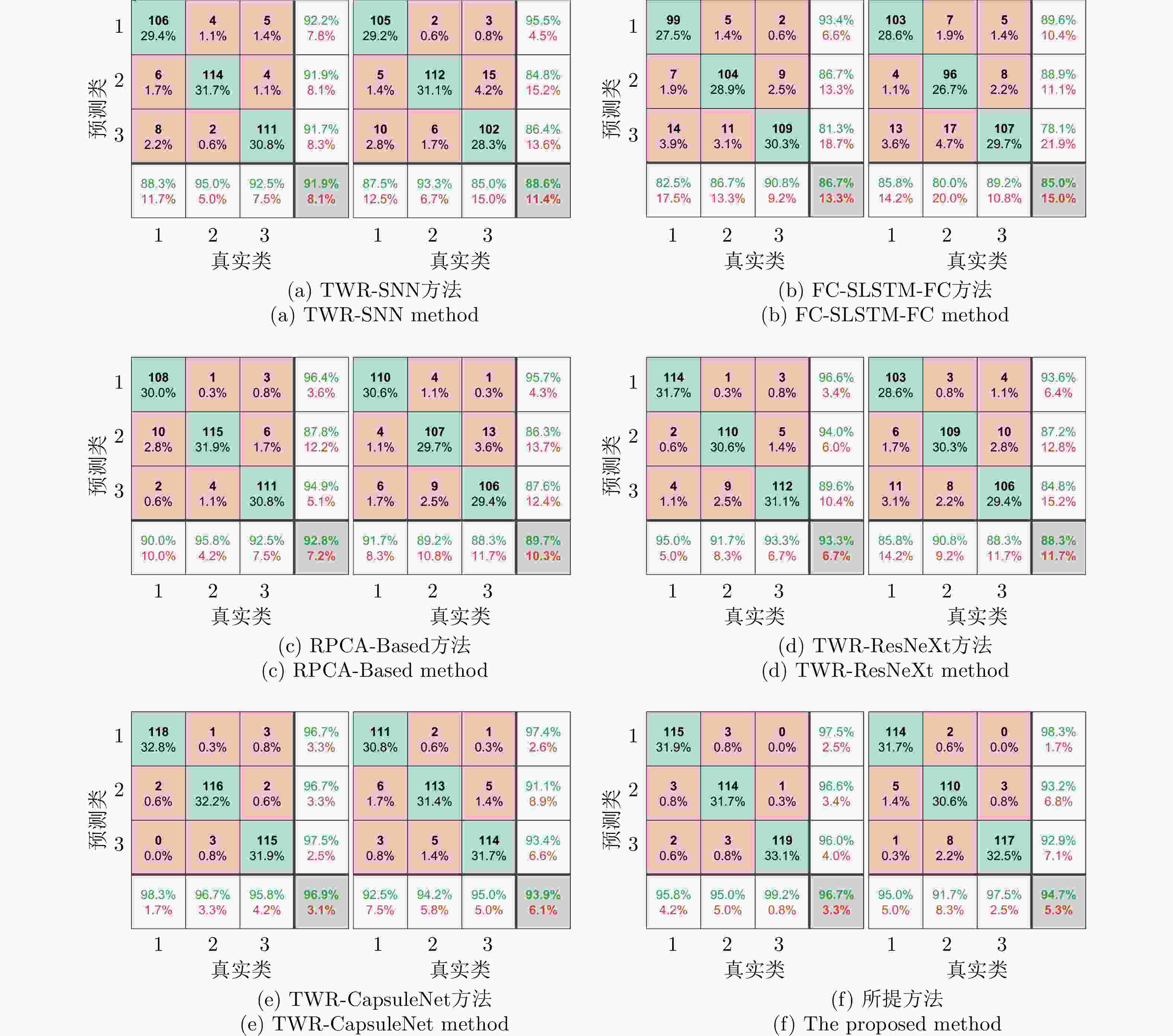

Figure 9. Comparison of confusion matrices for model validation. In each group, the left confusion matrix corresponds to the simulated dataset and the right one to the measured dataset; the numerical labels 1–3 correspond to samples of classes S1–S3.

Table 1. Operating parameters of the radar data acquisition system

| Parameter | Value |
| --- | --- |
| Transmit-receive antenna spacing (m) | 0.15 |
| Bandwidth (GHz) | 0.5–2.5 |
| Fast-time sampling points | 256 |
| Slow-time sampling traces | 1024 |
| Sampling time window (s) | 4 |
| Sampling time window overlap ratio | 0.9 |
| Wall thickness (m) | 0.12 |
| Human motion range, distance from radar (m) | 1–4 |
| Number of recognized states | 3 |
| Antenna height above ground (m) | 1.5 |
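A few working quantities follow directly from the Table 1 values. The short calculation below is a sketch under stated assumptions: it assumes the 1024 slow-time traces span one 4 s sampling window, that the listed 0.5–2.5 GHz range is the occupied band, and that the free-space relation c/(2B) is an adequate first estimate of range resolution. The variable names are illustrative.

```python
# Values copied from Table 1.
window_s = 4.0                      # sampling time window (s)
overlap = 0.9                       # overlap ratio between consecutive windows
slow_time_traces = 1024             # slow-time traces
fast_time_samples = 256             # fast-time sampling points
f_low_hz, f_high_hz = 0.5e9, 2.5e9  # band edges (Hz)

# Shift between consecutive overlapping windows.
hop_s = window_s * (1.0 - overlap)

# Assumption: all 1024 traces fall inside one 4 s window.
traces_per_second = slow_time_traces / window_s

# Unambiguous Doppler extent is +/- half the slow-time sampling rate.
max_doppler_hz = traces_per_second / 2.0

# Free-space range resolution c / (2B) for the occupied bandwidth B.
speed_of_light = 299_792_458.0
bandwidth_hz = f_high_hz - f_low_hz
range_resolution_m = speed_of_light / (2.0 * bandwidth_hz)

# Size of one radar data matrix (fast time x slow time).
frame_shape = (fast_time_samples, slow_time_traces)

print(f"window hop: {hop_s:.2f} s")
print(f"slow-time rate: {traces_per_second:.0f} traces/s")
print(f"Doppler extent: +/-{max_doppler_hz:.0f} Hz")
print(f"range resolution: {range_resolution_m * 100:.1f} cm")
```

Under these assumptions a new window starts every 0.4 s, so each additional second of recording contributes about 2.5 windows.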

Table 2. Parameter settings for the network training and validation process

| Parameter | Value |
| --- | --- |
| Batch size | 32 |
| Batches per epoch | 45 |
| Total training epochs | 20 |
| Initial learning rate | 0.00147 |
| Optimizer | Adam |
| Training/validation frequency (batches) | 10 |
| Input image | $ {\mathbf{R}}^{2}\mathbf{T}\mathbf{M} $ & $ {\mathbf{D}}^{2}\mathbf{T}\mathbf{M} $: $ 256\times 256\times 3 $ each (after pseudo-color mapping); channel-wise concatenation result: $ 256\times 256\times 6 $ |
| Batch normalization | Population |
| Regularization | L2 regularization |
| Layer-by-layer training | No |
| Final model selection strategy | Best epoch |
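The Table 2 settings can be collected into a single configuration object, which also makes the implied totals easy to check. This is a hedged sketch: the class and field names are hypothetical, and reading the "training/validation frequency" entry as "validate every 10 batches" is an assumption, since the table does not spell it out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Hypothetical container for the Table 2 settings."""
    batch_size: int = 32
    batches_per_epoch: int = 45
    epochs: int = 20
    initial_lr: float = 0.00147
    optimizer: str = "Adam"
    validate_every_n_batches: int = 10  # assumed reading of the table entry
    regularization: str = "L2"
    layerwise_training: bool = False
    model_selection: str = "best_epoch"


def concat_channels(shape_a, shape_b):
    """Shape of two H x W x C images concatenated along the channel axis."""
    if shape_a[:2] != shape_b[:2]:
        raise ValueError("height and width must match for channel concatenation")
    return (shape_a[0], shape_a[1], shape_a[2] + shape_b[2])


cfg = TrainConfig()
total_iterations = cfg.batches_per_epoch * cfg.epochs
max_samples_per_epoch = cfg.batch_size * cfg.batches_per_epoch
validation_runs = total_iterations // cfg.validate_every_n_batches

# The two pseudo-color images are stacked into one six-channel network input.
input_shape = concat_channels((256, 256, 3), (256, 256, 3))

print(total_iterations, max_samples_per_epoch, validation_runs, input_shape)
```

With these values the run covers 900 iterations in total and at most 1440 samples per epoch (fewer if the last batch of an epoch is not full).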

Table 3. Accuracy of the proposed and existing methods on the validation and test sets (%)

| Method | Simulated / validation | Measured / validation | Simulated / test | Measured / test | Simulated difference | Measured difference |
| --- | --- | --- | --- | --- | --- | --- |
| TWR-SNN | 91.9 | 88.6 | 78.3 | 75.0 | 13.6 | 13.6 |
| FC-SLSTM-FC | 86.7 | 85.0 | 71.7 | 62.8 | 15.0 | 22.2 |
| RPCA-Based | 92.8 | 89.7 | 85.0 | 80.0 | 7.8 | 9.7 |
| TWR-ResNeXt | 93.3 | 88.3 | 83.3 | 76.7 | 10.0 | 11.6 |
| TWR-CapsuleNet | 96.9 | 93.9 | 87.8 | 81.7 | 9.1 | 12.2 |
| Proposed method | 96.7 | 94.7 | 93.3 | 88.3 | 3.4 | 6.4 |
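The "difference" columns in Table 3 are the validation accuracy minus the test accuracy on the same dataset, which is the generalization error quoted in the abstract. The snippet below recomputes them from the four accuracy columns; the dictionary layout and names are illustrative, and the numbers are copied from the table.

```python
# (sim_val, meas_val, sim_test, meas_test, sim_diff, meas_diff) from Table 3, in %.
table3 = {
    "TWR-SNN":         (91.9, 88.6, 78.3, 75.0, 13.6, 13.6),
    "FC-SLSTM-FC":     (86.7, 85.0, 71.7, 62.8, 15.0, 22.2),
    "RPCA-Based":      (92.8, 89.7, 85.0, 80.0, 7.8, 9.7),
    "TWR-ResNeXt":     (93.3, 88.3, 83.3, 76.7, 10.0, 11.6),
    "TWR-CapsuleNet":  (96.9, 93.9, 87.8, 81.7, 9.1, 12.2),
    "Proposed method": (96.7, 94.7, 93.3, 88.3, 3.4, 6.4),
}


def generalization_gaps(row):
    """Validation minus test accuracy for the simulated and measured datasets."""
    sim_val, meas_val, sim_test, meas_test = row[:4]
    return round(sim_val - sim_test, 1), round(meas_val - meas_test, 1)


gaps = {name: generalization_gaps(row) for name, row in table3.items()}

# Every recomputed gap should agree with the value printed in the table.
consistent = all(gaps[name] == row[4:] for name, row in table3.items())

# Method with the smallest gap on the measured dataset.
best_measured = min(gaps, key=lambda name: gaps[name][1])

for name, (sim_gap, meas_gap) in gaps.items():
    print(f"{name:16s} simulated {sim_gap:5.1f}  measured {meas_gap:5.1f}")
```

The 6.4% bound stated in the abstract is the measured-dataset gap of the proposed method (94.7 − 88.3).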

Table 4. Accuracy of the proposed and existing methods when trained on the simulated dataset and validated and tested on the measured dataset (%)

| Method | Validation | Test | Difference |
| --- | --- | --- | --- |
| TWR-SNN | 78.1 | 70.6 | 7.5 |
| FC-SLSTM-FC | 75.3 | 60.0 | 15.3 |
| RPCA-Based | 74.2 | 67.2 | 7.0 |
| TWR-ResNeXt | 81.7 | 71.1 | 10.6 |
| TWR-CapsuleNet | 83.1 | 73.9 | 9.2 |
| Proposed method | 85.8 | 80.0 | 5.8 |

Table 5. Ablation validation of the micro-Doppler corner detection methods (%)

| Method used | Simulated / validation | Measured / validation | Simulated / test | Measured / test | Simulated difference | Measured difference |
| --- | --- | --- | --- | --- | --- | --- |
| $ {\mathbf{R}}^{2}\mathbf{T}\mathbf{M} $ | 88.6 | 86.4 | 79.4 | 80.6 | 9.2 | 5.8 |
| $ {\mathbf{D}}^{2}\mathbf{T}\mathbf{M} $ | 91.9 | 93.1 | 83.3 | 75.0 | 8.6 | 18.1 |
| $ {\mathbf{R}}^{2}\mathbf{T}\mathbf{M} $ & $ {\mathbf{D}}^{2}\mathbf{T}\mathbf{M} $ | 95.8 | 94.2 | 89.4 | 86.1 | 6.4 | 8.1 |
| $ {\mathbf{R}}^{2}\mathbf{T}\mathbf{M} $: Harris & $ {\mathbf{D}}^{2}\mathbf{T}\mathbf{M} $: Harris | 94.2 | 90.8 | 86.1 | 82.2 | 8.1 | 8.6 |
| $ {\mathbf{R}}^{2}\mathbf{T}\mathbf{M} $: Moravec & $ {\mathbf{D}}^{2}\mathbf{T}\mathbf{M} $: Moravec | 91.4 | 90.3 | 87.2 | 83.3 | 4.2 | 7.0 |
| $ {\mathbf{R}}^{2}\mathbf{T}\mathbf{M} $: Moravec & $ {\mathbf{D}}^{2}\mathbf{T}\mathbf{M} $: Harris | 93.1 | 91.9 | 86.7 | 82.8 | 6.4 | 9.1 |
| $ {\mathbf{R}}^{2}\mathbf{T}\mathbf{M} $: Harris & $ {\mathbf{D}}^{2}\mathbf{T}\mathbf{M} $: Moravec | 96.7 | 94.7 | 93.3 | 88.3 | 3.4 | 6.4 |

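Table 6. Performance comparison of micro-Doppler corner features with other common computer vision features (%)

| Feature used | Simulated / validation | Measured / validation | Simulated / test | Measured / test | Simulated difference | Measured difference |
| --- | --- | --- | --- | --- | --- | --- |
| Canny edge features | 97.8 | 90.8 | 91.7 | 83.3 | 6.1 | 7.5 |
| Gray-level co-occurrence matrix (GLCM) features | 79.4 | 70.8 | 65.6 | 51.1 | 13.8 | 19.7 |
| Local binary pattern (LBP) features | 73.6 | 68.9 | 61.7 | 53.9 | 11.9 | 15.0 |
| Laws texture features | 96.4 | 92.5 | 87.2 | 84.4 | 9.2 | 8.1 |
| Micro-Doppler corner features | 96.7 | 94.7 | 93.3 | 88.3 | 3.4 | 6.4 |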

Table 7. Ablation validation of the network backbone (%)

| Backbone | Simulated / validation | Measured / validation | Simulated / test | Measured / test | Simulated difference | Measured difference |
| --- | --- | --- | --- | --- | --- | --- |
| AlexNet | 90.8 | 87.5 | 85.6 | 76.7 | 5.2 | 10.8 |
| VGG-16 | 92.8 | 92.2 | 84.4 | 83.9 | 8.4 | 8.3 |
| VGG-19 | 92.2 | 90.6 | 82.8 | 83.3 | 9.4 | 7.3 |
| ResNet-18 | 94.2 | 91.1 | 87.2 | 83.3 | 7.0 | 7.8 |
| ResNet-50 | 93.6 | 91.7 | 89.4 | 84.4 | 4.2 | 7.3 |
| ResNet-101 | 95.0 | 90.0 | 88.3 | 80.0 | 6.7 | 10.0 |
| GoogleNet Inception V1 | 93.9 | 91.9 | 86.1 | 83.3 | 7.8 | 8.6 |
| GoogleNet Inception V2 | 94.7 | 92.8 | 90.6 | 85.0 | 4.1 | 7.8 |
| GoogleNet Inception V3 | 95.8 | 93.1 | 91.1 | 85.6 | 4.7 | 7.5 |
| Proposed | 96.7 | 94.7 | 93.3 | 88.3 | 3.4 | 6.4 |

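Table 8. Ablation validation of the global contextual information extraction module (%)

| Module used | Simulated / validation | Measured / validation | Simulated / test | Measured / test | Simulated difference | Measured difference |
| --- | --- | --- | --- | --- | --- | --- |
| Channel attention | 92.8 | 90.8 | 87.2 | 85.0 | 5.6 | 5.8 |
| Spatial attention | 93.6 | 89.7 | 87.2 | 82.2 | 6.4 | 7.5 |
| Convolutional attention | 94.7 | 92.5 | 88.3 | 83.9 | 6.4 | 8.6 |
| Criss-Cross attention | 95.0 | 92.5 | 85.6 | 86.7 | 9.4 | 5.8 |
| Conventional Non-Local module | 96.1 | 94.4 | 90.0 | 86.1 | 6.1 | 8.3 |
| BAT | 97.5 | 95.0 | 91.7 | 87.8 | 5.8 | 7.2 |
| Proposed | 96.7 | 94.7 | 93.3 | 88.3 | 3.4 | 6.4 |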
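For readers unfamiliar with the Non-Local entry in Table 8, the sketch below shows the core non-local operation in its common softmax (embedded-Gaussian) form: every position is updated with a weighted sum over all positions, plus a residual connection. It is a simplification made for illustration, with the learned projections replaced by identity mappings, and it is not the proposed module of this paper. The function name and the flattened list-of-vectors layout are assumptions.

```python
import math


def non_local(features):
    """Apply a simplified non-local operation.

    features: list of N position vectors, each a list of C floats
              (an H x W x C feature map flattened to N = H * W positions).
    Returns a list of the same shape: x_i + sum_j softmax_j(x_i . x_j) * x_j.
    """
    channels = len(features[0])
    out = []
    for xi in features:
        # Pairwise similarity between this position and every position.
        scores = [sum(a * b for a, b in zip(xi, xj)) for xj in features]
        # Numerically stable softmax over all positions.
        peak = max(scores)
        weights = [math.exp(s - peak) for s in scores]
        total = sum(weights)
        weights = [w / total for w in weights]
        # Global context: attention-weighted sum of all position vectors.
        context = [sum(w * xj[c] for w, xj in zip(weights, features))
                   for c in range(channels)]
        # Residual connection keeps the original local feature.
        out.append([a + b for a, b in zip(xi, context)])
    return out


# Demo: a 2 x 2 map with 2 channels, flattened to 4 positions.
uniform = [[1.0, 2.0]] * 4
result_uniform = non_local(uniform)

# Changing one distant position changes the output at position 0,
# which is the global dependency that local convolutions lack.
perturbed = [[1.0, 2.0], [1.0, 2.0], [1.0, 2.0], [3.0, 0.0]]
result_perturbed = non_local(perturbed)
```

When all positions hold the same vector, the attention weights are uniform and the output is simply twice the input, which gives a quick sanity check of the residual form.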

