Research on the Technology of Airborne Multi-channel Wide Angle Staring SAR Ground Moving Target Indication


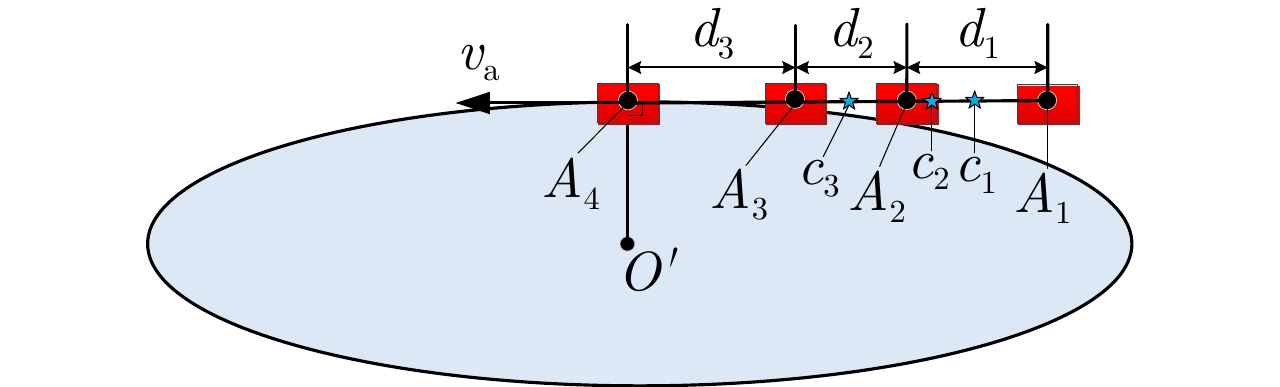

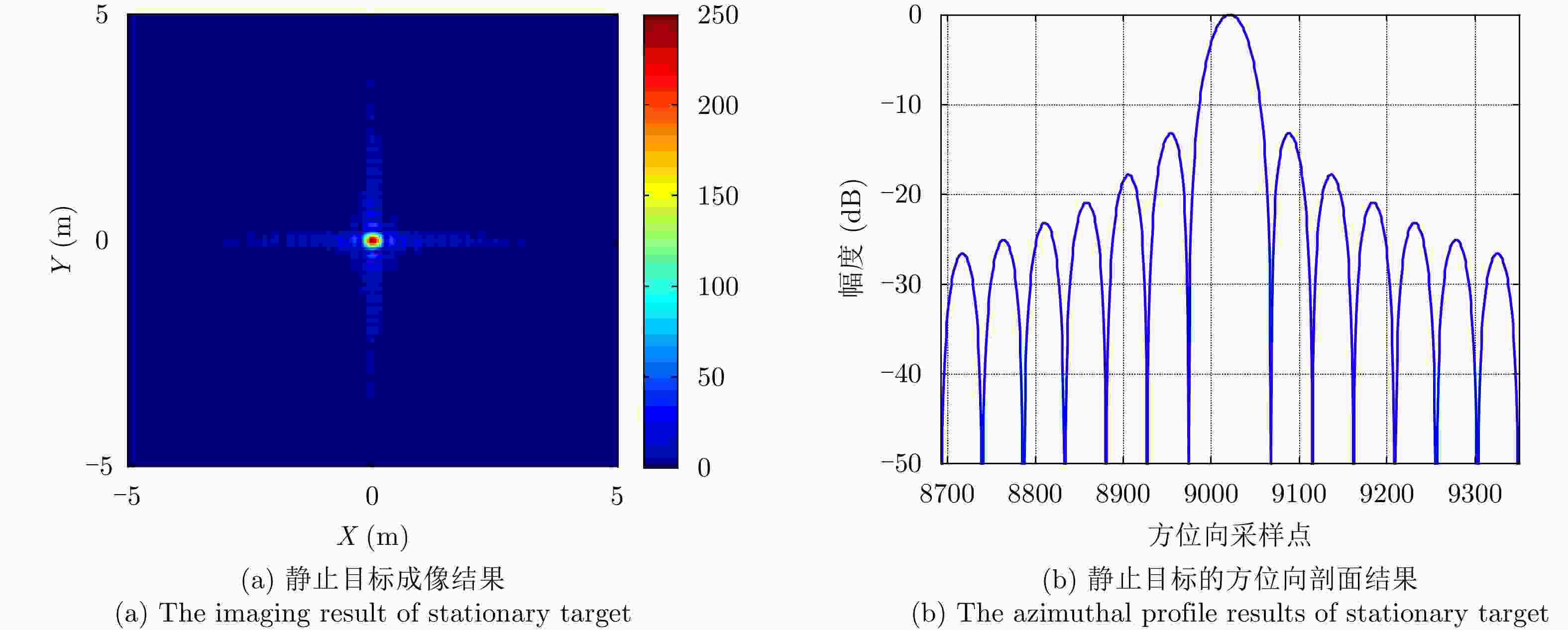

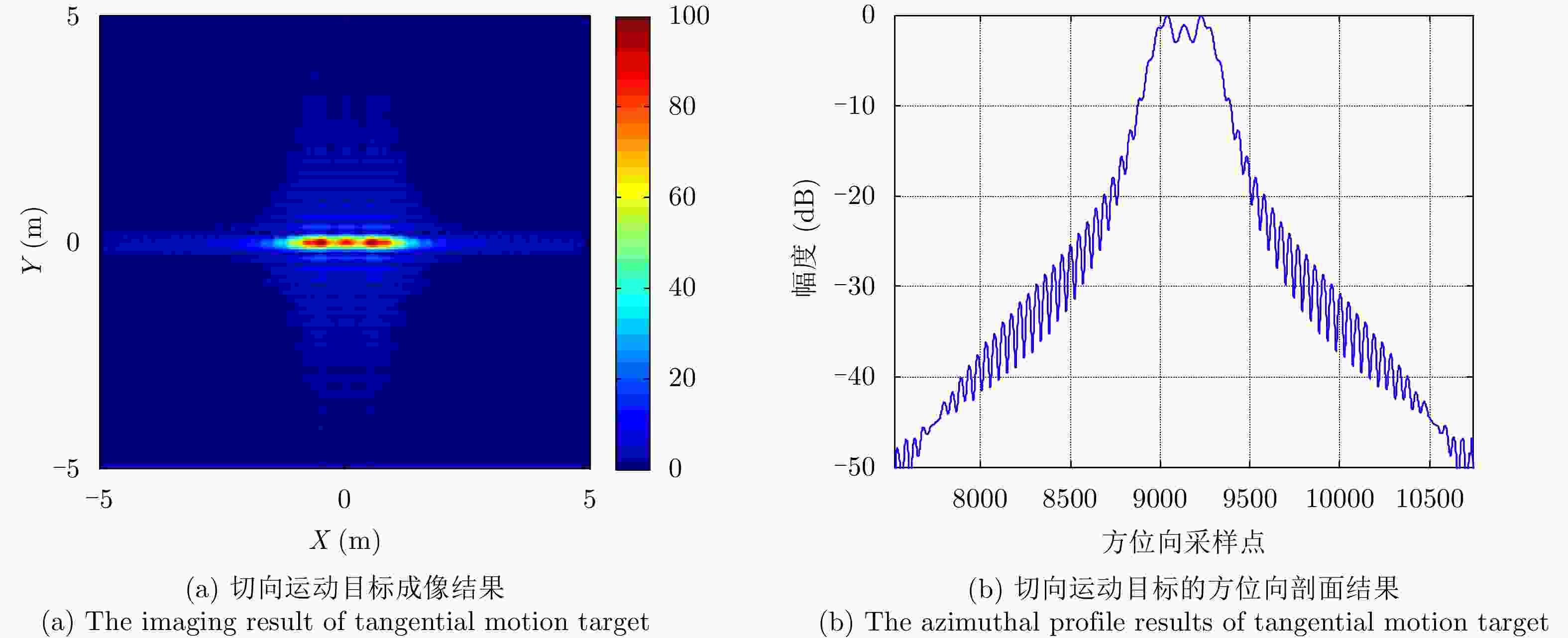

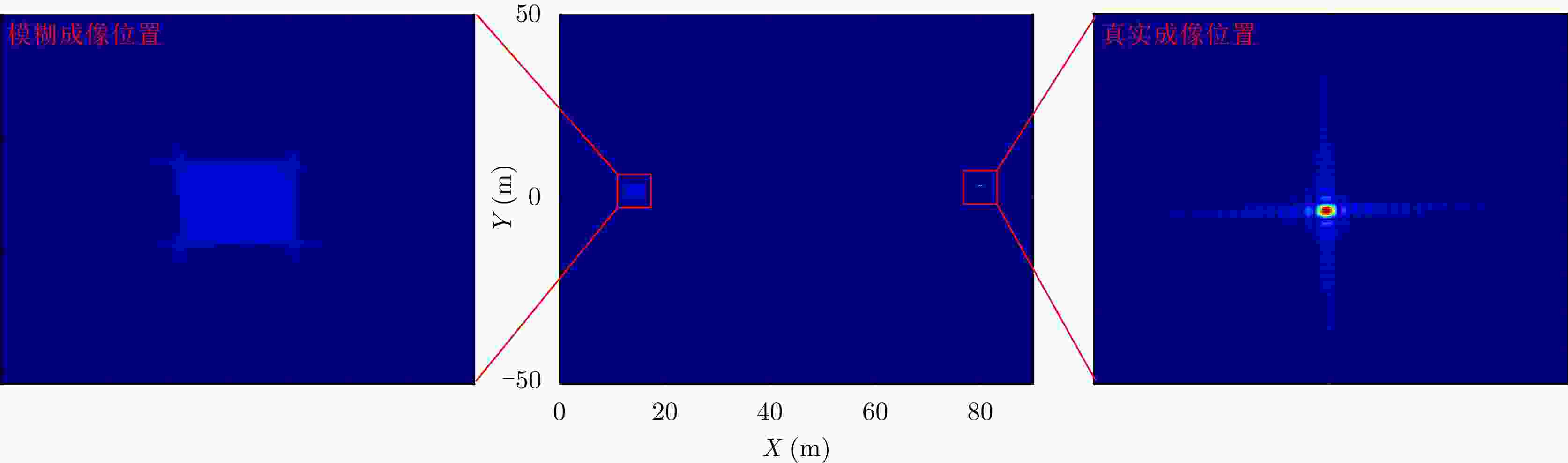

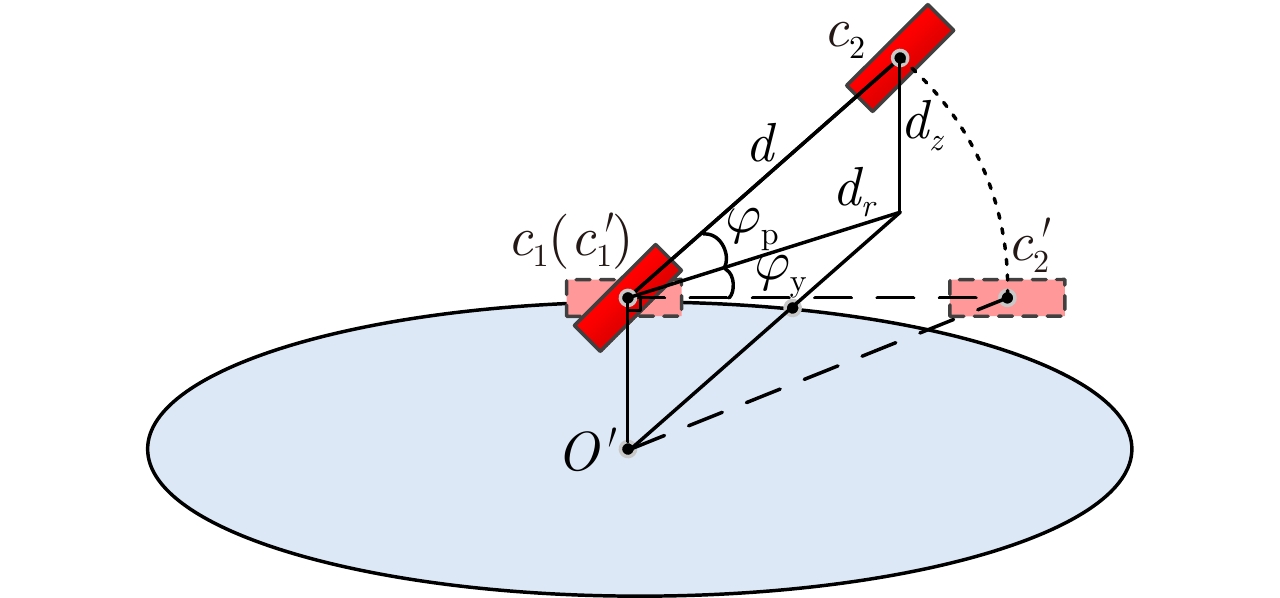

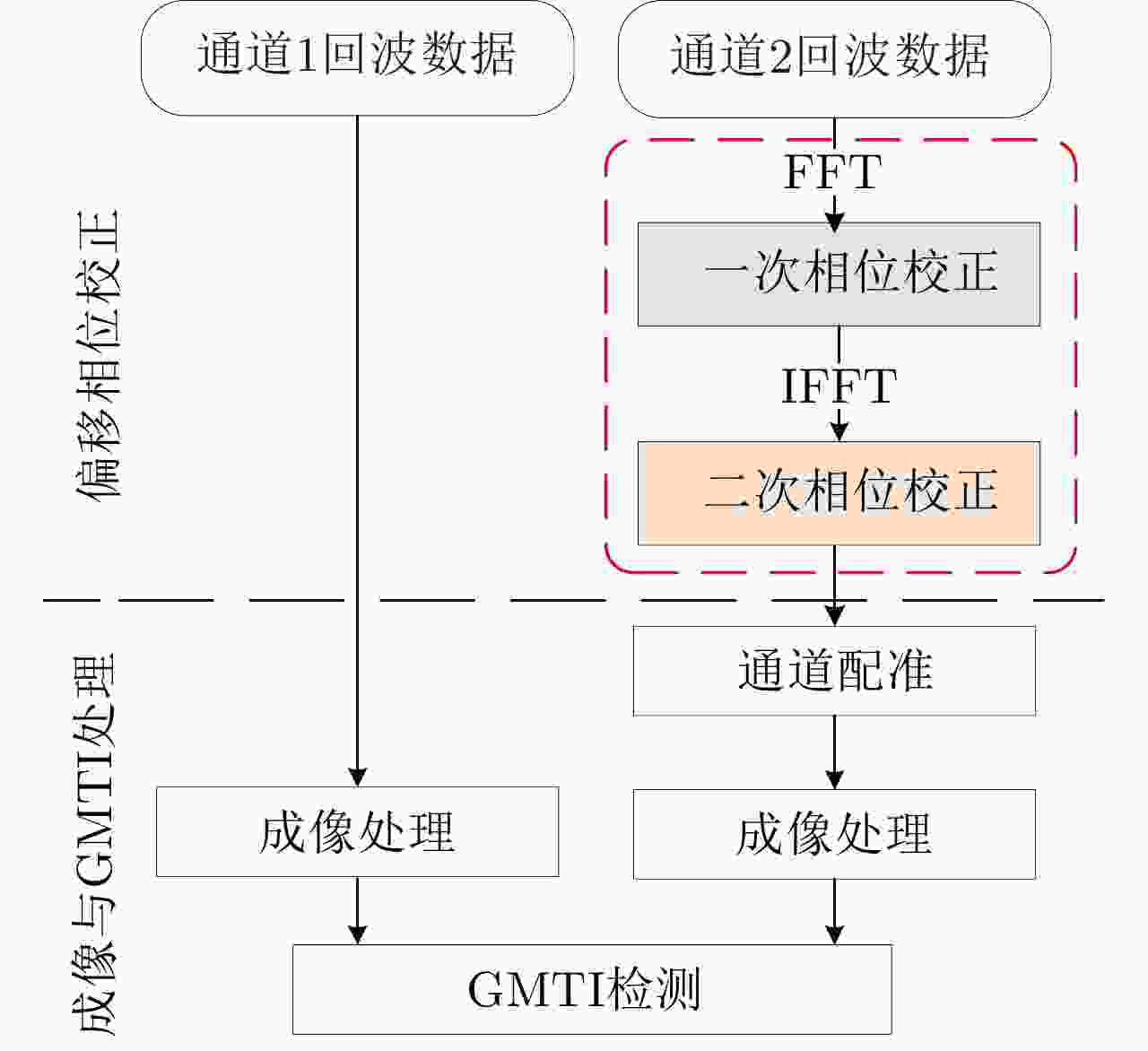

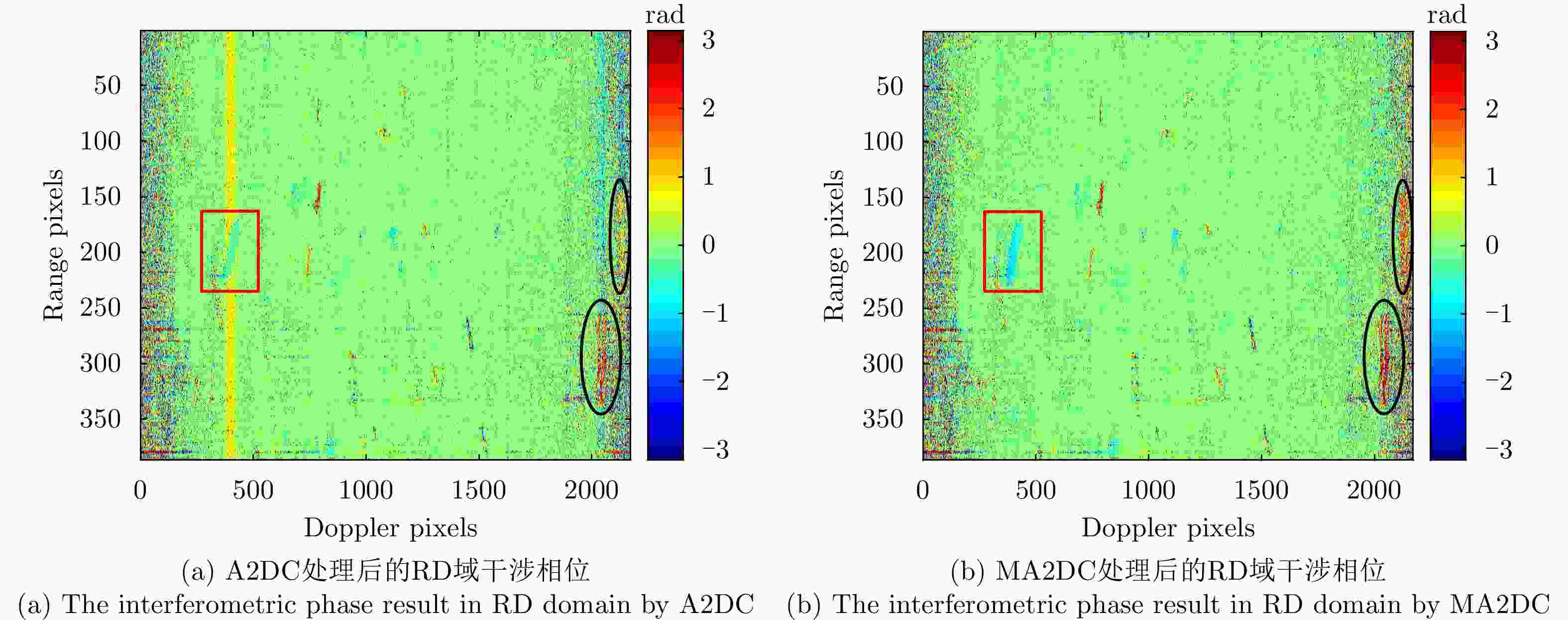

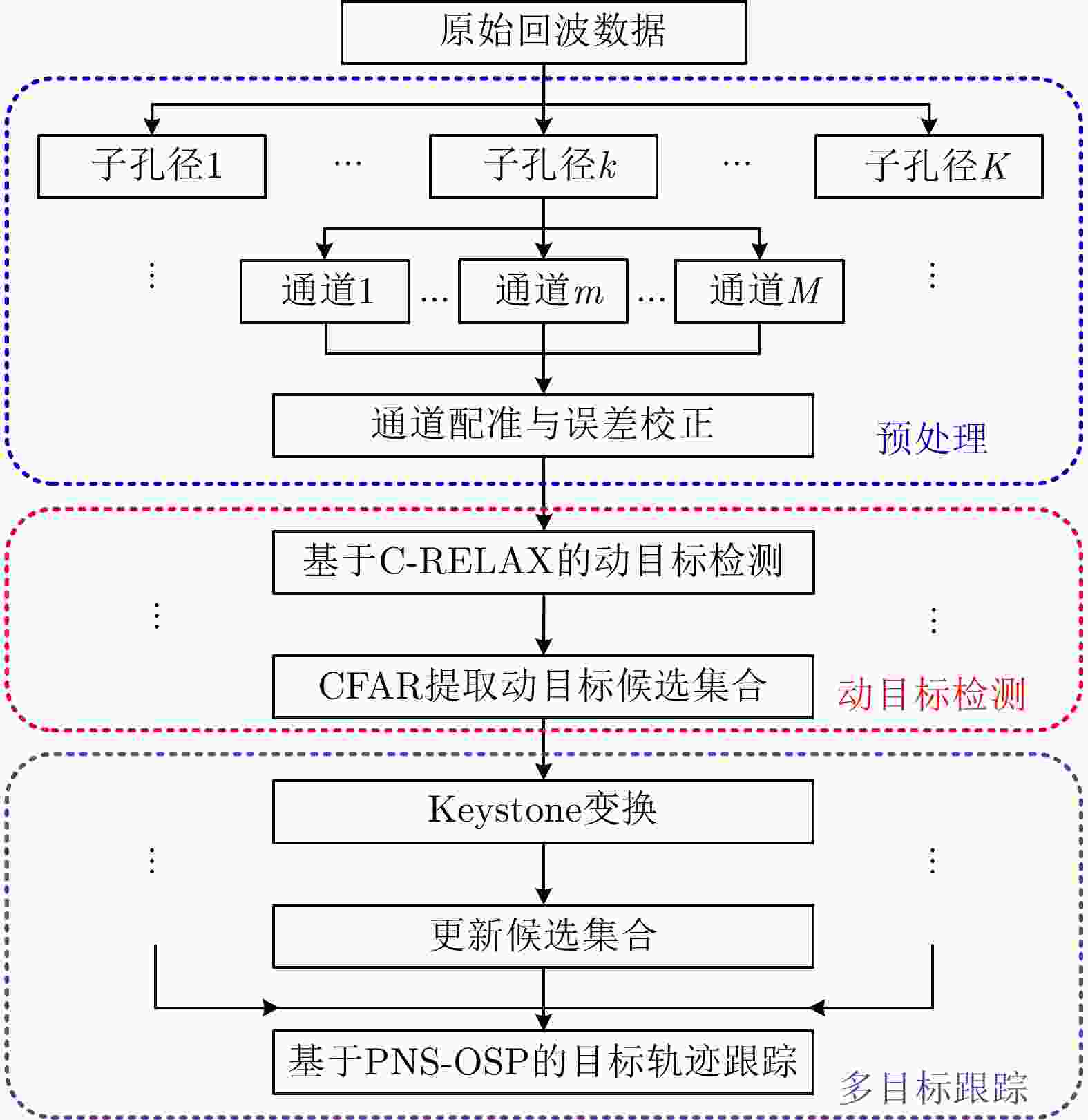

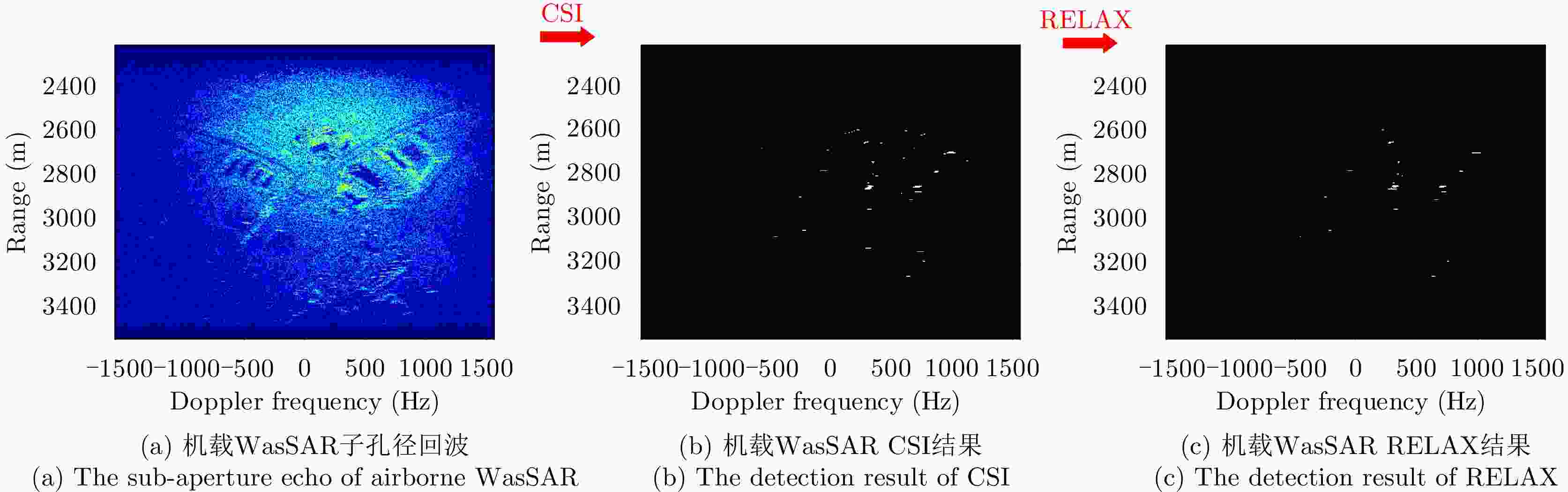

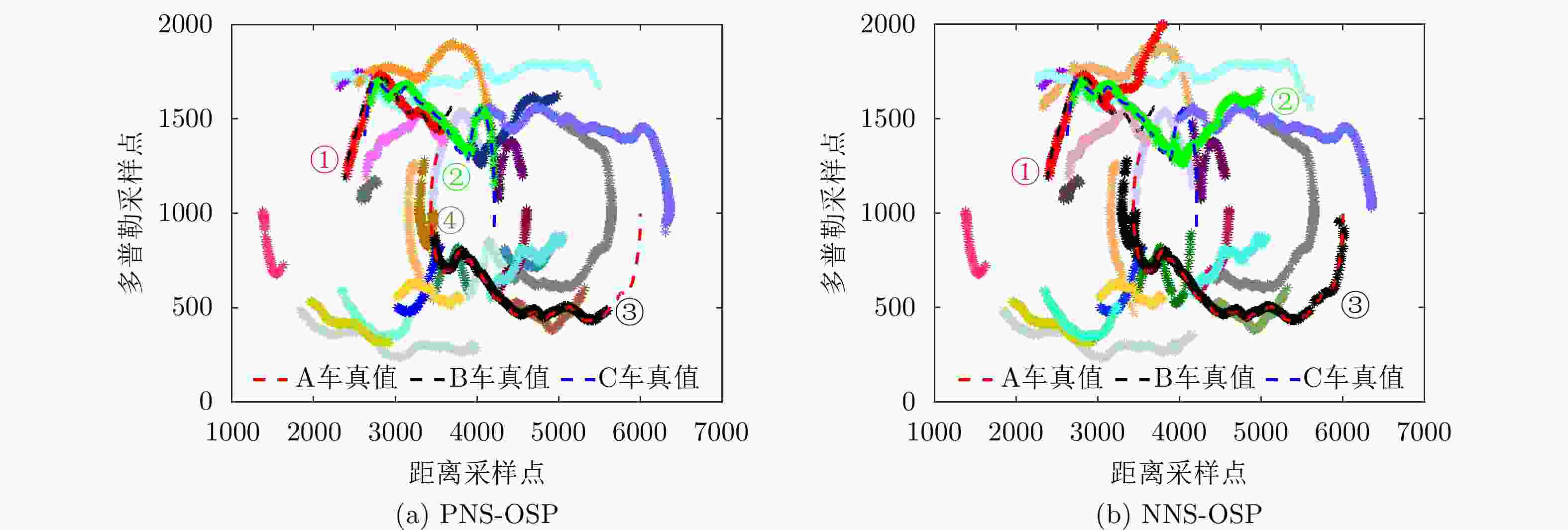

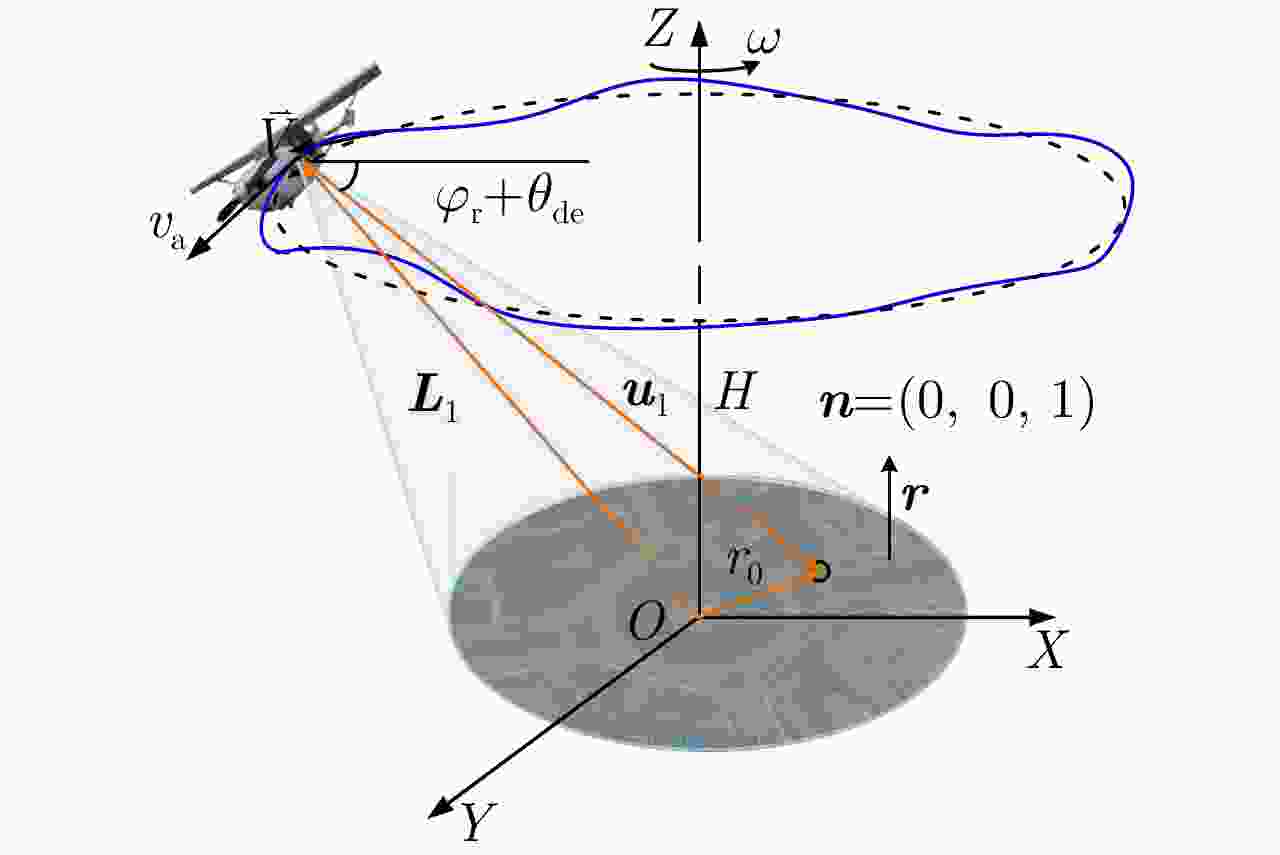

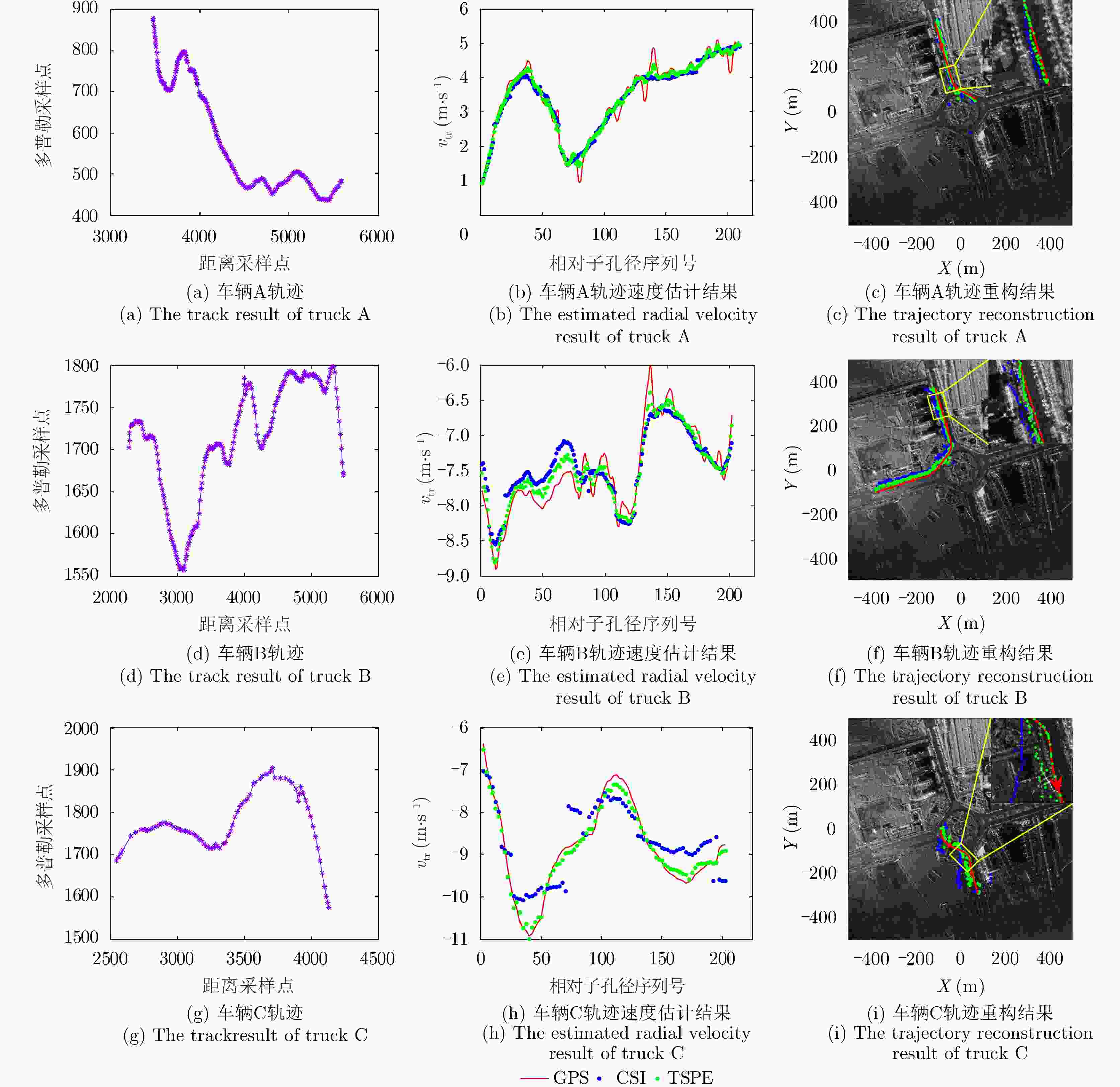

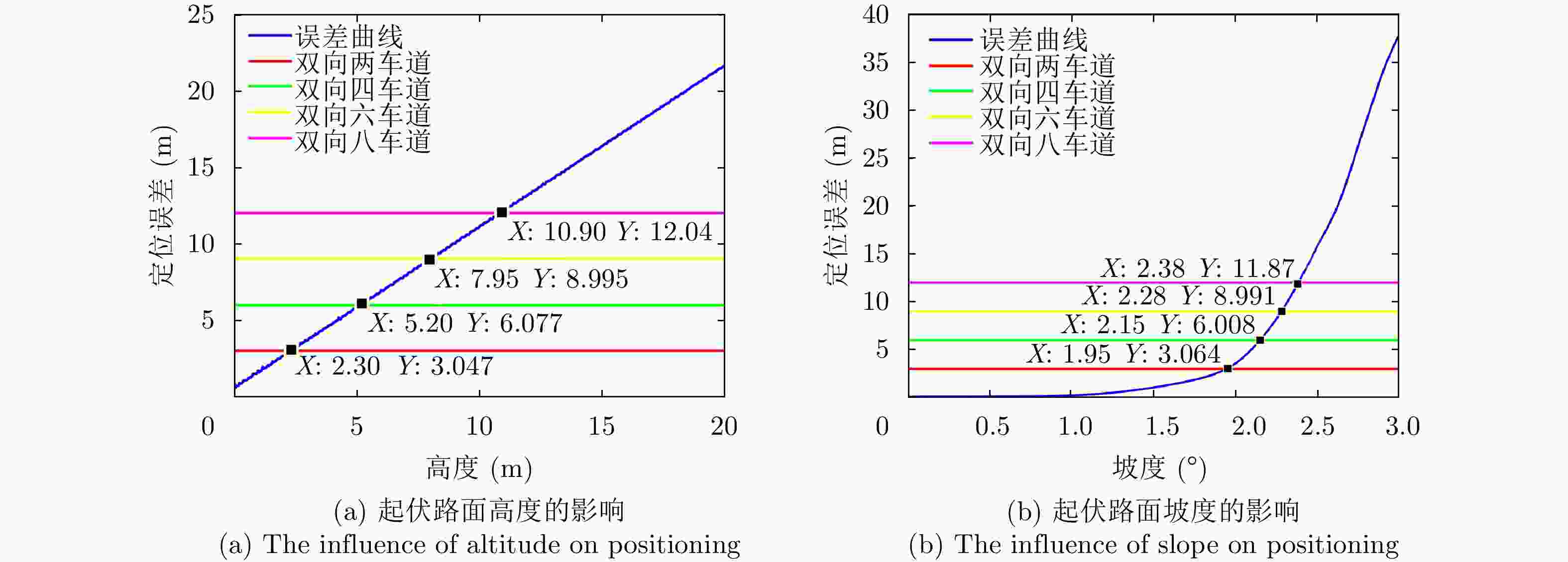

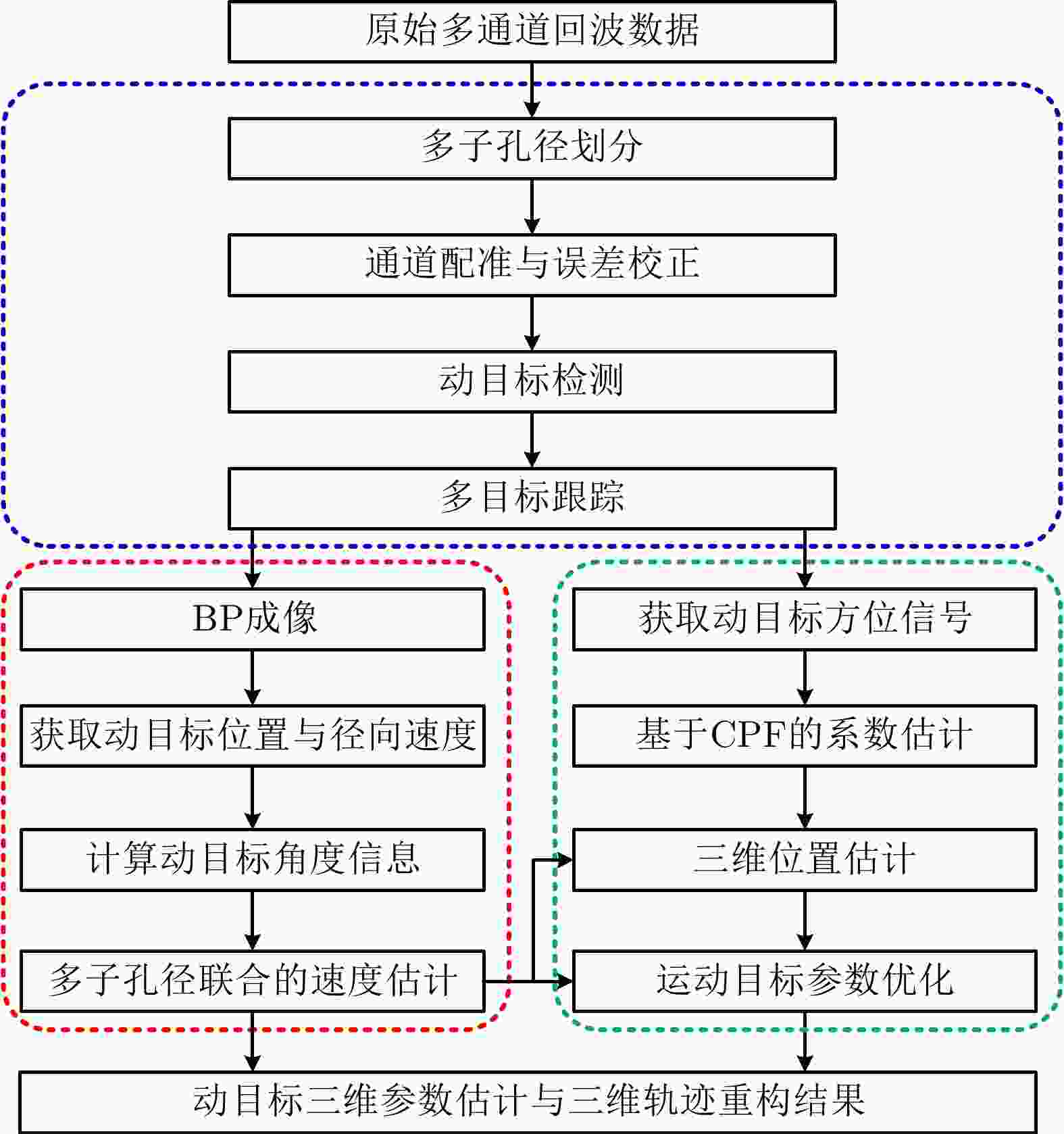

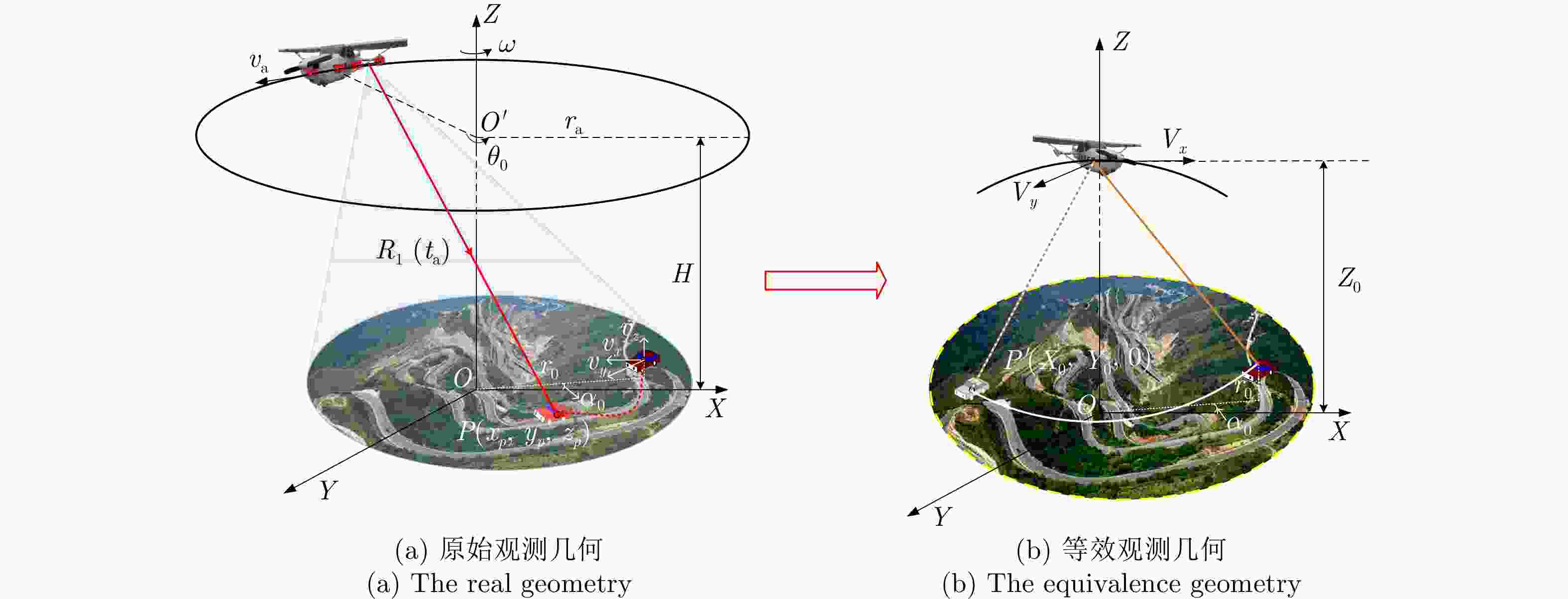

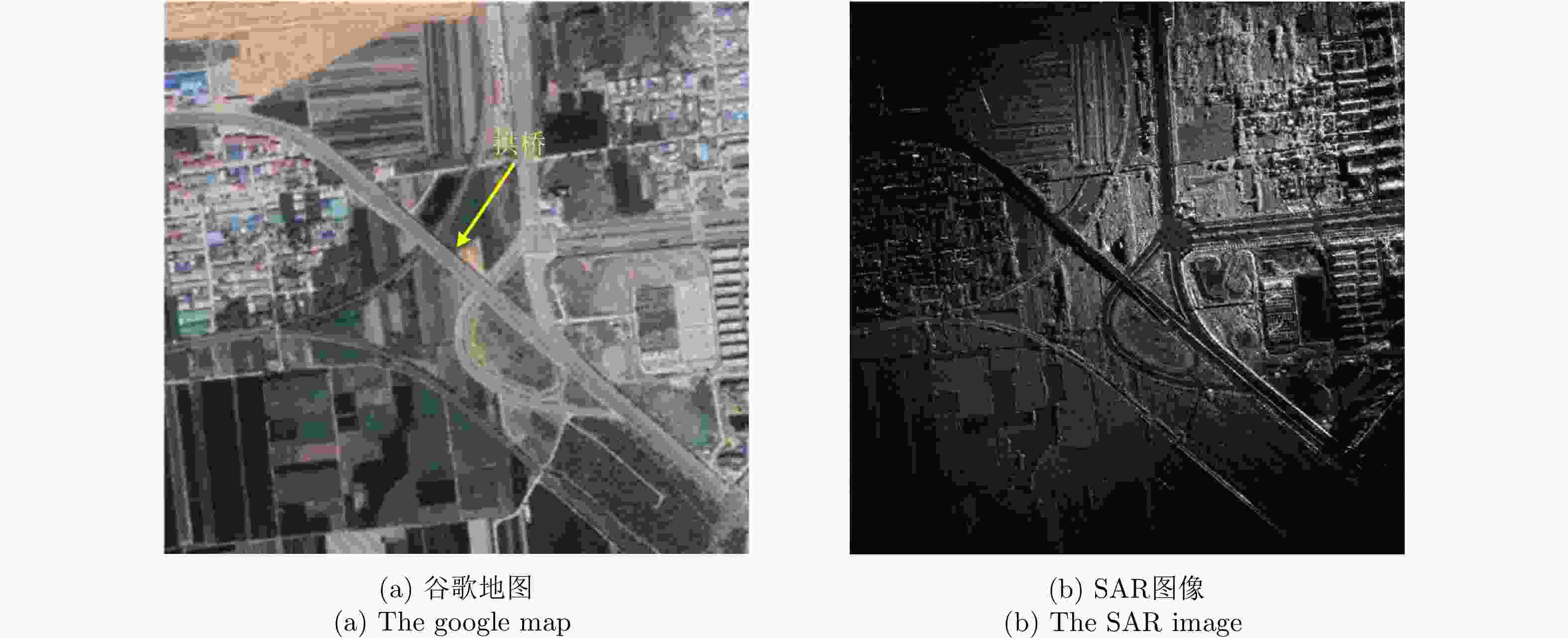

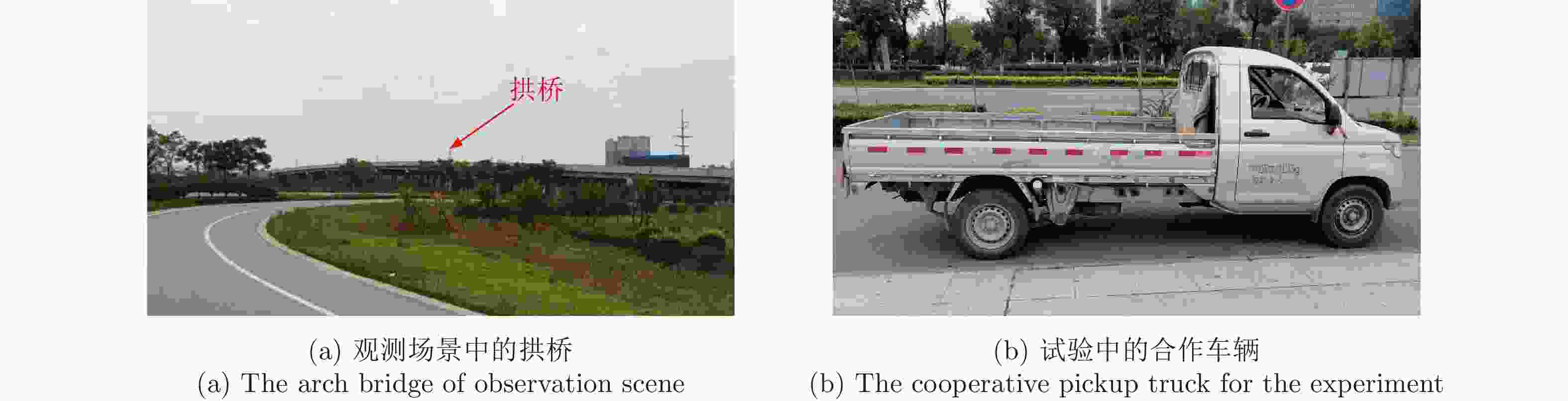

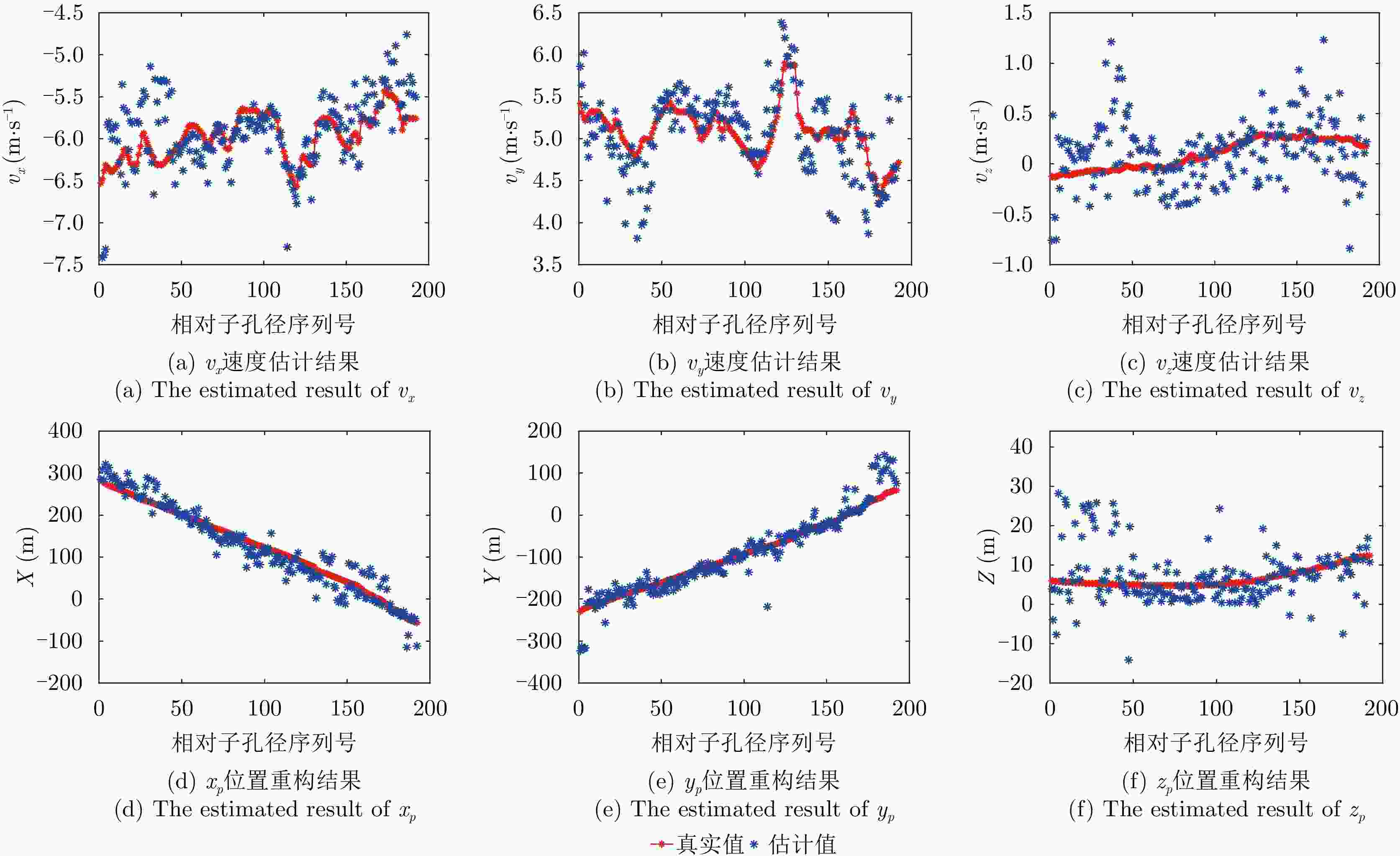

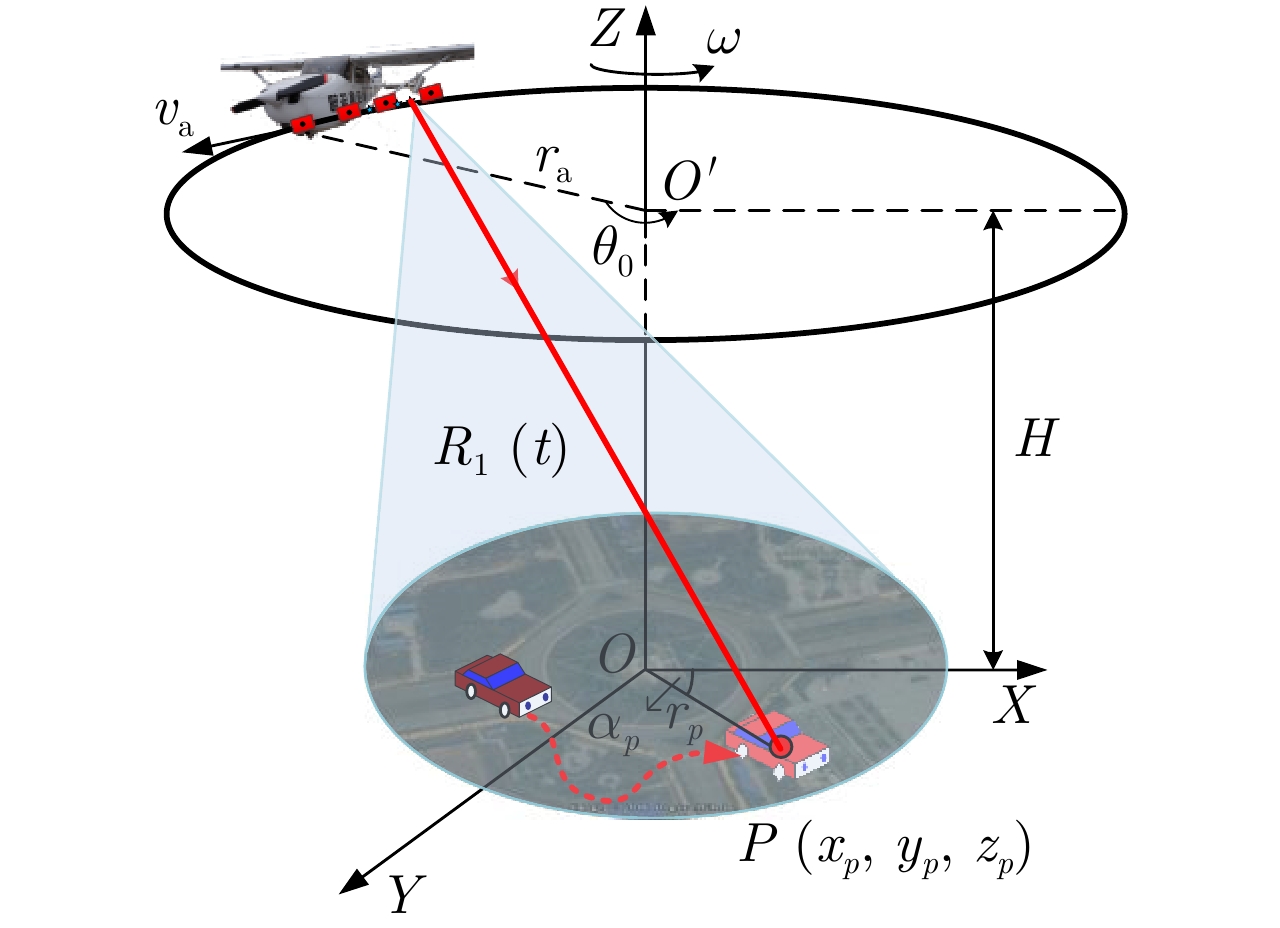

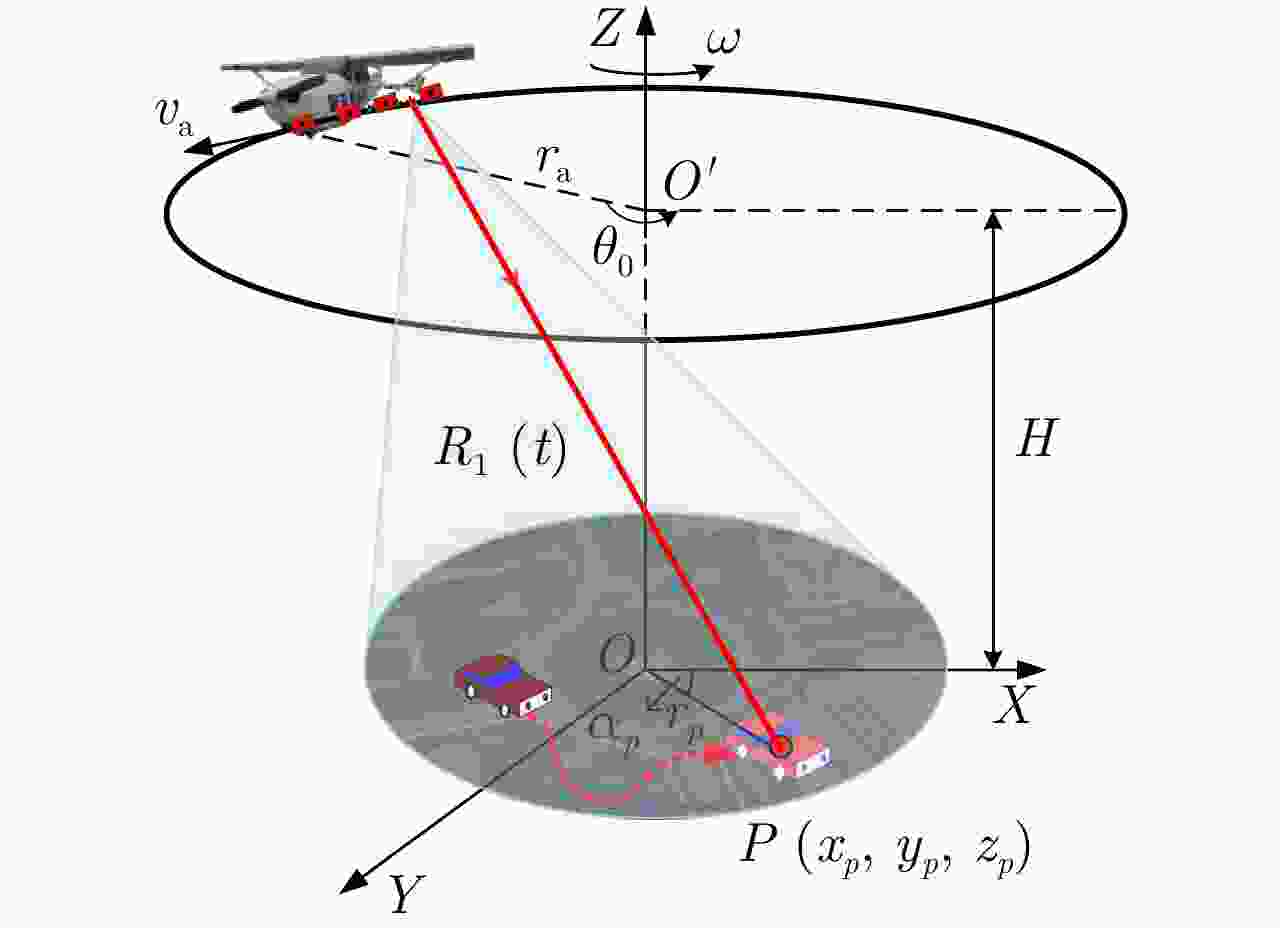

Abstract: Airborne Wide-angle staring Synthetic Aperture Radar (WasSAR) is an emerging SAR imaging technique that enables multi-angle, long-duration staring imaging of an observation region. Combining airborne WasSAR imaging with Ground Moving Target Indication (GMTI) allows ground moving targets in key areas to be continuously imaged, tracked and monitored, providing accurate dynamic sensing information. In this paper, we first construct a moving target echo model for airborne multi-channel WasSAR and analyze the characteristics of moving targets in WasSAR. Next, group phase shift calibration and a modified adaptive two-dimensional calibration (A2DC) method are used to eliminate the effects of platform attitude errors and channel imbalance. On this basis, an airborne multi-channel WasSAR ground moving target detection and tracking method is proposed, which accurately detects and tracks targets moving on complex roads. Finally, a trajectory reconstruction method for moving targets in airborne multi-channel WasSAR-GMTI is presented, which accurately reconstructs the trajectories of targets moving on undulating road surfaces. In addition, we present a flight experiment conducted with our independently developed airborne multi-channel WasSAR-GMTI system, together with the associated real data processing results. These results confirm the validity and practicability of airborne WasSAR-GMTI for continuous tracking and surveillance of ground moving targets and provide a foundation for further research.
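The abstract mentions a moving target echo model for multi-channel WasSAR. The sketch below is not the authors' model; it is a minimal illustration assuming an ideal circular track centred above the scene origin (radius, altitude and speed taken from Table 1), a constant-velocity target on flat ground, and two error-free channels, i.e. no attitude errors and no channel imbalance, which are the effects the calibration steps described above are meant to remove. The 15 GHz carrier (inside Ku band), the effective phase-centre separation `d`, and the helper names `platform_position`, `target_position`, `radial_velocity` and `ati_phase` are hypothetical. It shows why two channels can separate movers from clutter: once the aft channel is co-registered to the fore channel's position, a stationary scatterer gives zero interferometric phase, while a moving one gives approximately $4\pi v_{\text{r}} \Delta t/\lambda$ (phase convention $\exp(-j4\pi R/\lambda)$), where $v_{\text{r}}$ is the radial velocity and $\Delta t = d/v_{\text{a}}$.

```python
import numpy as np


def platform_position(t, r_a, H, v_a):
    """Phase-centre position on an ideal circular track centred above the scene origin."""
    t = np.asarray(t, dtype=float)
    omega = v_a / r_a                                   # angular rate (rad/s)
    return np.stack([r_a * np.cos(omega * t),
                     r_a * np.sin(omega * t),
                     np.full_like(t, H)], axis=-1)


def target_position(t, p0, v):
    """Hypothetical constant-velocity ground target: p(t) = p0 + v * t."""
    t = np.asarray(t, dtype=float)
    return np.asarray(p0, dtype=float) + np.asarray(v, dtype=float) * t[..., None]


def radial_velocity(t, p0, v, r_a=2000.0, H=2000.0, v_a=50.0):
    """Target velocity projected on the line of sight (positive when closing)."""
    los = platform_position(t, r_a, H, v_a) - target_position(t, p0, v)
    los /= np.linalg.norm(los, axis=-1, keepdims=True)  # unit vector target -> radar
    return np.sum(los * np.asarray(v, dtype=float), axis=-1)


def ati_phase(t, p0, v, wavelength, d, r_a=2000.0, H=2000.0, v_a=50.0):
    """Phase between fore and aft channels after co-registration.

    The aft channel trails the fore one by an effective phase-centre separation d,
    so it reaches the fore channel's position dt = d / v_a seconds later. Only the
    target's own motion during dt changes the two-way range.
    """
    dt = d / v_a
    pa = platform_position(t, r_a, H, v_a)              # shared phase-centre position
    r_fore = np.linalg.norm(pa - target_position(t, p0, v), axis=-1)
    r_aft = np.linalg.norm(pa - target_position(np.asarray(t) + dt, p0, v), axis=-1)
    phase = -4 * np.pi / wavelength * (r_aft - r_fore)  # two-way phase difference
    return np.angle(np.exp(1j * phase))                  # wrap to [-pi, pi]


if __name__ == "__main__":
    wavelength = 299_792_458.0 / 15e9   # assumed 15 GHz Ku-band carrier
    d, v_a = 0.1, 50.0                  # assumed separation (m); speed from Table 1
    p0 = [100.0, 0.0, 0.0]
    for v in ([0.0, 0.0, 0.0], [2.0, 0.0, 0.0]):
        exact = ati_phase(0.0, p0, v, wavelength, d)
        approx = 4 * np.pi * radial_velocity(0.0, p0, v) * (d / v_a) / wavelength
        print(v, float(exact), float(approx))
```

Radial velocities large enough to push $4\pi v_{\text{r}} \Delta t/\lambda$ outside $[-\pi, \pi]$ wrap around, so in that case the phase alone is ambiguous.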


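Table 1. Simulation parameters of the airborne WasSAR system

| Parameter | Value | Parameter | Value |
|---|---|---|---|
| Flight radius $r_{\text{a}}$ | 2000 m | Operating band | Ku band |
| Flight altitude $H$ | 2000 m | Range resolution $\rho_{\text{r}}$ | 0.167 m |
| Aircraft speed $v_{\text{a}}$ | 180 km/h | Pulse repetition frequency $\text{PRF}$ | 3125 Hz |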
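The parameters in Table 1 imply several further quantities that help when reading the WasSAR geometry. The sketch below computes them under stated assumptions: the circular track is centred directly above the scene centre, the platform speed is constant, and range resolution follows the ideal relation $\rho_{\text{r}} = c/(2B)$ with no window broadening. The function name `wassar_geometry` is hypothetical.

```python
import math

C = 299_792_458.0  # speed of light (m/s)


def wassar_geometry(r_a, H, v_a_kmh, rho_r, prf):
    """Derived quantities for an ideal circular WasSAR track (assumed geometry)."""
    v_a = v_a_kmh / 3.6                            # km/h -> m/s
    omega = v_a / r_a                              # angular rate around the circle (rad/s)
    period = 2 * math.pi / omega                   # time for one full 360-degree orbit (s)
    slant_range = math.hypot(r_a, H)               # range to the scene centre (m)
    depression = math.degrees(math.atan2(H, r_a))  # depression angle to the scene centre (deg)
    bandwidth = C / (2 * rho_r)                    # ideal rho_r = c / (2B), no windowing (Hz)
    pulses_per_orbit = prf * period                # number of pulses in one orbit
    pulse_spacing = v_a / prf                      # track distance between pulses (m)
    return {
        "v_a": v_a,
        "omega": omega,
        "period": period,
        "slant_range": slant_range,
        "depression_deg": depression,
        "bandwidth": bandwidth,
        "pulses_per_orbit": pulses_per_orbit,
        "pulse_spacing": pulse_spacing,
    }


if __name__ == "__main__":
    # Values from Table 1
    g = wassar_geometry(r_a=2000.0, H=2000.0, v_a_kmh=180.0, rho_r=0.167, prf=3125.0)
    for name, value in g.items():
        print(f"{name:>16s}: {value:.6g}")
```

Under these assumptions, 180 km/h is 50 m/s, giving an angular rate of 0.025 rad/s, about 251 s per full orbit, a 45° depression angle and about 2.83 km slant range to the scene centre; a 0.167 m range resolution corresponds to roughly 0.9 GHz of bandwidth, and a PRF of 3125 Hz places successive pulses 16 mm apart along the track.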

