
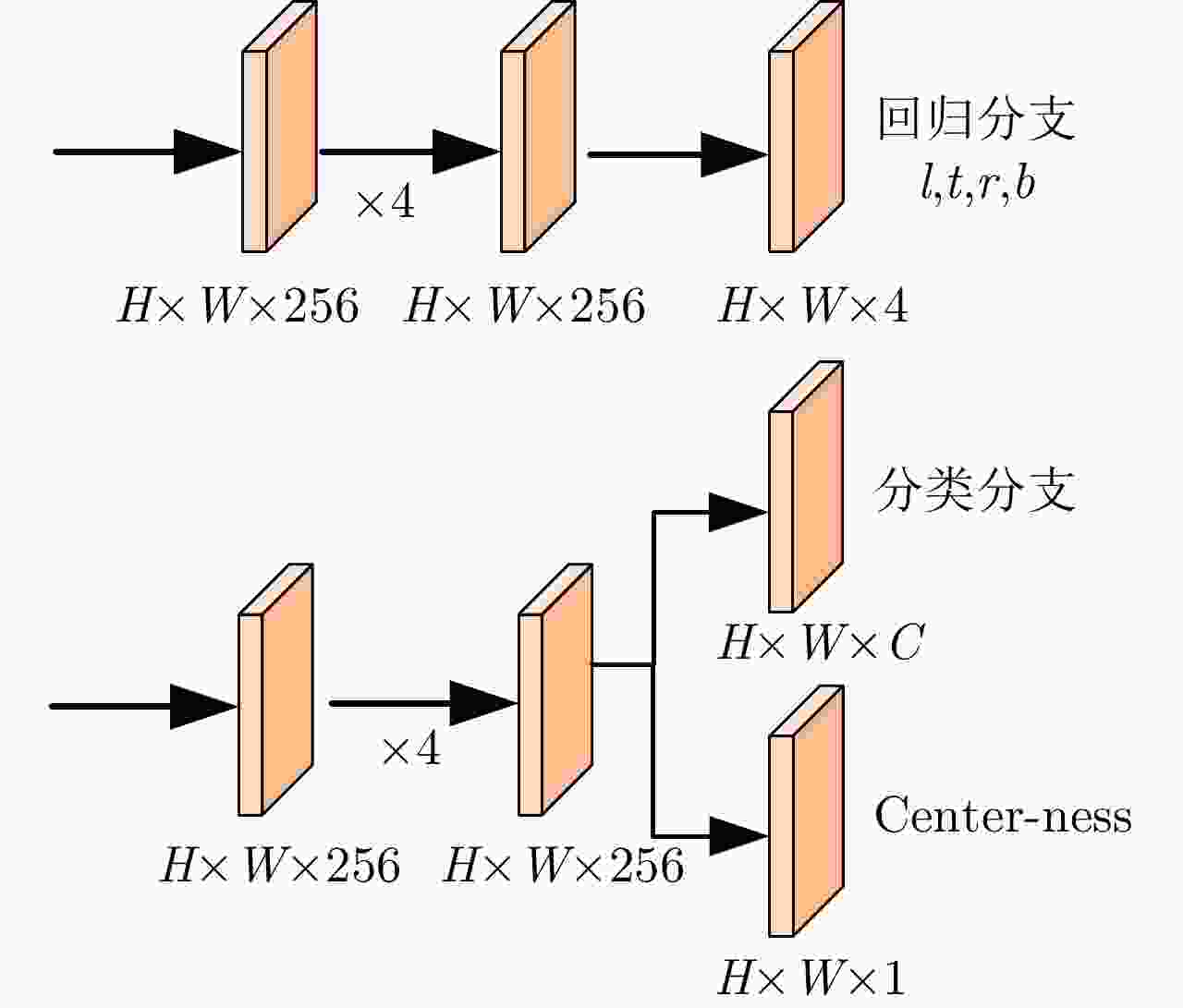

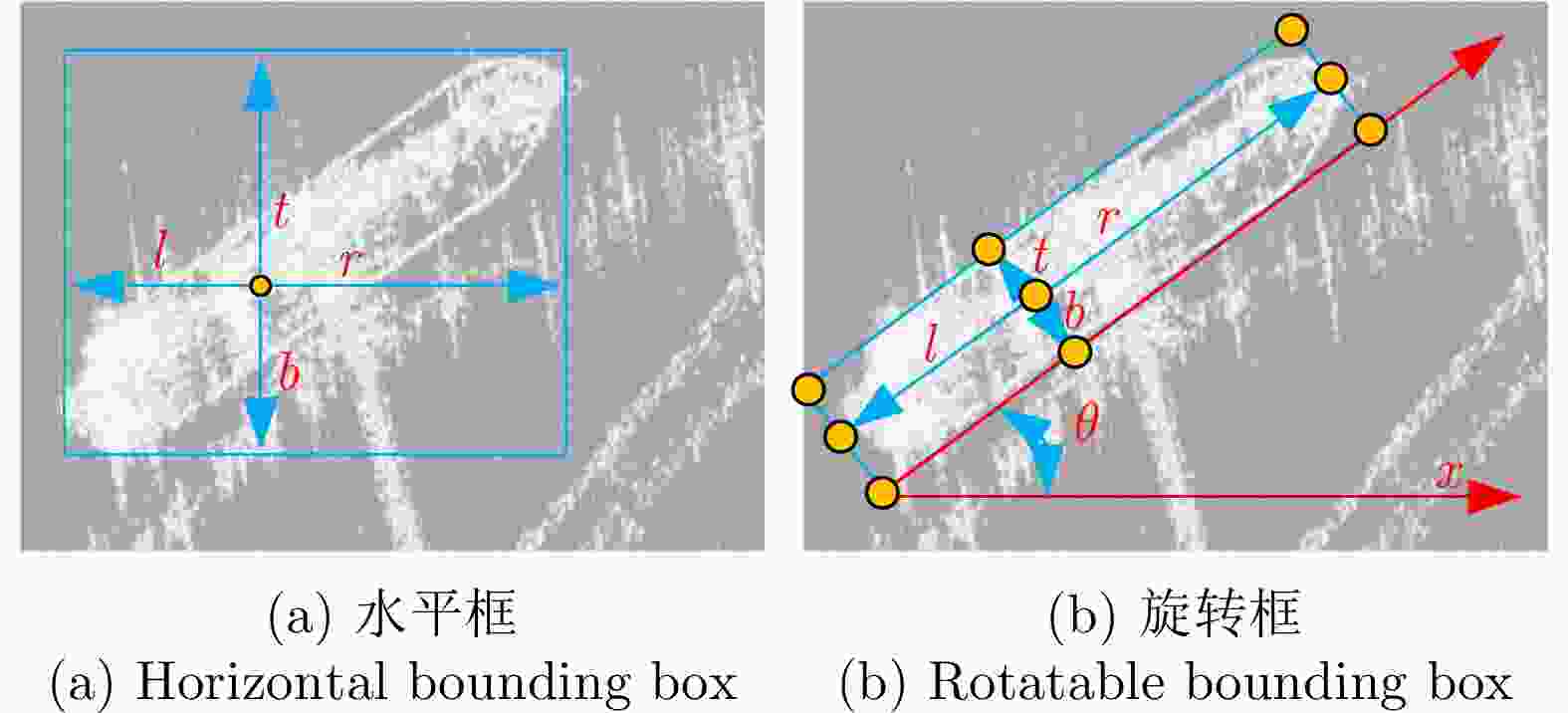

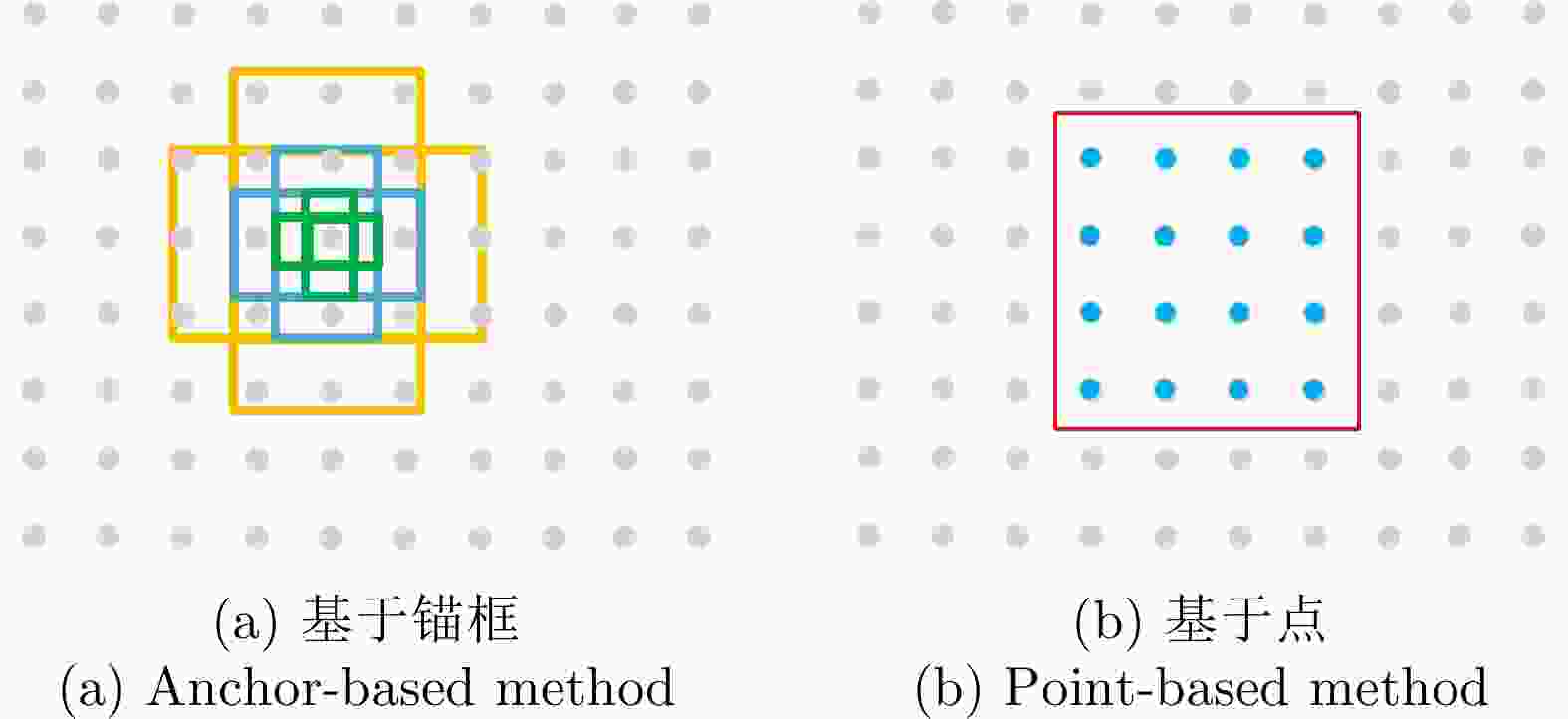

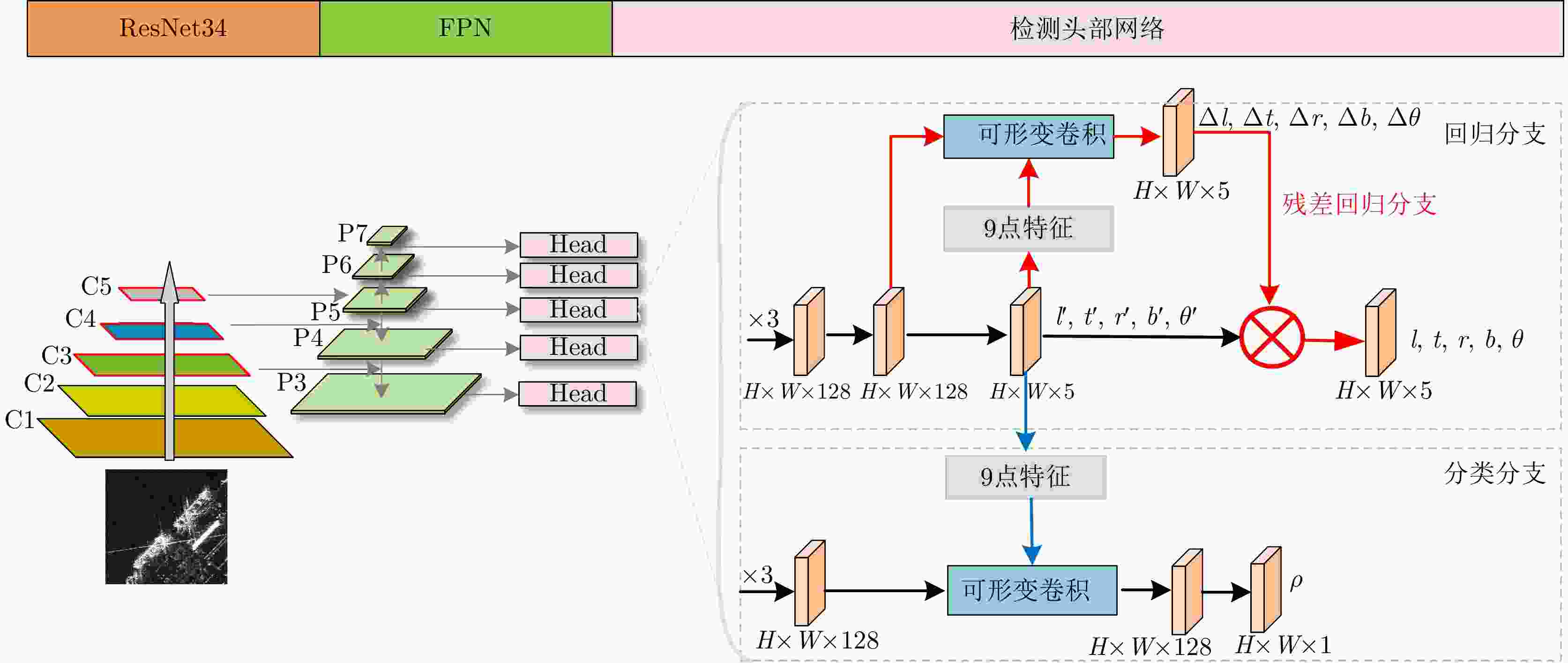

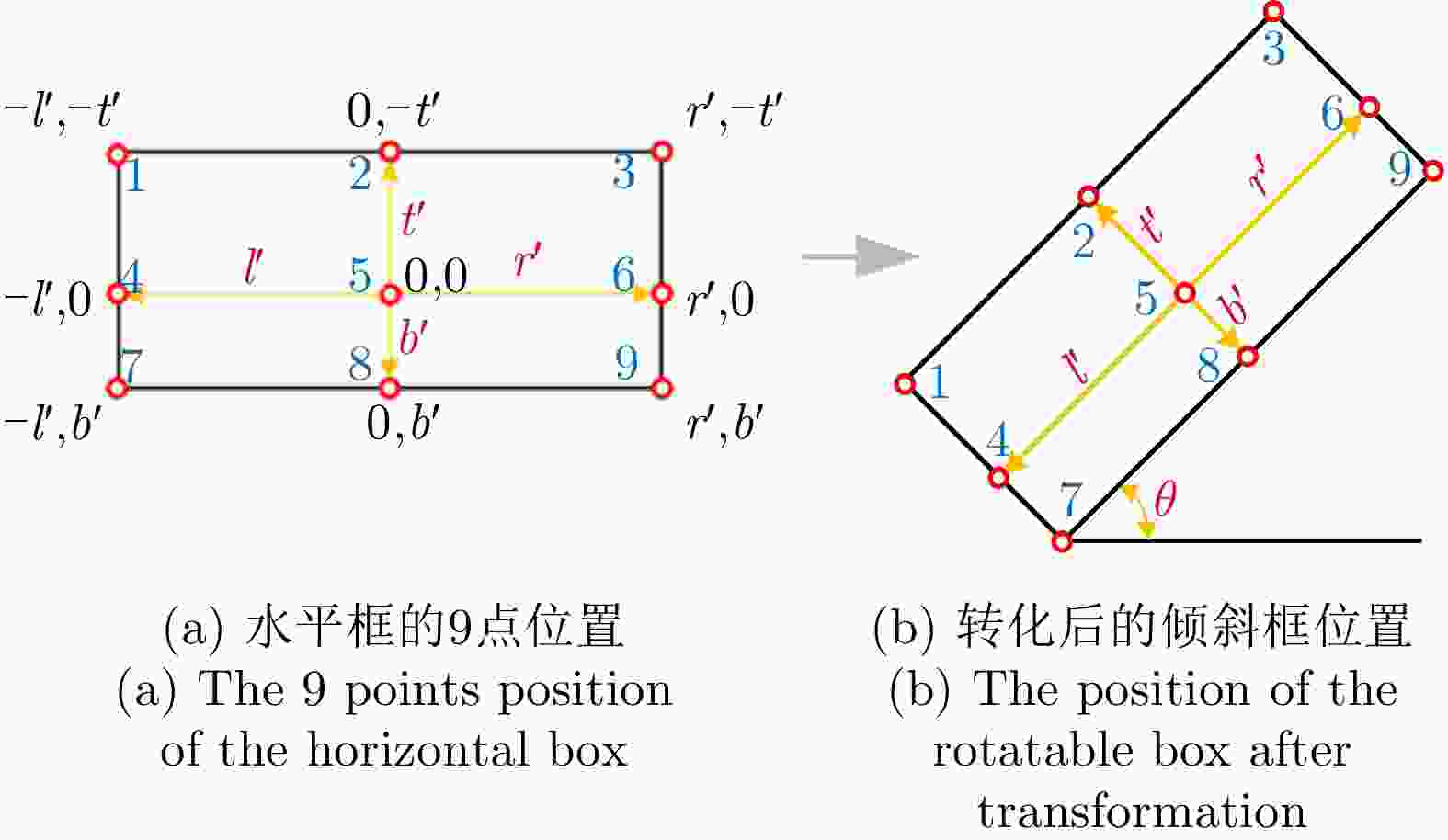

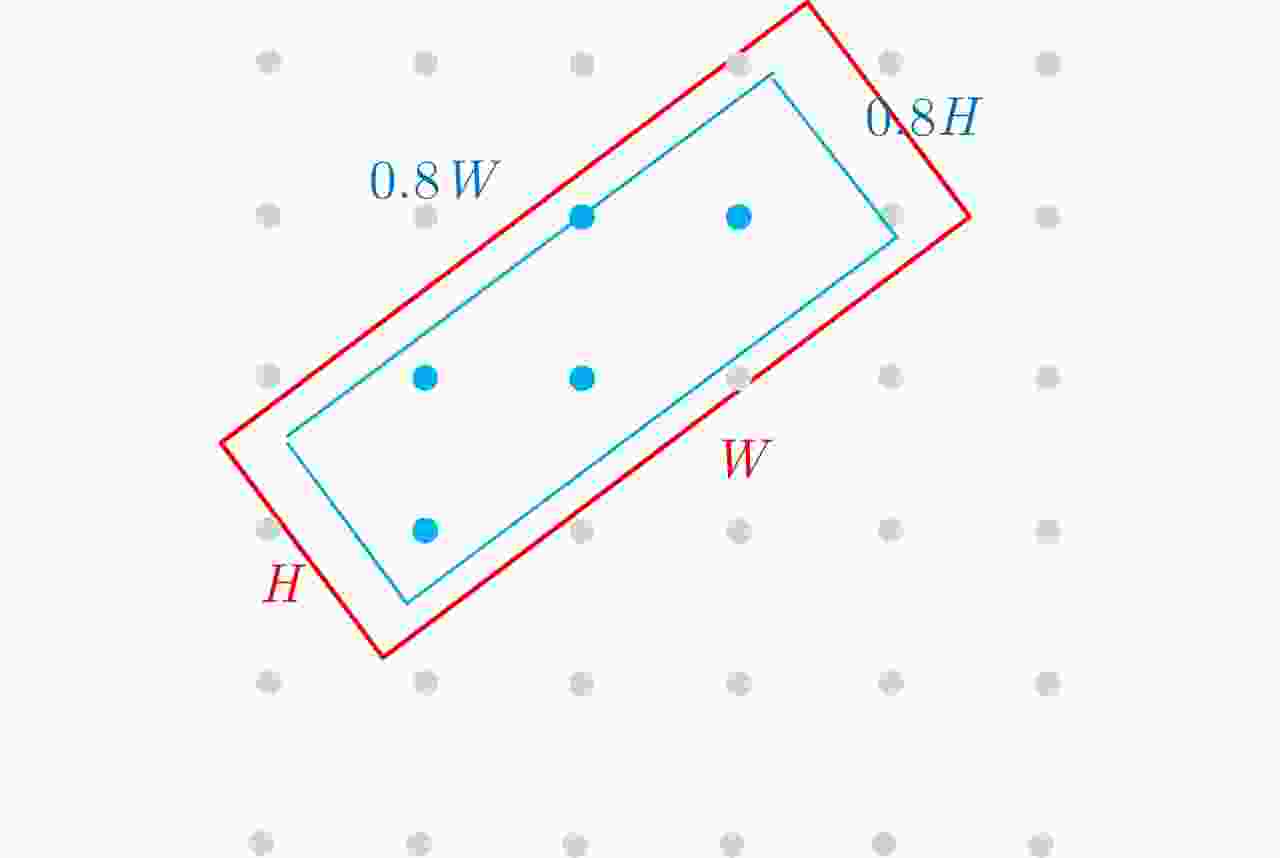

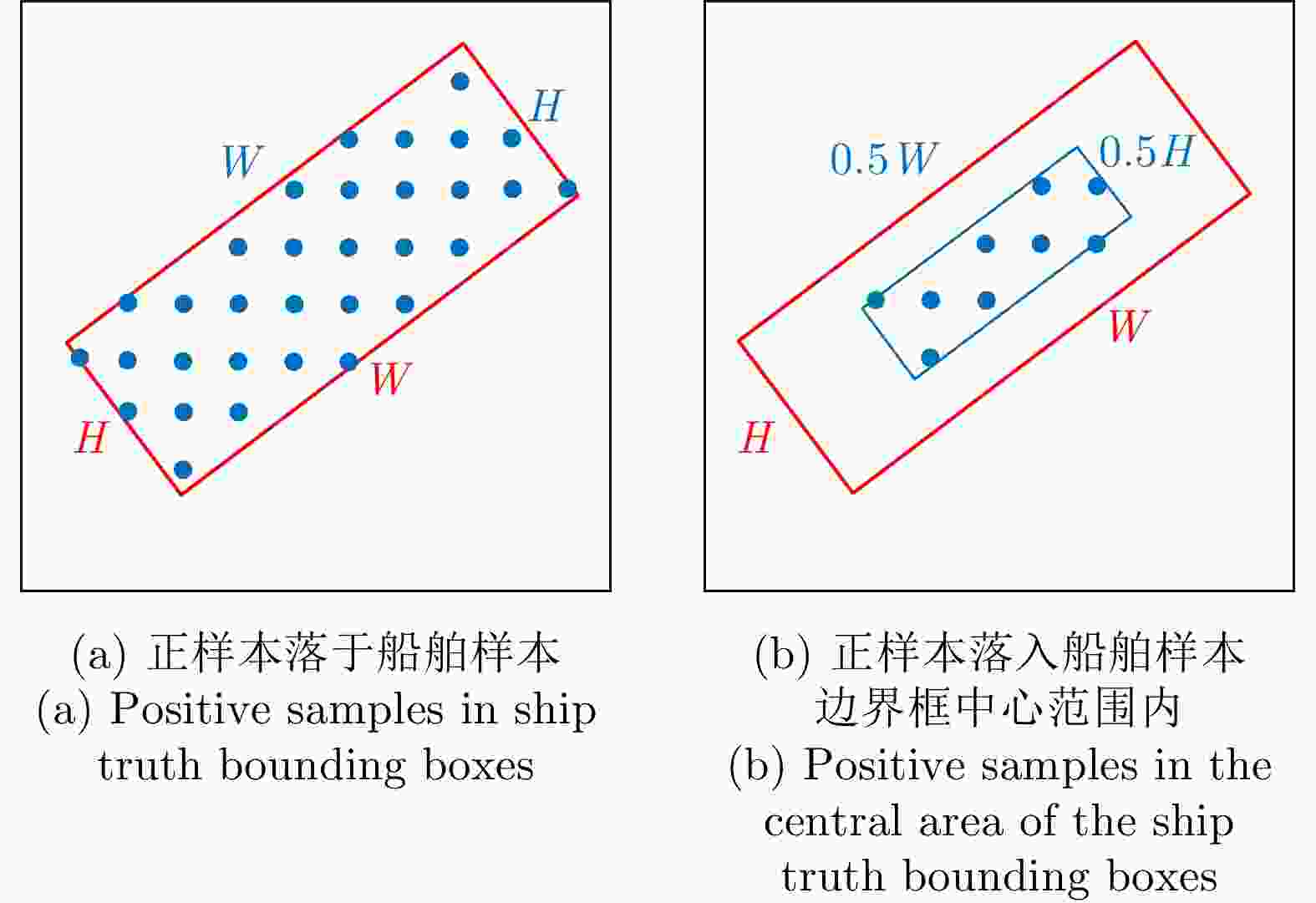

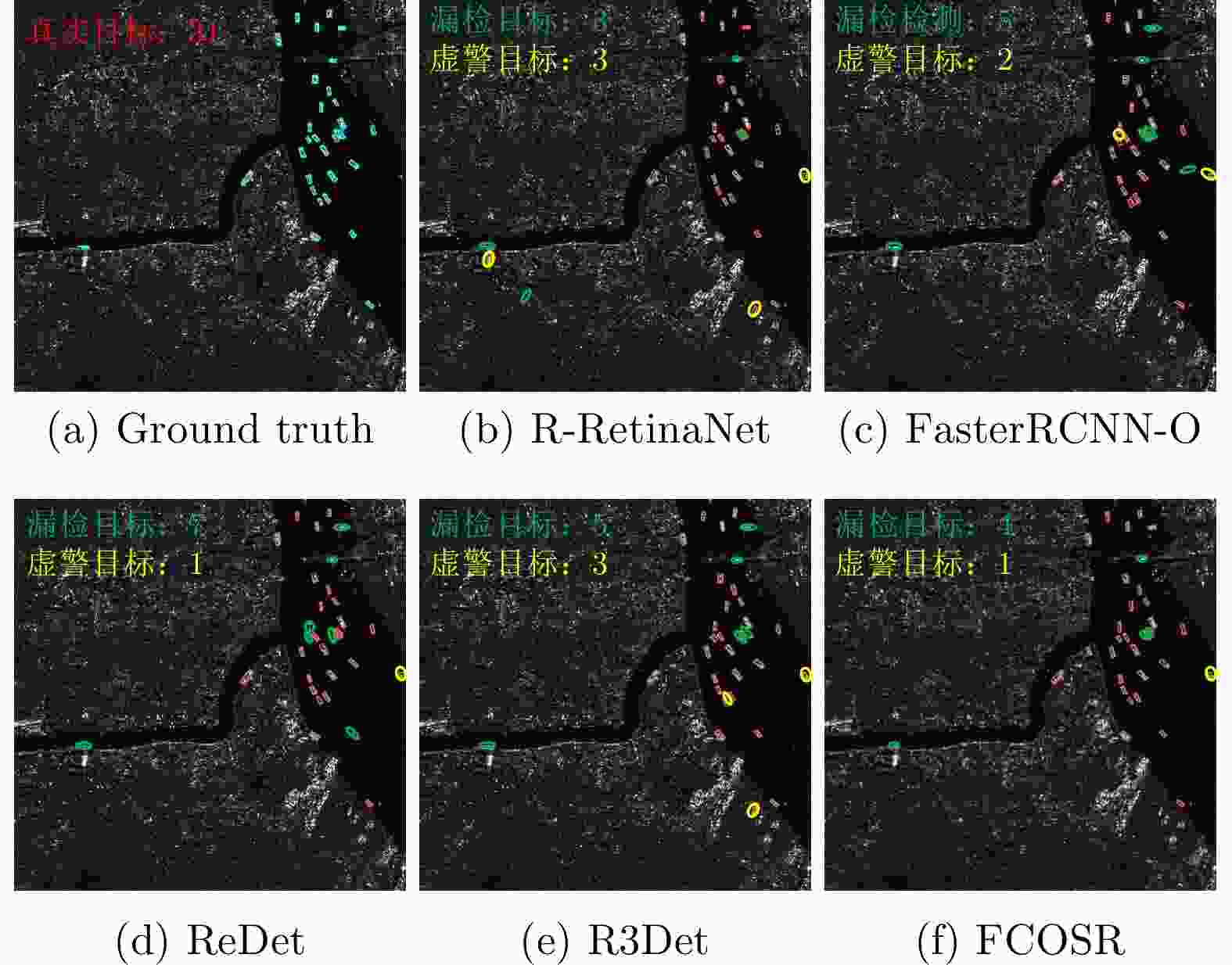

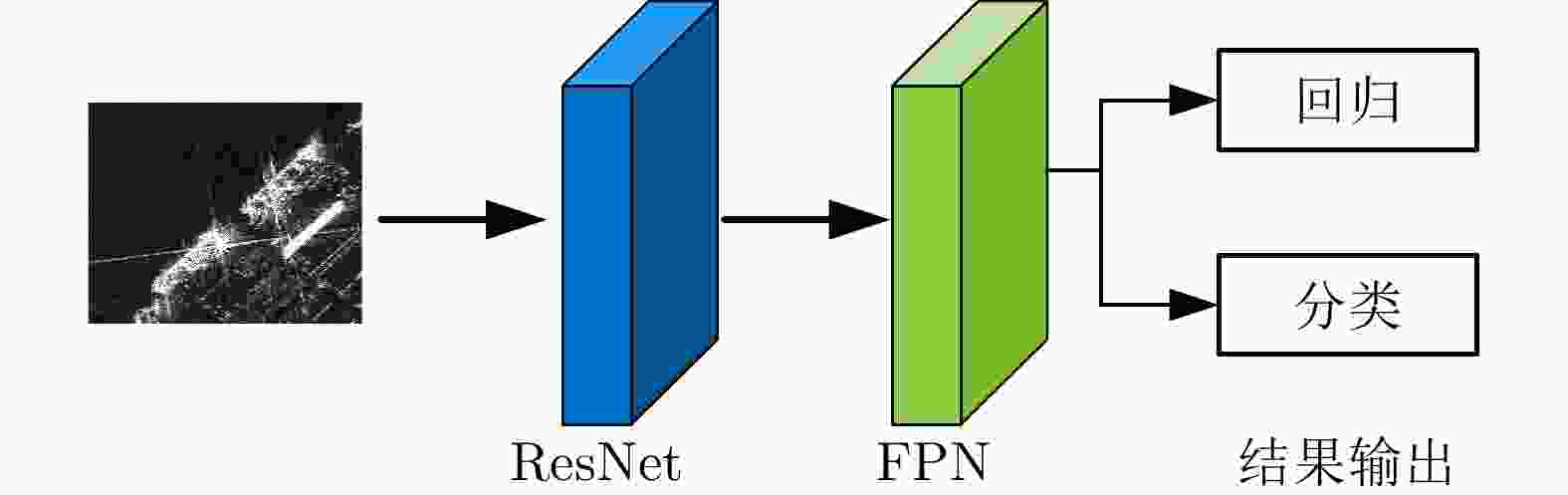

Abstract: Anchor-free networks, represented by the Fully Convolutional One-Stage object detector (FCOS), avoid the hyperparameter-setting problem introduced by preset anchor boxes. However, their horizontal bounding-box outputs cannot indicate the precise boundary or orientation of arbitrarily oriented ship targets in Synthetic Aperture Radar (SAR) images. To address this problem, this paper proposes a detection algorithm named FCOSR. First, an angle parameter is added to the FCOS regression branch so that the network outputs rotated bounding boxes. Second, 9-point features based on deformable convolution are introduced into the prediction of ship confidence and bounding-box residuals, which reduces false alarms on land and improves bounding-box regression accuracy. Finally, in the training stage, a rotated adaptive sample selection strategy (RATSS) assigns appropriate positive sample points to each ship sample, improving detection accuracy. Compared with FCOS and published anchor-based rotated detection networks, the proposed network achieves faster detection and higher accuracy on the SSDD+ and HRSID datasets, with mAP values (at IoU = 0.50) of 91.7% and 84.3%, respectively, and an average detection time of only 33 ms per image slice.
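To make the first step concrete, the following minimal sketch shows how a rotated box described by a center, a size, and an angle can be turned into its four corner points. The function name `rotated_box_corners`, the parameter order, and the angle convention (radians, counterclockwise rotation about the box center) are assumptions for illustration; this section does not state the parameterization FCOSR actually uses.

```python
import math

def rotated_box_corners(cx, cy, w, h, theta):
    """Return the four corners of a rotated box as (x, y) tuples.

    Assumed convention (not taken from the paper): theta is in radians
    and rotates the box counterclockwise about its center (cx, cy).
    """
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    # Corner offsets of the axis-aligned box, relative to its center.
    offsets = [(-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)]
    corners = []
    for dx, dy in offsets:
        # Standard 2-D rotation of the offset, then shift back to the center.
        x = cx + dx * cos_t - dy * sin_t
        y = cy + dx * sin_t + dy * cos_t
        corners.append((x, y))
    return corners
```

With `theta = 0` the function reduces to the horizontal box that plain FCOS would output, which is one way to see the angle as an extension of the original regression targets.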


Table 1. COCO metrics

| Metric | Meaning |
| --- | --- |
| $\mathrm{mAP}$ | $\mathrm{mAP}$ at IoU = 0.50:0.05:0.95 |
| $\mathrm{mAP}_{50}$ | $\mathrm{mAP}$ at IoU = 0.50 |
| $\mathrm{mAP}_{\mathrm{S}}$ | $\mathrm{mAP}_{50}$ for small ships: $\mathrm{area} < 32^{2}$ |
| $\mathrm{mAP}_{\mathrm{M}}$ | $\mathrm{mAP}_{50}$ for medium ships: $32^{2} < \mathrm{area} < 96^{2}$ |
| $\mathrm{mAP}_{\mathrm{L}}$ | $\mathrm{mAP}_{50}$ for large ships: $\mathrm{area} > 96^{2}$ |
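The thresholds used by the COCO metrics can be written down in a few lines. This is a minimal sketch: the helper names `iou_thresholds` and `size_bucket` are hypothetical, areas are assumed to be in pixels, and the treatment of areas exactly equal to $32^{2}$ or $96^{2}$ is an assumption because the metric definitions use strict inequalities.

```python
def iou_thresholds():
    """IoU thresholds 0.50:0.05:0.95 used for the averaged mAP."""
    return [round(0.50 + 0.05 * i, 2) for i in range(10)]

def size_bucket(area):
    """Classify a ship by its area in pixels into S, M, or L.

    Boundary values (exactly 32**2 or 96**2) are placed in the larger
    bucket here; that tie-breaking rule is an assumption.
    """
    if area < 32 ** 2:
        return "S"
    if area < 96 ** 2:
        return "M"
    return "L"
```

For example, a 50 x 50 pixel ship has an area of 2500, which falls between 1024 and 9216 and is therefore counted under the medium-size metric.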

Table 2. Results of different sample selection methods

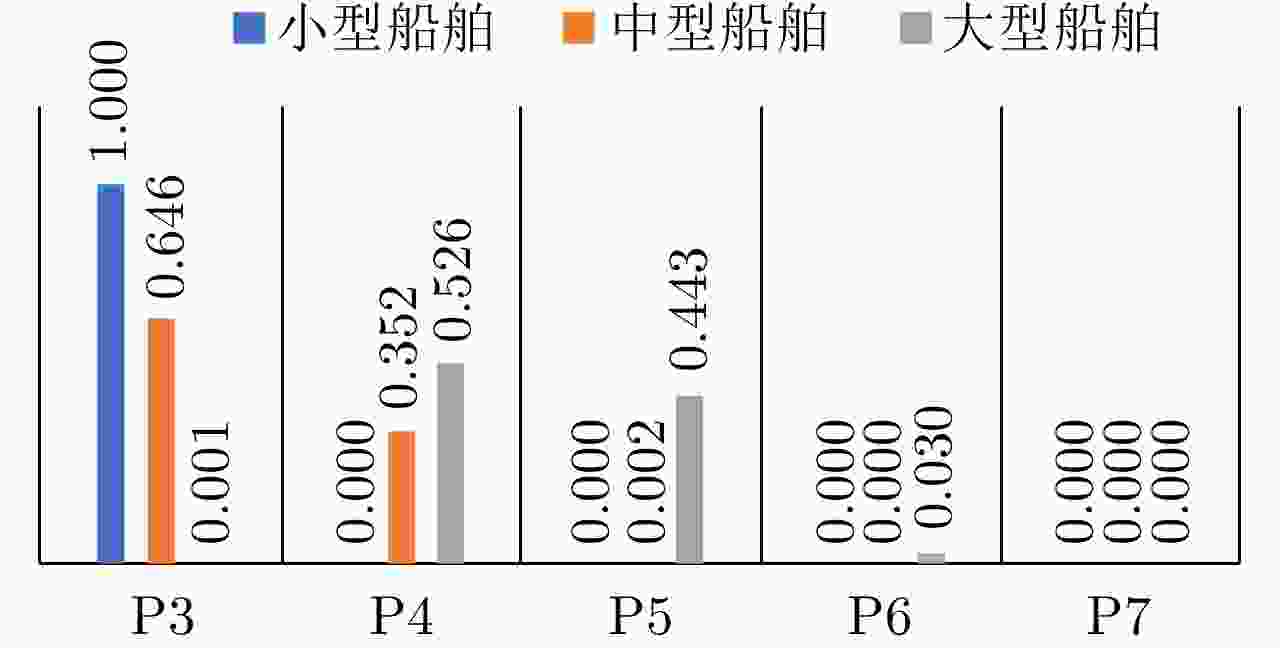

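| Method | k | $\mathrm{mAP}$ (%) | $\mathrm{mAP}_{50}$ (%) | $\mathrm{mAP}_{\mathrm{S}}$ (%) | $\mathrm{mAP}_{\mathrm{M}}$ (%) | $\mathrm{mAP}_{\mathrm{L}}$ (%) | Time (s/iter) |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Sampling scheme a | – | 30.2 | 75.7 | 34.0 | 31.2 | 30.3 | 0.266 |
| Sampling scheme b | – | 38.6 | 85.6 | 38.3 | 43.4 | 41.5 | 0.269 |
| Sampling scheme c | – | 36.6 | 83.5 | 39.2 | 32.0 | 33.6 | 0.268 |
| RATSS (without step 4) | 5 | 40.3 | 87.2 | 39.4 | 43.4 | 70.1 | 0.287 |
| RATSS | 3 | 40.2 | 91.8 | 38.7 | 44.9 | 55.1 | 0.295 |
| RATSS | 5 | 42.2 | 91.7 | 40.5 | 46.2 | 64.7 | 0.297 |
| RATSS | 7 | 41.8 | 92.2 | 40.4 | 45.7 | 50.9 | 0.300 |
| RATSS | 9 | 40.6 | 90.3 | 39.7 | 42.9 | 57.3 | 0.302 |
| RATSS | 11 | 41.8 | 91.0 | 41.3 | 43.9 | 52.9 | 0.302 |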
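The steps of RATSS are not spelled out in this section, so the sketch below illustrates only the general idea behind adaptive training sample selection (ATSS), on which the name suggests RATSS is built: among the candidate points gathered for one ship, keep those whose IoU with the ground truth exceeds the mean plus the standard deviation of the candidates' IoUs. The function names, the use of the sample standard deviation, and the assumption that k controls how many candidates are gathered are all illustrative, not taken from the paper.

```python
from statistics import mean, stdev

def atss_threshold(candidate_ious):
    """Adaptive IoU threshold: mean plus standard deviation.

    Uses the sample standard deviation (an assumed choice of estimator),
    so at least two candidates are required.
    """
    return mean(candidate_ious) + stdev(candidate_ious)

def select_positives(candidate_ious, center_inside_box):
    """Return indices of candidates kept as positive samples.

    candidate_ious: IoU of each candidate with the ground-truth box.
    center_inside_box: whether each candidate's center lies inside the box.
    """
    thr = atss_threshold(candidate_ious)
    return [
        i
        for i, (iou, inside) in enumerate(zip(candidate_ious, center_inside_box))
        if iou >= thr and inside
    ]
```

Because the threshold is computed per ship from its own candidates, no fixed IoU cutoff has to be tuned by hand; under the stated assumption, k only determines how many candidates enter the statistics.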

Table 3. Results of ablation experiments (%)

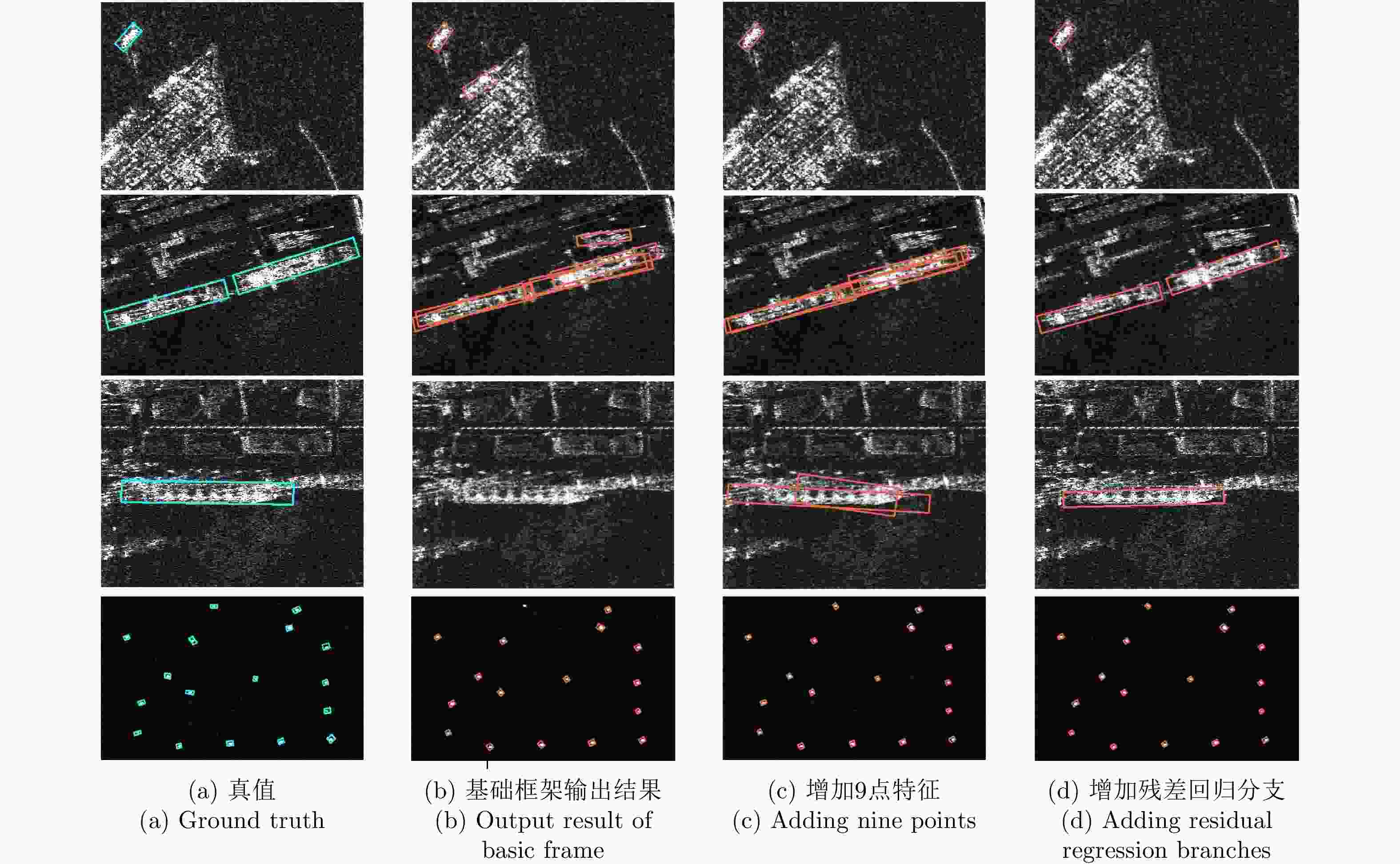

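| 9-point feature representation | Residual regression branch | $\mathrm{mAP}$ | $\mathrm{mAP}_{50}$ | $\mathrm{mAP}_{\mathrm{S}}$ | $\mathrm{mAP}_{\mathrm{M}}$ | $\mathrm{mAP}_{\mathrm{L}}$ |
| --- | --- | --- | --- | --- | --- | --- |
| × | × | 34.8 | 85.4 | 35.8 | 33.7 | 30.6 |
| √ | × | 38.3 | 89.8 | 37.7 | 40.2 | 53.8 |
| √ | √ | 42.2 | 91.7 | 40.5 | 46.2 | 64.7 |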

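Table 4. mAP results for inshore and offshore ships (%)

| Scene | 9-point feature representation | Residual regression branch | $\mathrm{mAP}_{50}$ | $\mathrm{mAP}_{\mathrm{S}}$ | $\mathrm{mAP}_{\mathrm{M}}$ | $\mathrm{mAP}_{\mathrm{L}}$ |
| --- | --- | --- | --- | --- | --- | --- |
| Inshore | × | × | 61.7 | 24.0 | 21.1 | 37.5 |
| Inshore | √ | × | 75.6 | 28.6 | 29.5 | 68.5 |
| Inshore | √ | √ | 76.3 | 30.7 | 36.9 | 70.1 |
| Offshore | × | × | 95.1 | 40.0 | 42.9 | 26.7 |
| Offshore | √ | × | 95.4 | 41.0 | 47.6 | 51.6 |
| Offshore | √ | √ | 97.4 | 44.0 | 52.3 | 64.2 |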

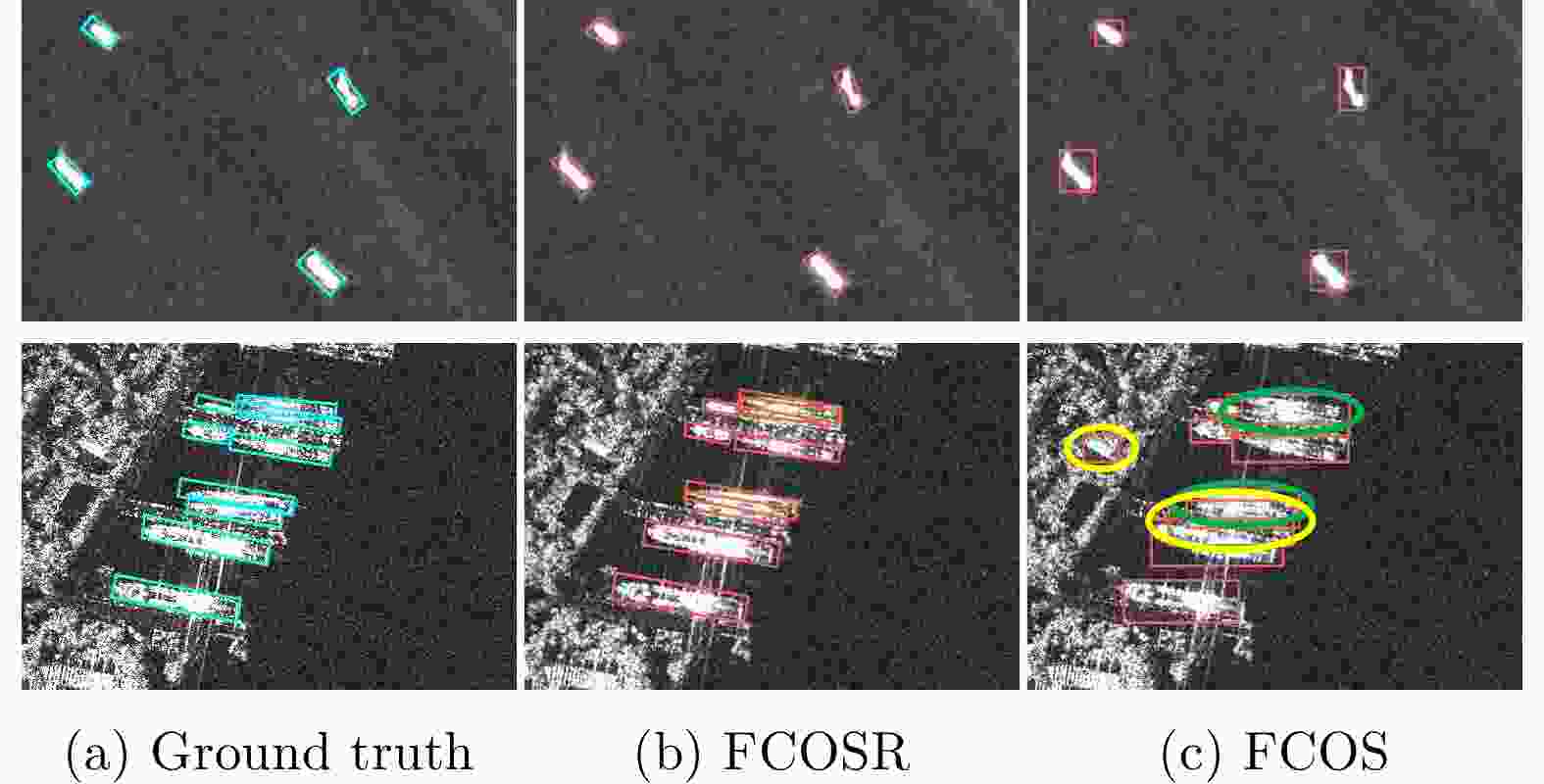

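Table 5. Performance comparison of FCOS and FCOSR

| Method | Backbone | FPN output channels | SSDD+ Recall (%) | SSDD+ mAP (%) | SSDD+ mAP50 (%) | HRSID Recall (%) | HRSID mAP (%) | HRSID mAP50 (%) | FPS | Size (MB) | Time (s/iter) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| FCOS | ResNet50 | 256 | 97.18 | 45.1 | 93.7 | 87.20 | 50.5 | 82.9 | 21.6 | 192.1 | 0.352 |
| FCOSR | ResNet50 | 256 | 94.55 | 41.9 | 92.1 | 87.55 | 44.9 | 83.5 | 20.8 | 196.8 | 0.584 |
| FCOSR | ResNet34 | 128 | 94.17 | 42.2 | 91.7 | 87.59 | 47.3 | 84.3 | 30.1 | 188.1 | 0.297 |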

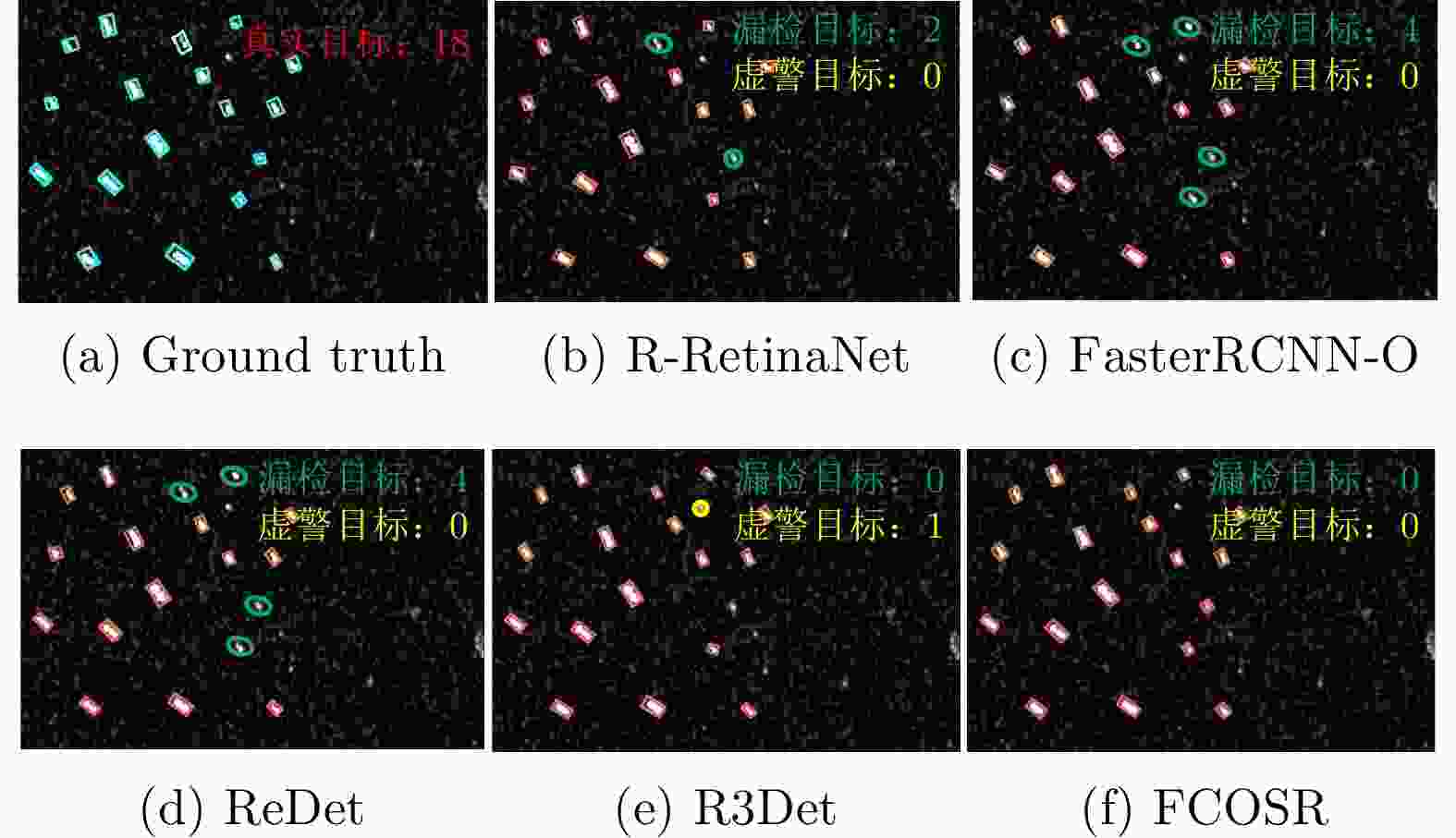

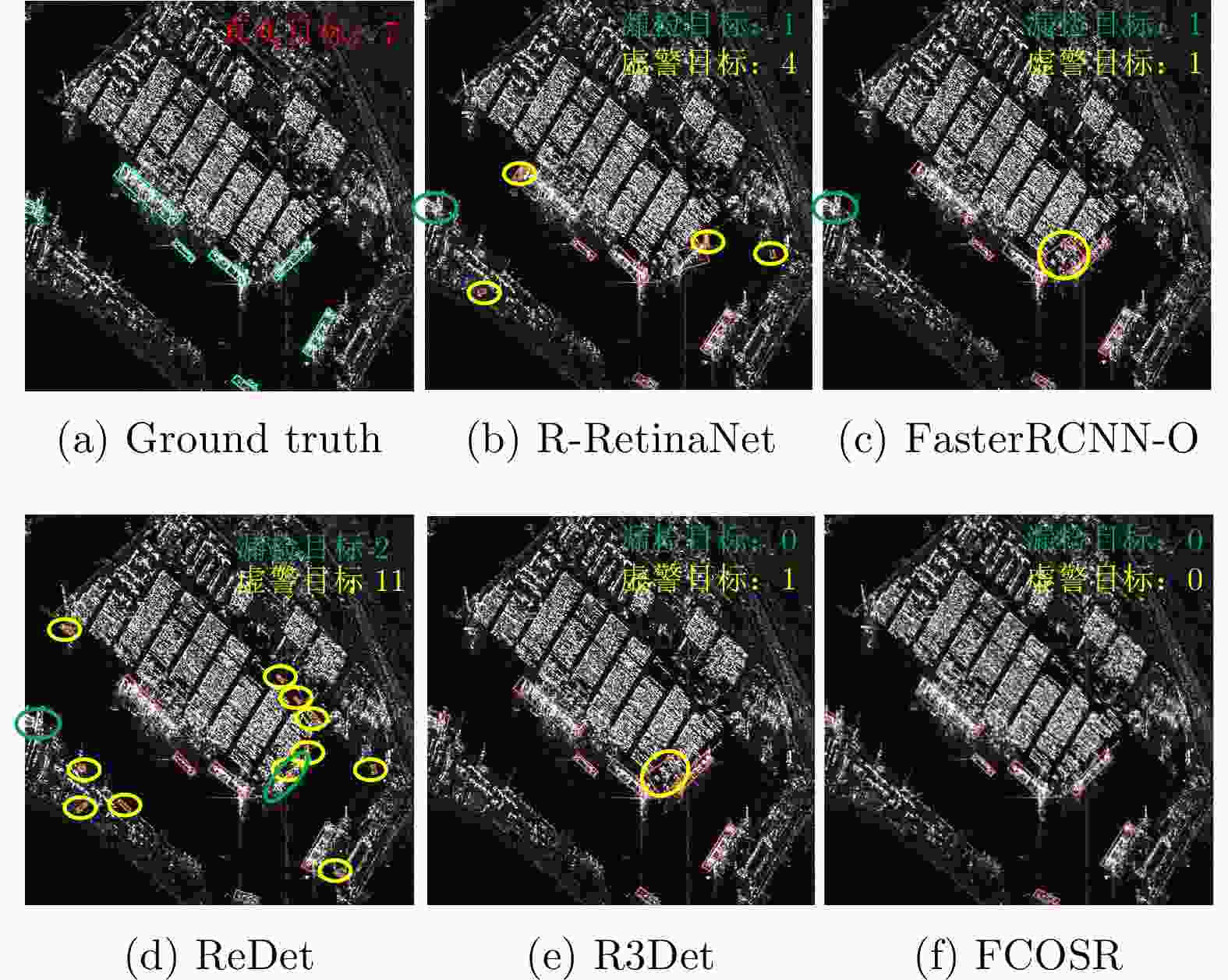

Table 6. Accuracy comparison of different detection networks

| Method | SSDD+ Recall (%) | SSDD+ mAP (%) | SSDD+ mAP50 (%) | HRSID Recall (%) | HRSID mAP (%) | HRSID mAP50 (%) | FPS | Size (MB) | Time (s/iter) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| ReDet | 88.16 | 43.69 | 87.57 | 83.11 | 44.2 | 80.1 | 11.8 | 256.8 | 0.544 |
| R3Det | 91.16 | 40.47 | 89.29 | 86.14 | 47.2 | 83.7 | 11.4 | 378.9 | 0.638 |
| R-RetinaNet | 89.85 | 36.00 | 86.20 | 83.57 | 42.1 | 80.9 | 15.6 | 290.2 | 0.331 |
| FasterRCNN-O | 91.16 | 42.15 | 90.12 | 85.56 | 46.1 | 82.8 | 13.2 | 441.5 | 0.496 |
| FCOSR | 94.17 | 42.20 | 91.70 | 87.59 | 47.3 | 84.3 | 30.1 | 188.1 | 0.297 |
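The FPS column can be related to the per-image detection time quoted in the abstract with a one-line conversion. The sketch below is only an arithmetic check; the helper name `ms_per_image` and the dictionary `fps_table` are hypothetical, and the FPS values are copied from the comparison of detection networks above.

```python
def ms_per_image(fps):
    """Convert frames per second to milliseconds per image."""
    return 1000.0 / fps

# FPS values as reported for each detection network.
fps_table = {
    "ReDet": 11.8,
    "R3Det": 11.4,
    "R-RetinaNet": 15.6,
    "FasterRCNN-O": 13.2,
    "FCOSR": 30.1,
}

for name, fps in fps_table.items():
    print(f"{name}: {ms_per_image(fps):.1f} ms per image")
```

At 30.1 FPS, FCOSR needs roughly 33 ms per image slice, which matches the average detection time stated in the abstract.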

