Multichannel False-target Discrimination in SAR Images Based on Sub-aperture and Full-aperture Feature Learning


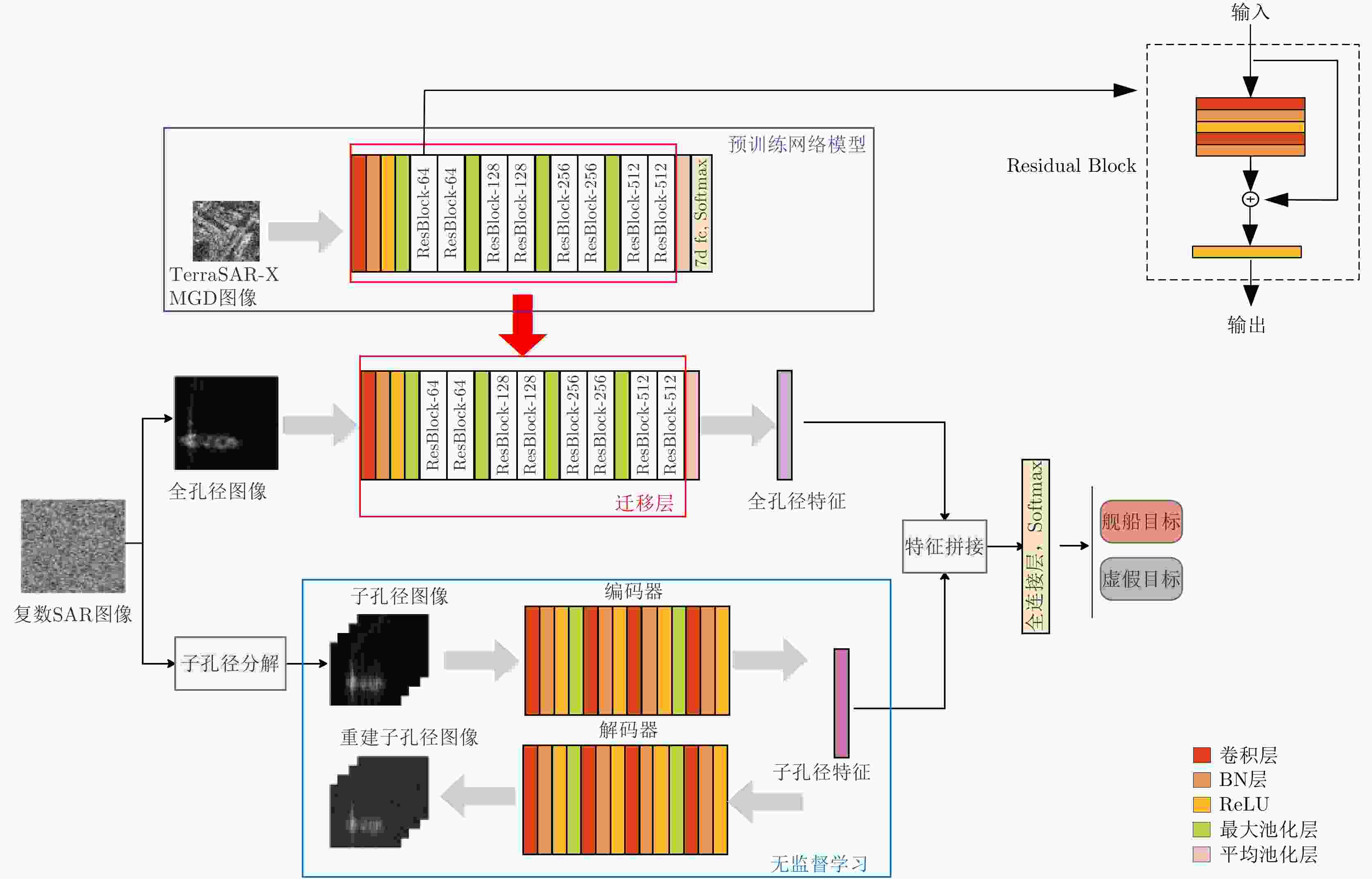

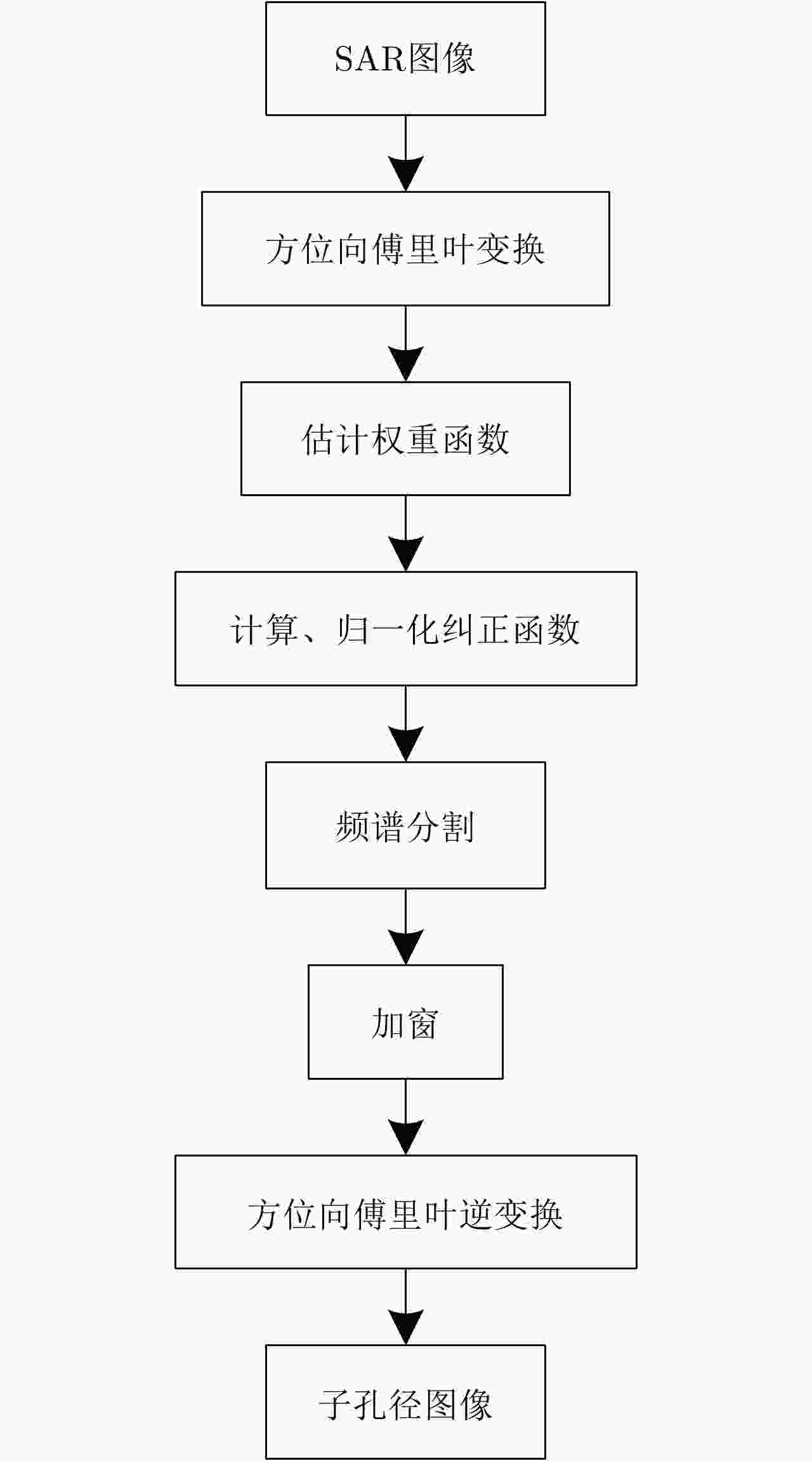

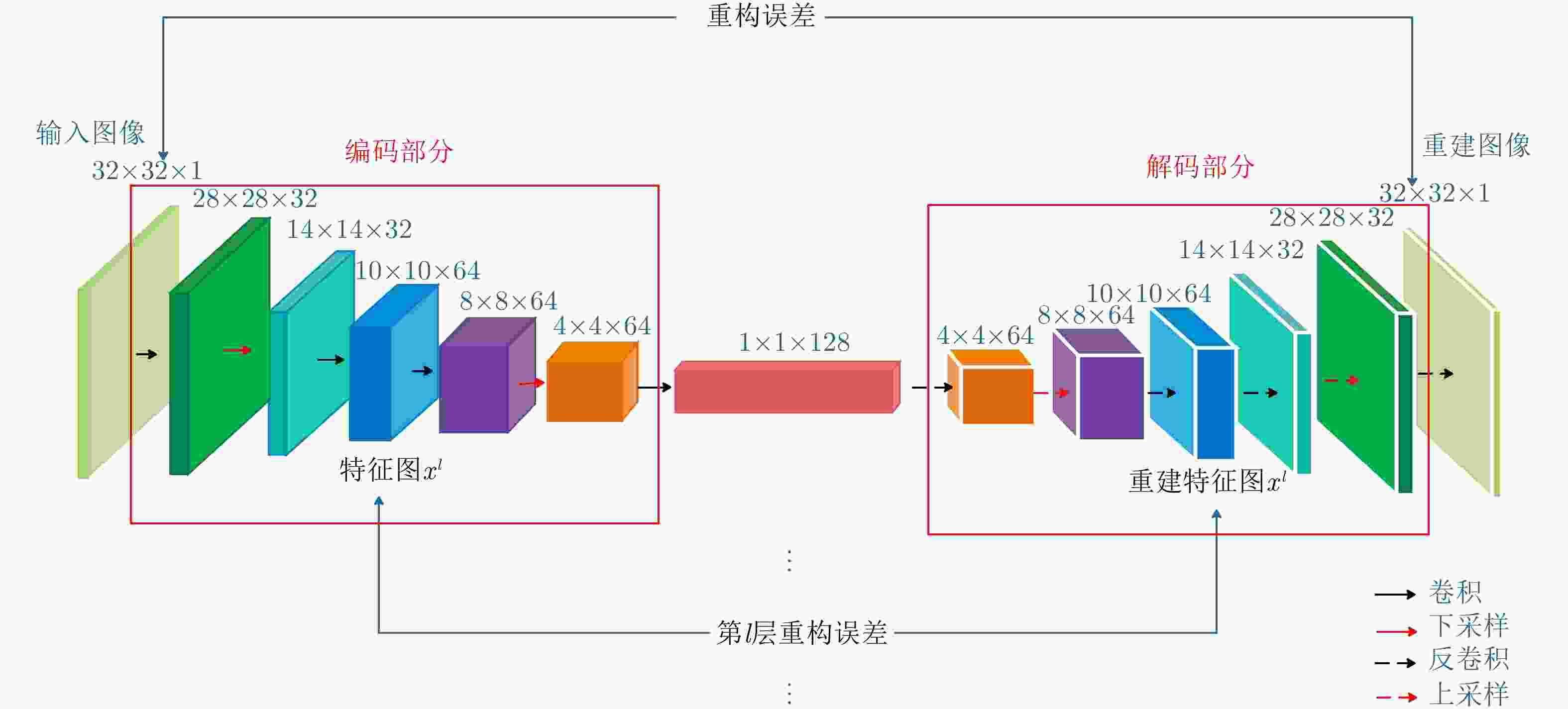

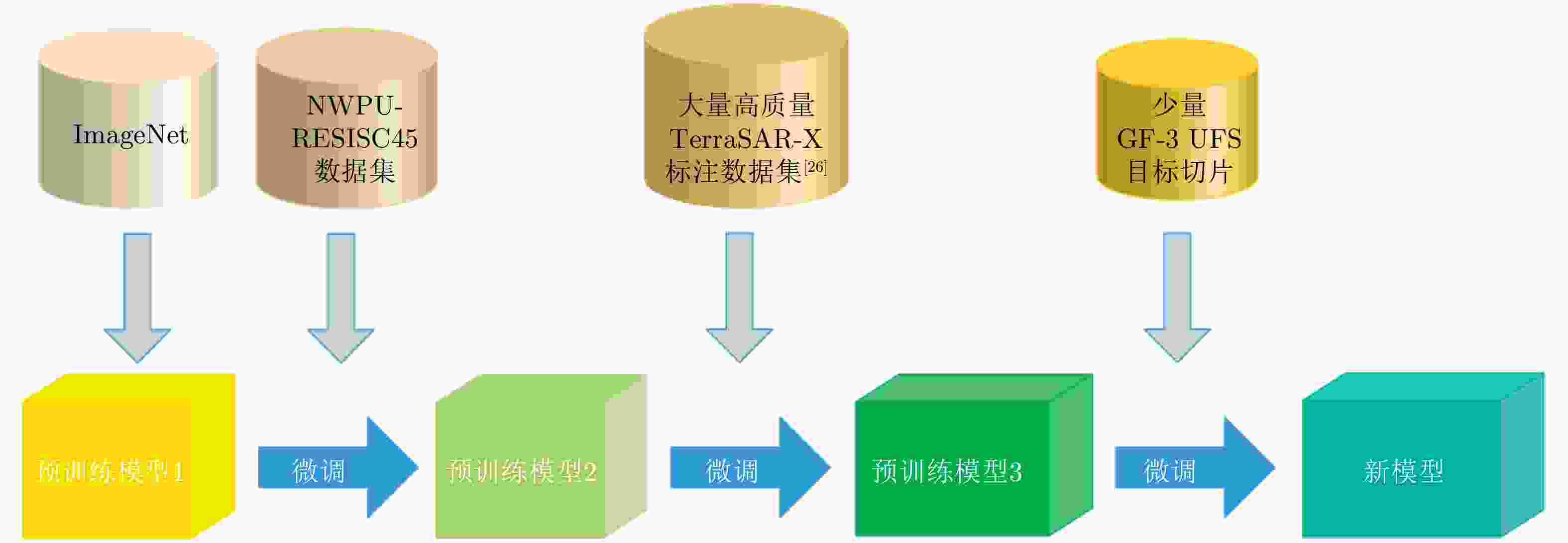

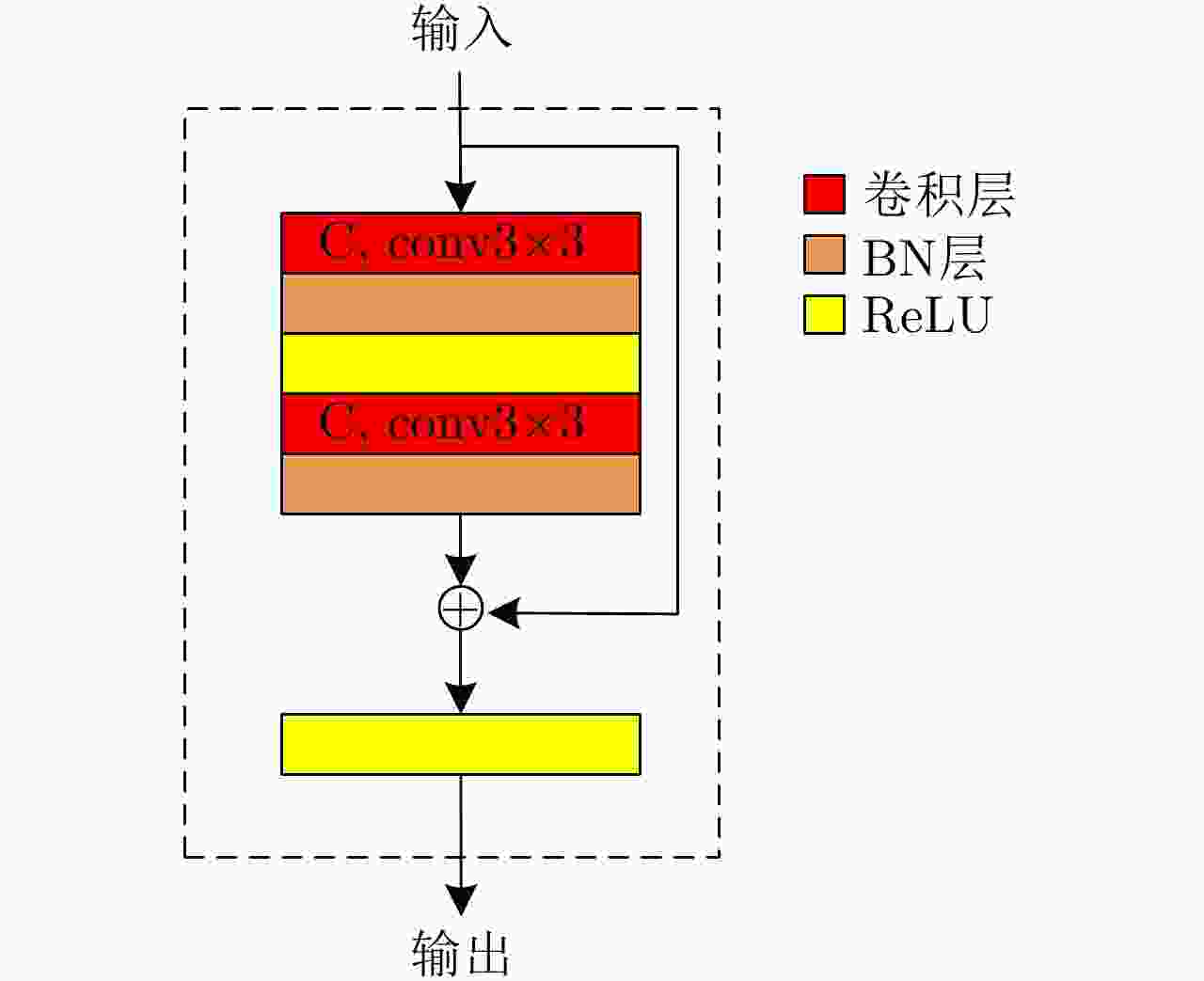

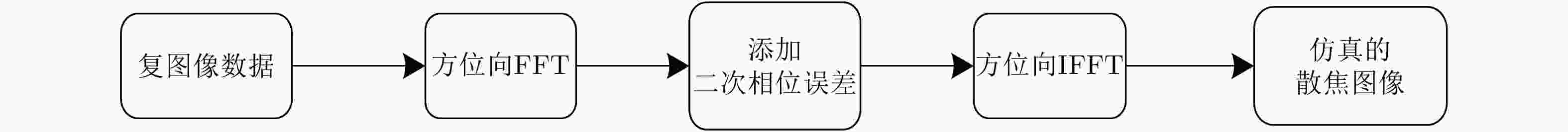

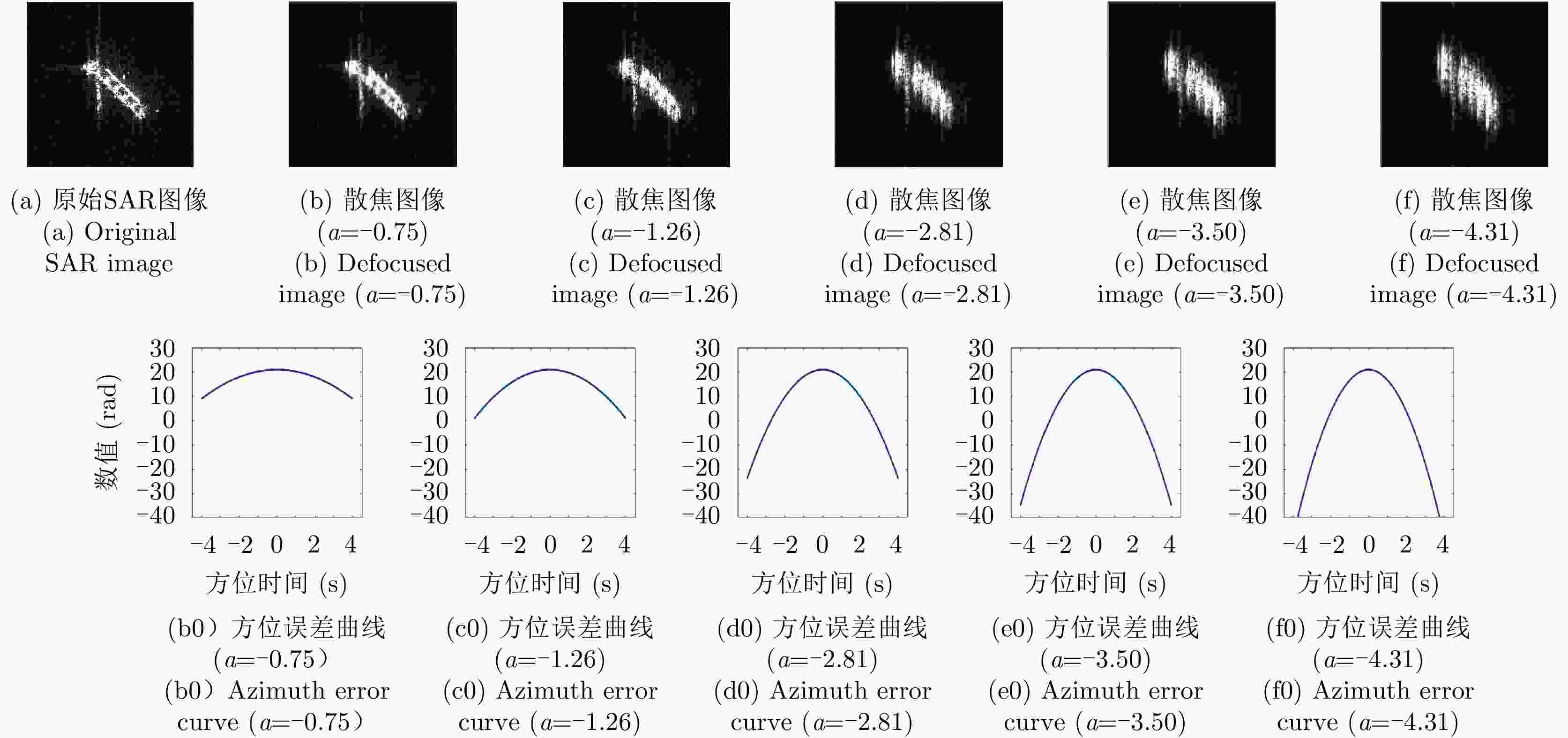

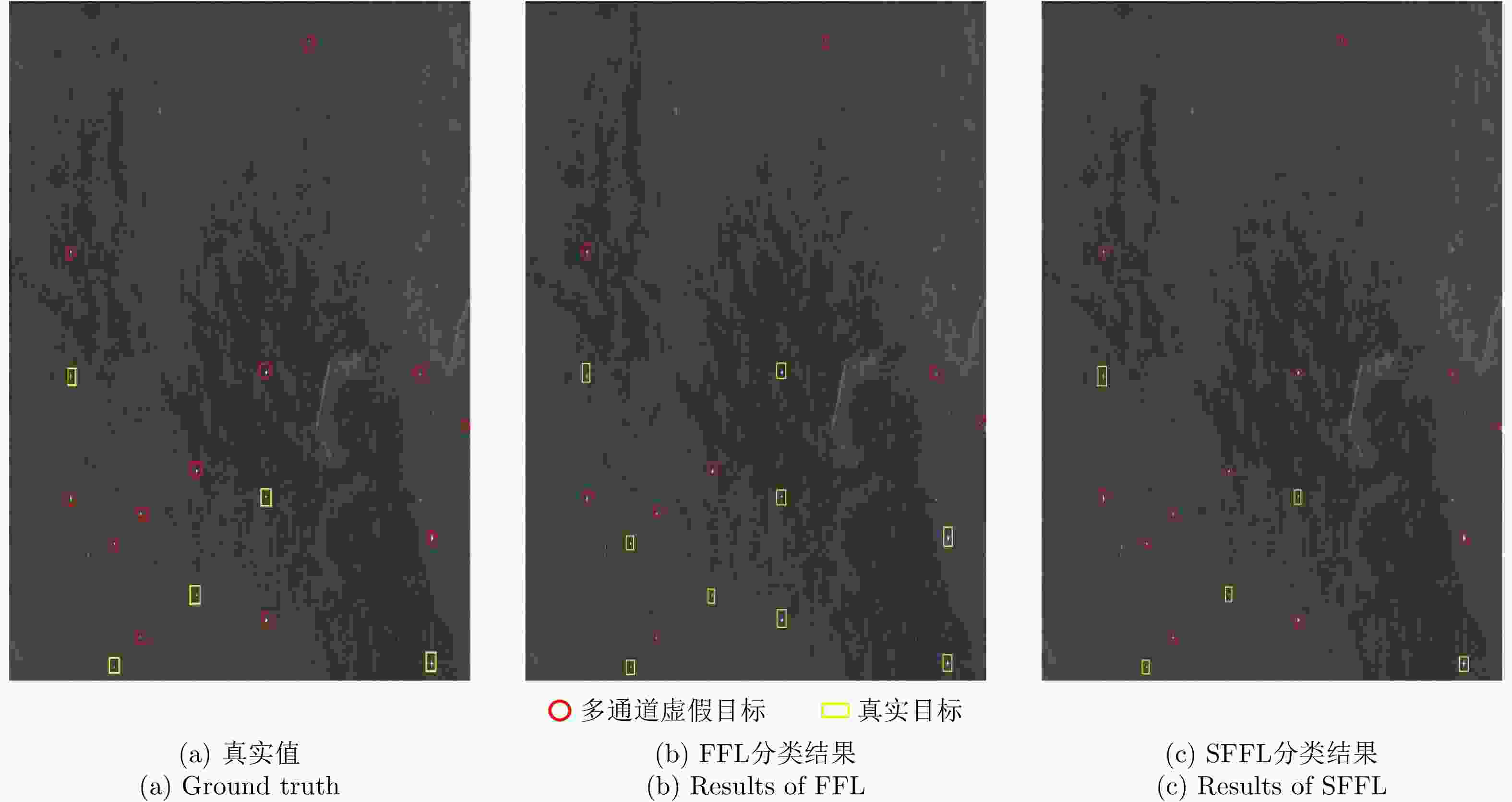

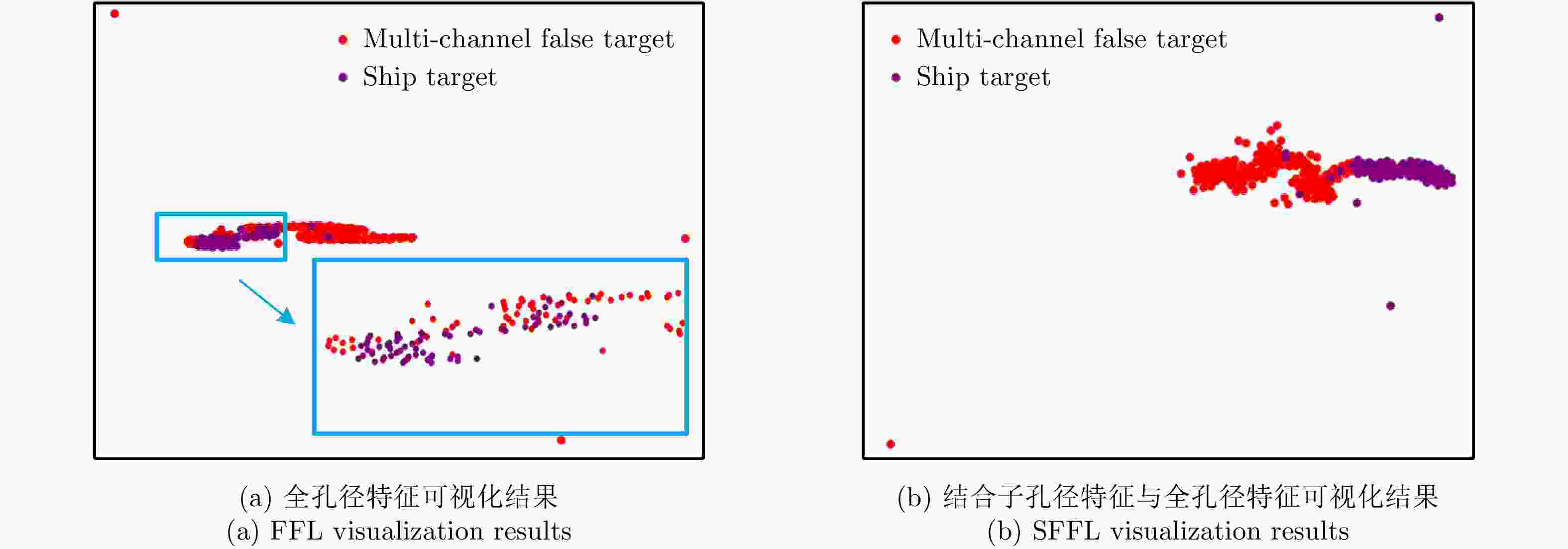

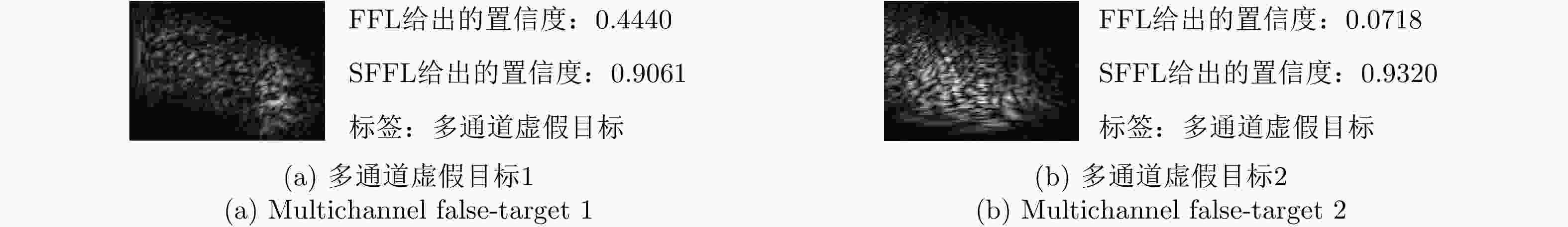

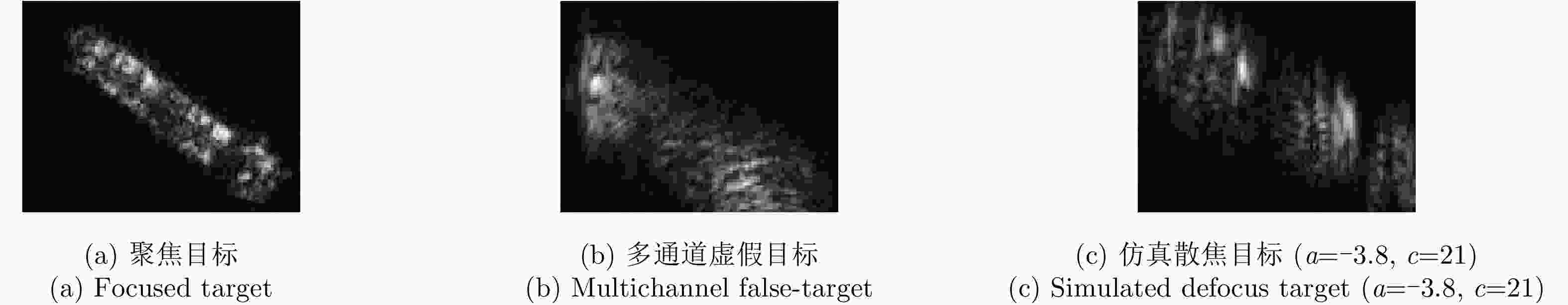

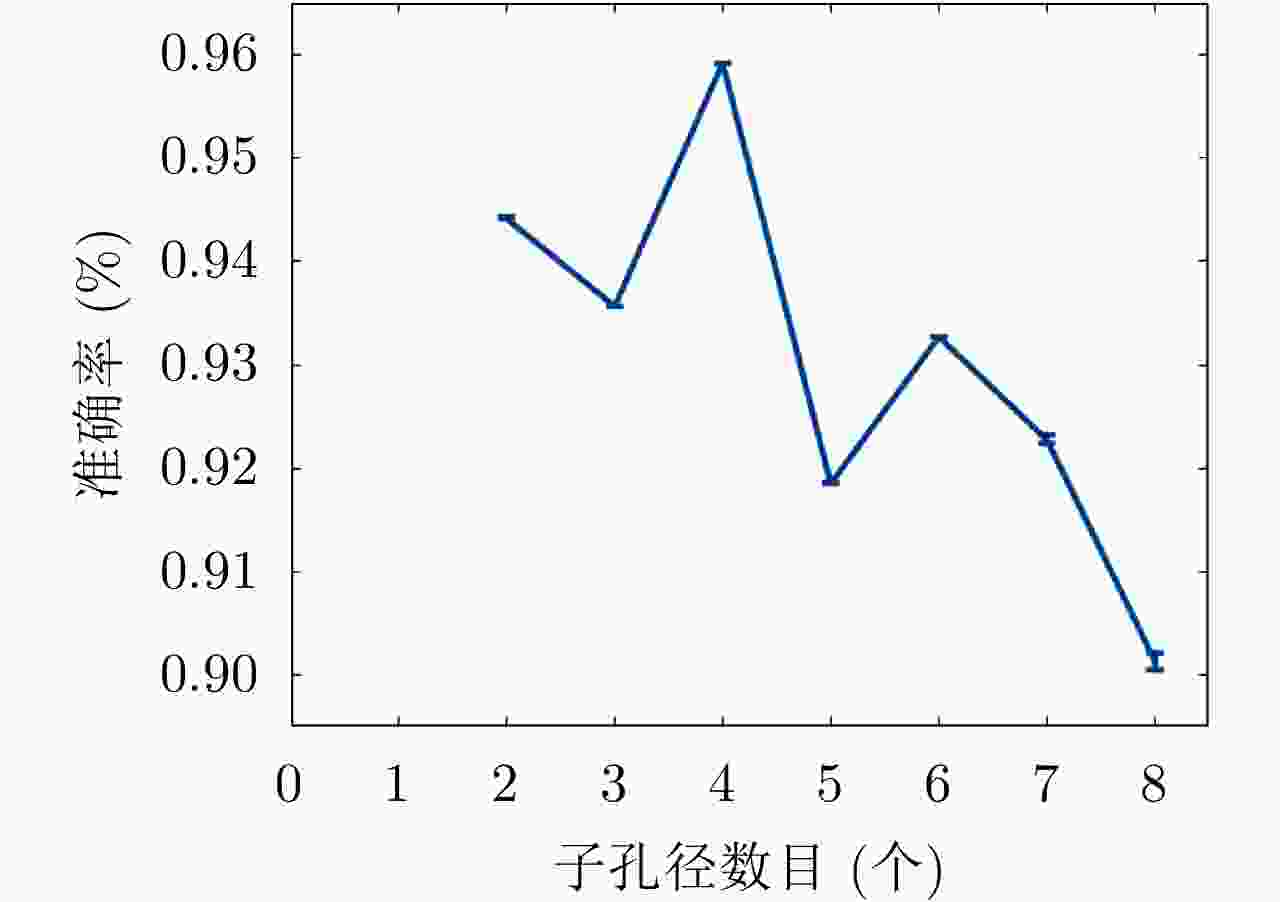

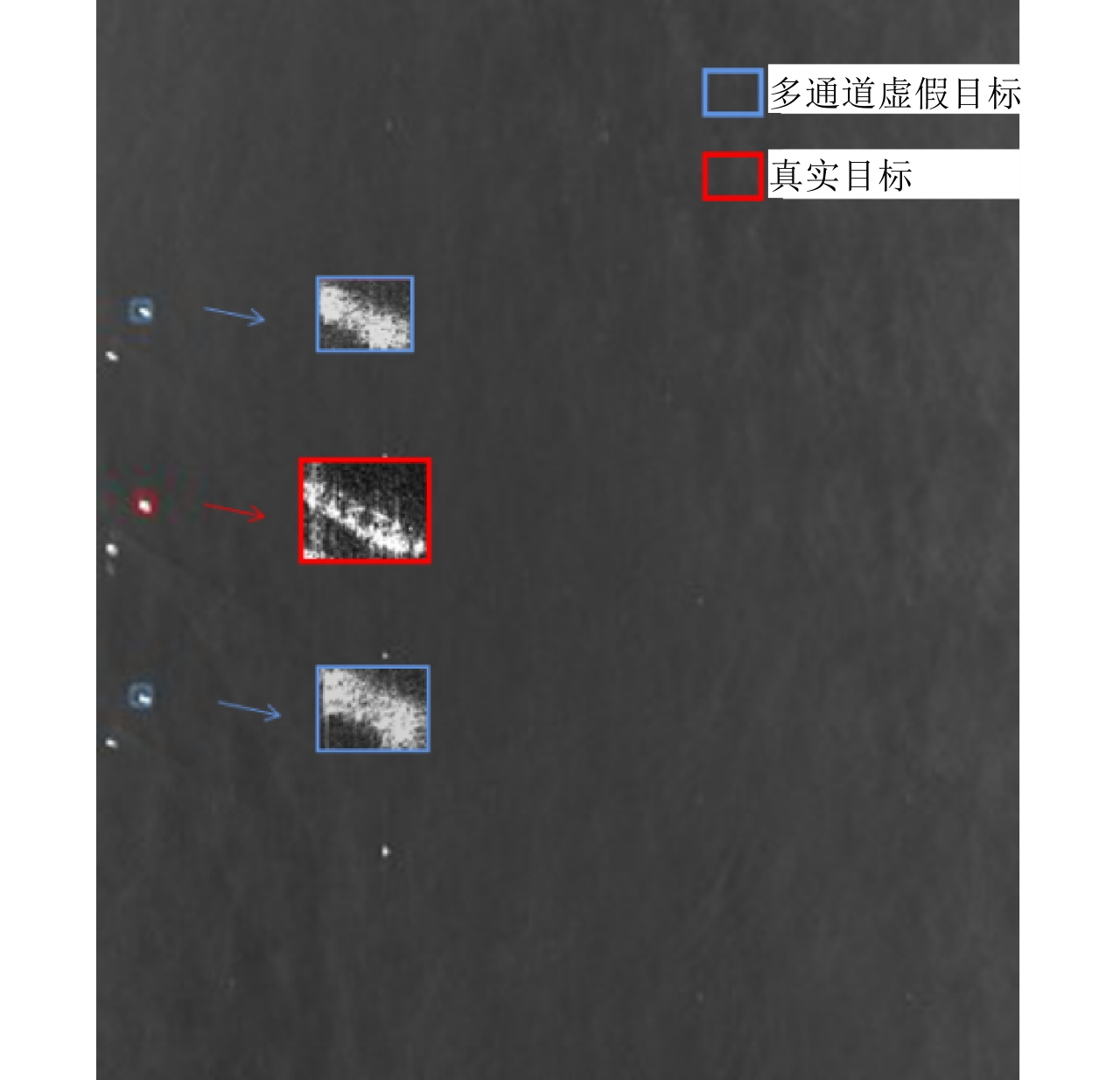

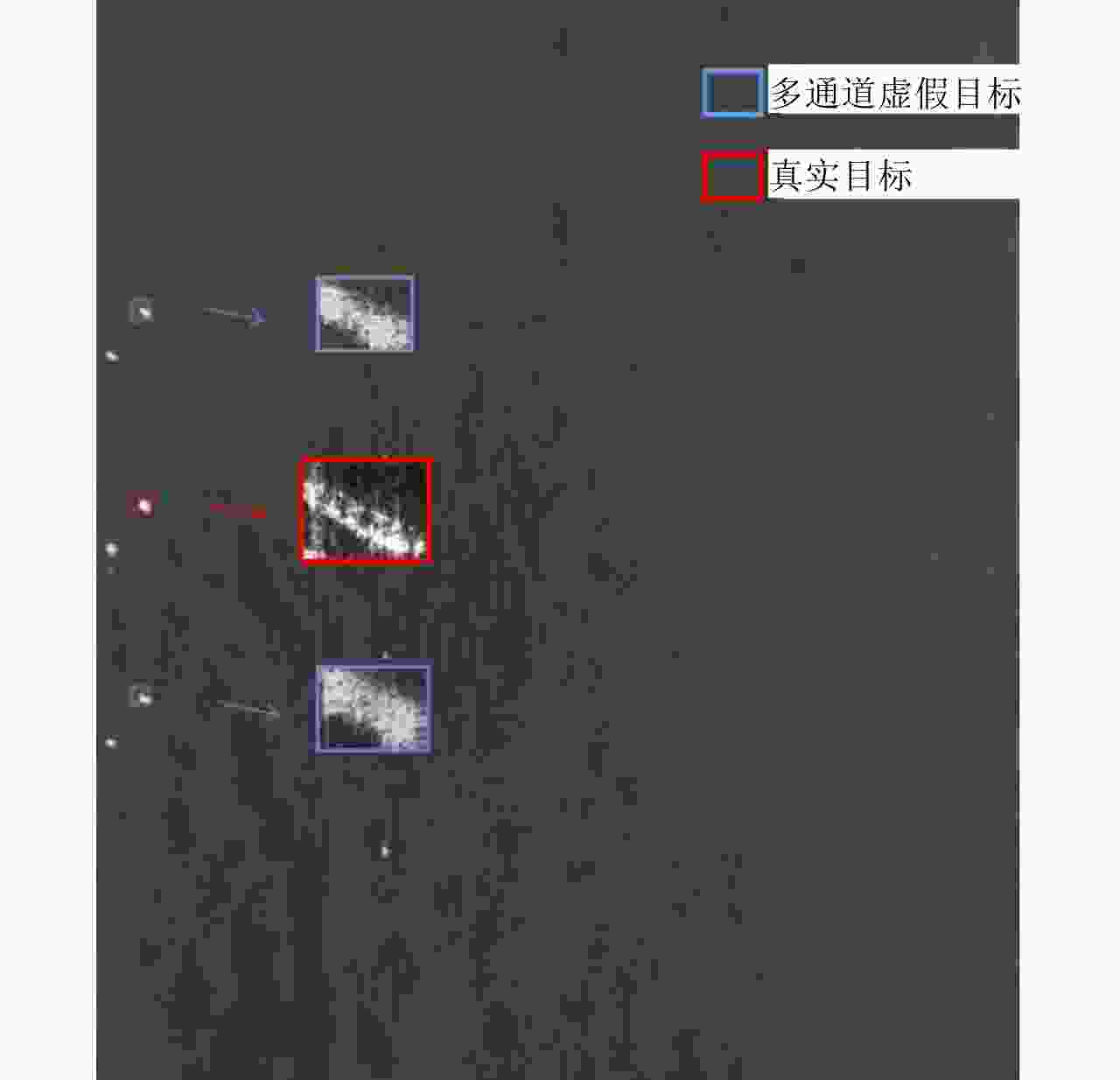

Abstract: False targets caused by multichannel Synthetic Aperture Radar (SAR) closely resemble defocused ships in both shape and texture, which makes the two difficult to tell apart in full-aperture SAR images. To reduce the false alarms caused by such targets, this paper proposes a multichannel SAR false-target discrimination method based on Sub-aperture and Full-aperture Feature Learning (SFFL). First, the amplitude of the complex SAR image is computed, and transfer learning is used to extract full-aperture features from the amplitude image. Next, the complex SAR image is decomposed into a series of sub-aperture images, and a Stacked Convolutional Auto-Encoder (SCAE) extracts sub-aperture features from them. Finally, the sub-aperture and full-aperture features are concatenated into joint features, which are used for classification. Experiments on Gaofen-3 (GF-3) Ultra-Fine Stripmap (UFS) SAR images show that the method effectively discriminates ship targets from multichannel false targets, with an accuracy 16.32% higher than that of the approach using only full-aperture features.
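The two image-domain inputs described in the abstract can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names, the number of sub-apertures (`n_sub`), the choice of equal non-overlapping azimuth bands, and the assumption that azimuth runs along axis 0 are all hypothetical, and practical steps such as spectral de-weighting or Doppler-centroid correction are omitted.

```python
import numpy as np


def amplitude_image(slc: np.ndarray) -> np.ndarray:
    """Full-aperture amplitude: the magnitude of each complex pixel."""
    return np.abs(slc)


def subaperture_decompose(slc: np.ndarray, n_sub: int = 3,
                          azimuth_axis: int = 0) -> np.ndarray:
    """Split the azimuth spectrum into n_sub equal, non-overlapping bands.

    Returns complex sub-aperture images with shape (n_sub, *slc.shape).
    Assumption: the spectrum is simply centred with fftshift; no
    de-weighting or Doppler-centroid estimation is performed.
    """
    spectrum = np.fft.fftshift(np.fft.fft(slc, axis=azimuth_axis),
                               axes=azimuth_axis)
    n = slc.shape[azimuth_axis]
    edges = np.linspace(0, n, n_sub + 1).astype(int)

    # Shape used to broadcast a 1-D band mask along the azimuth axis.
    mask_shape = [1] * slc.ndim
    mask_shape[azimuth_axis] = n

    looks = []
    for start, stop in zip(edges[:-1], edges[1:]):
        mask = np.zeros(n, dtype=bool)
        mask[start:stop] = True
        band = spectrum * mask.reshape(mask_shape)
        # Back to the image domain: one lower-resolution look per band.
        look = np.fft.ifft(np.fft.ifftshift(band, axes=azimuth_axis),
                           axis=azimuth_axis)
        looks.append(look)
    return np.stack(looks)
```

Because the bands partition the spectrum, the complex sub-aperture images sum back to the original image, which is a convenient sanity check on the decomposition.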


Table 1. Design of convolutional layers in each auto-encoder unit

| Auto-encoder unit | Channels | Kernel size |
| --- | --- | --- |
| 1 | 32 | 5×5 |
| 2 | 64 | 5×5 |
| 3 | 64 | 3×3 |
| 4 | 128 | 4×4 |
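To make the layer design in Table 1 concrete, the sketch below records it as a small configuration and counts the encoder convolution parameters. Only the channel counts and kernel sizes come from Table 1. The names `ConvSpec`, `SCAE_CONV_SPECS`, and `conv_parameter_counts` are hypothetical, and the sketch assumes that the units are chained (each unit takes the previous unit's output channels as input), that each convolution has one bias per output channel, and that the number of input channels to the first unit is chosen by the caller.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ConvSpec:
    unit: int          # auto-encoder unit index (Table 1)
    out_channels: int  # number of channels (Table 1)
    kernel: int        # square kernel side length (Table 1)


# Values transcribed from Table 1.
SCAE_CONV_SPECS: List[ConvSpec] = [
    ConvSpec(unit=1, out_channels=32, kernel=5),
    ConvSpec(unit=2, out_channels=64, kernel=5),
    ConvSpec(unit=3, out_channels=64, kernel=3),
    ConvSpec(unit=4, out_channels=128, kernel=4),
]


def conv_parameter_counts(specs: List[ConvSpec], in_channels: int) -> List[int]:
    """Weights plus biases of each encoder convolution.

    Assumption: units are chained, so the input channel count of each
    unit equals the output channel count of the previous one.
    """
    counts = []
    for spec in specs:
        weights = spec.kernel * spec.kernel * in_channels * spec.out_channels
        counts.append(weights + spec.out_channels)  # one bias per channel
        in_channels = spec.out_channels
    return counts
```

Calling `conv_parameter_counts(SCAE_CONV_SPECS, in_channels=3)`, for example, would treat three stacked sub-aperture images as the input; the value 3 is illustrative only.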

Table 2. SFFL algorithm

Input: complex SAR image $C(x,y)$

for all training samples $C(x,y)$ do:

1. Obtain the sub-aperture images $S(x,y)$ by sub-aperture decomposition.
2. Train the stacked convolutional auto-encoder network $F_2$ to obtain the sub-aperture features $\varphi_2(x,y)$:
   - for each sub-aperture image $S(x,y)$ do:
     - for each auto-encoder unit $l$ do:
       - Compute the output of the auto-encoder unit: $y_i^{l-1} = F_2\left(\sum\limits_{i=1}^{M_{l-1}} x_i^{l-1}, \theta_2\right)$
       - Compute the loss: $\mathrm{Loss} = \sum\limits_{i=1}^{M_{l-1}} \left\| x_i^{l-1} - y_i^{l-1} \right\|_{\mathrm{F}}^2$
       - Back-propagate and update the parameters $\theta_2$.
     - end for
   - end for
3. Compute the full-aperture amplitude image $I(x,y) = \sqrt{A(x,y)^2 + B(x,y)^2}$.
4. Apply transfer learning with the pre-trained ResNet-18 model $F_1$, fine-tuning the parameters $\theta_1$, to obtain the full-aperture features $\varphi_1(x,y)$.
5. Normalize the features $\varphi_1(x,y)$ and $\varphi_2(x,y)$.
6. Concatenate the features to obtain $\psi(x,y)$.
7. Compute the loss (cross-entropy).
8. Back-propagate and update the parameters $\theta$.

end for

Output: final model
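The reconstruction loss of step (2) and the normalization and concatenation of steps (5) and (6) can be sketched in a few lines. This is an illustration under stated assumptions rather than the paper's code: the excerpt does not say which normalization is used, so L2 normalization per sample is assumed here, and the function names and feature dimensions are hypothetical.

```python
import numpy as np


def frobenius_reconstruction_loss(inputs: np.ndarray, outputs: np.ndarray) -> float:
    """Sum over feature maps i of ||x_i - y_i||_F^2.

    Both arrays have shape (M, H, W): M feature maps of size H x W.
    """
    diff = np.asarray(inputs, dtype=float) - np.asarray(outputs, dtype=float)
    return float(np.sum(diff ** 2))


def l2_normalize(features: np.ndarray, eps: float = 1e-12) -> np.ndarray:
    """Scale each feature vector (last axis) to unit L2 norm.

    Assumption: the normalization type is not given in the source.
    """
    norms = np.linalg.norm(features, axis=-1, keepdims=True)
    return features / np.maximum(norms, eps)


def joint_feature(phi1: np.ndarray, phi2: np.ndarray) -> np.ndarray:
    """Normalize the full-aperture (phi1) and sub-aperture (phi2)
    features separately, then concatenate them along the feature axis."""
    return np.concatenate([l2_normalize(phi1), l2_normalize(phi2)], axis=-1)
```

Normalizing each branch before concatenation keeps one feature set from dominating the joint vector purely because of its scale, which is a common reason for including such a step.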

Table 3. The detailed information of GF-3 UFS SAR images used in the experiment

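| Parameter | Image 1 to Image 8 |
| --- | --- |
| Imaging mode | UFS |
| Product type | SLC |
| Product level | L1A |
| Orbit direction | Ascending |
| Polarization | DH |
| Slant-range resolution (m) | 2.5~5.0 |
| Azimuth resolution (m) | 3 |
| Swath width (km) | 30 |
| Pixel spacing [Rg×Az] (m) | 1.124×1.729 |
| Incidence angle (°) | 39.54~41.52 |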

Table 4. Confusion matrix of binary classification

| Prediction | Actual positive | Actual negative |
| --- | --- | --- |
| Predicted positive | TP | FP |
| Predicted negative | FN | TN |
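For reference, the accuracy reported in the later tables is presumably the standard quantity derived from the confusion-matrix entries; the paper's own definition is not included in this excerpt, so the usual form is given here as an assumption:

$$
\mathrm{Accuracy} = \frac{\mathrm{TP} + \mathrm{TN}}{\mathrm{TP} + \mathrm{FP} + \mathrm{FN} + \mathrm{TN}}
$$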

Table 5. Comparison of discrimination performance of different methods

| Method | Accuracy (%) | Runtime (s) |
| --- | --- | --- |
| SLCF+SVM | 90.56 | 0.8823 |
| SFL | 90.99 | 4.9824 |
| FFL | 83.69 | 4.2189 |
| SFFL | 96.57 | 5.3546 |

Table 6. Confusion matrix of different methods

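| Method | Prediction | Actual positive | Actual negative |
| --- | --- | --- | --- |
| SLCF+SVM | Predicted positive | 70 | 20 |
| SLCF+SVM | Predicted negative | 2 | 141 |
| SFL | Predicted positive | 68 | 17 |
| SFL | Predicted negative | 4 | 144 |
| FFL | Predicted positive | 69 | 35 |
| FFL | Predicted negative | 3 | 126 |
| SFFL | Predicted positive | 69 | 5 |
| SFFL | Predicted negative | 3 | 156 |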
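As a consistency check, the accuracies in Table 5 can be recomputed from the counts in Table 6. The short script below assumes the row and column layout of Table 4 (predicted-positive row holds TP and FP, predicted-negative row holds FN and TN); the names `CONFUSION` and `accuracy_percent` are illustrative.

```python
from typing import Dict, Tuple

# (TP, FP, FN, TN) per method, transcribed from Table 6.
CONFUSION: Dict[str, Tuple[int, int, int, int]] = {
    "SLCF+SVM": (70, 20, 2, 141),
    "SFL": (68, 17, 4, 144),
    "FFL": (69, 35, 3, 126),
    "SFFL": (69, 5, 3, 156),
}


def accuracy_percent(tp: int, fp: int, fn: int, tn: int) -> float:
    """Share of correctly classified samples, in percent."""
    return 100.0 * (tp + tn) / (tp + fp + fn + tn)


for method, counts in CONFUSION.items():
    print(f"{method}: {accuracy_percent(*counts):.2f}%")
```

Each method is evaluated on the same 233 test samples, and the recomputed values agree with the accuracy column of Table 5. The main gain of SFFL over FFL comes from the drop in false positives (35 to 5), while the false-negative count stays at 3.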

Table 7. Comparison of two methods after adding defocus data (%)

| Test data | FFL accuracy | SFFL accuracy |
| --- | --- | --- |
| GF-3 data | 83.69 | 96.57 |
| GF-3 data + simulated defocus data | 80.41 | 96.73 |
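The accuracy gaps between SFFL and FFL follow directly from Table 7, in percentage points:

$$
96.73 - 80.41 = 16.32, \qquad 96.57 - 83.69 = 12.88
$$

The 16.32% improvement quoted in the abstract therefore appears to correspond to the test set that includes the simulated defocus data, while the gap on the GF-3 data alone is 12.88 percentage points. This reading is inferred from the table values and is not stated explicitly in this excerpt.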

