SAR and Infrared Image Fusion in Complex Contourlet Domain Based on Joint Sparse Representation

DOI: 10.12000/JR17019 cstr: 32380.14.JR17019


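Abstract: Infrared and Synthetic Aperture Radar (SAR) images differ greatly in grayscale, and fused images of the two often do not agree well with human visual perception. To address these problems, we propose a method for fusing infrared and SAR images in the complex contourlet domain based on joint sparse representation. First, the infrared and SAR images are each decomposed by the complex contourlet transform. Then, the K-Singular Value Decomposition (K-SVD) method is used to obtain an over-complete dictionary for the low-frequency components of the two source images, and a joint dictionary is generated according to the joint sparse representation model. The sparse representation coefficients of the source images' low-frequency components over the joint dictionary are computed by Orthogonal Matching Pursuit (OMP). A choose-max strategy then selects between the coefficients of the two low-frequency components, and sparse reconstruction yields the fused low-frequency component. The high-frequency components are fused by combining two activity-level criteria, the visual sensitivity coefficient and the energy matching degree, so as to capture the rich detail of the source images. Finally, the fused image is obtained by the inverse complex contourlet transform. Compared with three classical fusion methods and with recently proposed methods based on the Non-Subsampled Contourlet Transform (NSCT) and on sparse representation, the proposed method effectively highlights the salient features of the source images and retains as much of their information as possible.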


Keywords:

- Image fusion
- SAR image
- Infrared image
- Complex contourlet transform
- Joint sparse representation
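The low-frequency step relies on K-SVD [13] to learn an over-complete dictionary, with OMP (an orthogonalised variant of the matching pursuit of [14]) as its sparse-coding stage. The NumPy sketch below shows that alternating loop on column-stacked patch vectors. It is an illustration under stated assumptions, not the authors' code: the function names `omp` and `ksvd`, the random initialisation, the sparsity level `k`, the number of atoms and the number of iterations are hypothetical choices, and the paper's patch size and training set (for example, patches drawn from both low-frequency subbands) are not specified here.

```python
import numpy as np


def omp(D, y, k, tol=1e-10):
    """Orthogonal Matching Pursuit: greedy k-sparse code of y over D.

    Assumes the columns (atoms) of D have unit l2 norm.
    """
    residual = y.astype(float).copy()
    support = []
    coef = np.zeros(D.shape[1])
    for _ in range(k):
        # Pick the atom most correlated with the current residual.
        j = int(np.argmax(np.abs(D.T @ residual)))
        if j in support:  # residual is already orthogonal to the chosen atoms
            break
        support.append(j)
        # Re-fit all selected coefficients jointly (the "orthogonal" step).
        sol, *_ = np.linalg.lstsq(D[:, support], y, rcond=None)
        residual = y - D[:, support] @ sol
        if np.linalg.norm(residual) < tol:
            break
    if support:
        coef[support] = sol
    return coef


def ksvd(Y, n_atoms, k, n_iter=10, seed=0):
    """Learn D (n x n_atoms) so each column of Y is roughly k-sparse over D.

    Requires n_atoms <= number of training columns (used for initialisation).
    """
    rng = np.random.default_rng(seed)
    m = Y.shape[1]
    # Hypothetical initialisation: randomly chosen training columns, unit-normalised.
    D = Y[:, rng.choice(m, n_atoms, replace=False)].astype(float)
    D /= np.linalg.norm(D, axis=0, keepdims=True) + 1e-12
    X = np.zeros((n_atoms, m))
    for _ in range(n_iter):
        # 1) Sparse-coding stage: code every training column with OMP.
        X = np.column_stack([omp(D, Y[:, i], k) for i in range(m)])
        # 2) Dictionary-update stage: refine one atom and its coefficients at a time.
        for j in range(n_atoms):
            users = np.nonzero(X[j])[0]
            if users.size == 0:
                continue  # unused atom: this sketch simply leaves it unchanged
            X[j, users] = 0.0
            E = Y[:, users] - D @ X[:, users]  # residual without atom j
            U, S, Vt = np.linalg.svd(E, full_matrices=False)
            D[:, j] = U[:, 0]  # best rank-1 fit gives the new atom
            X[j, users] = S[0] * Vt[0]  # and its updated coefficients
    return D, X


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    Y = rng.standard_normal((16, 200))  # stand-in for 4x4 low-frequency patches
    D, X = ksvd(Y, n_atoms=32, k=3, n_iter=5)
    err = np.linalg.norm(Y - D @ X) / np.linalg.norm(Y)
    print(D.shape, X.shape, round(float(err), 3))
```

Each atom update replaces the atom's current rank-1 contribution with the best rank-1 approximation of the remaining error, so the reconstruction error cannot increase during the update stage.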

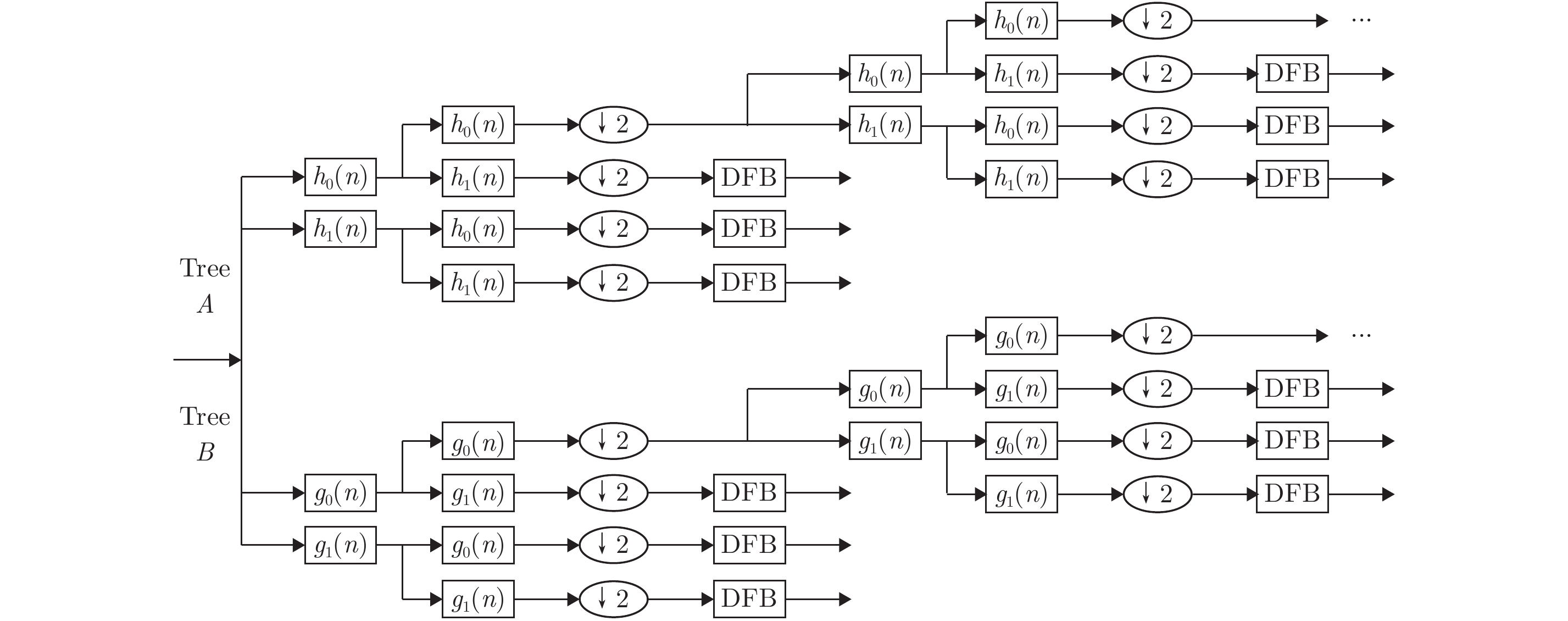
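The joint sparse representation step can be illustrated with the JSM-1 model of distributed compressed sensing [12], in which a pair of co-located patches is written as a shared common component plus one innovation component per image. The sketch below builds a joint dictionary from a learned dictionary `D`, codes each patch pair with OMP, and fuses with a per-atom choose-max. It is a minimal sketch under assumptions: the block layout `[[D, D, 0], [D, 0, D]]`, the way common and innovation coefficients are combined before selection, and the helper names `joint_dictionary` and `fuse_low_frequency` are illustrative choices, not details confirmed by the paper.

```python
import numpy as np


def omp(D, y, k, tol=1e-10):
    """Greedy k-sparse code of y over D (columns assumed unit-norm)."""
    residual = y.astype(float).copy()
    support, coef = [], np.zeros(D.shape[1])
    for _ in range(k):
        j = int(np.argmax(np.abs(D.T @ residual)))
        if j in support:
            break
        support.append(j)
        sol, *_ = np.linalg.lstsq(D[:, support], y, rcond=None)
        residual = y - D[:, support] @ sol
        if np.linalg.norm(residual) < tol:
            break
    if support:
        coef[support] = sol
    return coef


def joint_dictionary(D):
    """Joint dictionary [[D, D, 0], [D, 0, D]]: common atoms plus per-image innovation atoms."""
    Z = np.zeros_like(D)
    return np.block([[D, D, Z], [D, Z, D]])


def fuse_low_frequency(P1, P2, D, k):
    """Fuse column-stacked patches P1, P2 (n x m) of the two low-frequency subbands.

    Hypothetical choose-max rule: x1 ~ D(s_c + s_1) and x2 ~ D(s_c + s_2);
    keep, atom by atom, the coefficient with the larger magnitude.
    """
    J = joint_dictionary(D)
    norms = np.linalg.norm(J, axis=0)  # common atoms have norm sqrt(2) for unit-norm D
    Jn = J / norms  # normalise so OMP's greedy selection is not biased
    fused = np.empty(P1.shape, dtype=float)
    for i in range(P1.shape[1]):
        y = np.concatenate([P1[:, i], P2[:, i]])
        s = omp(Jn, y, k) / norms  # coefficients with respect to the unnormalised J
        s_c, s_1, s_2 = np.split(s, 3)
        a1, a2 = s_c + s_1, s_c + s_2
        a_f = np.where(np.abs(a1) >= np.abs(a2), a1, a2)  # choose-max per atom
        fused[:, i] = D @ a_f  # sparse reconstruction of the fused patch
    return fused
```

Patch extraction from the low-frequency subband and reassembly of the fused patches (for example, by averaging overlapping patches) are left out to keep the sketch short.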

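For the high-frequency subbands, the method combines a visual sensitivity coefficient with an energy matching degree, whose exact definitions are not given in this abstract. As a hedged stand-in for the energy-matching part only, the sketch below uses the classic local-energy match measure and select-or-average rule of Burt and Kolczynski, applied to the magnitudes of complex subband coefficients. The 3x3 window (`r=1`), the threshold `alpha=0.75` and the function names are assumptions, and the visual sensitivity term is not modelled.

```python
import numpy as np


def local_sum(A, r=1):
    """Sum of a real array over a (2r+1) x (2r+1) window, with edge padding."""
    P = np.pad(A, r, mode="edge")
    H, W = A.shape
    out = np.zeros((H, W))
    for dy in range(2 * r + 1):
        for dx in range(2 * r + 1):
            out += P[dy:dy + H, dx:dx + W]
    return out


def fuse_high_frequency(CA, CB, alpha=0.75, r=1):
    """Fuse two (possibly complex) high-frequency subbands with a Burt-style match rule."""
    magA, magB = np.abs(CA), np.abs(CB)
    EA = local_sum(magA ** 2, r)  # local energy (salience) of each source
    EB = local_sum(magB ** 2, r)
    # Energy matching degree in [0, 1]; 1 means locally similar activity.
    M = 2.0 * local_sum(magA * magB, r) / (EA + EB + 1e-12)
    a_wins = EA >= EB
    # Low match: take the coefficient of the more energetic source.
    selected = np.where(a_wins, CA, CB)
    # High match: weighted average, leaning toward the more energetic source.
    w_min = 0.5 - 0.5 * (1.0 - M) / (1.0 - alpha)
    wA = np.where(a_wins, 1.0 - w_min, w_min)
    averaged = wA * CA + (1.0 - wA) * CB
    return np.where(M > alpha, averaged, selected)
```

Selecting where the two sources disagree keeps the stronger edge or texture, while averaging where they agree reduces noise; this is the general motivation for match-based rules, not a claim about the paper's exact weighting.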

Table 1. Quantitative evaluation of six fusion methods

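| Image group | Fusion method | IE | MI | CC | SF | AG | SD | Time (s) |
|---|---|---|---|---|---|---|---|---|
| Group 1 | LP method | 6.839 | 11.405 | 0.687 | 32.852 | 22.406 | 35.499 | 2.126 |
| | WT method | 6.724 | 11.119 | 0.869 | 25.667 | 17.947 | 29.863 | 1.827 |
| | NSCT method | 6.821 | 11.295 | 0.813 | 26.881 | 18.373 | 33.828 | 50.332 |
| | DT-CWT method | 6.759 | 11.236 | 0.719 | 25.691 | 17.881 | 32.614 | 3.765 |
| | Method in Ref. [11] | 6.887 | 11.411 | 0.788 | 24.312 | 16.955 | 32.022 | 67.782 |
| | Proposed method | 7.091 | 11.827 | 0.829 | 30.276 | 20.294 | 43.965 | 27.232 |
| Group 2 | LP method | 6.973 | 12.070 | 0.739 | 29.114 | 21.005 | 33.825 | 1.728 |
| | WT method | 7.097 | 12.181 | 0.883 | 25.643 | 19.298 | 35.330 | 0.898 |
| | NSCT method | 6.895 | 11.808 | 0.729 | 23.456 | 17.272 | 33.062 | 39.590 |
| | DT-CWT method | 6.902 | 11.848 | 0.784 | 22.633 | 16.745 | 32.184 | 3.371 |
| | Method in Ref. [11] | 7.140 | 12.843 | 0.7833 | 30.390 | 22.565 | 37.019 | 53.945 |
| | Proposed method | 7.305 | 12.615 | 0.869 | 28.648 | 20.887 | 44.539 | 22.733 |
| Group 3 | LP method | 6.633 | 12.141 | 0.834 | 28.629 | 18.687 | 33.825 | 1.351 |
| | WT method | 6.797 | 12.1241 | 0.803 | 25.643 | 19.298 | 35.330 | 0.557 |
| | NSCT method | 6.801 | 11.973 | 0.864 | 26.105 | 17.539 | 40.052 | 26.433 |
| | DT-CWT method | 6.665 | 11.723 | 0.846 | 23.539 | 16.745 | 32.184 | 1.371 |
| | Method in Ref. [11] | 6.796 | 12.234 | 0.812 | 25.518 | 17.244 | 37.274 | 37.274 |
| | Proposed method | 6.864 | 11.8401 | 0.836 | 28.689 | 18.982 | 40.084 | 18.613 |

IE: information entropy; MI: mutual information; CC: correlation coefficient; SF: spatial frequency; AG: average gradient; SD: standard deviation; LP: Laplacian pyramid; WT: wavelet transform; DT-CWT: dual-tree complex wavelet transform.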
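Table 1 compares the methods with six indices. The NumPy functions below are common textbook forms of these indices, offered as a sketch under assumptions: the paper's exact formulas (for instance, whether MI is summed over both sources, as done in the hypothetical `evaluate` helper, and how CC is aggregated) are not stated in this section, so these functions are not guaranteed to reproduce the values in Table 1. Inputs are assumed to be 8-bit grey-level arrays.

```python
import numpy as np


def entropy(img, levels=256):
    """IE: Shannon entropy (bits) of the grey-level histogram."""
    hist = np.bincount(img.astype(np.int64).ravel(), minlength=levels).astype(float)
    p = hist[hist > 0] / hist.sum()
    return float(-(p * np.log2(p)).sum())


def standard_deviation(img):
    """SD: spread of grey levels around the mean (a contrast measure)."""
    return float(np.std(img.astype(float)))


def spatial_frequency(img):
    """SF = sqrt(RF^2 + CF^2) from horizontal and vertical first differences."""
    f = img.astype(float)
    rf = np.sqrt(np.mean(np.diff(f, axis=1) ** 2))  # row frequency
    cf = np.sqrt(np.mean(np.diff(f, axis=0) ** 2))  # column frequency
    return float(np.hypot(rf, cf))


def average_gradient(img):
    """AG: mean of sqrt((dx^2 + dy^2) / 2) over the image."""
    f = img.astype(float)
    dx = np.diff(f, axis=1)[:-1, :]
    dy = np.diff(f, axis=0)[:, :-1]
    return float(np.mean(np.sqrt((dx ** 2 + dy ** 2) / 2.0)))


def correlation(a, f):
    """CC: Pearson correlation between a source image and the fused image."""
    return float(np.corrcoef(a.astype(float).ravel(), f.astype(float).ravel())[0, 1])


def mutual_information(a, f, levels=256):
    """MI (bits) between a source image and the fused image from their joint histogram."""
    joint, _, _ = np.histogram2d(a.ravel(), f.ravel(), bins=levels,
                                 range=[[0, levels], [0, levels]])
    pxy = joint / joint.sum()
    px = pxy.sum(axis=1, keepdims=True)
    py = pxy.sum(axis=0, keepdims=True)
    nz = pxy > 0
    return float((pxy[nz] * np.log2(pxy[nz] / (px @ py)[nz])).sum())


def evaluate(a, b, f):
    """Hypothetical aggregation: MI summed and CC averaged over the two sources."""
    return {
        "IE": entropy(f),
        "MI": mutual_information(a, f) + mutual_information(b, f),
        "CC": 0.5 * (correlation(a, f) + correlation(b, f)),
        "SF": spatial_frequency(f),
        "AG": average_gradient(f),
        "SD": standard_deviation(f),
    }
```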

[1] Chen Lei, Yang Feng-bao, Wang Zhi-she, et al. Mixed fusion algorithm of SAR and visible images with feature level and pixel[J]. Opto-Electronic Engineering, 2014, 41(3): 55–60.

[2] Zeng Xian-wei, Fang Yang-wang, Wu You-li, et al. A new guidance law based on information fusion and optimal control of structure stochastic jump system[C]. Proceedings of 2007 IEEE International Conference on Automation and Logistics, Jinan, China, 2007: 624–627.

[3] Ye Chun-qi, Wang Bao-shu, and Miao Qi-guang. Fusion algorithm of SAR and panchromatic images based on region segmentation in NSCT domain[J]. Systems Engineering and Electronics, 2010, 32(3): 609–613.

[4] Xu Xing, Li Ying, Sun Jin-qiu, et al. An algorithm for image fusion based on curvelet transform[J]. Journal of Northwestern Polytechnical University, 2008, 26(3): 395–398.

[5] Shi Zhi, Zhang Zhuo, and Yue Yan-gang. Adaptive image fusion algorithm based on shearlet transform[J]. Acta Photonica Sinica, 2013, 42(1): 115–120. DOI: 10.3788/gzxb

[6] Liu Jian, Lei Ying-jie, Xing Ya-qiong, et al. Fusion technique for SAR and gray visible image based on hidden Markov model in non-subsample shearlet transform domain[J]. Control and Decision, 2016, 31(3): 453–457.

[7] Chen Di-peng and Li Qi. The use of complex contourlet transform on fusion scheme[C]. Proceedings of World Academy of Science, Engineering and Technology, Prague, Czech Republic, 2005: 342–347.

[8] Wu Yi-quan, Wan Hong, and Ye Zhi-long. Fabric defect image noise reduction based on complex contourlet transform and anisotropic diffusion[J]. CAAI Transactions on Intelligent Systems, 2013, 8(3): 214–219.

[9] Wei Qi, Bioucas-Dias J, Dobigeon N, et al. Hyperspectral and multispectral image fusion based on a sparse representation[J]. IEEE Transactions on Geoscience and Remote Sensing, 2015, 53(7): 3658–3668. DOI: 10.1109/TGRS.2014.2381272

[10] Yu Nan-nan, Qiu Tian-shuang, Bi Feng, et al. Image features extraction and fusion based on joint sparse representation[J]. IEEE Journal of Selected Topics in Signal Processing, 2011, 5(5): 1074–1082. DOI: 10.1109/JSTSP.2011.2112332

[11] Wang Jun, Peng Jin-ye, Feng Xiao-yi, et al. Image fusion with nonsubsampled contourlet transform and sparse representation[J]. Journal of Electronic Imaging, 2013, 22(4): 043019. DOI: 10.1117/1.JEI.22.4.043019

[12] Duarte M F, Sarvotham S, Baron D, et al. Distributed compressed sensing of jointly sparse signals[C]. Conference Record of the Thirty-Ninth Asilomar Conference on Signals, Systems and Computers, Pacific Grove, CA, USA, 2005: 1537–1541.

[13] Aharon M, Elad M, and Bruckstein A. K-SVD: An algorithm for designing overcomplete dictionaries for sparse representation[J]. IEEE Transactions on Signal Processing, 2006, 54(11): 4311–4322. DOI: 10.1109/TSP.2006.881199

[14] Mallat S G and Zhang Zhi-feng. Matching pursuits with time-frequency dictionaries[J]. IEEE Transactions on Signal Processing, 1993, 41(12): 3397–3415. DOI: 10.1109/78.258082

[15] Kong Wei-wei and Lei Ying-jie. Technique for image fusion based on NSST domain and human visual characteristics[J]. Journal of Harbin Engineering University, 2013, 34(6): 777–782.

[16] Fan Xin-nan, Zhang Ji, Li Min, et al. A multi-sensor image fusion algorithm based on local feature difference[J]. Journal of Optoelectronics·Laser, 2014, 25(10): 2025–2032.
