
Journal of Radars, 2026, Online First

Citation: XU Congan, GAO Long, ZHANG Chi, et al. Maritime multimodal data resource system—infrared-visible dual-modal dataset for ship detection[J]. Journal of Radars, in press. doi: 10.12000/JR25144

Maritime Multimodal Data Resource System—Infrared-visible Dual-modal Dataset for Ship Detection

DOI: 10.12000/JR25144 CSTR: 32380.14.JR25144


Abstract

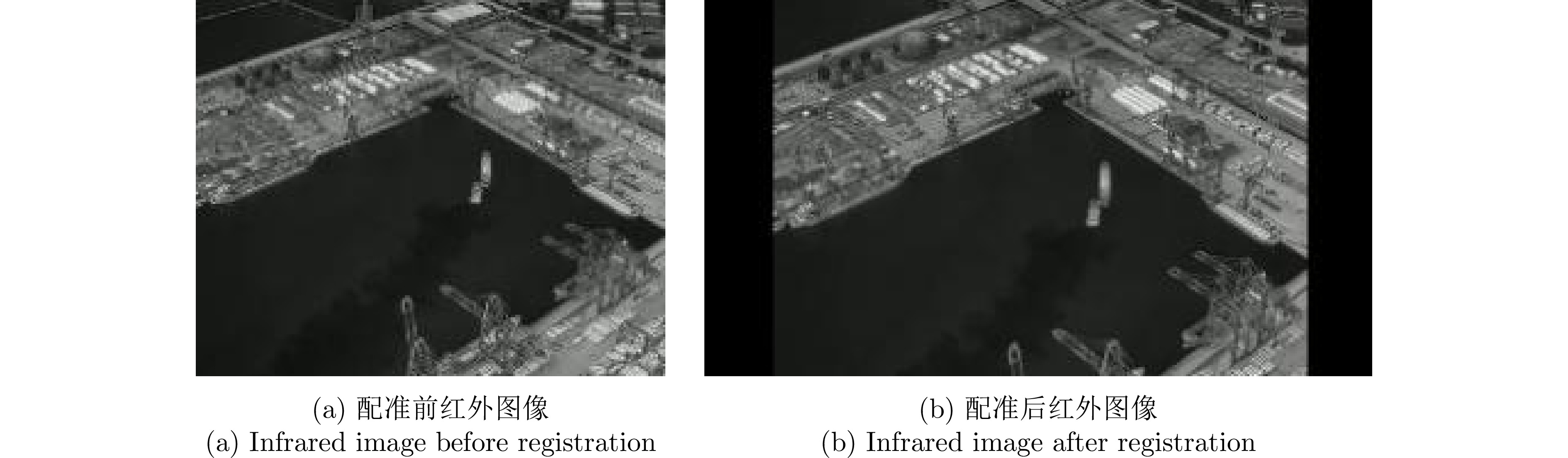

A maritime multimodal data resource system provides a foundation for multisensor collaborative detection using radar, Synthetic Aperture Radar (SAR), and electro-optical sensors, enabling fine-grained target perception. Such systems are essential for advancing the practical application of detection algorithms and improving maritime target surveillance capabilities. To this end, this study constructs a maritime multimodal data resource system from multisource data collected in the sea area near a port in the Bohai Sea. The data were acquired with SAR, radar, visible-light cameras, infrared cameras, and other sensors mounted on shore-based and airborne platforms, and were labeled through automatic association and registration followed by manual correction. Multiple task-oriented multimodal associated datasets were then compiled to meet the requirements of different tasks. This paper focuses on one subset of the overall resource system, the Dual-Modal Ship Detection (DMSD) dataset, which consists exclusively of visible-light and infrared image pairs. The dataset contains 2163 registered image pairs, with intermodal alignment achieved through an affine transformation. All images were collected in real maritime environments and cover diverse sea conditions and backgrounds, including cloud, rain, fog, and backlighting. The dataset was evaluated with representative algorithms, including YOLO and CFT. In the experiments, YOLOv8 and CFT achieve an mAP@50 of approximately 0.65 and 0.63, respectively, on the dataset, demonstrating that it can effectively support research on optimizing bimodal fusion strategies and enhancing detection robustness in complex maritime scenarios.
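The abstract states only that infrared and visible images are aligned with an affine transformation; it does not say how the transform parameters are obtained. As a minimal sketch, assuming a set of matched control points (hypothetical here) between an infrared image and its visible counterpart, the six affine parameters can be estimated by least squares and the infrared image resampled into the visible frame. The helper names `estimate_affine`, `apply_affine`, and `warp_nearest` are illustrative, not the authors' code, and nearest-neighbour sampling is used only to keep the example dependent on NumPy alone.

```python
import numpy as np


def estimate_affine(src_pts: np.ndarray, dst_pts: np.ndarray) -> np.ndarray:
    """Least-squares 2x3 affine matrix mapping src_pts (N x 2) onto dst_pts (N x 2).

    Requires at least three non-collinear point correspondences.
    """
    src = np.asarray(src_pts, dtype=float)
    dst = np.asarray(dst_pts, dtype=float)
    if src.shape != dst.shape or src.ndim != 2 or src.shape[1] != 2 or src.shape[0] < 3:
        raise ValueError("need >= 3 matching point pairs, each array of shape (N, 2)")
    # Homogeneous design matrix: each row is [x, y, 1].
    design = np.hstack([src, np.ones((src.shape[0], 1))])
    # Solve design @ params ~= dst; params is 3 x 2, its transpose is the 2 x 3 affine matrix.
    params, *_ = np.linalg.lstsq(design, dst, rcond=None)
    return params.T


def apply_affine(matrix: np.ndarray, pts: np.ndarray) -> np.ndarray:
    """Map N x 2 points (x, y) through a 2x3 affine matrix."""
    pts = np.asarray(pts, dtype=float)
    return pts @ matrix[:, :2].T + matrix[:, 2]


def warp_nearest(image: np.ndarray, matrix: np.ndarray, out_shape: tuple) -> np.ndarray:
    """Resample `image` into a target frame of size out_shape = (h, w).

    `matrix` maps source (e.g. infrared) pixel coords to target (e.g. visible) coords.
    Each target pixel is pulled from the nearest source pixel via the inverse map;
    target pixels that fall outside the source image are set to zero.
    """
    h, w = out_shape
    # Invert the affine map by promoting it to a 3x3 homogeneous matrix.
    full = np.vstack([matrix, [0.0, 0.0, 1.0]])
    inv = np.linalg.inv(full)[:2]
    ys, xs = np.mgrid[0:h, 0:w]
    target = np.stack([xs.ravel(), ys.ravel()], axis=1).astype(float)
    src = apply_affine(inv, target)
    sx = np.rint(src[:, 0]).astype(int)
    sy = np.rint(src[:, 1]).astype(int)
    valid = (sx >= 0) & (sx < image.shape[1]) & (sy >= 0) & (sy < image.shape[0])
    out = np.zeros((h * w,) + image.shape[2:], dtype=image.dtype)
    out[valid] = image[sy[valid], sx[valid]]
    return out.reshape((h, w) + image.shape[2:])


if __name__ == "__main__":
    # Hypothetical control points in an infrared image, as (x, y) pixel coordinates.
    ir_pts = np.array([[10.0, 20.0], [200.0, 30.0], [50.0, 180.0], [220.0, 210.0]])
    # A made-up transform, used only to synthesise matching visible-image points.
    true_m = np.array([[1.1, 0.05, 12.0], [-0.03, 1.08, -5.0]])
    vis_pts = apply_affine(true_m, ir_pts)
    est = estimate_affine(ir_pts, vis_pts)
    print(np.allclose(est, true_m))  # True: exact correspondences recover the transform
```

Three non-collinear point pairs determine an affine map exactly; additional pairs let least squares average out matching errors. In a production pipeline, OpenCV's `cv2.estimateAffine2D` (which adds RANSAC outlier rejection) and `cv2.warpAffine` (with bilinear interpolation) would be the more usual choices.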




- Figure 1. RGB images and infrared images of ships

- Figure 2. Images under different sea conditions and target conditions

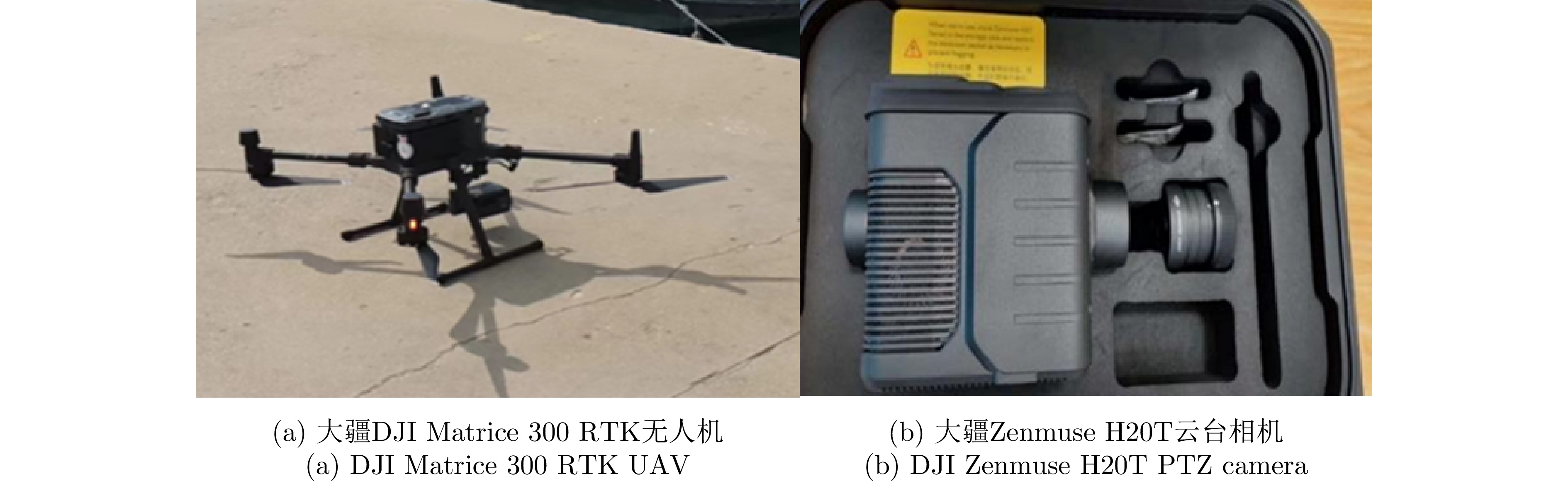

- Figure 3. Image acquisition equipment

- Figure 4. Comparison of infrared images before and after registration

- Figure 5. Interface of the LabelImg software

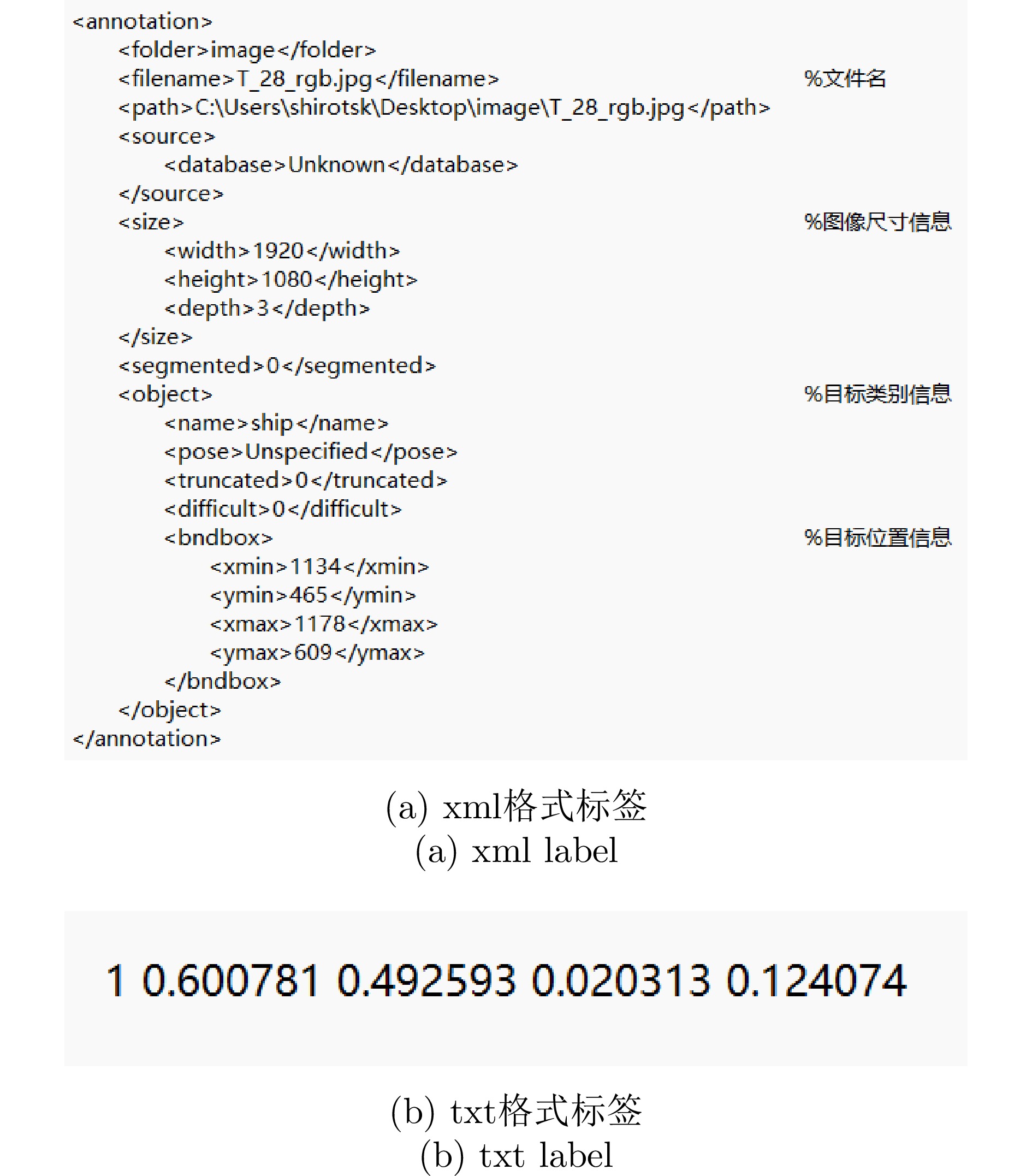

- Figure 6. Two label formats

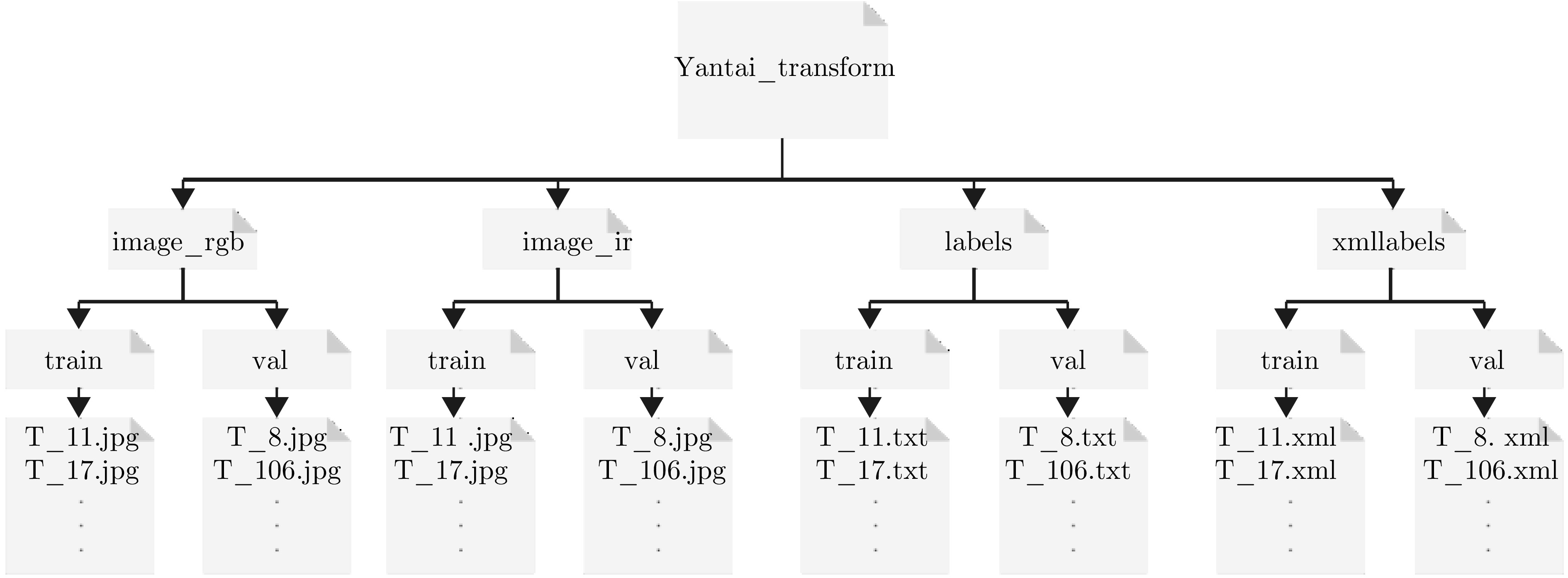

- Figure 7. Dataset folder structure
