| [1] |

ABBASI R, BASHIR A K, ALYAMANI H J, et al. Lidar point cloud compression, processing and learning for autonomous driving[J]. IEEE Transactions on Intelligent Transportation Systems, 2023, 24(1): 962–979. doi: 10.1109/TITS.2022.3167957. |

| [2] |

QURESHI A H, ALALOUL W S, MURTIYOSO A, et al. Smart rebar progress monitoring using 3D point cloud model[J]. Expert Systems with Applications, 2024, 249: 123562. doi: 10.1016/j.eswa.2024.123562. |

| [3] |

PETKOV P. Object detection and recognition with PointPillars in LiDAR point clouds-comparisions[R]. NSC-614-5849, 2024.

|

| [4] |

龚靖渝, 楼雨京, 柳奉奇, 等. 三维场景点云理解与重建技术[J]. 中国图象图形学报, 2023, 28(6): 1741–1766. doi: 10.11834/jig.230004. GONG Jingyu, LOU Yujing, LIU Fengqi, et al. Scene point cloud understanding and reconstruction technologies in 3D space[J]. Journal of Image and Graphics, 2023, 28(6): 1741–1766. doi: 10.11834/jig.230004. |

| [5] |

ZHANG Bo, WANG Haosen, YOU Suilian, et al. A small-size 3D object detection network for analyzing the sparsity of raw LiDAR point cloud[J]. Journal of Russian Laser Research, 2023, 44(6): 646–655. doi: 10.1007/s10946-023-10173-3. |

| [6] |

LI Shaorui, ZHU Zhenchang, DENG Weitang, et al. Estimation of aboveground biomass of different vegetation types in mangrove forests based on UAV remote sensing[J]. Sustainable Horizons, 2024, 11: 100100. doi: 10.1016/j.horiz.2024.100100. |

| [7] |

|

| [8] |

GUO Chi, LIU Yang, LUO Yarong, et al. Research progress in the application of image semantic information in visual SLAM[J]. Acta Geodaetica et Cartographica Sinica, 2024, 53(6): 1057–1076. doi: 10.11947/j.AGCS.2024.20230259. |

| [9] |

ZHANG L, VAN OOSTEROM P, and LIU H. Visualization of point cloud models in mobile augmented reality using continuous level of detail method[C]. International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, London, UK, 2020: 167–170. doi: 10.5194/isprs-archives-XLIV-4-W1-2020-167-2020. |

| [10] |

POLIYAPRAM V, WANG Weimin, and NAKAMURA R. A point-wise LiDAR and image multimodal fusion network (PMNet) for aerial point cloud 3D semantic segmentation[J]. Remote Sensing, 2019, 11(24): 2961. doi: 10.3390/rs11242961. |

| [11] |

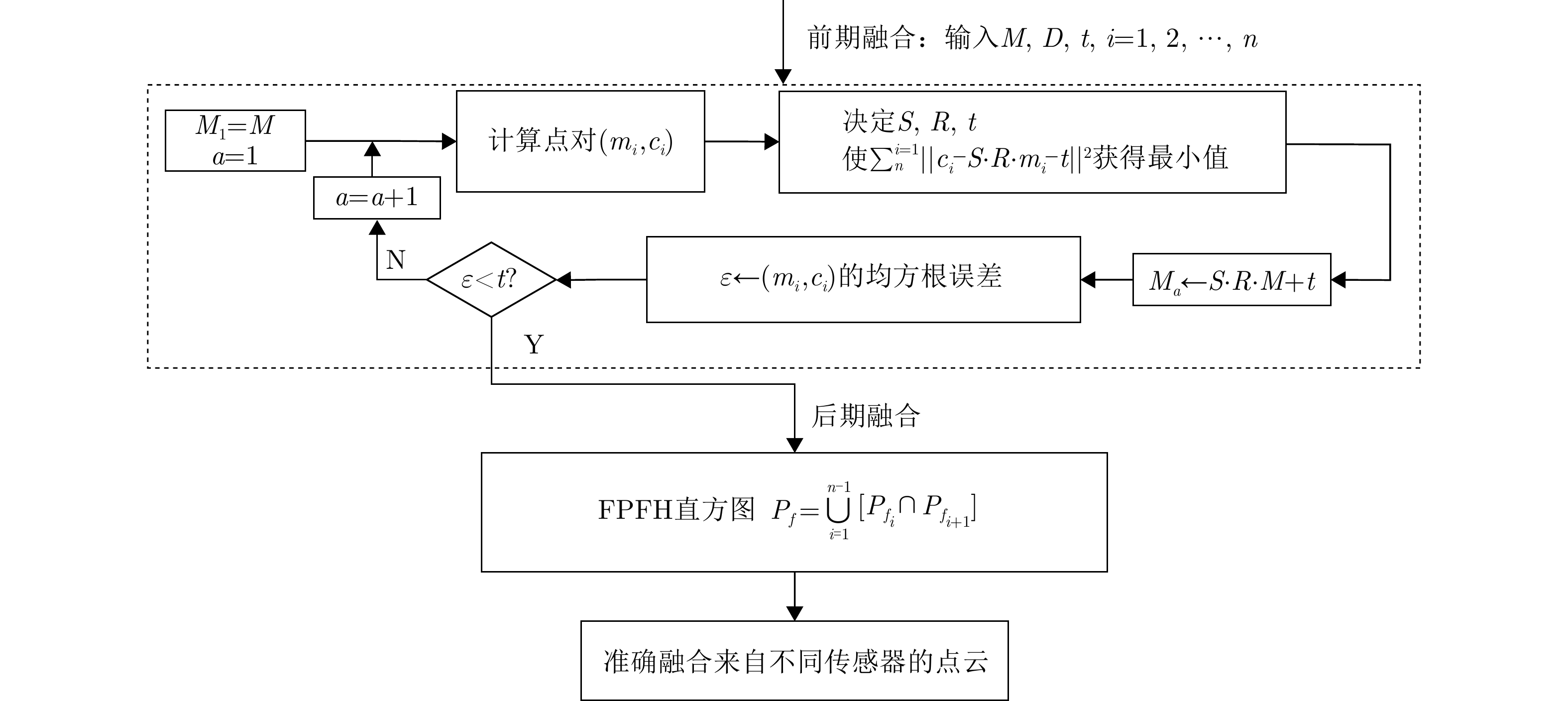

ZENG Tianjiao, ZHANG Wensi, ZHAN Xu, et al. A novel multimodal fusion framework based on point cloud registration for near-field 3D SAR perception[J]. Remote Sensing, 2024, 16(6): 952. doi: 10.3390/rs16060952. |

| [12] |

CRISAN A, PEPE M, COSTANTINO D, et al. From 3D point cloud to an intelligent model set for cultural heritage conservation[J]. Heritage, 2024, 7(3): 1419–1437. doi: 10.3390/heritage7030068. |

| [13] |

KIVILCIM C Ö and DURAN Z. A semi-automated point cloud processing methodology for 3D cultural heritage documentation[C]. International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Prague, Czech Republic, 2016: 293–296. doi: 10.5194/isprs-archives-XLI-B5-293-2016. |

| [14] |

WANG Yong, ZHOU Pengbo, GENG Guohua, et al. Enhancing point cloud registration with transformer: Cultural heritage protection of the Terracotta Warriors[J]. Heritage Science, 2024, 12(1): 314. doi: 10.1186/s40494-024-01425-9. |

| [15] |

YANG Su, HOU Miaole, and LI Songnian. Three-dimensional point cloud semantic segmentation for cultural heritage: A comprehensive review[J]. Remote Sensing, 2023, 15(3): 548. doi: 10.3390/rs15030548. |

| [16] |

LI Jiayi, MA Zhiliang, CHEN Lijie, et al. Comprehensive review of multimodal data fusion methods for construction robot localization[J]. Computer Engineering and Applications, 2024, 60(15): 11–23. doi: 10.3778/j.issn.1002-8331.2402-0008. |

| [17] |

ZHOU Yan, LI Wenjun, DANG Zhaolong, et al. Survey of 3D model recognition based on deep learning[J]. Journal of Frontiers of Computer Science and Technology, 2024, 18(4): 916–929. doi: 10.3778/j.issn.1673-9418.2309010. |

| [18] |

WANG Zhengren. 3D representation methods: A survey[J]. arXiv preprint arXiv: 2410.06475, 2024.

|

| [19] |

ZHOU Yin, SUN Pei, ZHANG Yu, et al. End-to-end multi-view fusion for 3D object detection in LiDAR point clouds[C]. The 3rd Annual Conference on Robot Learning, Osaka, Japan, 2019: 923–932.

|

| [20] |

CHEN Huixian, WU Yiquan, and ZHANG Yao. Research progress of 3D point cloud analysis methods based on deep learning[J]. Chinese Journal of Scientific Instrument, 2023, 44(11): 130–158. doi: 10.19650/j.cnki.cjsi.J2311134. |

| [21] |

LUO Haojun and WEN C Y. A low-cost relative positioning method for UAV/UGV coordinated heterogeneous system based on visual-lidar fusion[J]. Aerospace, 2023, 10(11): 924. doi: 10.3390/aerospace10110924. |

| [22] |

LI Yangyan, BU Rui, SUN Mingchao, et al. PointCNN: Convolution on X-transformed points[C]. The 32nd International Conference on Neural Information Processing Systems, Montréal, Canada, 2018: 828–838.

|

| [23] |

GEIGER A, LENZ P, STILLER C, et al. Vision meets robotics: The KITTI dataset[J]. The International Journal of Robotics Research, 2013, 32(11): 1231–1237. doi: 10.1177/0278364913491297. |

| [24] |

CAESAR H, BANKITI V, LANG A H, et al. nuScenes: A multimodal dataset for autonomous driving[C]. 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, USA, 2020: 11621–11631. doi: 10.1109/CVPR42600.2020.01164. |

| [25] |

MADDERN W, PASCOE G, LINEGAR C, et al. 1 year, 1000 km: The Oxford RobotCar dataset[J]. The International Journal of Robotics Research, 2017, 36(1): 3–15. doi: 10.1177/0278364916679498. |

| [26] |

CURLESS B and LEVOY M. A volumetric method for building complex models from range images[C]. The 23rd Annual Conference on Computer Graphics and Interactive Techniques, New Orleans, USA, 1996: 303–312. doi: 10.1145/237170.237269. |

| [27] |

MIAN A S, BENNAMOUN M, and OWENS R A. A novel representation and feature matching algorithm for automatic pairwise registration of range images[J]. International Journal of Computer Vision, 2006, 66(1): 19–40. doi: 10.1007/s11263-005-3221-0. |

| [28] |

SUN Pei, KRETZSCHMAR H, DOTIWALLA X, et al. Scalability in perception for autonomous driving: Waymo open dataset[C]. 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, USA, 2020: 2446–2454. doi: 10.1109/CVPR42600.2020.00252. |

| [29] |

GEYER J, KASSAHUN Y, MAHMUDI M, et al. A2D2: Audi autonomous driving dataset[J]. arXiv preprint arXiv: 2004.06320, 2020.

|

| [30] |

HUANG Xinyu, CHENG Xinjing, GENG Qichuan, et al. The ApolloScape dataset for autonomous driving[C]. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, USA, 2018: 954–960. doi: 10.1109/CVPRW.2018.00141. |

| [31] |

CHANG Mingfang, LAMBERT J, SANGKLOY P, et al. Argoverse: 3D tracking and forecasting with rich maps[C]. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, USA, 2019: 8748–8757. doi: 10.1109/CVPR.2019.00895. |

| [32] |

STURM J, BURGARD W, and CREMERS D. Evaluating egomotion and structure-from-motion approaches using the TUM RGB-D benchmark[C]. The Workshop on Color-Depth Camera Fusion in Robotics at the IEEE/RSJ International Conference on Intelligent Robot Systems, Vilamoura, Algarve, 2012: 6.

|

| [33] |

CHOI Y, KIM N, HWANG S, et al. KAIST multi-spectral day/night data set for autonomous and assisted driving[J]. IEEE Transactions on Intelligent Transportation Systems, 2018, 19(3): 934–948. doi: 10.1109/TITS.2018.2791533. |

| [34] |

CHOI S, ZHOU Qianyi, and KOLTUN V. Robust reconstruction of indoor scenes[C]. 2015 IEEE Conference on Computer Vision and Pattern Recognition, Boston, USA, 2015: 5556–5565. doi: 10.1109/CVPR.2015.7299195. |

| [35] |

ZENG A, SONG S, NIEßNER M, et al. 3DMatch: Learning local geometric descriptors from RGB-D reconstructions[C]. 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, USA, 2017: 1802–1811. doi: 10.1109/CVPR.2017.29. |

| [36] |

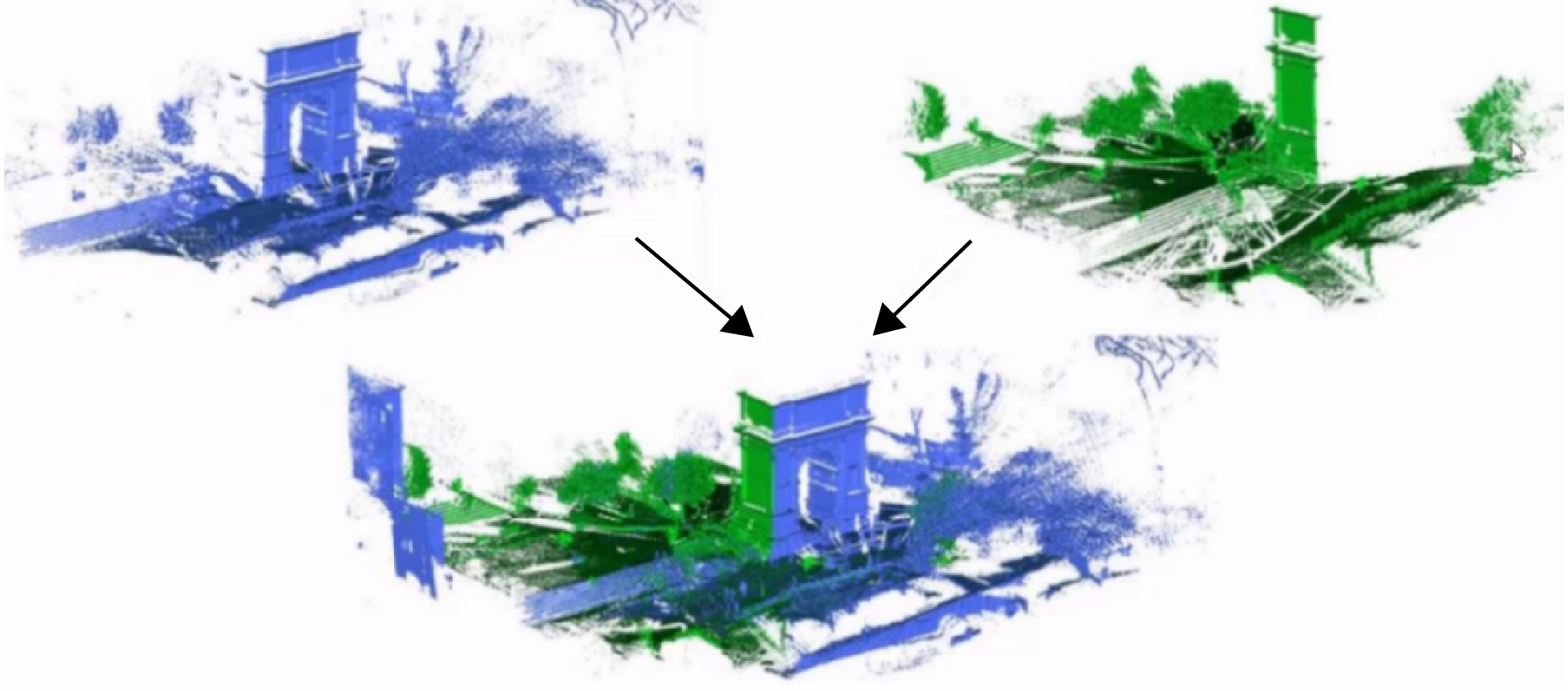

ABDELAZEEM M, ELAMIN A, AFIFI A, et al. Multi-sensor point cloud data fusion for precise 3D mapping[J]. The Egyptian Journal of Remote Sensing and Space Science, 2021, 24(3): 835–844. doi: 10.1016/j.ejrs.2021.06.002. |

| [37] |

CÓRDOVA-ESPARZA D M, TERVEN J R, JIMÉNEZ-HERNÁNDEZ H, et al. A multiple camera calibration and point cloud fusion tool for Kinect V2[J]. Science of Computer Programming, 2017, 143: 1–8. doi: 10.1016/j.scico.2016.11.004. |

| [38] |

PANAGIOTIDIS D, ABDOLLAHNEJAD A, and SLAVÍK M. 3D point cloud fusion from UAV and TLS to assess temperate managed forest structures[J]. International Journal of Applied Earth Observation and Geoinformation, 2022, 112: 102917. doi: 10.1016/j.jag.2022.102917. |

| [39] |

YANG Weijun, LIU Yang, HE Huagui, et al. Airborne LiDAR and photogrammetric point cloud fusion for extraction of urban tree metrics according to street network segmentation[J]. IEEE Access, 2021, 9: 97834–97842. doi: 10.1109/ACCESS.2021.3094307. |

| [40] |

PARVAZ S, TEFERLE F, and NURUNNABI A. Airborne cross-source point clouds fusion by slice-to-slice adjustment[C]. ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Athens, Greece, 2024: 161–168. doi: 10.5194/isprs-annals-X-4-W4-2024-161-2024. |

| [41] |

CHENG Hongtai and HAN Jiayi. Toward precise dense 3D reconstruction of indoor hallway: A confidence-based panoramic LiDAR point cloud fusion approach[J]. Industrial Robot, 2025, 52(1): 116–125. doi: 10.1108/IR-03-2024-0132. |

| [42] |

LI Shiming, GE Xuming, HU Han, et al. Laplacian fusion approach of multi-source point clouds for detail enhancement[J]. ISPRS Journal of Photogrammetry and Remote Sensing, 2021, 171: 385–396. doi: 10.1016/j.isprsjprs.2020.11.021. |

| [43] |

BAI Zhengwei, WU Guoyuan, BARTH M J, et al. PillarGrid: Deep learning-based cooperative perception for 3D object detection from onboard-roadside LiDAR[C]. 2022 IEEE 25th International Conference on Intelligent Transportation Systems, Macau, China, 2022: 1743–1749. doi: 10.1109/ITSC55140.2022.9921947. |

| [44] |

ZHANG Pengcheng, HE Huagui, WANG Yun, et al. 3D urban buildings extraction based on airborne LiDAR and photogrammetric point cloud fusion according to U-Net deep learning model segmentation[J]. IEEE Access, 2022, 10: 20889–20897. doi: 10.1109/ACCESS.2022.3152744. |

| [45] |

GUO Lijie, WU Yanjie, DENG Lei, et al. A feature-level point cloud fusion method for timber volume of forest stands estimation[J]. Remote Sensing, 2023, 15(12): 2995. doi: 10.3390/rs15122995. |

| [46] |

QIAO Donghao and ZULKERNINE F. Adaptive feature fusion for cooperative perception using LiDAR point clouds[C]. 2023 IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, USA, 2023: 1186–1195. doi: 10.1109/WACV56688.2023.00124. |

| [47] |

GRIFONI E, VANNINI E, LUNGHI I, et al. 3D multi-modal point clouds data fusion for metrological analysis and restoration assessment of a panel painting[J]. Journal of Cultural Heritage, 2024, 66: 356–366. doi: 10.1016/j.culher.2023.12.007. |

| [48] |

CHEN Qi, TANG Sihai, YANG Qing, et al. Cooper: Cooperative perception for connected autonomous vehicles based on 3D point clouds[C]. 2019 IEEE 39th International Conference on Distributed Computing Systems, Dallas, USA, 2019: 514–524. doi: 10.1109/ICDCS.2019.00058. |

| [49] |

HURL B, COHEN R, CZARNECKI K, et al. TruPercept: Trust modelling for autonomous vehicle cooperative perception from synthetic data[C]. 2020 IEEE Intelligent Vehicles Symposium, Las Vegas, USA, 2020: 341–347. doi: 10.1109/IV47402.2020.9304695. |

| [50] |

LI Jie, ZHUANG Yiqi, PENG Qi, et al. Pose estimation of non-cooperative space targets based on cross-source point cloud fusion[J]. Remote Sensing, 2021, 13(21): 4239. doi: 10.3390/rs13214239. |

| [51] |

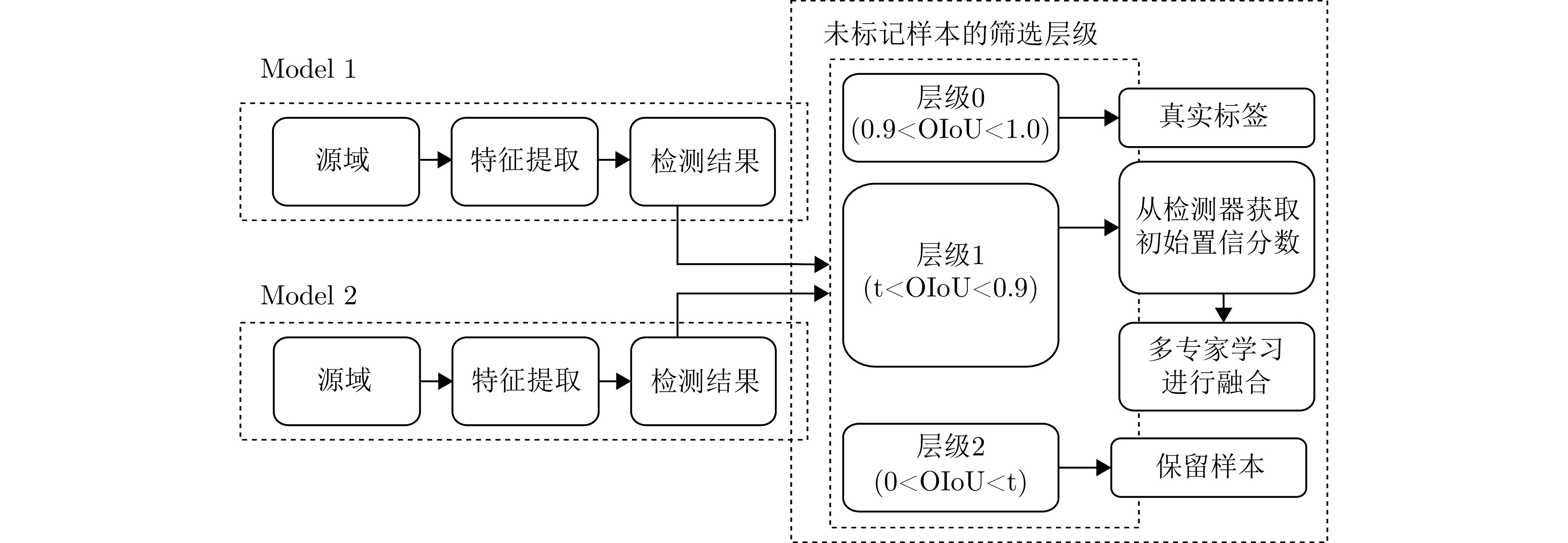

TANG Zhiri, HU Ruihan, CHEN Yanhua, et al. Multi-expert learning for fusion of pedestrian detection bounding box[J]. Knowledge-Based Systems, 2022, 241: 108254. doi: 10.1016/j.knosys.2022.108254. |

| [52] |

YU Haibao, LUO Yizhen, SHU Mao, et al. DAIR-V2X: A large-scale dataset for vehicle-infrastructure cooperative 3D object detection[C]. 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, USA, 2022: 21361–21370. doi: 10.1109/CVPR52688.2022.02067. |

| [53] |

RIZALDY A, AFIFI A J, GHAMISI P, et al. Dimensional dilemma: Navigating the fusion of hyperspectral and LiDAR point cloud data for optimal precision-2D vs. 3D[C]. 2024 IEEE International Geoscience and Remote Sensing Symposium, Athens, Greece, 2024: 7729–7733. doi: 10.1109/IGARSS53475.2024.10641140. |

| [54] |

LUO Wenjie, YANG Bin, and URTASUN R. Fast and furious: Real time end-to-end 3D detection, tracking and motion forecasting with a single convolutional net[C]. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, USA, 2018: 3569–3577. doi: 10.1109/CVPR.2018.00376. |

| [55] |

LI Jing, LI Rui, WANG Junzheng, et al. Obstacle information detection method based on multiframe three-dimensional lidar point cloud fusion[J]. Optical Engineering, 2019, 58(11): 116102. doi: 10.1117/1.OE.58.11.116102. |

| [56] |

HUANG Rui, ZHANG Wanyue, KUNDU A, et al. An LSTM approach to temporal 3D object detection in LiDAR point clouds[C]. The 16th European Conference on Computer Vision, Glasgow, UK, 2020: 266–282. doi: 10.1007/978-3-030-58523-5_16. |

| [57] |

YIN Junbo, SHEN Jianbing, GUAN Chenye, et al. LiDAR-based online 3D video object detection with graph-based message passing and spatiotemporal transformer attention[C]. 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, USA, 2020: 11495–11504. doi: 10.1109/CVPR42600.2020.01151. |

| [58] |

YANG Zetong, ZHOU Yin, CHEN Zhifeng, et al. 3D-MAN: 3D multi-frame attention network for object detection[C]. 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, USA, 2021: 1863–1872. doi: 10.1109/CVPR46437.2021.00190. |

| [59] |

QI C R, ZHOU Yin, NAJIBI M, et al. Offboard 3D object detection from point cloud sequences[C]. 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, USA, 2021: 6134–6144. doi: 10.1109/CVPR46437.2021.00607. |

| [60] |

SUN Pei, WANG Weiyue, CHAI Yuning, et al. RSN: Range sparse net for efficient, accurate LiDAR 3D object detection[C]. 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, USA, 2021: 5725–5734. doi: 10.1109/CVPR46437.2021.00567. |

| [61] |

YIN Tianwei, ZHOU Xingyi, and KRÄHENBÜHL P. Center-based 3D object detection and tracking[C]. 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, USA, 2021: 11784–11793. doi: 10.1109/CVPR46437.2021.01161. |

| [62] |

LUO Chenxu, YANG Xiaodong, and YUILLE A. Exploring simple 3D multi-object tracking for autonomous driving[C]. 2021 IEEE/CVF International Conference on Computer Vision, Montreal, Canada, 2021: 10488–10497. doi: 10.1109/ICCV48922.2021.01032. |

| [63] |

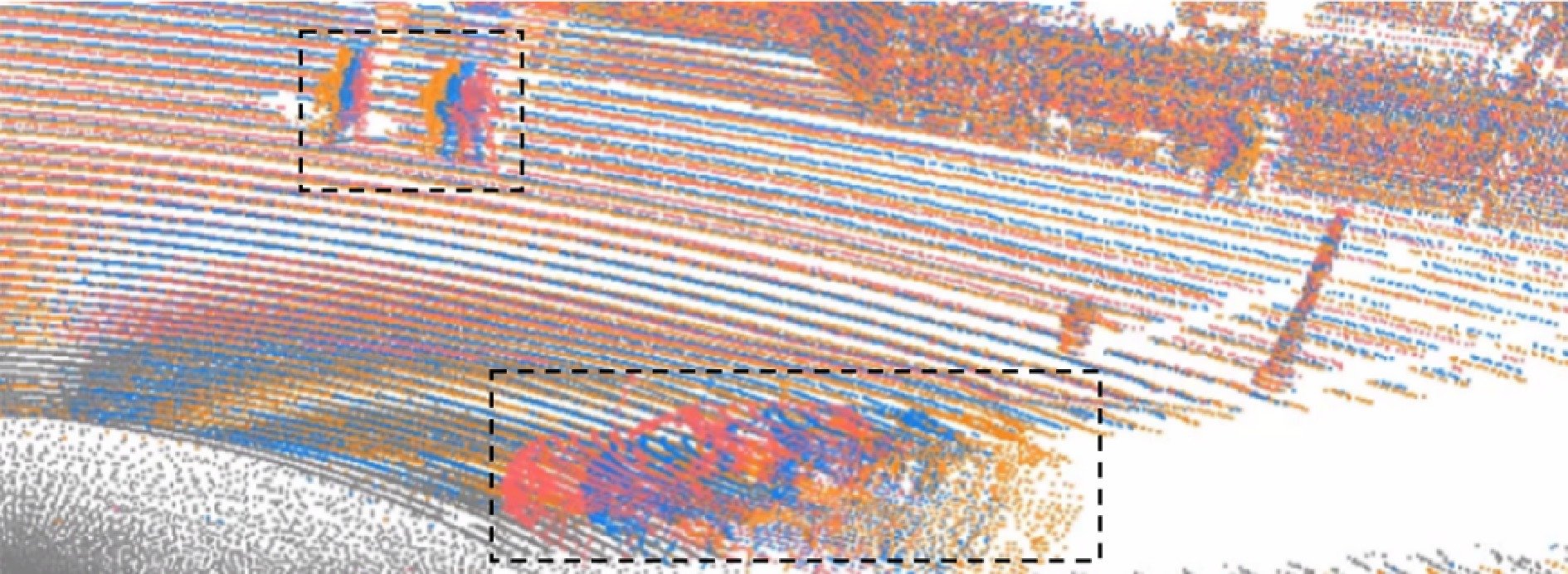

MAO Donghai, LI Shoujun, WANG Feng, et al. Block filtering method for LiDAR point cloud fusion image[J]. Bulletin of Surveying and Mapping, 2021(10): 67–72, 131. doi: 10.13474/j.cnki.11-2246.2021.307. |

| [64] |

CHEN Xuesong, SHI Shaoshuai, ZHU Benjin, et al. MPPNet: Multi-frame feature intertwining with proxy points for 3D temporal object detection[C]. The 17th European Conference on Computer Vision, Tel Aviv, Israel, 2022: 680–697. doi: 10.1007/978-3-031-20074-8_39. |

| [65] |

HU Yihan, DING Zhuangzhuang, GE Runzhou, et al. AFDetV2: Rethinking the necessity of the second stage for object detection from point clouds[C]. The 36th AAAI Conference on Artificial Intelligence, 2022: 969–979. doi: 10.1609/aaai.v36i1.19980. |

| [66] |

HE Chenhang, LI Ruihuang, ZHANG Yabin, et al. MSF: Motion-guided sequential fusion for efficient 3D object detection from point cloud sequences[C]. 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, Canada, 2023: 5196–5205. doi: 10.1109/CVPR52729.2023.00503. |

| [67] |

SHI Lingfeng, LV Yunfeng, YIN Wei, et al. Autonomous multiframe point cloud fusion method for mmWave radar[J]. IEEE Transactions on Instrumentation and Measurement, 2023, 72: 4505708. doi: 10.1109/TIM.2023.3302936. |

| [68] |

YANG Yanding, JIANG Kun, YANG Diange, et al. Temporal point cloud fusion with scene flow for robust 3D object tracking[J]. IEEE Signal Processing Letters, 2022, 29: 1579–1583. doi: 10.1109/LSP.2022.3185948. |

| [69] |

CHEN Hui, FENG Yan, YANG Jian, et al. 3D reconstruction approach for outdoor scene based on multiple point cloud fusion[J]. Journal of the Indian Society of Remote Sensing, 2019, 47(10): 1761–1772. doi: 10.1007/s12524-019-01029-y. |

| [70] |

CHENG Yuqi, LI Wenlong, JIANG Cheng, et al. MVGR: Mean-variance minimization global registration method for multiview point cloud in robot inspection[J]. IEEE Transactions on Instrumentation and Measurement, 2024, 73: 8504915. doi: 10.1109/TIM.2024.3413191. |

| [71] |

LIU Junjie, LIU Junlong, YAN Shaotian, et al. MPC: Multi-view probabilistic clustering[C]. 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, USA, 2022: 9509–9518. doi: 10.1109/CVPR52688.2022.00929. |

| [72] |

KLOEKER L, KOTULLA C, and ECKSTEIN L. Real-time point cloud fusion of multi-LiDAR infrastructure sensor setups with unknown spatial location and orientation[C]. 2020 IEEE 23rd International Conference on Intelligent Transportation Systems, Rhodes, Greece, 2020: 1–8. doi: 10.1109/ITSC45102.2020.9294312. |

| [73] |

YU Xumin, TANG Lulu, RAO Yongming, et al. Point-BERT: Pre-training 3D point cloud transformers with masked point modeling[C]. 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, USA, 2022: 19313–19322. doi: 10.1109/CVPR52688.2022.01871. |

| [74] |

LI Yao, ZHOU Yong, ZHAO Jiaqi, et al. FA-MSVNet: Multi-scale and multi-view feature aggregation methods for stereo 3D reconstruction[J]. Multimedia Tools and Applications, 2024: 1–23. doi: 10.1007/s11042-024-20431-4. |

| [75] |

CHEN Zhimin, LI Yingwei, JING Longlong, et al. Point cloud self-supervised learning via 3D to multi-view masked autoencoder[J]. arXiv preprint arXiv: 2311.10887, 2023.

|

| [76] |

LING Xiao and QIN Rongjun. A graph-matching approach for cross-view registration of over-view and street-view based point clouds[J]. ISPRS Journal of Photogrammetry and Remote Sensing, 2022, 185: 2–15. doi: 10.1016/j.isprsjprs.2021.12.013. |

| [77] |

深圳市博大建设集团有限公司. 一种多视角三维激光点云拼接方法及系统[P]. 中国, 202411659468.1, 2024.

Shenzhen Boda Construction Group Co., Ltd. Multi-view three-dimensional laser point cloud splicing method and system[P]. CN, 202411659468.1, 2024.

|

| [78] |

北京鹏太光耀科技有限公司. 一种基于多目结构光的全向点云融合方法[P]. 中国, 202411346535.4, 2025.

Beijing Pengtai Guangyao Technology Co., Ltd. Omnidirectional point cloud fusion method based on multi-view structured light[P]. CN, 202411346535.4, 2025.

|

| [79] |

KHAN K N, KHALID A, TURKAR Y, et al. VRF: Vehicle road-side point cloud fusion[C]. The 22nd Annual International Conference on Mobile Systems, Applications and Services, Tokyo, Japan, 2024: 547–560. doi: 10.1145/3643832.3661874. |

| [80] |

BREYER M, OTT L, SIEGWART R, et al. Closed-loop next-best-view planning for target-driven grasping[C]. 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems, Kyoto, Japan, 2022: 1411–1416. doi: 10.1109/IROS47612.2022.9981472. |

| [81] |

JIANG Changjian, GAO Ruilan, SHAO Kele, et al. LI-GS: Gaussian splatting with LiDAR incorporated for accurate large-scale reconstruction[J]. IEEE Robotics and Automation Letters, 2025, 10(2): 1864–1871. doi: 10.1109/LRA.2024.3522846. |

| [82] |

YANG Shuo, LU Huimin, and LI Jianru. Multifeature fusion-based object detection for intelligent transportation systems[J]. IEEE Transactions on Intelligent Transportation Systems, 2023, 24(1): 1126–1133. doi: 10.1109/TITS.2022.3155488. |

| [83] |

MA Nan, XIAO Chuansheng, WANG Mohan, et al. A review of point cloud and image cross-modal fusion for self-driving[C]. 2022 18th International Conference on Computational Intelligence and Security, Chengdu, China, 2022: 456–460. doi: 10.1109/CIS58238.2022.00103. |

| [84] |

LIU Zhijian, TANG Haotian, AMINI A, et al. BEVFusion: Multi-task multi-sensor fusion with unified bird’s-eye view representation[C]. 2023 IEEE International Conference on Robotics and Automation, London, UK, 2023: 2774–2781. doi: 10.1109/ICRA48891.2023.10160968. |

| [85] |

KUMAR G A, LEE J H, HWANG J, et al. LiDAR and camera fusion approach for object distance estimation in self-driving vehicles[J]. Symmetry, 2020, 12(2): 324. doi: 10.3390/sym12020324. |

| [86] |

HUANG Keli, SHI Botian, LI Xiang, et al. Multi-modal sensor fusion for auto driving perception: A survey[J]. arXiv preprint arXiv: 2202.02703, 2022.

|

| [87] |

CAI Yiyi, OU Yang, and QIN Tuanfa. Improving SLAM techniques with integrated multi-sensor fusion for 3D reconstruction[J]. Sensors, 2024, 24(7): 2033. doi: 10.3390/s24072033. |

| [88] |

RODRIGUEZ-GARCIA B, RAMÍREZ-SANZ J M, MIGUEL-ALONSO I, et al. Enhancing learning of 3D model unwrapping through virtual reality serious game: Design and usability validation[J]. Electronics, 2024, 13(10): 1972. doi: 10.3390/electronics13101972. |

| [89] |

KAVALIAUSKAS P, FERNANDEZ J B, MCGUINNESS K, et al. Automation of construction progress monitoring by integrating 3D point cloud data with an IFC-based BIM model[J]. Buildings, 2022, 12(10): 1754. doi: 10.3390/buildings12101754. |

| [90] |

XU Yusheng and STILLA U. Toward building and civil infrastructure reconstruction from point clouds: A review on data and key techniques[J]. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2021, 14: 2857–2885. doi: 10.1109/JSTARS.2021.3060568. |

| [91] |

JHONG S Y, CHEN Y Y, HSIA C H, et al. Density-aware and semantic-guided fusion for 3-D object detection using LiDAR-camera sensors[J]. IEEE Sensors Journal, 2023, 23(18): 22051–22063. doi: 10.1109/JSEN.2023.3302314. |

| [92] |

LUO Zhipeng, ZHOU Changqing, PAN Liang, et al. Exploring point-BEV fusion for 3D point cloud object tracking with transformer[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2024, 46(9): 5921–5935. doi: 10.1109/TPAMI.2024.3373693. |

| [93] |

LIU Jianan, ZHAO Qiuchi, XIONG Weiyi, et al. SMURF: Spatial multi-representation fusion for 3D object detection with 4D imaging radar[J]. IEEE Transactions on Intelligent Vehicles, 2024, 9(1): 799–812. doi: 10.1109/TIV.2023.3322729. |

| [94] |

HAO Ruidong, WEI Zhongwei, HE Xu, et al. Robust point cloud registration network for complex conditions[J]. Sensors, 2023, 23(24): 9837. doi: 10.3390/s23249837. |

| [95] |

BAYRAK O C, MA Zhenyu, MARIAROSARIA FARELLA E, et al. Operationalize large-scale point cloud classification: Potentials and challenges[C]. EGU General Assembly Conference Abstracts 2024, Vienna, Austria, 2024: 10034. doi: 10.5194/egusphere-egu24-10034. |

| [96] |

SHAN Shuo, LI Chenxi, WANG Yiye, et al. A deep learning model for multi-modal spatio-temporal irradiance forecast[J]. Expert Systems with Applications, 2024, 244: 122925. doi: 10.1016/j.eswa.2023.122925. |

| [97] |

QIAN Jiaming, FENG Shijie, TAO Tianyang, et al. Real-time 3D point cloud registration[C]. SPIE 11205, Seventh International Conference on Optical and Photonic Engineering, Phuket, Thailand, 2019: 495–500. doi: 10.1117/12.2547865. |

| [98] |

SHI Fengyuan, ZHENG Xunjiang, JIANG Lihui, et al. Fast point cloud registration algorithm using parallel computing strategy[J]. Journal of Physics: Conference Series, 2022, 2235(1): 012104. doi: 10.1088/1742-6596/2235/1/012104. |

| [99] |

LUO Yukui. Securing FPGA as a shared cloud-computing resource: Threats and mitigations[D]. [Ph.D. dissertation], Northeastern University, 2023.

|

| [100] |

WANG Laichao, LU Weiding, TIAN Yuan, et al. 6D object pose estimation with attention aware bi-gated fusion[C]. The 30th International Conference on Neural Information Processing, Changsha, China, 2024: 573–585. doi: 10.1007/978-981-99-8082-6_44. |

| [101] |

HONG Jiaxin, ZHANG Hongbo, LIU Jinghua, et al. A transformer-based multi-modal fusion network for 6D pose estimation[J]. Information Fusion, 2024, 105: 102227. doi: 10.1016/j.inffus.2024.102227. |
