[1] KUMAR P. Human activity recognition with deep learning: Overview, challenges & possibilities[J]. CCF Transactions on Pervasive Computing and Interaction, 2021, 339(3): 1–29. doi: 10.20944/preprints202102.0349.v1

[2] HUANG Qingqing, ZHOU Fengyu, and LIU Meizhen. Survey of human action recognition algorithms based on video[J]. Application Research of Computers, 2020, 37(11): 3213–3219. doi: 10.19734/j.issn.1001-3695.2019.08.0253

[3] QIAN Huifang, YI Jianping, and FU Yunhu. Review of human action recognition based on deep learning[J]. Journal of Frontiers of Computer Science & Technology, 2021, 15(3): 438–455. doi: 10.3778/j.issn.1673-9418.2009095

[4] SCHULDT C, LAPTEV I, and CAPUTO B. Recognizing human actions: A local SVM approach[C]. 2004 IEEE International Conference on Pattern Recognition, Cambridge, UK, 2004: 32–36.

[5]

[6] KUEHNE H, JHUANG H, GARROTE E, et al. HMDB: A large video database for human motion recognition[C]. 2011 IEEE International Conference on Computer Vision, Barcelona, Spain, 2011: 2556–2563.

[7]

[8] SHAHROUDY A, LIU Jun, NG T T, et al. NTU RGB+D: A large scale dataset for 3D human activity analysis[C]. 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, USA, 2016: 1010–1019.

[9] DU Hao. Research on deep learning-based human behavior recognition in ultra-wideband radar[D]. [Ph. D. dissertation], National University of Defense Technology, 2020: 1–5.

[10] PAULI M, GOTTEL B, SCHERR S, et al. Miniaturized millimeter-wave radar sensor for high-accuracy applications[J]. IEEE Transactions on Microwave Theory and Techniques, 2017, 65(5): 1707–1715. doi: 10.1109/TMTT.2017.2677910

[11] LIU Yichen and XU Feng. Gesture recognition based on radar technology[J]. Journal of China Academy of Electronics and Information Technology, 2016, 11(6): 609–613. doi: 10.3969/j.issn.1673-5692.2016.06.009

[12] DING Chuanwei, ZHANG Li, GU Chen, et al. Non-contact human motion recognition based on UWB radar[J]. IEEE Journal on Emerging and Selected Topics in Circuits and Systems, 2018, 8(2): 306–315. doi: 10.1109/JETCAS.2018.2797313

[13] KIM Y and MOON T. Human detection and activity classification based on micro-Doppler signatures using deep convolutional neural networks[J]. IEEE Geoscience and Remote Sensing Letters, 2016, 13(1): 8–12. doi: 10.1109/LGRS.2015.2491329

[14] CRALEY J, MURRAY T S, MENDAT D R, et al. Action recognition using micro-Doppler signatures and a recurrent neural network[C]. 2017 51st Annual Conference on Information Sciences and Systems, Baltimore, USA, 2017: 1–5.

[15] WANG Mingyang, ZHANG Y D, and CUI Guolong. Human motion recognition exploiting radar with stacked recurrent neural network[J]. Digital Signal Processing, 2019, 87: 125–131. doi: 10.1016/j.dsp.2019.01.013

[16] LI Xinyu, HE Yuan, FIORANELLI F, et al. Semisupervised human activity recognition with radar micro-Doppler signatures[J]. IEEE Transactions on Geoscience and Remote Sensing, 2022, 60: 5103112. doi: 10.1109/TGRS.2021.3090106

[17] DU Hao, JIN Tian, SONG Yongping, et al. A three-dimensional deep learning framework for human behavior analysis using range-Doppler time points[J]. IEEE Geoscience and Remote Sensing Letters, 2020, 17(4): 611–615. doi: 10.1109/LGRS.2019.2930636

[18] LI Lianlin and CUI Tiejun. Recent progress in intelligent electromagnetic sensing[J]. Journal of Radars, 2021, 10(2): 183–190. doi: 10.12000/JR21049

[19] LI Lianlin, SHUANG Ya, MA Qian, et al. Intelligent metasurface imager and recognizer[J]. Light: Science & Applications, 2019, 8(1): 97. doi: 10.1038/s41377-019-0209-z

[20] FIORANELLI F, SHAH S A, LI Haobo, et al. Radar sensing for healthcare[J]. Electronics Letters, 2019, 55(19): 1022–1024. doi: 10.1049/el.2019.2378

[21] MENG Zhen, FU Song, YAN Jie, et al. Gait recognition for co-existing multiple people using millimeter wave sensing[C]. The AAAI Conference on Artificial Intelligence, New York, USA, 2020: 849–856.

[22] ZHU Zhengliang, YANG Degui, ZHANG Junchao, et al. Dataset of human motion status using IR-UWB through-wall radar[J]. Journal of Systems Engineering and Electronics, 2021, 32(5): 1083–1096. doi: 10.23919/JSEE.2021.000093

[23] SONG Yongkun, JIN Tian, DAI Yongpeng, et al. Through-wall human pose reconstruction via UWB MIMO radar and 3D CNN[J]. Remote Sensing, 2021, 13(2): 241. doi: 10.3390/rs13020241

[24] AMIN M G, ZHU Guofu, LU Biying, JIN Tian, et al. translation. Through-The-Wall Radar Imaging[M]. Beijing, China: Publishing House of Electronic Industry, 2014: 22–25.

[25] TAYLOR J D, HU Chunming, WANG Jianming, SUN Jun, et al. translation. Ultrawideband Radar: Applications and Design[M]. Beijing, China: Publishing House of Electronic Industry, 2017: 54–55.

[26] SUN Xin. Research on method and technique of ultra-wideband through-the-wall radar imaging[D]. [Ph. D. dissertation], National University of Defense Technology, 2015: 16–17.

[27] JIN Tian and SONG Yongping. Review on human target detection using through-wall radar[J]. Chinese Journal of Radio Science, 2020, 35(4): 486–495. doi: 10.13443/j.cjors.2020040804

[28] ASH M, RITCHIE M, and CHETTY K. On the application of digital moving target indication techniques to short-range FMCW radar data[J]. IEEE Sensors Journal, 2018, 18(10): 4167–4175. doi: 10.1109/JSEN.2018.2823588

[29] SONG Yongping, LOU Jun, and JIN Tian. A novel II-CFAR detector for ROI extraction in SAR image[C]. 2013 IEEE International Conference on Signal Processing, Communication and Computing, Kunming, China, 2013: 1–4.

[30] NORTON-WAYNE L. Image reconstruction from projections[J]. Optica Acta: International Journal of Optics, 1980, 27(3): 281–282. doi: 10.1080/713820221

[31] MCCORKLE J W. Focusing of synthetic aperture ultra wideband data[C]. 1991 IEEE International Conference on Systems Engineering, Dayton, USA, 1991: 1–5.

[32] BOBICK A F and DAVIS J W. The recognition of human movement using temporal templates[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2001, 23(3): 257–267. doi: 10.1109/34.910878

[33] DAS DAWN D and SHAIKH S H. A comprehensive survey of human action recognition with spatio-temporal interest point (STIP) detector[J]. The Visual Computer, 2016, 32(3): 289–306. doi: 10.1007/s00371-015-1066-2

[34] WANG Heng, KLÄSER A, SCHMID C, et al. Dense trajectories and motion boundary descriptors for action recognition[J]. International Journal of Computer Vision, 2013, 103(1): 60–79. doi: 10.1007/s11263-012-0594-8

[35] SIMONYAN K and ZISSERMAN A. Two-stream convolutional networks for action recognition in videos[C]. The 27th International Conference on Neural Information Processing Systems, Montreal, Canada, 2014: 568–576.

[36] WANG Limin, XIONG Yuanjun, WANG Zhe, et al. Temporal segment networks: Towards good practices for deep action recognition[C]. 2016 14th European Conference on Computer Vision, Amsterdam, The Netherlands, 2016: 20–36.

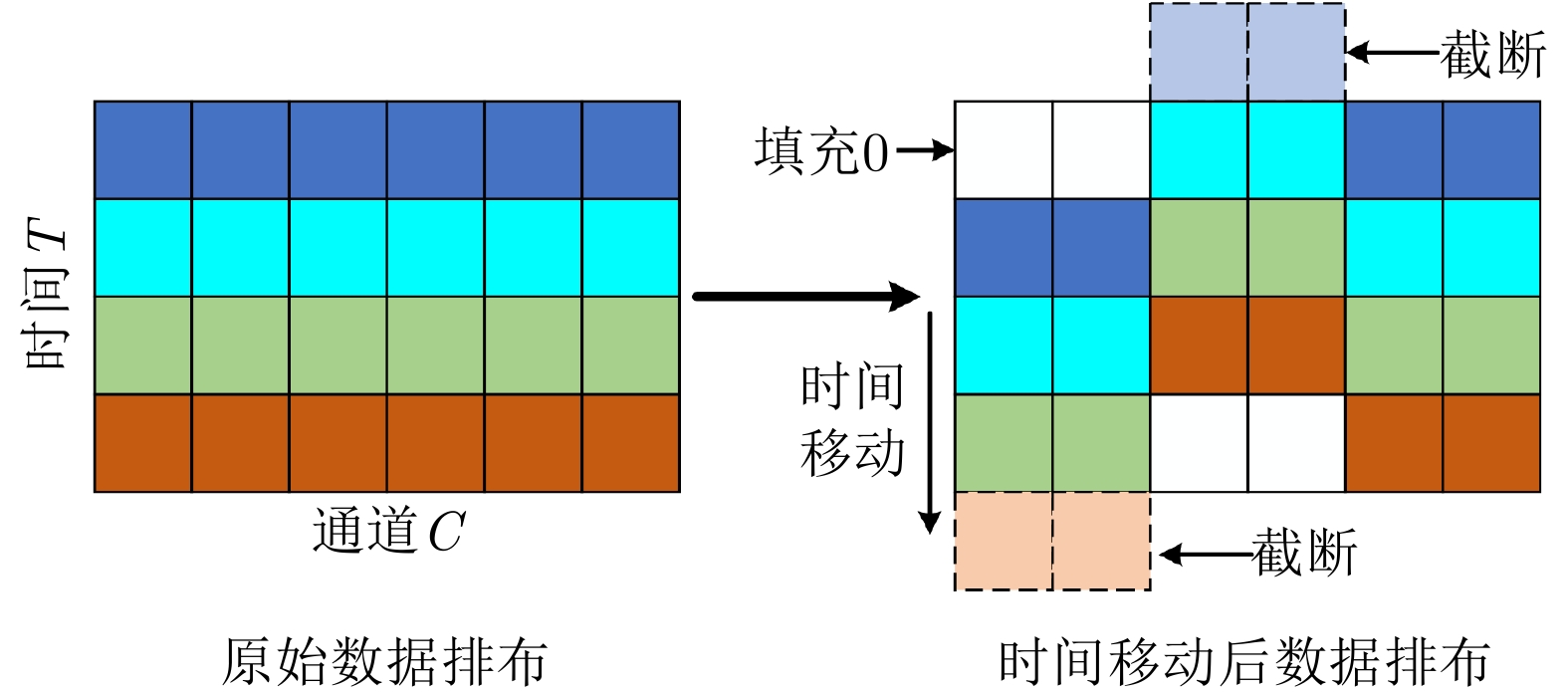

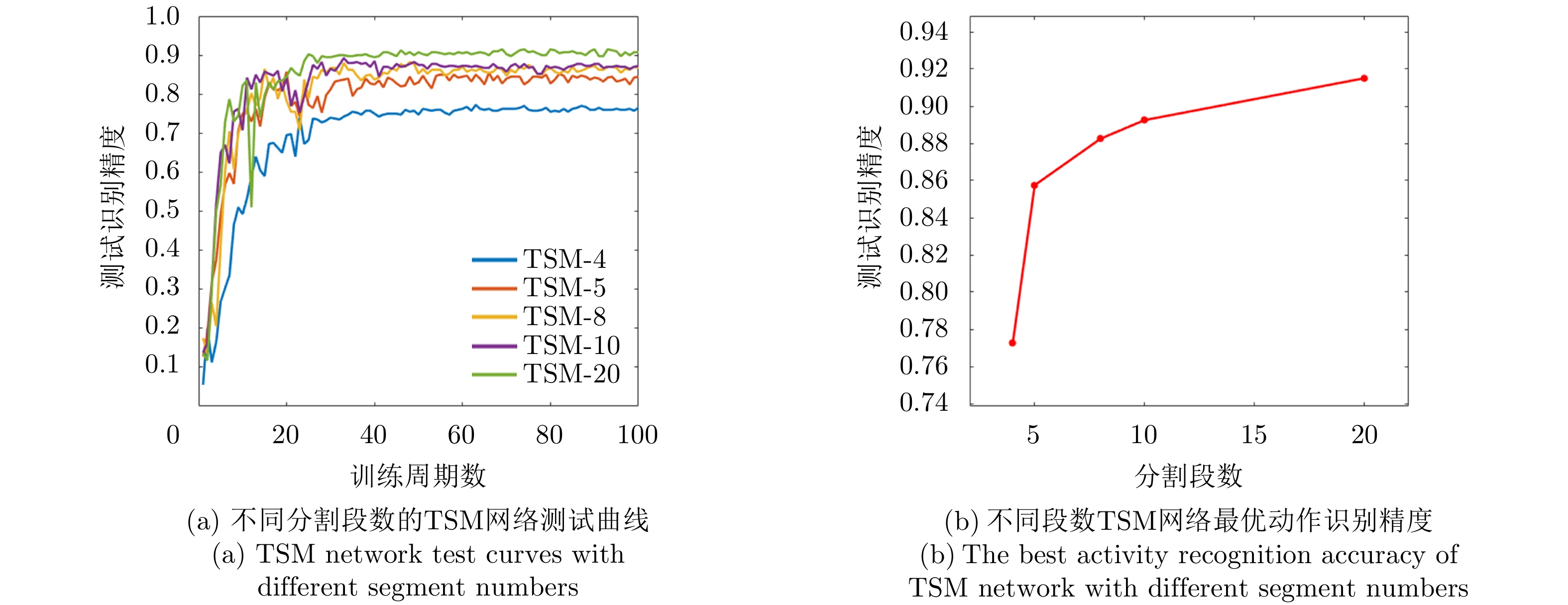

[37] LIN Ji, GAN Chuang, and HAN Song. TSM: Temporal shift module for efficient video understanding[C]. 2019 IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 2019: 7083–7093.

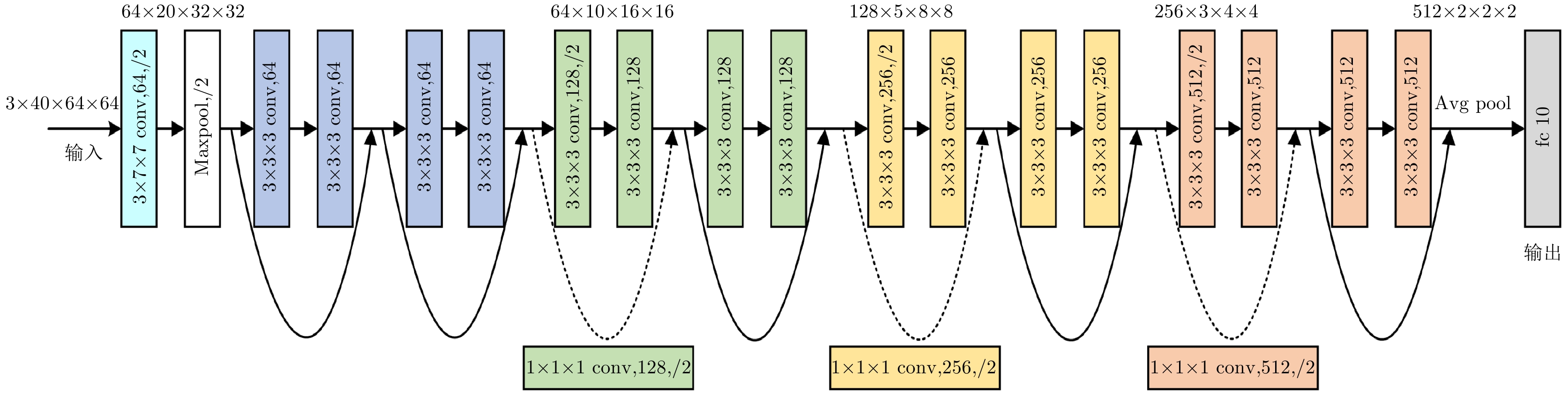

[38] JI Shuiwang, XU Wei, YANG Ming, et al. 3D convolutional neural networks for human action recognition[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2013, 35(1): 221–231. doi: 10.1109/TPAMI.2012.59

[39] TRAN D, BOURDEV L, FERGUS R, et al. Learning spatiotemporal features with 3D convolutional networks[C]. 2015 IEEE International Conference on Computer Vision, Santiago, Chile, 2015: 4489–4497.

[40]

[41] FEICHTENHOFER C, FAN Haoqi, MALIK J, et al. SlowFast networks for video recognition[C]. 2019 IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 2019: 6202–6210.
