[1] CUI Zongyong, TANG Cui, CAO Zongjie, et al. SAR unlabeled target recognition based on updating CNN with assistant decision[J]. IEEE Geoscience and Remote Sensing Letters, 2018, 15(10): 1585–1589. doi: 10.1109/LGRS.2018.2851600.

[2] PEI Jifang, HUANG Yulin, HUO Weibo, et al. SAR automatic target recognition based on multiview deep learning framework[J]. IEEE Transactions on Geoscience and Remote Sensing, 2018, 56(4): 2196–2210. doi: 10.1109/TGRS.2017.2776357.

[3] WANG Chen, SHI Jun, ZHOU Yuanyuan, et al. Semisupervised learning-based SAR ATR via self-consistent augmentation[J]. IEEE Transactions on Geoscience and Remote Sensing, 2021, 59(6): 4862–4873. doi: 10.1109/TGRS.2020.3013968.

[4] BAI Xueru, XUE Ruihang, WANG Li, et al. Sequence SAR image classification based on bidirectional convolution-recurrent network[J]. IEEE Transactions on Geoscience and Remote Sensing, 2019, 57(11): 9223–9235. doi: 10.1109/TGRS.2019.2925636.

[5] AMRANI M, JIANG Feng, XU Yunzhong, et al. SAR-oriented visual saliency model and directed acyclic graph support vector metric based target classification[J]. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2018, 11(10): 3794–3810. doi: 10.1109/JSTARS.2018.2866684.

[6] ZHOU Yuanyuan, SHI Jun, WANG Chen, et al. SAR ground moving target refocusing by combining mRe³ network and TV β-LSTM[J]. IEEE Transactions on Geoscience and Remote Sensing, 2022, 60: 5200814. doi: 10.1109/TGRS.2020.3033656.

[7] WANG Chen, SHI Jun, YANG Xiaqing, et al. Geospatial object detection via deconvolutional region proposal network[J]. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2019, 12(8): 3014–3027. doi: 10.1109/JSTARS.2019.2919382.

[8] HE Kaiming, ZHANG Xiangyu, REN Shaoqing, et al. Deep residual learning for image recognition[C]. The 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, USA, 2016: 770–778. doi: 10.1109/CVPR.2016.90.

[9] ARAZO E, ORTEGO D, ALBERT P, et al. Unsupervised label noise modeling and loss correction[C]. The 36th International Conference on Machine Learning, Long Beach, USA, 2019: 312–321.

[10] ZHANG Chiyuan, BENGIO S, HARDT M, et al. Understanding deep learning (still) requires rethinking generalization[J]. Communications of the ACM, 2021, 64(3): 107–115. doi: 10.1145/3446776.

[11] SONG H, KIM M, PARK D, et al. Learning from noisy labels with deep neural networks: A survey[J]. IEEE Transactions on Neural Networks and Learning Systems, 2023, 34(11): 8135–8153. doi: 10.1109/TNNLS.2022.3152527.

[12] VEIT A, ALLDRIN N, CHECHIK G, et al. Learning from noisy large-scale datasets with minimal supervision[C]. The 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, USA, 2017: 839–847. doi: 10.1109/CVPR.2017.696.

[13] VAHDAT A. Toward robustness against label noise in training deep discriminative neural networks[C]. The 31st International Conference on Neural Information Processing Systems, Long Beach, USA, 2017: 5601–5610.

[14] REED S, LEE H, ANGUELOV D, et al. Training deep neural networks on noisy labels with bootstrapping[C]. The 3rd International Conference on Learning Representations, San Diego, USA, 2015.

[15] LEE K H, HE Xiaodong, ZHANG Lei, et al. CleanNet: Transfer learning for scalable image classifier training with label noise[C]. The 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, USA, 2018: 5447–5456. doi: 10.1109/CVPR.2018.00571.

[16] ZHANG Zhilu and SABUNCU M R. Generalized cross entropy loss for training deep neural networks with noisy labels[C]. The 32nd International Conference on Neural Information Processing Systems, Montréal, Canada, 2018: 8792–8802.

[17] KANG Jian, FERNANDEZ-BELTRAN R, DUAN Puhong, et al. Robust normalized softmax loss for deep metric learning-based characterization of remote sensing images with label noise[J]. IEEE Transactions on Geoscience and Remote Sensing, 2021, 59(10): 8798–8811. doi: 10.1109/TGRS.2020.3042607.

[18] SRIVASTAVA N, HINTON G, KRIZHEVSKY A, et al. Dropout: A simple way to prevent neural networks from overfitting[J]. The Journal of Machine Learning Research, 2014, 15(1): 1929–1958.

[19] GOODFELLOW I J, SHLENS J, and SZEGEDY C. Explaining and harnessing adversarial examples[C]. The 3rd International Conference on Learning Representations, San Diego, USA, 2015.

[20] ZHANG Hongyi, CISSÉ M, DAUPHIN Y N, et al. Mixup: Beyond empirical risk minimization[C]. The 6th International Conference on Learning Representations, Vancouver, Canada, 2018.

[21] PEREYRA G, TUCKER G, CHOROWSKI J, et al. Regularizing neural networks by penalizing confident output distributions[C]. The 5th International Conference on Learning Representations, Toulon, France, 2017.

[22] SZEGEDY C, VANHOUCKE V, IOFFE S, et al. Rethinking the inception architecture for computer vision[C]. The 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, USA, 2016: 2818–2826. doi: 10.1109/CVPR.2016.308.

[23] HUA Yuansheng, LOBRY S, MOU Lichao, et al. Learning multi-label aerial image classification under label noise: A regularization approach using word embeddings[C]. The 2020 IEEE International Geoscience and Remote Sensing Symposium, Waikoloa, USA, 2020: 525–528. doi: 10.1109/IGARSS39084.2020.9324069.

[24] SHENG Mengmeng, SUN Zeren, CAI Zhenhuang, et al. Adaptive integration of partial label learning and negative learning for enhanced noisy label learning[C]. The 38th AAAI Conference on Artificial Intelligence, Vancouver, Canada, 2024. doi: 10.1609/aaai.v38i5.28284.

[25] ALBERT P, ORTEGO D, ARAZO E, et al. Addressing out-of-distribution label noise in webly-labelled data[C]. The 2022 IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, USA, 2022: 392–401. doi: 10.1109/WACV51458.2022.00245.

[26] ZHANG Dongyu, HU Ruofan, and RUNDENSTEINER E A. CoLafier: Collaborative noisy label purifier with local intrinsic dimensionality guidance[C]. The 2024 SIAM International Conference on Data Mining, Houston, USA, 2024: 82–90.

[27] NGUYEN D T, MUMMADI C K, NGO T P N, et al. SELF: Learning to filter noisy labels with self-ensembling[C]. The 8th International Conference on Learning Representations, Addis Ababa, Ethiopia, 2020.

[28] WEI Hongxin, FENG Lei, CHEN Xiangyu, et al. Combating noisy labels by agreement: A joint training method with co-regularization[C]. The 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, USA, 2020: 13726–13735. doi: 10.1109/CVPR42600.2020.01374.

[29] HAN Bo, YAO Quanming, YU Xingrui, et al. Co-teaching: Robust training of deep neural networks with extremely noisy labels[C]. The 32nd International Conference on Neural Information Processing Systems, Montréal, Canada, 2018: 8536–8546.

[30] PARK D, CHOI S, KIM D, et al. Robust data pruning under label noise via maximizing re-labeling accuracy[C]. The 37th International Conference on Neural Information Processing Systems, New Orleans, USA, 2024: 3257.

[31] ZHAO Juanping, GUO Weiwei, LIU Bin, et al. Convolutional neural network-based SAR image classification with noisy labels[J]. Journal of Radars, 2017, 6(5): 514–523. doi: 10.12000/JR16140.

[32] SHANG Ronghua, LIN Junkai, JIAO Licheng, et al. SAR image segmentation using region smoothing and label correction[J]. Remote Sensing, 2020, 12(5): 803. doi: 10.3390/rs12050803.

[33] HUANG Zhongling, DUMITRU C O, PAN Zongxu, et al. Classification of large-scale high-resolution SAR images with deep transfer learning[J]. IEEE Geoscience and Remote Sensing Letters, 2021, 18(1): 107–111. doi: 10.1109/LGRS.2020.2965558.

[34] HUA Wenqiang, WANG Shuang, and HOU Biao. Semi-supervised learning for classification of polarimetric SAR images based on SVM-Wishart[J]. Journal of Radars, 2015, 4(1): 93–98. doi: 10.12000/JR14138.

[35] SUN Yuanshuang, WANG Yinghua, LIU Hongwei, et al. Gradual domain adaptation with pseudo-label denoising for SAR target recognition when using only synthetic data for training[J]. Remote Sensing, 2023, 15(3): 708. doi: 10.3390/rs15030708.

| [36] |

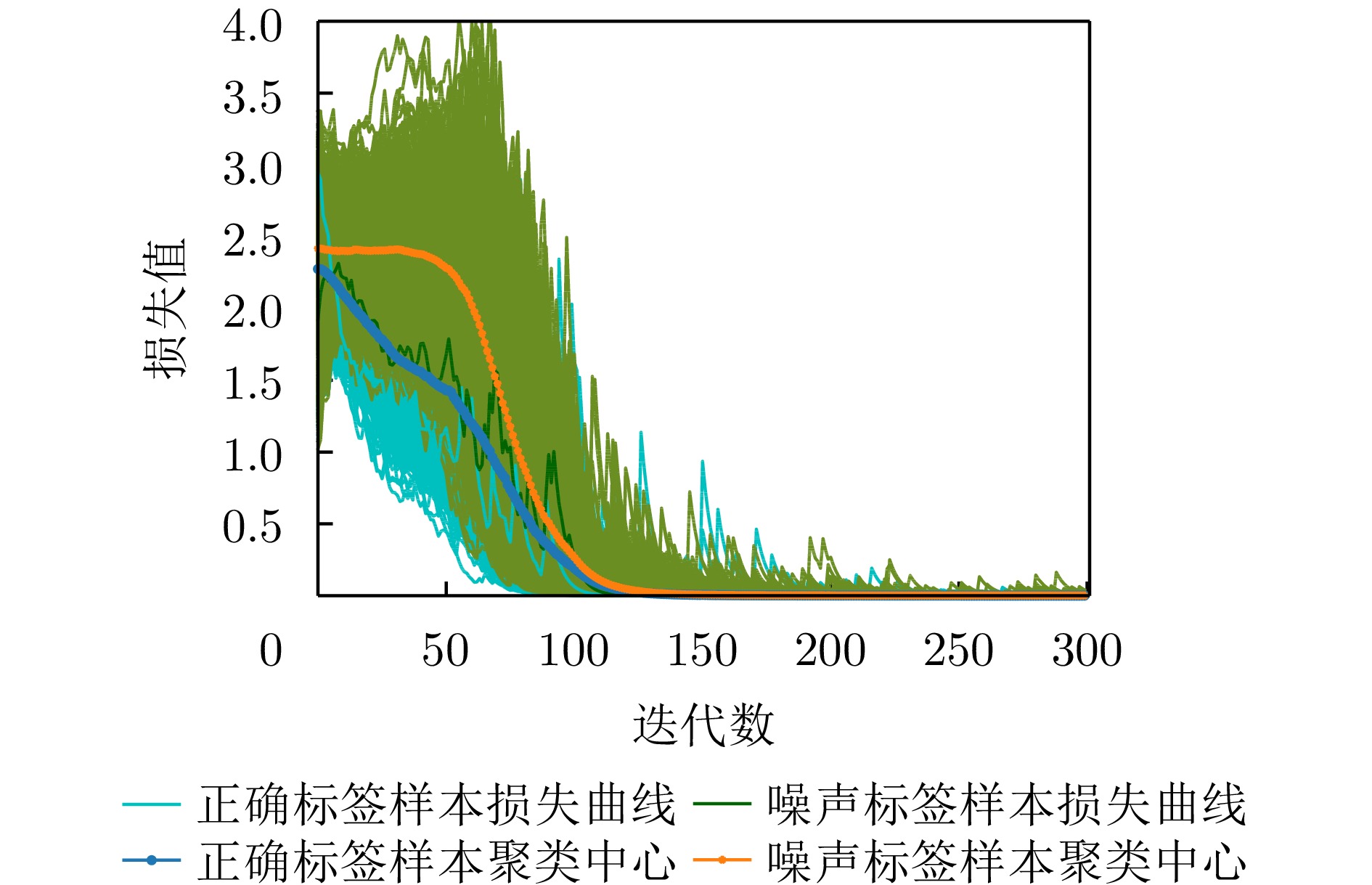

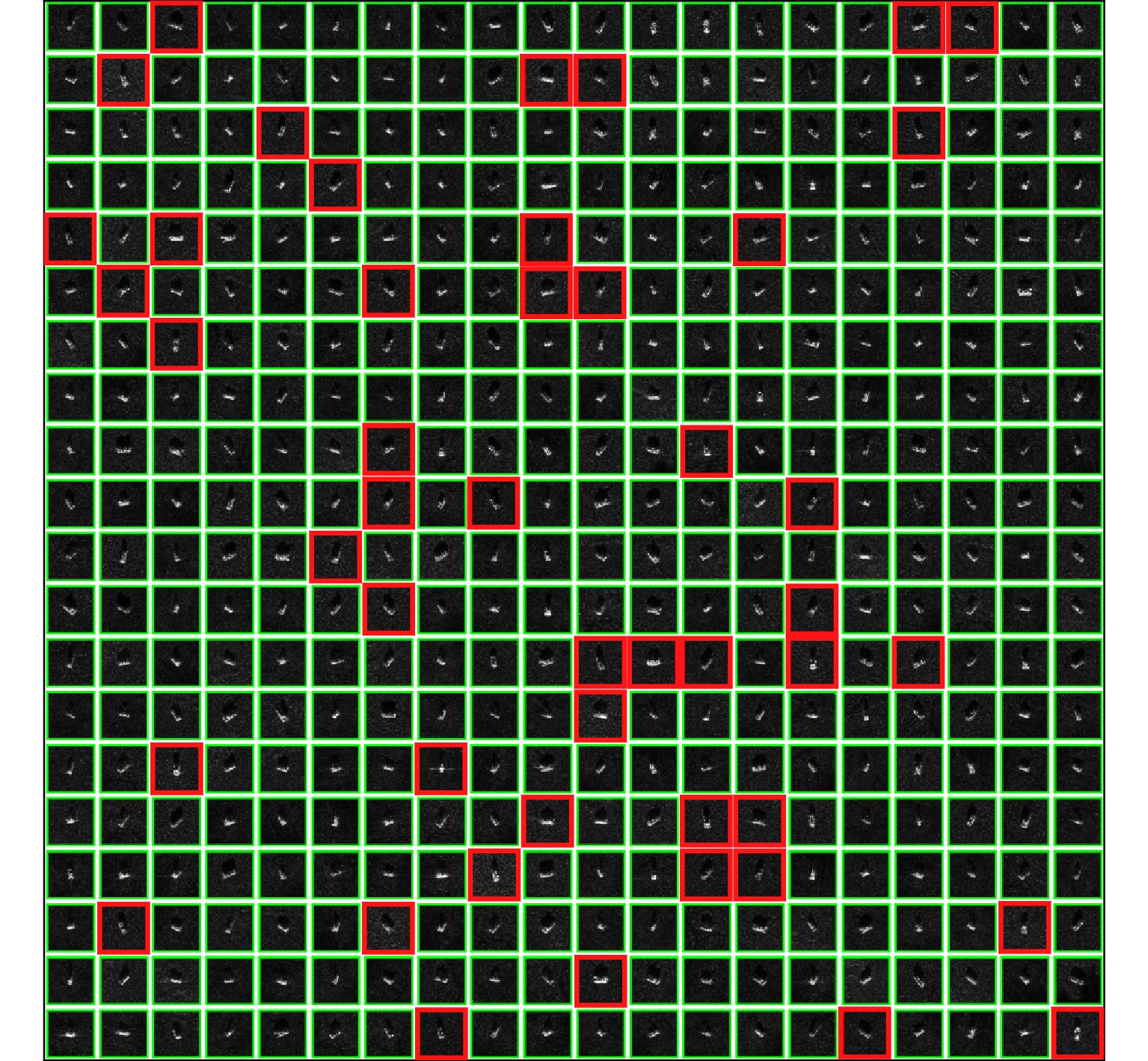

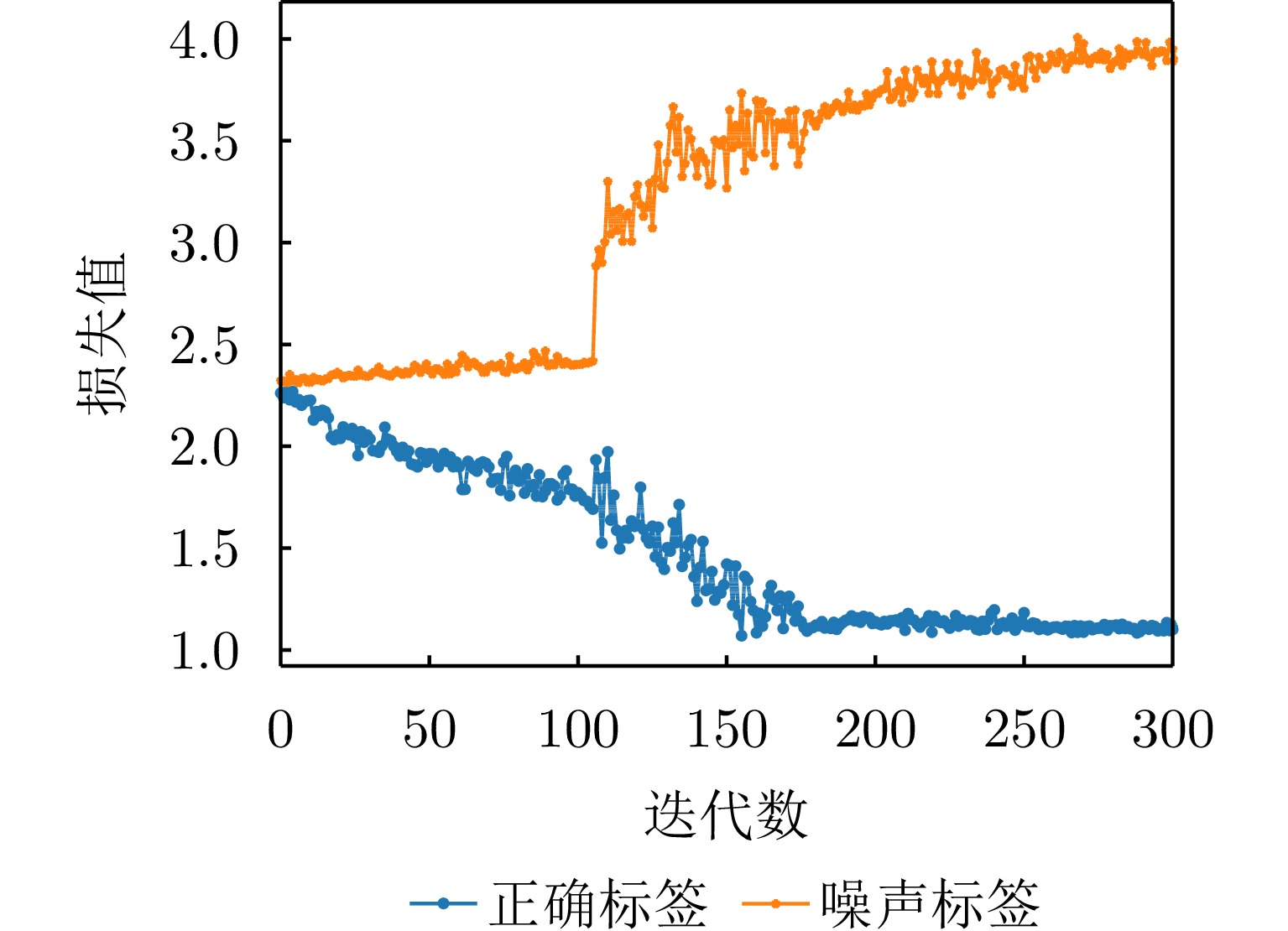

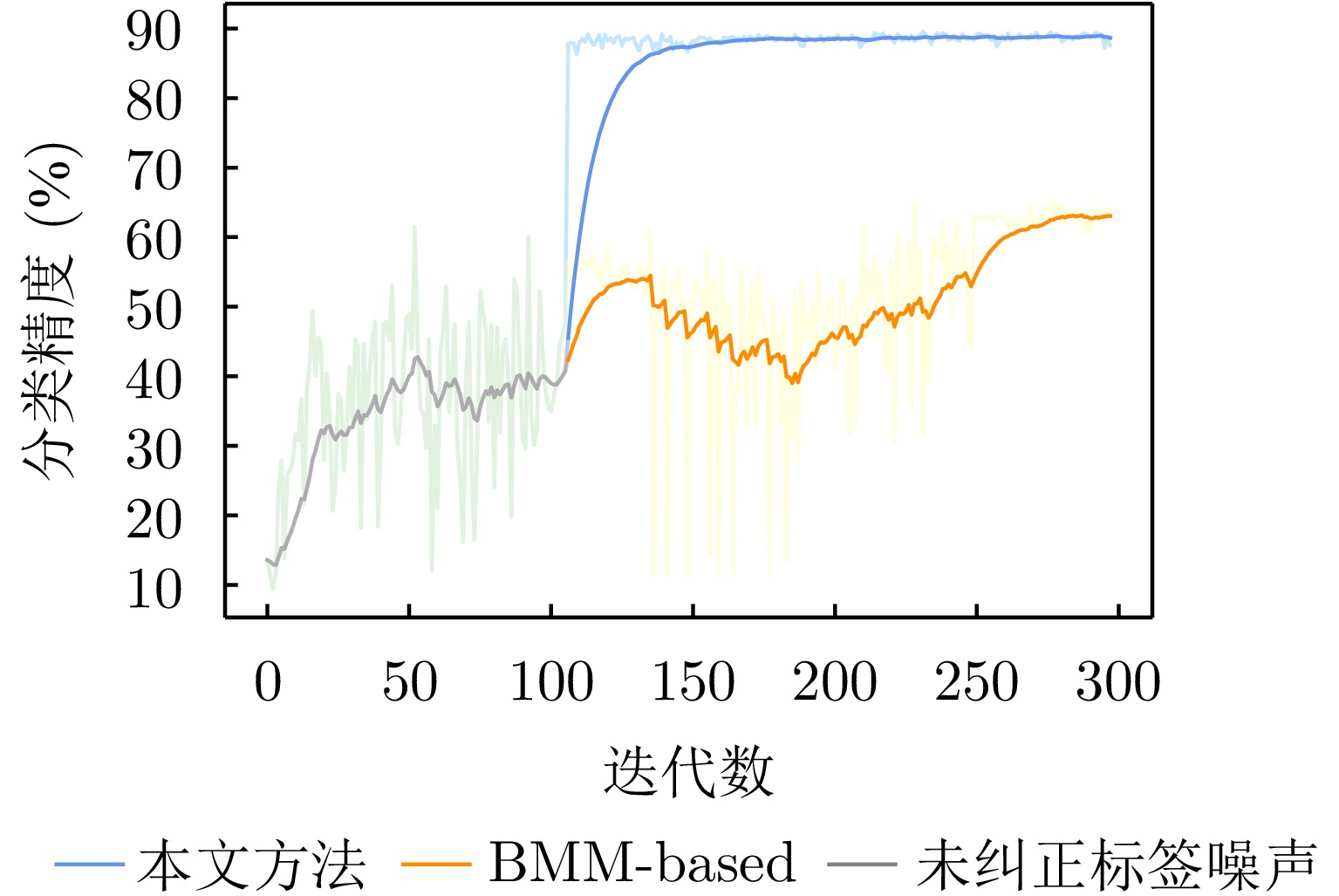

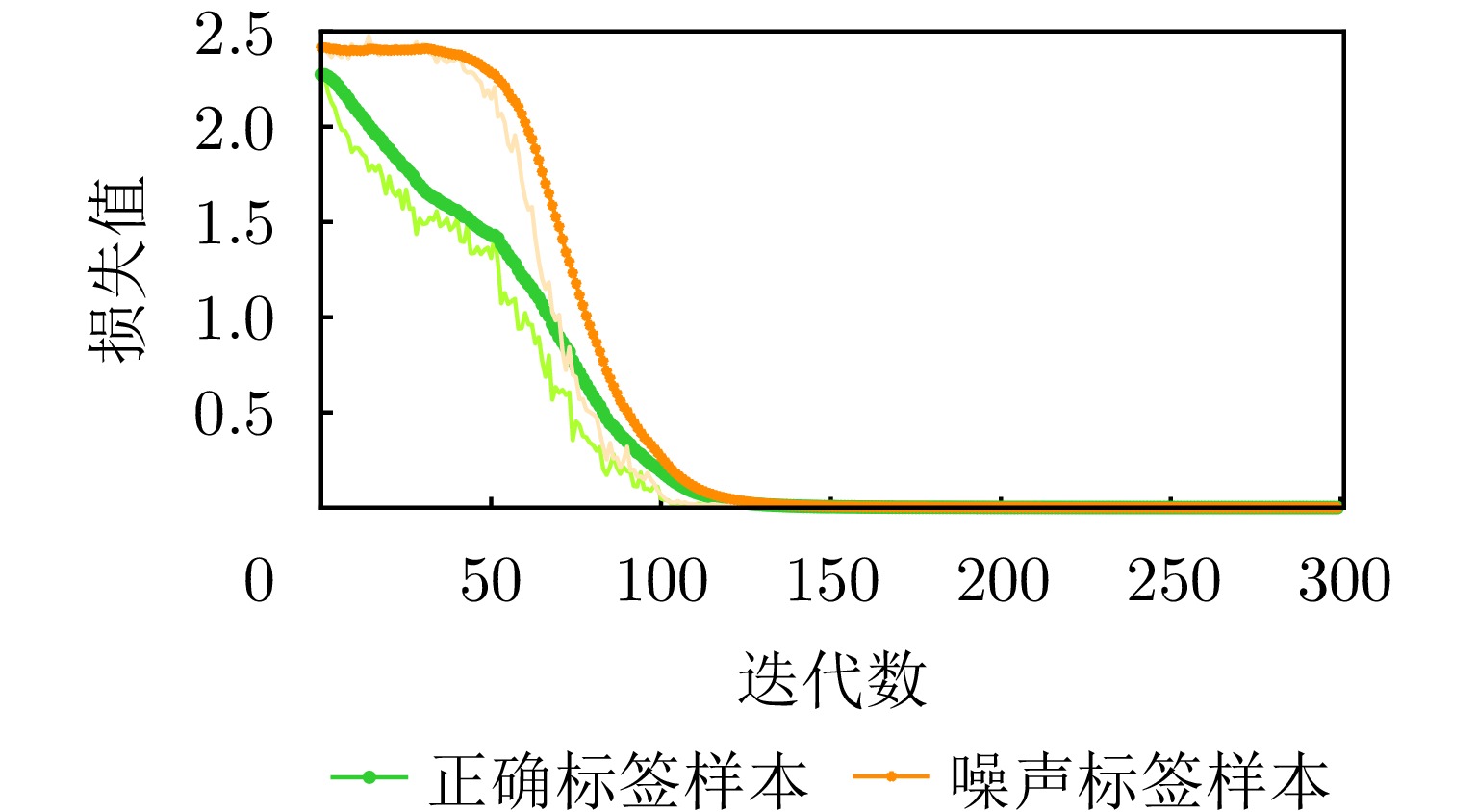

WANG Chen, SHI Jun, ZHOU Yuanyuan, et al. Label noise modeling and correction via loss curve fitting for SAR ATR[J]. IEEE Transactions on Geoscience and Remote Sensing, 2022, 60: 5216210. doi: 10.1109/TGRS.2021.3121397. |

[37] KEYDEL E R, LEE S W, and MOORE J T. MSTAR extended operating conditions: A tutorial[C]. The SPIE 2757, Algorithms for Synthetic Aperture Radar Imagery III, Orlando, USA, 1996: 228–242. doi: 10.1117/12.242059.

[38] SUTSKEVER I, MARTENS J, DAHL G, et al. On the importance of initialization and momentum in deep learning[C]. The 30th International Conference on Machine Learning, Atlanta, USA, 2013: 1139–1147.

[39] PASZKE A, GROSS S, MASSA F, et al. PyTorch: An imperative style, high-performance deep learning library[C]. The 33rd Conference on Neural Information Processing Systems, Vancouver, Canada, 2019: 8026–8037.
